Alteryx Designer Desktop Discussions


Data parsing a stream of data


I have a stream of data that I want to parse into rows, dropping part of the stream as I parse.

I have a Text Input (pointing to the server the file is pulled from), and then I use the Download tool (SFTP) to get the data. I have tried RegEx as well as Text to Columns.

The fields in the stream are double-quoted and comma-separated.

Should I be using something other than the Parse tools?


Hi Karen,

Are you trying to eliminate:

A) certain rows based on the content?

B) certain row content?

If you can post some sample data, that would help a lot.

Cheers,

Bob


I have attached a sample file. In it you will see that the original file is stored on our server as a .csv, but when I pull the file from that location it comes in as a stream of data. I want to eliminate the first 2 rows of the attached file, as well as the data on each of the following rows up to Order Date.


Hi Karen,

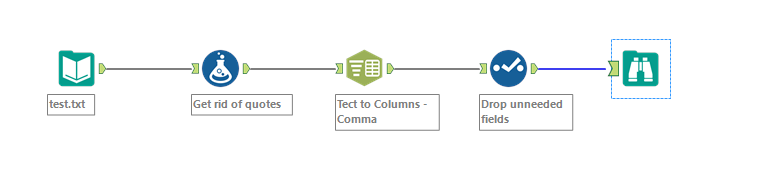

I think this does what you wanted:

The key things to note:

In the settings of the Input data I said that:

1) the first row does not contain field names

2) delimiter is a comma

3) start Data import on line three (to drop the first two lines)

Then I replace the quotes with nothing to remove them

use Text to Columns to split to columns based on comma delimiter

drop the unneeded fields (you could also rename here)
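For anyone who wants to check the logic outside Designer, the same steps can be sketched in Python. This is only an illustration of the approach, not the attached workflow: the function name `parse_stream`, its parameters, and the sample rows are all made up, and the real file layout may differ.

```python
def parse_stream(raw_text, skip_lines=2, keep_from=0):
    """Mimic the workflow steps on a raw text stream (hypothetical helper).

    skip_lines: number of leading lines to drop (the two header lines).
    keep_from:  index of the first field to keep; earlier fields are dropped.
    """
    rows = []
    for line in raw_text.splitlines()[skip_lines:]:
        if not line.strip():
            continue  # ignore blank lines in the stream
        cleaned = line.replace('"', '')   # replace the quotes with nothing
        fields = cleaned.split(',')       # split to columns on the comma
        rows.append(fields[keep_from:])   # drop the unneeded leading fields
    return rows


# Made-up sample: two junk lines, then quoted, comma-separated records.
sample = (
    'report header line 1\n'
    'report header line 2\n'
    '"x1","x2","2016-01-05","100"\n'
    '"y1","y2","2016-01-06","250"\n'
)

rows = parse_stream(sample, skip_lines=2, keep_from=2)
print(rows)  # [['2016-01-05', '100'], ['2016-01-06', '250']]
```

One caveat with stripping the quotes first: if a quoted field itself contains a comma, the split will break that field in two. In that case a quote-aware parser (for example Python's `csv` module) would be the safer choice.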

Hope that helps!

Cheers,

Bob


Can you create this workflow in version 10.6, or how can I import the version you created into 10.6?
