Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Data in source starting from random row number

Hi,

The position of the data in the data source is not fixed; it starts at a varying row number.

For example, the data might start at row 5, at row 10, or sometimes at row 4.

Is there a way to make Alteryx always start reading the data from the row where it actually begins?

The good news is that the column names are always the same.

Hi @sanketkatoch05 ,

One approach is to load all the data starting from row 1 and then remove the header and empty rows. You need a criterion to identify where the data starts (e.g. the first row with a numeric value in a specific column). If you can provide a sample, I can give more specific help.
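To make the "first row with a numeric value in a specific column" criterion concrete, here is a minimal Python sketch of the same logic outside Alteryx. It is only an illustration under assumptions: the sheet is taken to be already read as a list of rows (each a list of cell strings, with `None` for empty cells), and the helper names `is_numeric` and `first_numeric_row` as well as the sample sheet are hypothetical.

```python
from typing import Optional, Sequence

Row = Sequence[Optional[str]]


def is_numeric(value: Optional[str]) -> bool:
    """Return True if the cell holds text that parses as a number."""
    if value is None:
        return False
    try:
        float(value)
        return True
    except ValueError:
        return False


def first_numeric_row(rows: Sequence[Row], col: int) -> int:
    """Return the 0-based index of the first row whose cell in `col` is numeric."""
    for i, row in enumerate(rows):
        if col < len(row) and is_numeric(row[col]):
            return i
    raise ValueError(f"no numeric value found in column {col}")


# Hypothetical sheet: two summary rows, a blank row, the header row, then data.
sheet = [
    ["Total records", "3", None],
    ["Run date", "2021-01-01", None],
    [None, None, None],
    ["ID", "Name", "Amount"],
    ["1", "Alpha", "10.5"],
    ["2", "Beta", "20"],
]

start = first_numeric_row(sheet, col=2)  # index of the first data row
data = sheet[start:]                     # drop everything above it
print(start, len(data))
```

The choice of column matters: in this sample, column 1 already holds a number ("3") in the first summary row, so the criterion should use a column that is empty or non-numeric everywhere above the real data.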

Best,

Roland

Hi, because the data is confidential, I cannot share it. Instead, I have created a dummy version of it (attached).

As you can see, the main data starts at row 8, and the rows above it are just summary insights about the data (these vary).

Depending on the data, the number of these insight rows increases or decreases, which is why the starting row of the main data is not fixed.

Hi @sanketkatoch05 ,

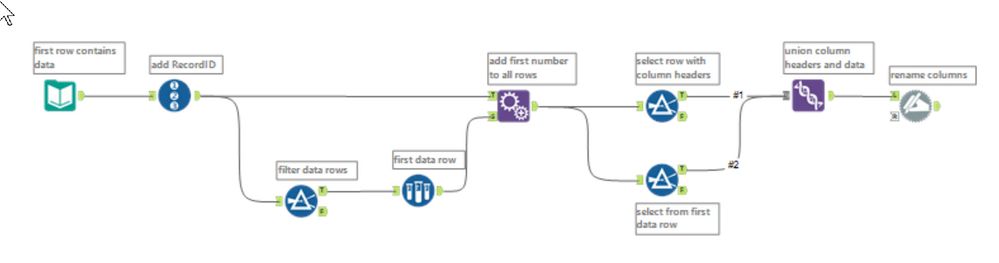

You can read all the data, add record IDs, find the first row with data in column 1 (or, for example, the first non-null row in column 3 or 4), and select all rows from that one onward.

You can use the last row before the first data row as the column header (as I do in the sample workflow).
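The record-ID approach can be sketched in plain Python as follows. This is not the attached workflow, just a hedged illustration of the same steps: it assumes the sheet is a list of rows (cell strings, `None` for empty cells), and the function `extract_table`, the predicate `starts_with_id`, and the sample sheet are hypothetical. The "is this a data row?" test is passed in, since the right criterion depends on your file.

```python
from typing import Callable, Dict, List, Optional, Sequence

Row = Sequence[Optional[str]]


def extract_table(
    rows: Sequence[Row], is_data: Callable[[Row], bool]
) -> List[Dict[str, Optional[str]]]:
    """Return the data block as dicts, using the row just above the
    first data row as the column header."""
    # Attach 1-based record IDs, mirroring a record ID step.
    numbered = list(enumerate(rows, start=1))
    # Record ID of the first row the criterion accepts.
    first_id = next((rid for rid, row in numbered if is_data(row)), None)
    if first_id is None:
        raise ValueError("no data row found")
    if first_id == 1:
        raise ValueError("first data row has no header row above it")
    # Record ID first_id - 1 (the header) sits at list index first_id - 2.
    header = [h if h is not None else "" for h in rows[first_id - 2]]
    # Keep every row from the first data row onward.
    body = [row for rid, row in numbered if rid >= first_id]
    return [dict(zip(header, row)) for row in body]


def starts_with_id(row: Row) -> bool:
    """Hypothetical criterion: column 1 holds an integer ID."""
    return len(row) > 0 and row[0] is not None and row[0].isdigit()


sheet = [
    ["Total records", "2", None],
    ["Run date", "2021-01-01", None],
    ["ID", "Name", "Amount"],
    ["1", "Alpha", "10.5"],
    ["2", "Beta", "20"],
]

records = extract_table(sheet, starts_with_id)
```

Keeping the criterion as a separate function makes it easy to switch to, say, "first non-null value in column 3" without touching the header logic.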

Let me know if it works for you.

Best,

Roland

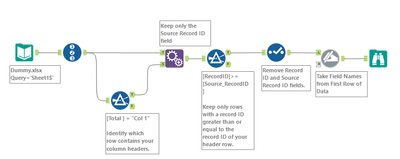

I came up with something similar to Roland's approach, but it uses a few fewer tools. Instead of finding the first row of data, just find your header row, since you said the headers will always be the same. Append that header row (with its record ID) back to your main stream of data and filter out any rows with a record ID less than the header row's. Finally, use the rename tool to make the first row of your data the header row. Good luck!
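The header-matching idea translates to plain Python roughly as below. This is a minimal sketch, not the shared workflow: it assumes the sheet is a list of rows (cell strings, `None` for empty cells), that the known column names are passed in as `expected_header`, and that a case-insensitive, whitespace-trimmed comparison is good enough to recognise the header row. The function name `read_below_header` and the sample sheet are hypothetical.

```python
from typing import Dict, List, Optional, Sequence

Row = Sequence[Optional[str]]


def read_below_header(
    rows: Sequence[Row], expected_header: Sequence[str]
) -> List[Dict[str, Optional[str]]]:
    """Find the row matching the known column names, drop every row
    above it, and promote it to the header."""
    expected = [h.strip().lower() for h in expected_header]
    for rid, row in enumerate(rows, start=1):
        cells = [(c or "").strip().lower() for c in row[: len(expected)]]
        if cells == expected:
            header_id = rid  # record ID of the header row
            break
    else:
        raise ValueError("header row not found")
    # Filter out rows with a record ID below the header row's ID.
    kept = [row for rid, row in enumerate(rows, start=1) if rid >= header_id]
    # The first kept row becomes the column names.
    header = [c or "" for c in kept[0]]
    return [dict(zip(header, row)) for row in kept[1:]]


sheet = [
    ["Total records", "2", None],
    ["Run date", "2021-01-01", None],
    [None, None, None],
    ["ID", "Name", "Amount"],
    ["1", "Alpha", "10.5"],
    ["2", "Beta", "20"],
]

result = read_below_header(sheet, ["ID", "Name", "Amount"])
```

Because the search keys on the column names rather than a row number, adding or removing summary rows above the header does not change the result.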

Hi @AlteryxTrev, can you share the workflow so that I can test it with the actual data?

@sanketkatoch05 Absolutely, here is the workflow I used. You will just need to point the Input tool to your file. Let me know if it works!

Thank you so much, @RolandSchubert and @AlteryxTrev, for the prompt solutions.
