Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.- Community

- :

- Community

- :

- Participate

- :

- Discussions

- :

- Designer Desktop

- :

- Re: Data Stream In - Temp table SQL Server

Data Stream In - Temp table SQL Server

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

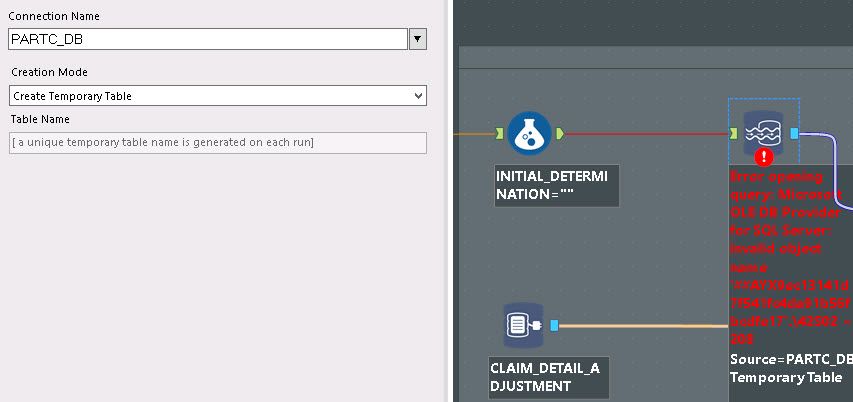

I'm trying to upload records into a temp table on a SQL Server. The connection for the temp table has been used previously in the workflow but I don't seem be able to "re-use" the same connection. If I create a new connection for the Data Stream In, it works fine. But when using the same connection that was used previously in the same workflow, I get this error:

Solved! Go to Solution.

- Labels:

-

In Database

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hey Kieran,

Temp tables can be interesting in SQL - they are context / connection / transaction specific - so if I'm on a specific connection and pump data out to a temp table, as soon as SQL hits a commit it scrubs that temp table. So what we always tried to do, is if you need to share a temp table across different processes (where one may commit before the other) is create a regular table, but call it "tempWorkingXX" so that you can recognise it and so that SQL doesn't throw it away. Temp tables also live in a different place (from recollection, they live in TempDB, not in your own database) and as a result, the SQL server itself is responsible for garbage collecting these - so if you disconnect from the server, it will also throw away your temp table.

This may not be the problem you're having, but I have spent many nights unpicking synchronisation issues across triggers or stored procedures on a SQL server that use temp tables.

If you try to create a normal table (named with some flag to show that it's a working table), you should get round this - but if not, feel free to post a stripped down version of your flow, with the input and outcome you're trying to achieve, and we can see if we can get you to where you need to be.

Cheers

sean

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Thanks Sean. I think the issue I have is a little different. When I say "re-use" I dont mean reuse the temp table I created earlier, I just mean the same connection. So when I add a "Data stream In" too, I want to be able to select the same connection I was using earlier. Alteryx doesnt seem to like that though per the error message

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hey Kieran,

Are you able to share more of the workflow so that we can see if we can get you to a solution.

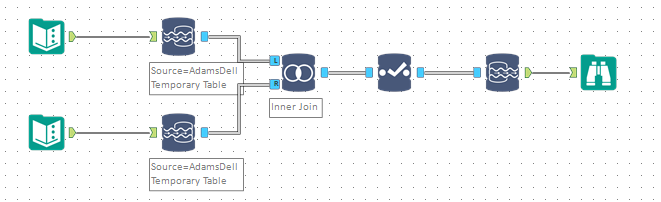

I stripped down the idea of sending in two sets of data into the DB, using the same connection ID - and then joining them, and streaming them out.

This generated a good result - you can see that the data source on these "Data Stream In" tools is the same, but it's not giving me the same error as you're getting.'

Try run this very simple flow on your environment and see if it runs - if it does, then your issue may be somewhere else in the flow?

Hopefully this helps you to debug the issue

All the best

Sean

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

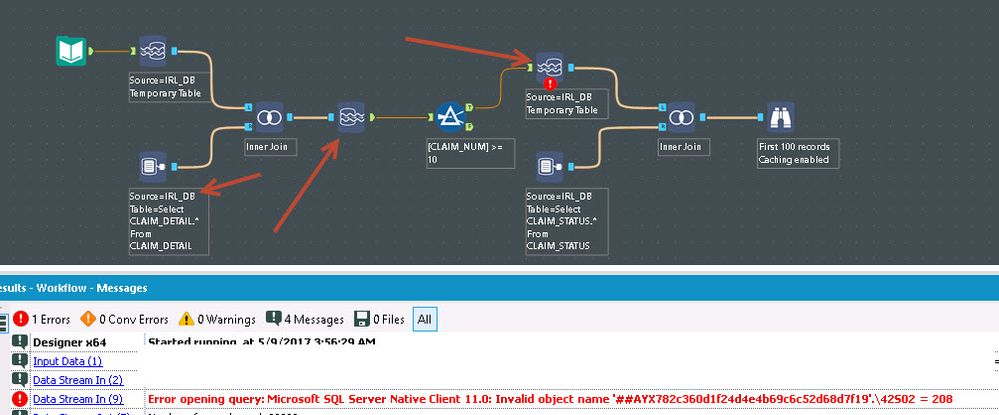

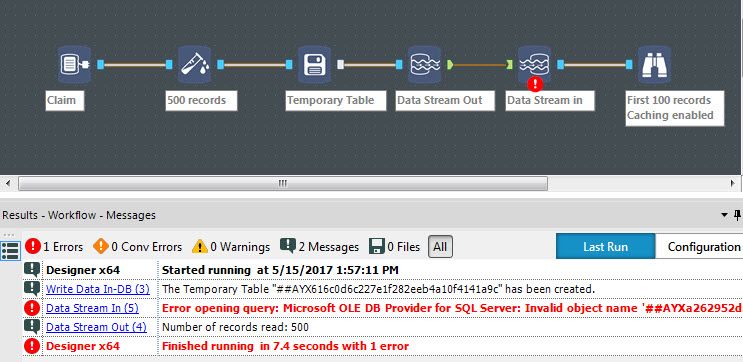

I'm able to recreate the issue with this simple workflow:

So I'm uploading to a temp table, joining a table and drawing the data back down. Then performing a simple filter and uploading back into a temp table on the same server, same connection as before. This is when I receive the error. There's no logical reason for the workflow, obviously doesn't make sense to pull down the data just for a filter, I'm just demonstrating the error.

"Error: Data Stream In (9): Error opening query: Microsoft SQL Server Native Client 11.0: Invalid object name '##AYX782c360d1f24d4e4b69c6c52d68d7f19'.\42S02 = 208"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

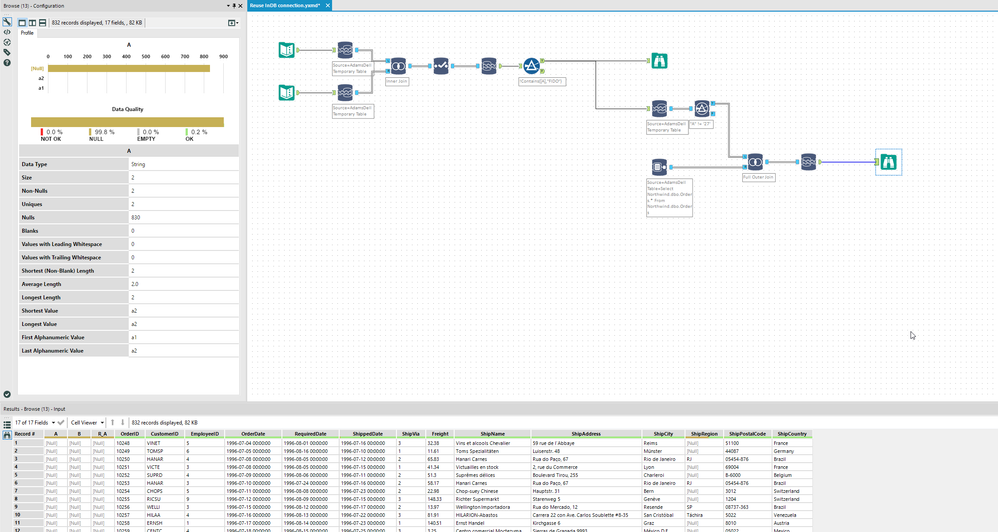

:-) Kieran - I'm bit baffled by this, but I'm getting a good result with a very similar flow to the one that you just posted.

I did get a very strange error using an odd table name when I first put the second Join into this flow, but it turned out to be a type conversion error (joining an integer field to a text field.

I've attached the workflow above too - so that you can inspect the XML that's under the tools to see if there's anything obvious there. I am connecting using the OLEDB driver, that may be helping? I'll try again with a native SQL server connect to see if that breaks it :-)

Some

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Right - connected with ODBC (couldn't find an obvious native SQL connect on the InDB connect - the native seems to be the new SQL driver) and I'm still getting a good result.

Given that you've mocked up your flow, I presume that there is no client-sensitive, or company-specific IP - is it worth posting your exact flow and I can take a look and run it against my server?

It may be that we need to start looking at versions of drivers to see what's causing this - but let's eliminate other possible issues first.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Nope, tried changing to OLE DB provider for SQL server - same error. I'm running 10.6 if that makes a difference. I think your screen shots look like version 11. It still has to go through approval in our IT systems

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hey @Kieran,

A few things we can try next:

a) can you post a simplified version of your workflow (attach the actual alteryx canvas) - if you can extract any client-sensitive or company IP then we can work together on the core problem

b) Additionally - you can look at your ODBC drivers. Not sure if you have access to this (or to get IT to update if outdated), but I've taken a screenshot of the relevant SQL drivers on my machine so you can see the version number.

c) I'll also notify the moderators on this thread that we may need to rope in someone from the SQL team at Alteryx, and we can see if they have seen something similar before.

if you can post your flow, and a simple table definition, I'll gladly work through this on my own home SQL server - just need the table-def of the table you're joining to (or a simplified version that demonstrates the issue), to see if the join might be the problem. What I'm hoping to do is eliminate all factors until we get down to the most simple version of a reproducible error.

Cheers @Kieran

Sean

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hi @seanAdams,

Apologies for being slow getting back to you. This isn't specific to my flow, or the database server / tables. I've recreated the issue in its simplest form multiple times, using multiple different database servers. Also confirmed with a colleague that they are receiving the same error.

-

Academy

6 -

ADAPT

2 -

Adobe

204 -

Advent of Code

3 -

Alias Manager

78 -

Alteryx Copilot

26 -

Alteryx Designer

7 -

Alteryx Editions

95 -

Alteryx Practice

20 -

Amazon S3

149 -

AMP Engine

252 -

Announcement

1 -

API

1,209 -

App Builder

116 -

Apps

1,360 -

Assets | Wealth Management

1 -

Basic Creator

15 -

Batch Macro

1,559 -

Behavior Analysis

246 -

Best Practices

2,695 -

Bug

719 -

Bugs & Issues

1 -

Calgary

67 -

CASS

53 -

Chained App

268 -

Common Use Cases

3,825 -

Community

26 -

Computer Vision

86 -

Connectors

1,426 -

Conversation Starter

3 -

COVID-19

1 -

Custom Formula Function

1 -

Custom Tools

1,939 -

Data

1 -

Data Challenge

10 -

Data Investigation

3,488 -

Data Science

3 -

Database Connection

2,221 -

Datasets

5,223 -

Date Time

3,229 -

Demographic Analysis

186 -

Designer Cloud

742 -

Developer

4,373 -

Developer Tools

3,531 -

Documentation

528 -

Download

1,037 -

Dynamic Processing

2,940 -

Email

928 -

Engine

145 -

Enterprise (Edition)

1 -

Error Message

2,260 -

Events

198 -

Expression

1,868 -

Financial Services

1 -

Full Creator

2 -

Fun

2 -

Fuzzy Match

713 -

Gallery

666 -

GenAI Tools

3 -

General

2 -

Google Analytics

155 -

Help

4,711 -

In Database

966 -

Input

4,295 -

Installation

361 -

Interface Tools

1,901 -

Iterative Macro

1,095 -

Join

1,959 -

Licensing

252 -

Location Optimizer

60 -

Machine Learning

260 -

Macros

2,864 -

Marketo

12 -

Marketplace

23 -

MongoDB

82 -

Off-Topic

5 -

Optimization

751 -

Output

5,258 -

Parse

2,328 -

Power BI

228 -

Predictive Analysis

937 -

Preparation

5,171 -

Prescriptive Analytics

206 -

Professional (Edition)

4 -

Publish

257 -

Python

855 -

Qlik

39 -

Question

1 -

Questions

2 -

R Tool

476 -

Regex

2,339 -

Reporting

2,434 -

Resource

1 -

Run Command

575 -

Salesforce

277 -

Scheduler

411 -

Search Feedback

3 -

Server

631 -

Settings

936 -

Setup & Configuration

3 -

Sharepoint

628 -

Spatial Analysis

599 -

Starter (Edition)

1 -

Tableau

512 -

Tax & Audit

1 -

Text Mining

468 -

Thursday Thought

4 -

Time Series

432 -

Tips and Tricks

4,187 -

Topic of Interest

1,126 -

Transformation

3,731 -

Twitter

23 -

Udacity

84 -

Updates

1 -

Viewer

3 -

Workflow

9,982

- « Previous

- Next »