Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.- Community

- :

- Community

- :

- Participate

- :

- Discussions

- :

- Designer Desktop

- :

- Re: Best practice for incremental processing of ve...

Best practice for incremental processing of very large datasets

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

What are some best practices for incrementally processing a very large dataset? By that, I mean a workflow that queries a subset of a very large dataset, manipulates it, and either delete/appends on the original source or saves to a new one.

I have been experimenting with batch macros that have control parameters and no input/output. They run fine on a single batch but seem to run indefinitely without doing anything when feeding in a large number of batches. Any ideas why this wouldn't work? My only theory is that it is trying to do them in parallel and that is causing problems. Is there a better approach?

Solved! Go to Solution.

- Labels:

-

Best Practices

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@DEllis

It is difficult to conclude anything without seeing the flow :)

but maybe it is the issue of your control parameter?

You mentioned a subset of a very large data set, do you mean, subset A, B, C consitute your large dateset?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

I have a few different use cases. In the one I referred to above, I am pulling down a month of data at a time. The control parameters update year and month filters. In other cases it might be a specific product or other dimension. I have run an entire year one month at a time without issue but it won’t work if I try to run all 12 at once- one month runs in about 10 mins but I was waiting over a day with no results on 12 months.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

watch your resource monitor for ram peaking - this is probably a situation where you are maxing out system memory resources. make sure you close everything except Alteryx.

As far as large data sets - figure out a natural partition and batch it or create an artificial partition using multi-field binning? then process the memory consumptive part in a batch macro.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

My PC never goes above 25% memory or cpu utilization. There are no outputs from the macro so the usage shouldn’t be any higher than it takes to run a single month unless they are being run in parallel and/or there is no cleanup after a batch finishes.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Please share the flow and the macro without the data. this should not expose any proprietary data and you can make a copy and XXXX out any embedded company or credential data within a SQL or tool config.

With this we can see how you have settings configured in the macro and maybe generate some test data of our own that we can exercise the flow/macro with?

As @Qiu said "It is difficult to conclude anything without seeing the flow". what people say in explanation often leaves out some nuance that is critical and only found in review of code.

Your theory of doing them in parallel is unlikely as flows single thread. Only if AMP engine is used will it possibly run more than one part of a flow concurrently. Are you using AMP?

Still the macro would not, I think, have parallel instances firing.

you do not say exactly how the parameters are delivered, how many rows are going into the Macro and what the macro does specifically. I am guessing you have 1 row per unique run a query in the flow to identify something including what parameter values to use, then pass that into the macro within which it does another query dynamically altered for the parameter values passed into the macro.

If you do not insure the parameter values are unique, for year and month pairings, then in the above scenario you will execute the query inside the macro once for each row feeding into the macro. so if you have 100 rows for 2022-01 then it will execute the same query 100 times replicating the workload 100times and the data itself is replicated. did you validate the output for the 1 month run and how many rows are feeding into the macro?

without seeing your code we can only speculate based on what we have seen done by others and or ourselves which may be very different than your use case.

Another thing you might try right now is run it for 2 months and see if it goes as you think it ought to. If it does do 4 months, then 8 months until it takes what you consider an unacceptable tie frame, then back up to the previously acceptable run and add half as many months as before until you find the "breakpoint" and see if there is some issue around a specific month.

There are so many questions that having the code would immediately answer. do you create a range of months as parameters? running a single query for month > 01 and < 13 would, except for the limiting by year, cause a table scan and pull back the entire table, or view which can be devastating to performance but if asking in the query for one specific month at a time and iteratively executing the query via a batch macro can provide efficient results.

Turn on these settings for the flow

then run it for 3 sequential months individually, then run it however you coded it for the range of all 3, then share not only the log file/output log/Results view, but also share the yxmd and yxmc

Armed with this one of us should be able to help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Also, if the flow/macro is reading/updating a DB on a server there should be monitoring on that logs activity and this could be useful to see what your query did on the data server side and potentially if something/someone else might have caused the issue like a file lock/contention.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

here are my hypothesis - can you confirm the output when you do run it (even though it takes forever) is correct?

1) there is a join that you think is uni-dimensional but is not uni-dimensional for every month. this is creating a large amount of data.

2) Is this an iterative macro?

3) Does this use live data (api/etc) - could there be a hang up in processing the live data?

4) Does this use datastream in/datastream out?

5) Can you double check the resource monitor?

6) when you say there are no outputs to the batch macro - does it write files? does it move files?

Basically workflow processing shouldn't expand exponentially unless there are memory issues.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@apathetichell reminded me to mention In-DB tools, if you are reading and transforming then writing back to same data server then a macro with In-DB tools composing the sql might prove considerably more efficient. This assumes you never have to break the input from output processing within IN-DB, if you stream out to take data outside the in-DB process, depending on how, you may defeat the purpose of In-DB of keeping the processing within the Data Server versus transiting it out from Data Server to Designer or Alteryx Server and then pushing it back across a network and back into Data Server in a separate transaction.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Thank you for the ideas @fharper . I will definitely look at the performance profiling, enabling the macro messages, and looking at the server logs. This is a new database for us, so the logs should be pretty easy to look though as there is minimal activity on the database outside my own work.

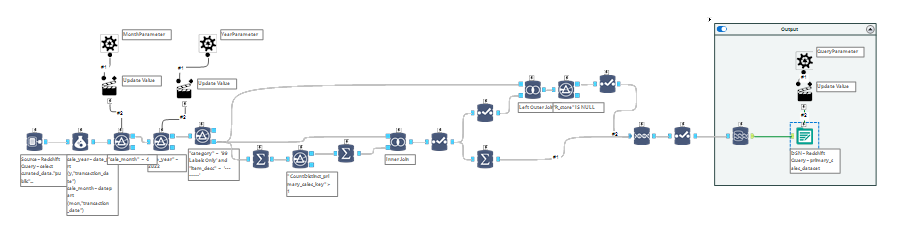

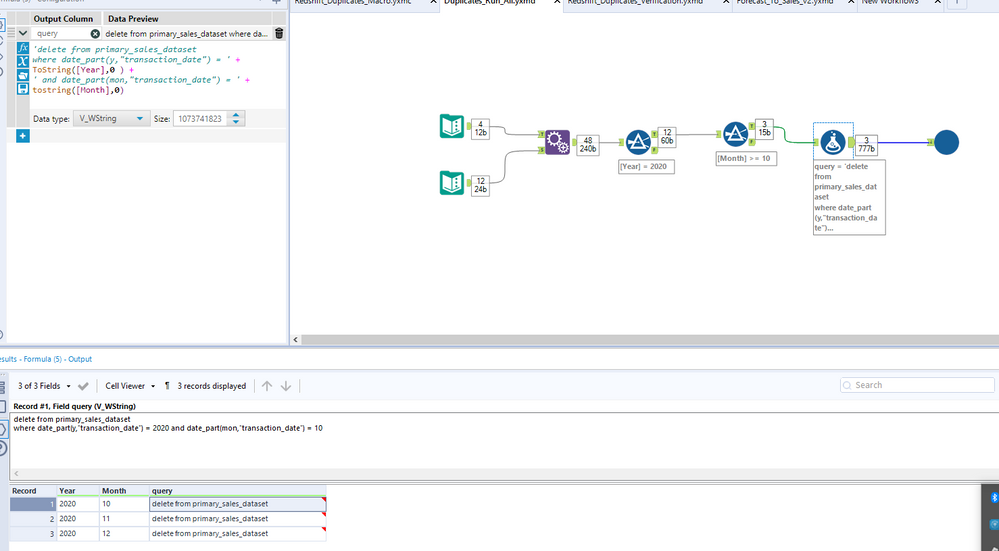

I've attached some screenshots which should answer questions on how the flow is setup. It did run successfully with 3 months as shown here. I am trying 2021 as a year to see if there was something specific to 2020 that caused the hang-up.

I am streaming out of DB and then back in, I chose to do that because I did not see an option to execute pre-SQL on the IN DB write. Is there another approach I could use to delete the records from the table that are being updated before writing?

As far as resource load on my PC, the data that is loaded for each batch is ~6M rows/3GB of memory. My PC has 64gb of memory, so I really don't think it is a performance bottleneck in my pc.

___________________

@apathetichell I think the screenshots may answer most of these but here are the answers written out.

here are my hypothesis - can you confirm the output when you do run it (even though it takes forever) is correct?

When I ran it for 12 months, it was still running over a day later and had not updated the table at all yet, so I stopped it - it was not frozen and resource usage on my pc was low, it was just not making progress. Running the same 12 months individually took about 10 minutes per month, 2 hours total, and updated each of the months.

1) there is a join that you think is uni-dimensional but is not uni-dimensional for every month. this is creating a large amount of data. I don't think so, if you see anything in my screenshots that might be, let me know

2) Is this an iterative macro? It's batch

3) Does this use live data (api/etc) - could there be a hang up in processing the live data? No

4) Does this use datastream in/datastream out? Datastream out, though if I can find a way to get around that, I will

5) Can you double check the resource monitor? If you are referring to the PC resource monitor, I have...I was not familiar with performance profiling in Alteryx so I'm going to look at that today

6) when you say there are no outputs to the batch macro - does it write files? does it move files? It writes back to DB

Basically workflow processing shouldn't expand exponentially unless there are memory issues.

-

Academy

6 -

ADAPT

2 -

Adobe

204 -

Advent of Code

3 -

Alias Manager

78 -

Alteryx Copilot

27 -

Alteryx Designer

7 -

Alteryx Editions

96 -

Alteryx Practice

20 -

Amazon S3

149 -

AMP Engine

252 -

Announcement

1 -

API

1,210 -

App Builder

116 -

Apps

1,360 -

Assets | Wealth Management

1 -

Basic Creator

15 -

Batch Macro

1,559 -

Behavior Analysis

246 -

Best Practices

2,696 -

Bug

720 -

Bugs & Issues

1 -

Calgary

67 -

CASS

53 -

Chained App

268 -

Common Use Cases

3,825 -

Community

26 -

Computer Vision

86 -

Connectors

1,426 -

Conversation Starter

3 -

COVID-19

1 -

Custom Formula Function

1 -

Custom Tools

1,939 -

Data

1 -

Data Challenge

10 -

Data Investigation

3,489 -

Data Science

3 -

Database Connection

2,221 -

Datasets

5,223 -

Date Time

3,229 -

Demographic Analysis

186 -

Designer Cloud

743 -

Developer

4,376 -

Developer Tools

3,534 -

Documentation

528 -

Download

1,038 -

Dynamic Processing

2,941 -

Email

929 -

Engine

145 -

Enterprise (Edition)

1 -

Error Message

2,262 -

Events

198 -

Expression

1,868 -

Financial Services

1 -

Full Creator

2 -

Fun

2 -

Fuzzy Match

714 -

Gallery

666 -

GenAI Tools

3 -

General

2 -

Google Analytics

155 -

Help

4,711 -

In Database

966 -

Input

4,296 -

Installation

361 -

Interface Tools

1,902 -

Iterative Macro

1,095 -

Join

1,960 -

Licensing

252 -

Location Optimizer

60 -

Machine Learning

260 -

Macros

2,866 -

Marketo

12 -

Marketplace

23 -

MongoDB

82 -

Off-Topic

5 -

Optimization

751 -

Output

5,259 -

Parse

2,328 -

Power BI

228 -

Predictive Analysis

937 -

Preparation

5,171 -

Prescriptive Analytics

206 -

Professional (Edition)

4 -

Publish

257 -

Python

855 -

Qlik

39 -

Question

1 -

Questions

2 -

R Tool

476 -

Regex

2,339 -

Reporting

2,434 -

Resource

1 -

Run Command

576 -

Salesforce

277 -

Scheduler

411 -

Search Feedback

3 -

Server

631 -

Settings

936 -

Setup & Configuration

3 -

Sharepoint

628 -

Spatial Analysis

599 -

Starter (Edition)

1 -

Tableau

512 -

Tax & Audit

1 -

Text Mining

468 -

Thursday Thought

4 -

Time Series

432 -

Tips and Tricks

4,187 -

Topic of Interest

1,126 -

Transformation

3,732 -

Twitter

23 -

Udacity

84 -

Updates

1 -

Viewer

3 -

Workflow

9,983

- « Previous

- Next »