Weekly Challenges

Solve the challenge, share your solution and summit the ranks of our Community!

IDEAS WANTED

Want to get involved? We're always looking for ideas and content for Weekly Challenges.


Challenge #11: Identify Logical Groups


Here is this week’s challenge. I would like to thank everyone for playing along and for your feedback. The solution to last week's Challenge #10 is HERE.

The use case:

A manufacturing company receives customer complaint data daily from its call centers about the medical parts it distributes to its customers. The company monitors these comments to understand which parts and part groups have the highest complaint rates. This helps the company prioritize which parts to focus on from a development standpoint.

In this exercise, take the customer complaint data and identify which bucket each complaint falls into. A complaint can fall into multiple buckets; such complaints must be flagged, because they take the highest priority. Create an aggregate view showing which buckets or bucket pairings have the highest number of complaints.

This is only a subset of the data, so not every record will be assigned to a bucket; unassigned records can be ignored.
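A minimal Python sketch of the task, assuming (hypothetically) that each bucket is defined by a list of keywords and that a complaint belongs to a bucket when any of its keywords appears in the complaint text. The bucket names, keywords, and complaints are invented for illustration; they are not the challenge's start file.

```python
from collections import Counter

# Hypothetical bucket definitions: bucket name -> keywords that signal it.
BUCKETS = {
    "Packaging": ["box", "seal"],
    "Sterility": ["contaminated", "sterile"],
    "Sizing": ["too small", "too large"],
}

# Hypothetical complaint records: (complaint_id, free-text comment).
COMPLAINTS = [
    (1, "Box arrived open and the seal was broken"),
    (2, "Glove was too small and not sterile"),
    (3, "Delivery was late"),
    (4, "Seal torn, item looks contaminated"),
    (5, "Sterile pouch seal failed"),
]


def assign_buckets(text, buckets):
    """Return the sorted tuple of buckets whose keywords occur in text."""
    lowered = text.lower()
    return tuple(sorted(
        name for name, keywords in buckets.items()
        if any(kw in lowered for kw in keywords)
    ))


def summarize(complaints, buckets):
    """Count complaints per bucket combination and flag multi-bucket ones."""
    counts = Counter()
    flagged = []
    for complaint_id, text in complaints:
        combo = assign_buckets(text, buckets)
        if not combo:
            continue  # unassigned records are ignored, per the challenge
        if len(combo) > 1:
            flagged.append(complaint_id)  # multi-bucket = highest priority
        counts[" + ".join(combo)] += 1
    return counts, flagged


counts, flagged = summarize(COMPLAINTS, BUCKETS)
for combo, n in counts.most_common():
    print(f"{combo}: {n}")
print("Flagged:", flagged)
```

Sorting the bucket names before joining them makes "Packaging + Sterility" and "Sterility + Packaging" the same key, so a pairing is counted once regardless of which keyword matched first.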

Update: As of 9/26/19, the start file and solution for this challenge were modified, so your solution will not match those posted by other Community members before this date.

Labels: Advanced, Data Analysis, Intermediate, Join, Parse, Transform


This article has been updated with 2 different solutions.


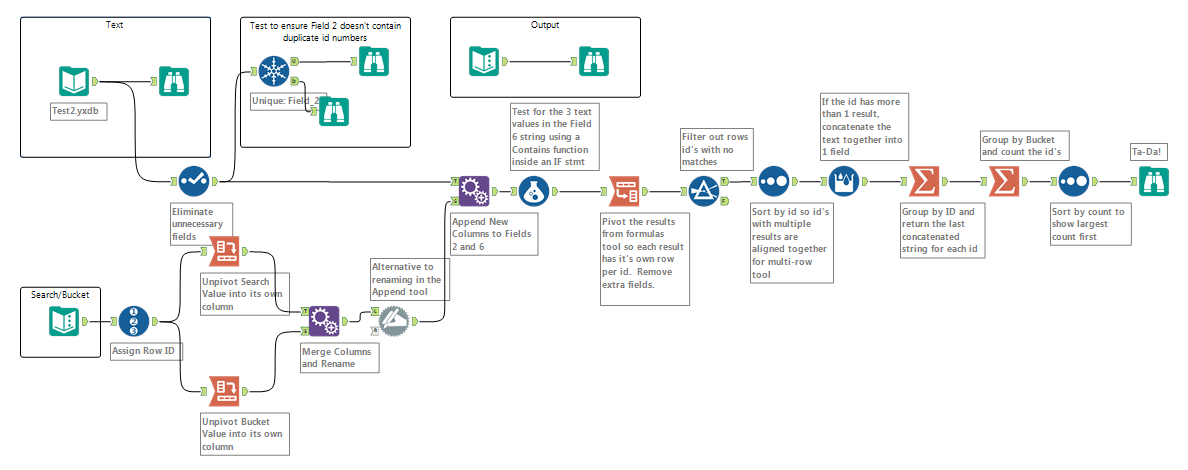

I love comparing all these solutions and learning more about how to use different tools. I'd like to see an explanation of what the search macro is doing. I've attached a screenshot of my workflow in the spoiler window. It works great if you know how many words you are looking for. On my machine, it finished in under 3 seconds, compared with 10 seconds for Solution 1 and 12 seconds for Solution 2.

Looking at Solution 1, I learned that I could have eliminated the Sort and Multi-Row Formula tools I used and instead used the Concatenate option in the first Summarize tool. I tested that approach on my workflow; it also worked and took the same amount of time. The tricky part, I found, is how to introduce the search items when you don't know how many you will have. To be on the safe side, I also included a test to ensure each complaint ticket had only one row of data. I'm happy to send a packaged workflow to anyone who wants it.
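Outside Alteryx, the group-and-concatenate idea looks roughly like the minimal Python sketch below. It assumes keyword matching has already produced long rows of (complaint, bucket) hits; the data is hypothetical. Deduplicating and sorting the buckets is an addition of this sketch so the concatenated key does not depend on row order. It also includes the one-row-per-ticket safety check described above.

```python
from collections import defaultdict

# Hypothetical long-format match results: one row per (complaint, bucket) hit,
# in no particular order and possibly with duplicate hits.
matches = [
    (4, "Sterility"),
    (1, "Packaging"),
    (4, "Packaging"),
    (2, "Sizing"),
    (1, "Packaging"),  # same bucket matched twice by different keywords
    (2, "Sterility"),
]


def concatenate_by_complaint(rows, sep=", "):
    """Group by complaint and concatenate its distinct buckets in sorted order."""
    grouped = defaultdict(set)
    for complaint_id, bucket in rows:
        grouped[complaint_id].add(bucket)
    # One output row per complaint: (complaint_id, "BucketA, BucketB").
    return [(cid, sep.join(sorted(b))) for cid, b in sorted(grouped.items())]


summary = concatenate_by_complaint(matches)

# Safety check: each complaint ID must appear on exactly one row.
ids = [cid for cid, _ in summary]
assert len(ids) == len(set(ids)), "a complaint produced more than one row"

for row in summary:
    print(row)
```

Grouping replaces the sort-then-compare-previous-row pattern: because all hits for a complaint land in the same set, no ordering step is needed before concatenating.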


Hi,

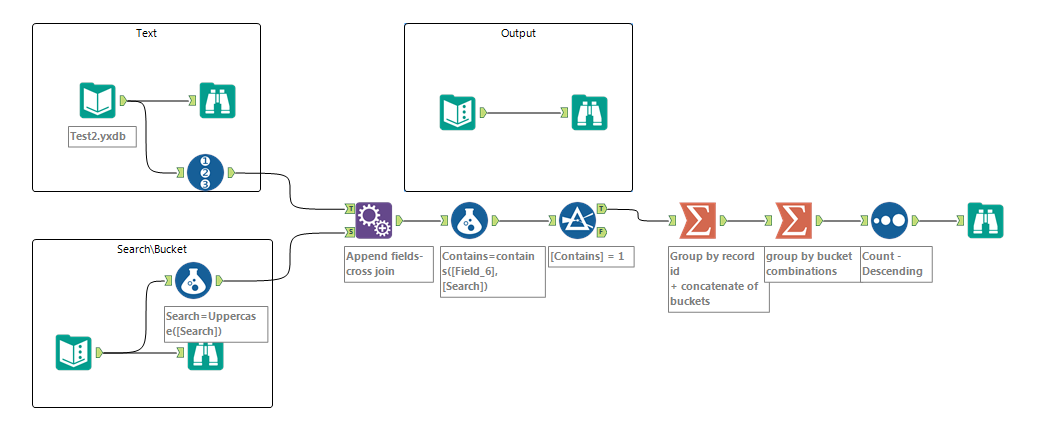

I also tried to make this faster. I used a full cross join and then filtered to the rows where the complaint contains the search term. It runs very quickly.
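The cross-join-then-filter approach can be sketched in Python as follows. This is a minimal illustration with hypothetical search terms and complaints; it assumes search terms are stored in lowercase and matched as plain substrings. Because the search table is just data, the same code works no matter how many search terms there are.

```python
from itertools import product

# Hypothetical search table: (search term, bucket). Its length is not fixed,
# so the same code works however many search terms there are.
search_terms = [
    ("seal", "Packaging"),
    ("box", "Packaging"),
    ("sterile", "Sterility"),
    ("too small", "Sizing"),
]

# Hypothetical complaints: (complaint_id, text).
complaints = [
    (1, "Box arrived open and the seal was broken"),
    (2, "Glove was too small and not sterile"),
    (3, "Delivery was late"),
]

# Full cross join: every complaint paired with every search term
# (len(complaints) * len(search_terms) candidate rows).
joined = product(complaints, search_terms)

# Filter: keep only the pairs where the lowercased complaint contains the term.
hits = [
    (cid, term, bucket)
    for (cid, text), (term, bucket) in joined
    if term in text.lower()
]

for hit in hits:
    print(hit)
```

The trade-off is size: the joined table has one row per complaint per search term, which is fine for a subset like this one but grows quickly as both lists get longer.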


Similar solution to the two provided; the differences in approach are in the spoiler below.

This post has been edited by Community Moderation to redact sensitive attachments. The original attachment has been replaced by post_placeholder.txt.


I am pleased with my results 🙂

Cheers,

Mark

This post has been edited by Community Moderation to redact sensitive attachments. The original attachment has been replaced by post_placeholder.txt.



My solution, similar to others.

This post has been edited by Community Moderation to redact sensitive attachments. The original attachment has been replaced by post_placeholder.txt.


I wasted a lot of time on this week's challenge trying to figure out how to parse the complaint field. In the end, I gave up and found a solution that didn't involve parsing the text.

This post has been edited by Community Moderation to redact sensitive attachments. The original attachment has been replaced by post_placeholder.txt.


