Past Analytics Excellence Awards


Author: Michael Barone, Data Scientist

Company: Paychex Inc

Awards Category: Best Use of Predictive

Describe the problem you needed to solve

Each month, we run two dozen predictive models on our client base (600,000 clients). These include various up-sell, cross-sell, retention, and credit risk models. For each model, we generally group clients into buckets that identify how likely they are to buy a product, leave us, default on payment, and so on. Getting these results into the hands of the end-users who make the decisions is an arduous task: there are many different end-users, and each can be focused on specific criteria (clients in a certain zone, clients with a certain number of employees, clients in a certain industry, etc.).

Describe the working solution

I have a prototype app deployed via Alteryx Server that allows the end-user to “self-service” their modeling and client criteria needs. It is not yet in Production, but it could give end-users direct access to results without a “go-between” (my department) having to filter and distribute massive client lists.

Step 1: ETL

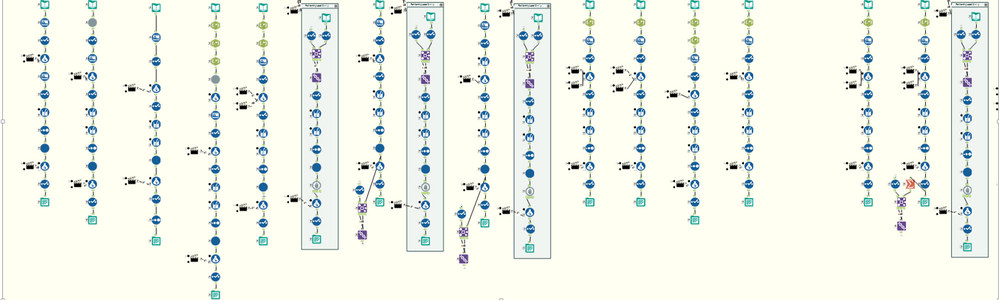

- I have an app that runs every month after our main company data sources have been refreshed:

This results in several YXDBs that are used in the models. Not all YXDBs are used in all models. This creates a central repository for all YXDBs, from which each specific model can pull in what is needed.

- We also use Calgary databases for our really large data sets (billions of records).

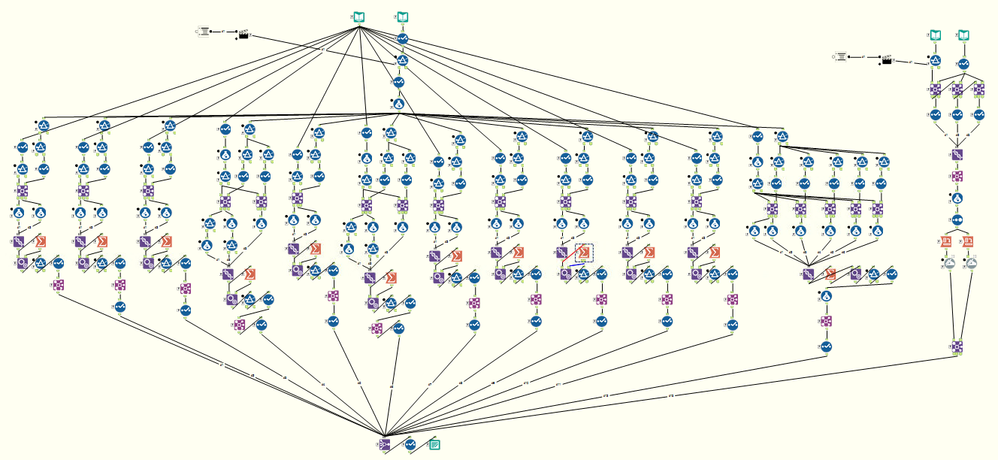

Once all the YXDBs and CYDBs are created, we then run our models. Here is just one of our 24 models:

- Our Data Scientists like to write raw R-code, so the R tool used before the final Output Tool at the bottom contains their code:

The individual model scores are stored in CYDB format, to make the app run fast (since the end-user will be querying against millions and millions of records). Client information is also stored in this format, for this same reason.

Step 2: App

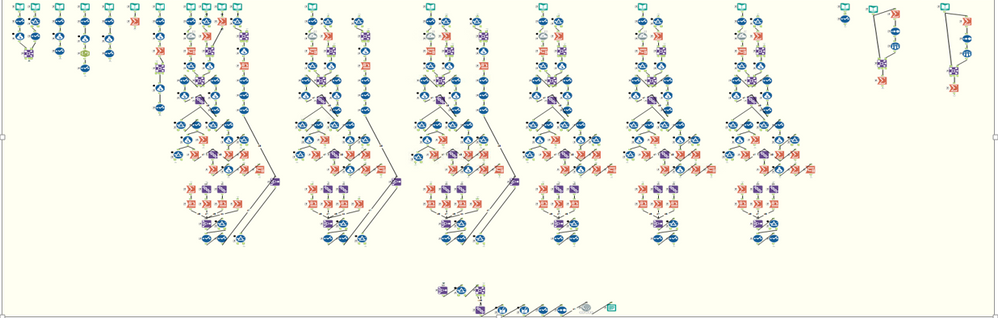

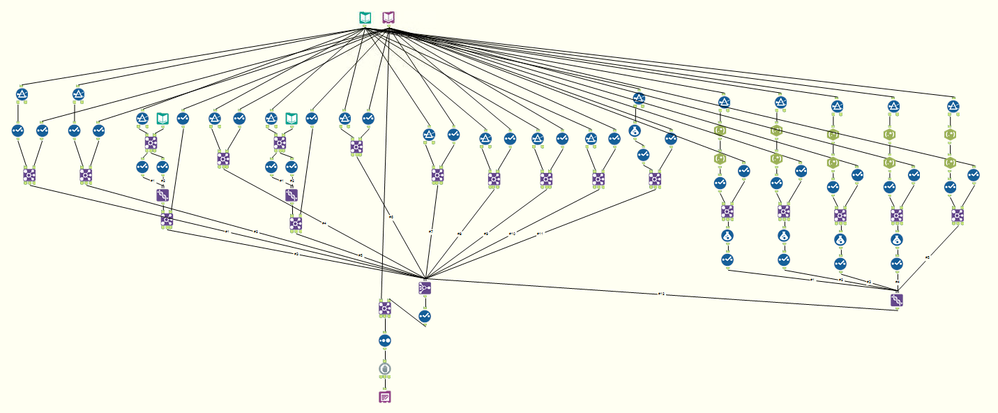

- Since the end-user will be making selections from a tree, we have to create the codes for the various trees and their branches. I want them to be able to pick through two trees: one for the model(s) they want, and one for the client attributes they want. For this app, they must choose a model, or no results will be returned. They DO NOT have to choose client attributes. If no attribute is chosen, then the entire client base will be returned. This presents a challenge in key-building, since an app that uses trees normally returns values only for the keys that are selected. The solution is to attach keys to each client record for each attribute. Here is my module that builds the keys this way (there will be 12 different attributes from which the user can choose):

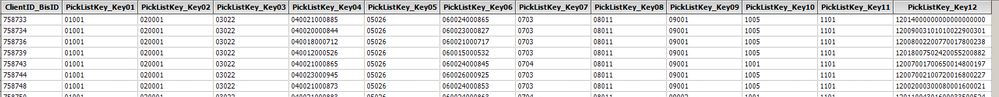

- Here is what the client database looks like once the keys are created and appended:

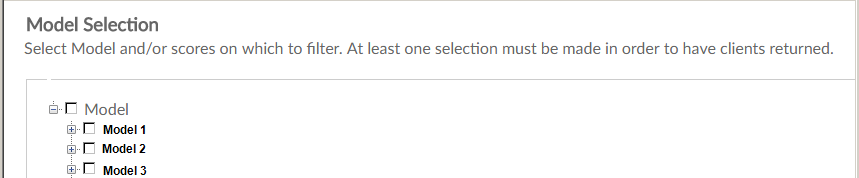
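As a rough illustration of the key-building idea, here is a minimal Python sketch of attaching one key per attribute to every client record. This is not the Alteryx module itself. The attribute names, the `attribute|value` key format, and the `UNKNOWN` placeholder are all assumptions made for the example; the entry only says that there are 12 attributes and that every client record carries a key for each one.

```python
# Hypothetical subset of the 12 client attributes mentioned in the entry.
ATTRIBUTES = ["zone", "employee_band", "industry"]


def build_client_keys(client):
    """Return one tree key per attribute for a single client record.

    The 'attribute|value' key format is an assumption for illustration.
    """
    keys = {}
    for attr in ATTRIBUTES:
        value = client.get(attr)
        # Every client gets a key for every attribute, even when the
        # value is missing, so no record is left without a key.
        label = "UNKNOWN" if value is None else str(value)
        keys[f"{attr}_key"] = f"{attr}|{label}"
    return keys


# Made-up sample records, not real client data.
clients = [
    {"client_id": 1, "zone": "03", "employee_band": "1-4", "industry": "Retail"},
    {"client_id": 2, "zone": "07", "employee_band": "5-19", "industry": None},
]

# Append the keys to each client record.
keyed_clients = [{**c, **build_client_keys(c)} for c in clients]
```

Because each record carries a key for every attribute, a later filter can treat "nothing selected" for an attribute as "keep everyone" rather than "match nothing".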

- The model keys do not have to be as complex a build as client keys, because the user is notified that if they don’t make a model selection, then no data will be returned:

- Once the key tables are properly made, we design the app. For the model selection, there is only one key (since there is only one variable, namely, the model). This is on the far right-hand side. It makes use of the very powerful and fast Calgary join (joining the key from the pick-list to the key in the model table). For the client table, since there are 12 attributes/keys, we need 12 Calgary joins. Again, this is why we put the database into Calgary format. At the very end, we simply join the clients returned to the model selected:
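The selection rules described in this step (a model is required, client attributes are optional, and the final result joins the selected clients to the selected model) can be sketched in plain Python. This is only an approximation of the logic under stated assumptions, not the Calgary joins used in the app. The function names, column names, model names, and bucket labels are all hypothetical.

```python
def select_clients(keyed_clients, selections):
    """Keep clients matching every attribute that has a selection.

    `selections` maps a key column to the set of keys picked in the tree.
    An attribute with no selection places no restriction on the clients.
    """
    return [
        client
        for client in keyed_clients
        if all(not chosen or client[col] in chosen
               for col, chosen in selections.items())
    ]


def run_app(keyed_clients, model_scores, chosen_models, selections):
    """Join the selected clients to the scores of the selected model(s)."""
    if not chosen_models:
        # No model chosen: nothing is returned, as the user is told up front.
        return []
    kept_ids = {c["client_id"] for c in select_clients(keyed_clients, selections)}
    return [
        row for row in model_scores
        if row["model"] in chosen_models and row["client_id"] in kept_ids
    ]


# Made-up sample data for illustration only.
keyed_clients = [
    {"client_id": 1, "zone_key": "zone|03", "industry_key": "industry|Retail"},
    {"client_id": 2, "zone_key": "zone|07", "industry_key": "industry|Legal"},
]
model_scores = [
    {"client_id": 1, "model": "retention", "bucket": "high"},
    {"client_id": 2, "model": "retention", "bucket": "low"},
    {"client_id": 1, "model": "upsell", "bucket": "medium"},
]

all_retention = run_app(keyed_clients, model_scores, {"retention"}, {})
zone_03_only = run_app(keyed_clients, model_scores, {"retention"},
                       {"zone_key": {"zone|03"}})
no_model = run_app(keyed_clients, model_scores, set(), {"zone_key": {"zone|03"}})
```

In this sketch, an empty attribute selection returns the whole client base for the chosen model, while an empty model selection returns nothing, mirroring the two rules stated above.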

Step 3: Gallery

- Using our private server behind our own firewall, we set up a Gallery and Studio for our apps:

- The app can now be run, and the results can be downloaded by the end-user to CSV (I even put a link to an “at-a-glance” guide to all our models):

- The user can select the model(s) they want, and the scores they want:

And then they can select the various client criteria:

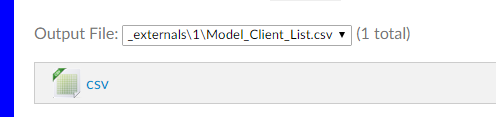

Once done running (takes anywhere between 10 – 30 seconds), they can download their results to CSV:

Describe the benefits you have achieved

I no longer have to send out two dozen lists to the end-users, and the end-users no longer have to wait for me to send them; they can get the lists on their own. Giving them a self-service tool makes the whole process more efficient and streamlined.


Author: Michael Barone, Data Scientist

Company: Paychex Inc.

Awards Category: Most Time Saved

We currently have more than two dozen predictive models, pulling data of all shapes and sizes from many different sources. A round of scoring takes 4 hours of total processing time. Before Alteryx, we had a dozen models, and processing took around 96 hours. That's a 2x increase in our model portfolio, but a 24x decrease in processing time.

Describe the problem you needed to solve

Our Predictive Modeling group, which began in the early-to-mid 2000s, had grown from one person to four people by summer 2012. I was one of those four. Our portfolio had grown from one model to more than a dozen. We were what you might call a self-starting group. While we had the blessing of upper Management, we were small and independent, doing all research, development, and analysis ourselves. We started out using the typical everyday Enterprise software solutions. While those solutions worked well for a few years, by the time we were up to a dozen models, we had outgrown them. A typical round of "model scoring," which we did at the beginning of every month, took about two-and-a-half weeks, and ninety-five percent of that was system processing time, which consisted of cleansing, blending, and transforming the data from varying sources.

Describe the working solution

We blend data from our internal databases: everything from Excel and Access to Oracle, SQL Server, and Netezza. Several models include data from third-party sources such as D&B, and the Experian CAPE file we get with our Alteryx data package.

Describe the benefits you have achieved

We have recently taken on projects that require us to process and analyze billions of records of data. Thanks to Alteryx, and more specifically the Calgary format, most of our time is spent analyzing the data, not pulling, blending, and processing it. This leads to faster delivery of results and faster business insight.

