Past Analytics Excellence Awards


Author: Andy Kriebel (@VizWizBI), Head Coach

Company: The Information Lab

Awards Category: Best 'Alteryx for Good' Story

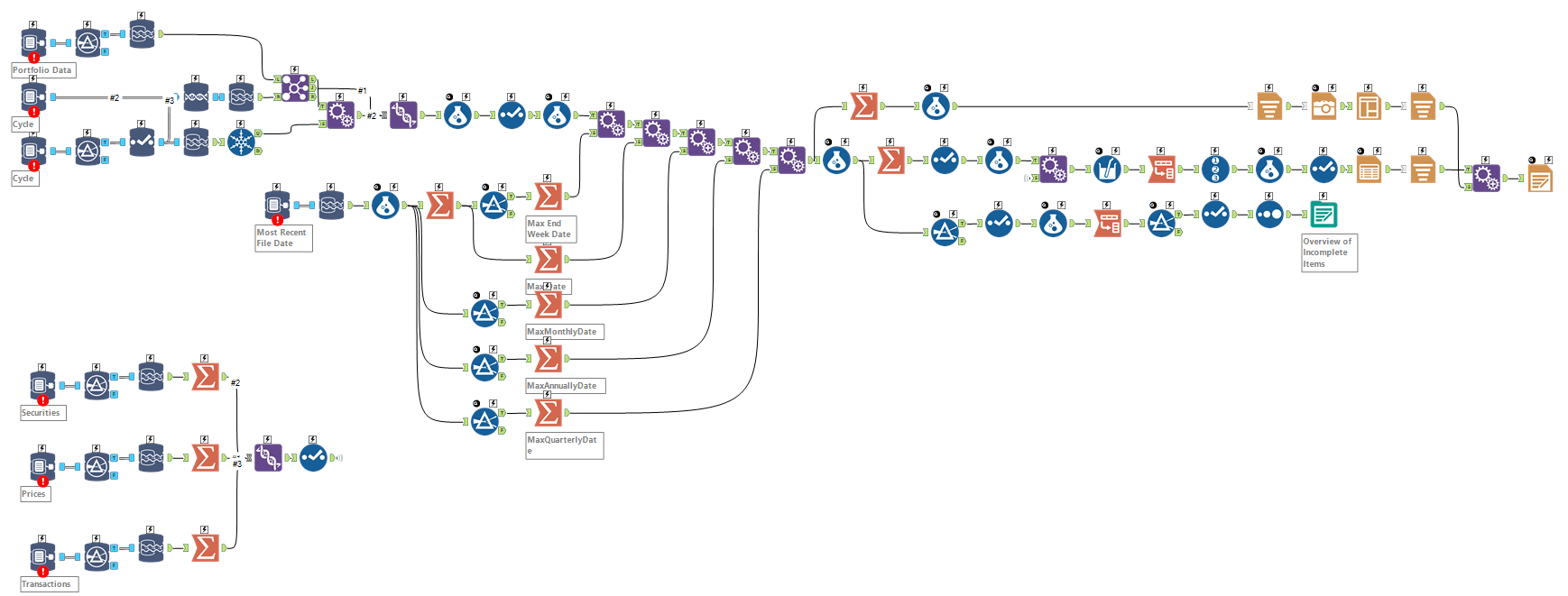

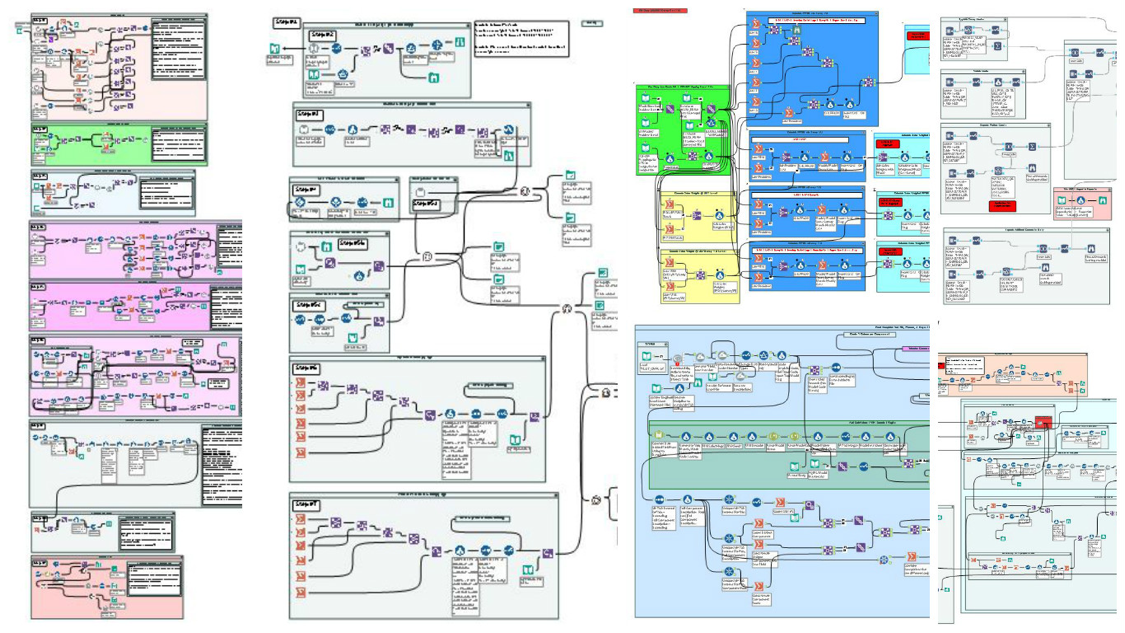

The Connect2Help 211 team outlined their requirements, including reviewing the database structure and what they were looking for as outputs. Note that this was also the week that we introduced Data School to Alteryx. We knew that the team could use Alteryx to prepare, cleanse and analyse the data. Ultimately, the team wanted to create a workflow in Alteryx that Connect2Help 211 could use in the future.

Ann Hartman, Director of Connect2Help 211, summarized the impact best: "We were absolutely blown away by your presentation today. This is proof that a small group of dedicated people working together can change an entire community. With the Alteryx workflow and Tableau workbooks you created, we can show the community what is needed where, and how people can help in their communities."

Full details of the project can be found here: http://www.thedataschool.co.uk/andy-kriebel/connect2help211/

Describe the problem you needed to solve

In July 2015, Connect2Help 211, an Indianapolis-based non-profit service that facilitates connections between people who need human services and those who provide them, reached out to the Tableau Zen Masters as part of a broader effort that the Zens participate in for the Tableau Foundation. Their goals and needs were simple: create an ETL process that extracts Refer data, transforms it, and loads it into a MySQL database that can be connected to Tableau.

Describe the working solution

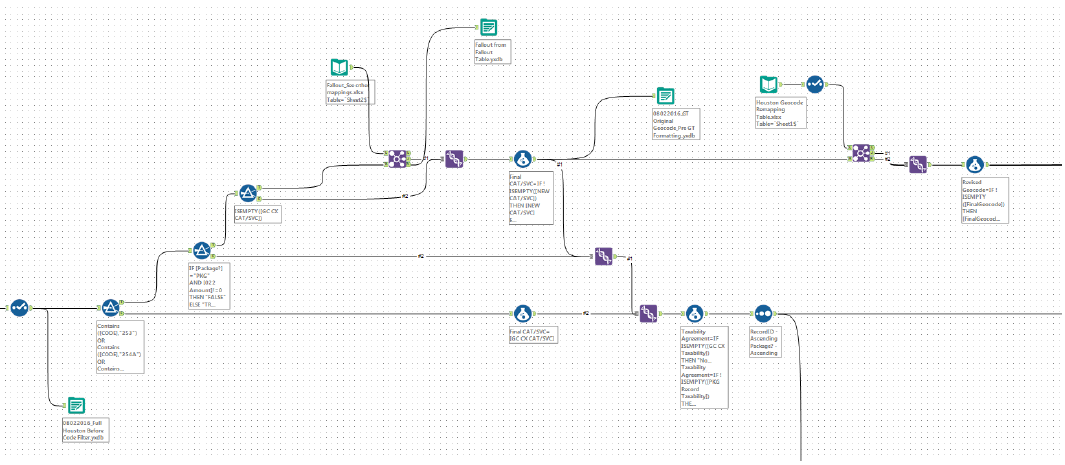

See the workflow and further details in the blog post - http://www.thedataschool.co.uk/andy-kriebel/connect2help211/

Describe the benefits you have achieved

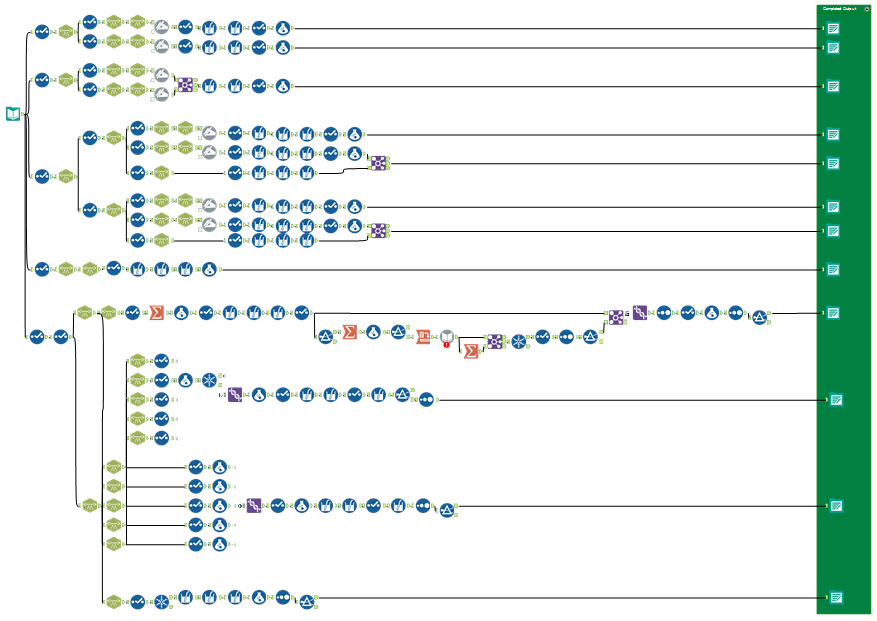

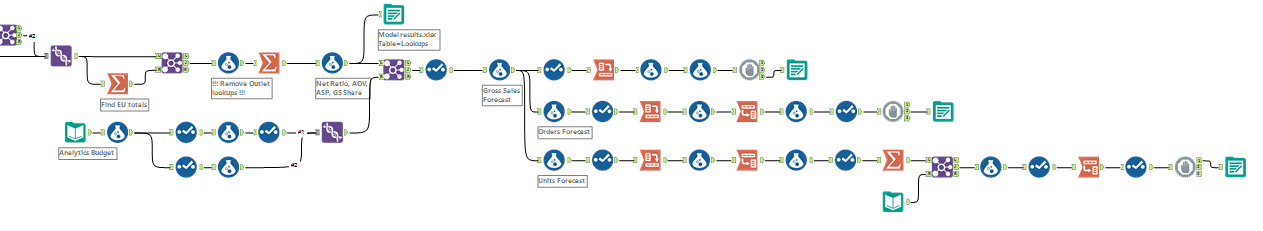

While the workflow looks amazingly complex, it absolutely accomplished the goal of creating a reusable ETL workflow. Ben Moss kicked off the project presentations by taking the Connect2Help 211 team through what the team had to do and how Connect2Help 211 could use this workflow going forward.

From there, the team went through the eight different visualisations that they created in Tableau. Keep in mind that Connect2Help 211 wasn't expecting any visualisations as part of the output, so to say they were excited by what the team created in just a week is a massive understatement.

- 2016 Entries
- Best ‘Alteryx for Good’ Story
- Business Intelligence

Author: Rana Dalbah, Director - Workforce Intelligence & Processes

Company: BAE Systems

Awards Category: Most Unexpected Insight - Best Use Case for Alteryx in Human Resources

Because we work in Human Resources, people do not expect us to be technology savvy, let alone become technology leaders and host a "Technology Day" to show HR and other functions the technology we are leveraging and how it has allowed us, as a team, to become more efficient and scalable.

Within the Workforce Intelligence team, which is responsible for HR metrics and analytics, we have been able to leverage Alteryx in a way that has allowed us to become more scalable and not "live in the data", spending the majority of our time formatting, cleansing, and re-indexing. For example, Alteryx replaced both Microsoft T-SQL and R code for our HR Dashboard, which decreased its pre-processing time from 8-16 hours per month to less than 10 minutes per month, and that figure does not even account for the elimination of human intervention and error.

The time savings from Alteryx have allowed us to create custom metrics in the dashboard faster to meet customer demands. They have also given us the opportunity to pursue other aspects of Alteryx, such as forecast modeling, statistical analysis, and predictive analytics. Being able to turn the HR Dashboard around in two days instead of one week has been a game changer.

The HR dashboard's data is constantly used in our Quarterly Business Reviews and has attracted the attention of our CEO and Senior Leadership. Another use that we have found for Alteryx is a workflow for our Affirmative Action data processing. Our Affirmative Action process has lacked consistency over the years and has changed hands countless times, with no one person owning it for more than a year. After seeing the capabilities for our HR Dashboard, we decided to leverage Alteryx to create a workflow for our Affirmative Action processing that took 40 hours of work down to 7 minutes, plus an additional hour for source data recognition and correction. We not only have been able to cut a two- or three-month process down to a few minutes, but we also now have a documented workflow that lists all the rules and exceptions for our process and would only need to be tweaked slightly as requirements change.

For our first foray into predictive analytics, we ran a flight risk model on a certain critical population. Before Alteryx, the team used SPSS and R for the statistical analysis and created a Microsoft Access database to combine and process at least 30 data files; running the process, with predictive measures, took about six months. After we purchased Alteryx, the workflow was rebuilt and refined in Alteryx, and we were able to run a smaller flight risk analysis on another subset of our population in about a month, with better visualizations than R had to offer. By reducing data wrangling time, we can create models in a more timely fashion, while the results are still relevant.

The biggest benefit of these time-savings is that it has freed up our analytics personnel to focus less on “data chores” and more on developing deeper analytics and making analytics more relevant to our executive leadership and our organization as a whole. We’ve already become more proactive and more strategic now that we aren’t focusing our time on the data prep. The combination of Alteryx with Tableau is transformative for our HR, Compensation, EEO-1, and Affirmative Action analytics. Now that we are no longer spending countless hours prepping data, we’re assisting other areas, including Benefits, Ethics, Safety and Health, Facilities, and even our Production Engineering teams with ad-hoc analytics processing.

Describe the problem you needed to solve

A few years ago, HR metrics were a somewhat foreign concept for our Senior Leadership. We could barely get consensus on the definitions of headcount and attrition. But for HR to bring to the table what Finance and Business Development do (metrics, data, measurements, etc.), we needed to find a way to start displaying relevant HR metrics that could steer Senior Leadership in the right direction when making decisions about the workforce. We launched an HR Dashboard in January 2014; it was simple and met only minimum requirements, but it was a start. We used Adobe, Apache code, and SharePoint, along with data in Excel files, to create simple metrics and visuals. In April 2015, we launched the HR Dashboard in Tableau with the help of a third party that used Microsoft SQL Server to read the data and visualize it based on our requirements. However, this was not the best solution for us because we could not make dynamic changes to the dashboard quickly. The dashboard was being released about two weeks after fiscal month end, which is an eternity in terms of relevance to our Senior Leadership.

Once we had the talent in-house, we were able to leverage our technological expertise in Tableau and then, with the introduction of Alteryx, create workflows that cut a two-week process down to a few days, including data validation and dashboard distribution to the HR Business Partners and Senior Leadership. But why stop there? We viewed Alteryx as a way to refine existing manual processes (marrying multiple Excel files using VLOOKUPs, pivot tables, etc.) that were not necessarily documented by their users, and to cut back on processing time. If we can build it once and spend minimal time maintaining the workflow, why not build it? This way, all one has to do in the future is append or replace a file and hit the start button, and the output is created. Easy peasy! That is when we decided to leverage this tool for our compliance team and build out the Affirmative Action process, described above, along with the EEO-1 and Vets processing.

What took months and multiple resources now takes minutes and only one resource.

Describe the working solution

The majority of the data we use comes from our HCM (Human Capital Management) database in Excel-based files. In addition to the HCM files, we also use files from our applicant tracking system (ATS), IMPACT Awards data, our benefits provider, 401K, Safety and Health data, and pension providers.

Anything that does not come out of our HCM system comes from a third-party vendor. These files are used specifically for our HR dashboard, Affirmative Action Plan workflow, Safety & Health Dashboard, and benefits dashboard.

In addition to dashboards, we have been able to leverage these files, along with survey data and macro-economic factors, for our flight risk model. We have also leveraged Google Maps data to calculate the commute time from an employee's home zip code to their work location zip code. This gave a more accurate measure of time spent on the road to and from work than distance alone.

The ultimate outputs vary: an HR dashboard, published as a Tableau workbook, that tracks metrics such as demographics, headcount, attrition, employee churn/movement, rewards, and exit surveys; the Flight Risk analysis, which allows us to determine what factors most contribute to certain populations leaving the company; and a compensation dashboard, also published as a Tableau workbook, that gives executives a quick way to do merit and Incentive Compensation planning, including base pay, pay ratios, etc.

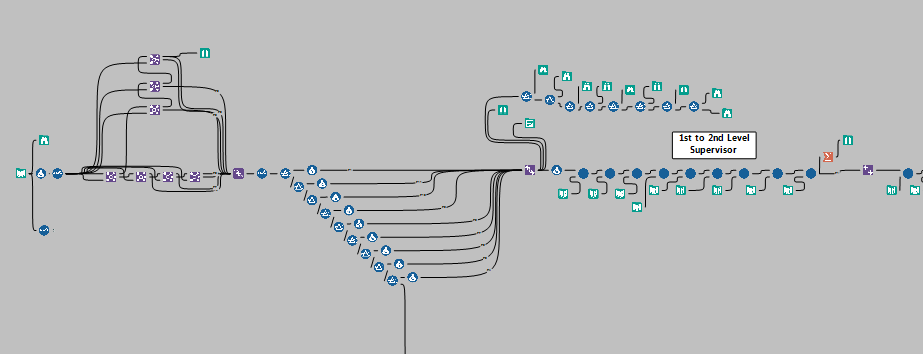

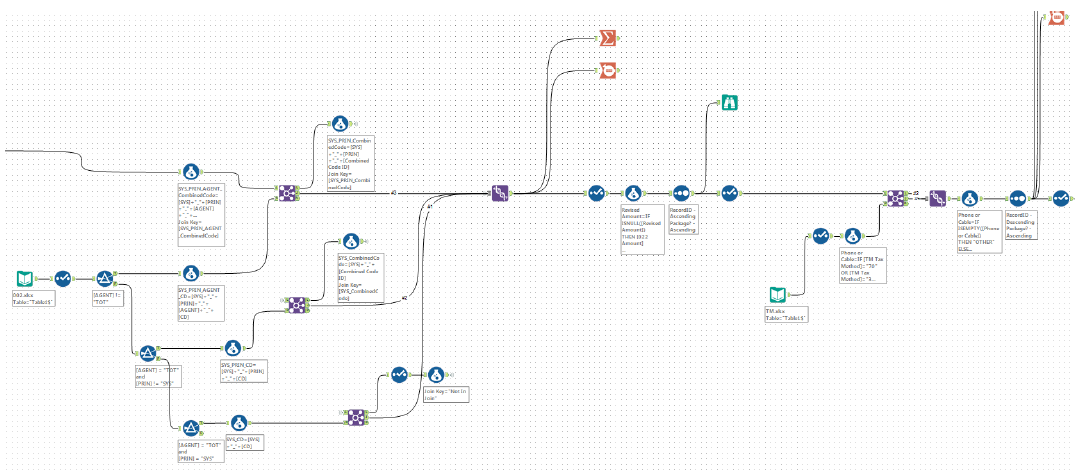

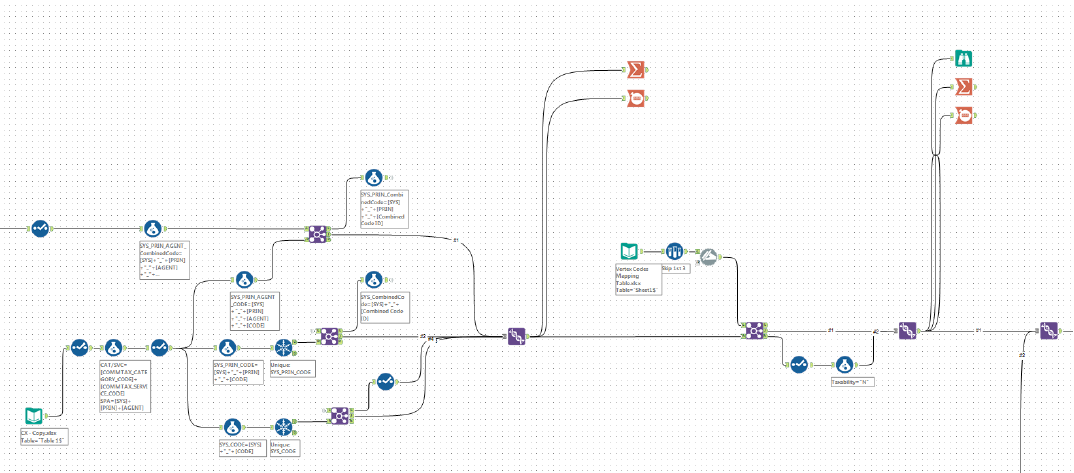

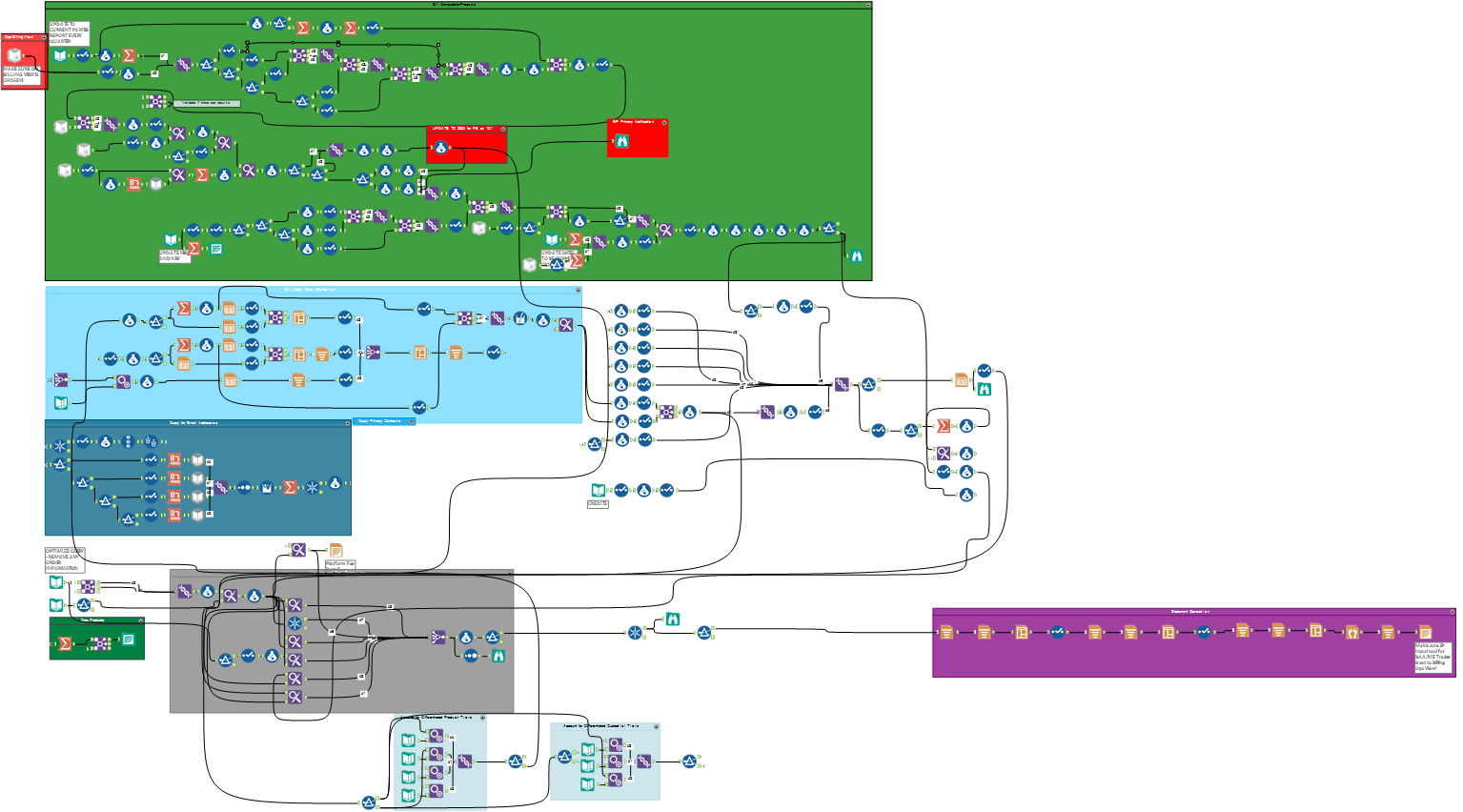

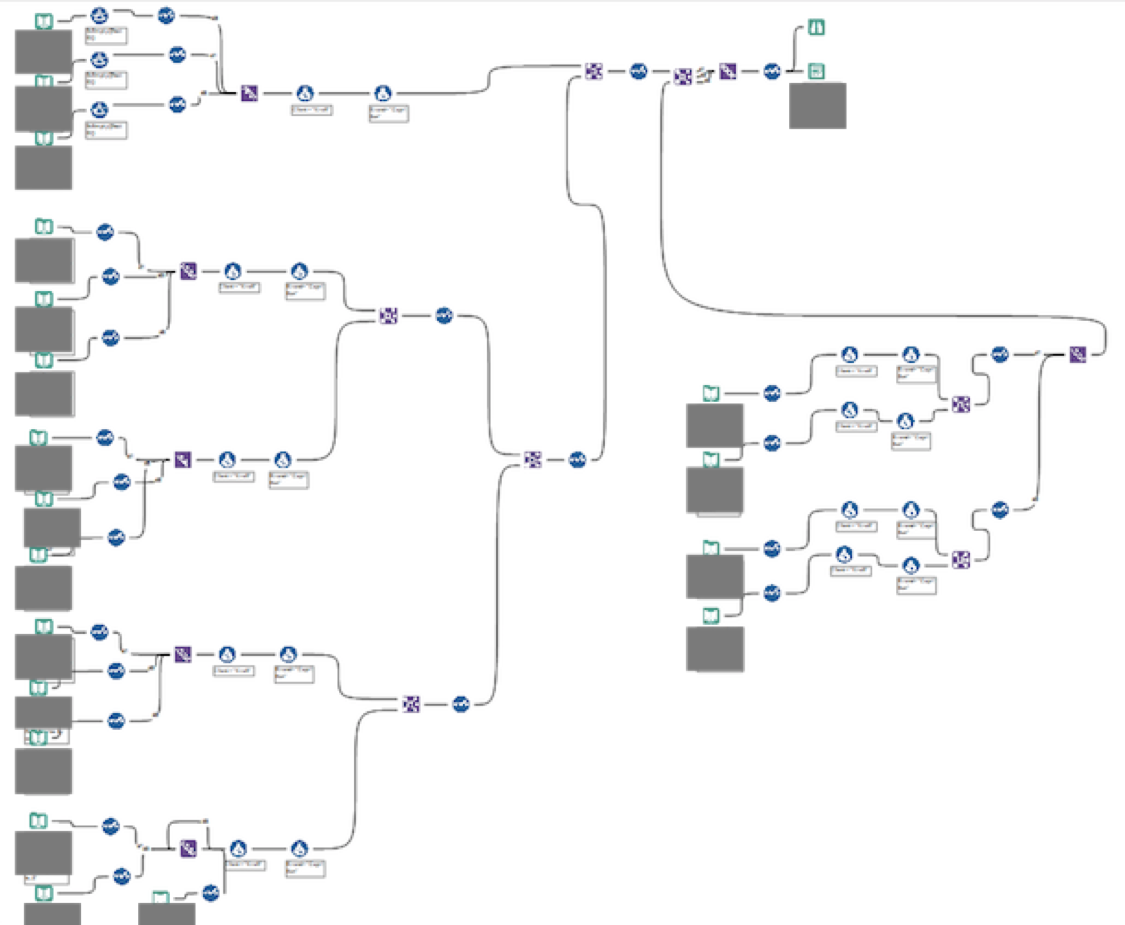

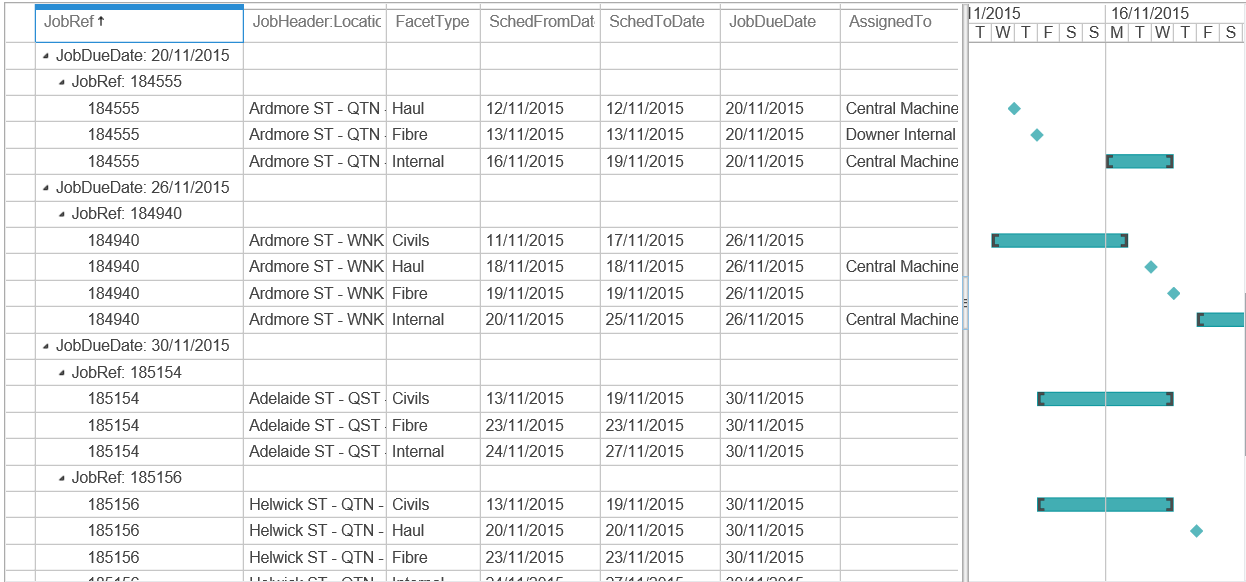

This workflow takes as its input our employee roster file, which includes each employee’s work location, supervisor identifiers, and supervisors’ work locations up to the fourth-level supervisor. In the first processing step, we used stacked joins to establish each employee’s supervisor hierarchy up to the eighth-level supervisor. We then needed to assign each employee an initial “starting location” based on location type. That meant “rolling up” the employee’s location until we hit an actual company site rather than a client site, because Affirmative Action reporting requires using actual company sites. The roll-up was accomplished using nested filters, which are easier to see, understand, modify, and track than a large ELSEIF function (important for team sharing).
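The hierarchy and starting-location steps can be illustrated with a minimal Python sketch. It assumes a hypothetical roster keyed by employee ID that holds each employee's supervisor ID and location code, plus a hypothetical set of company site codes, and it assumes that "rolling up" means falling back to the nearest supervisor's location, which the write-up implies but does not spell out. Each loop iteration stands in for one stacked join and the ordered checks stand in for the nested filters; it is not the team's actual workflow.

```python
from typing import Optional

# Hypothetical roster shape: employee id -> (supervisor id or None, work location code).
Roster = dict[str, tuple[Optional[str], str]]


def supervisor_chain(roster: Roster, emp_id: str, max_levels: int = 8) -> list[str]:
    """Return up to max_levels supervisors above emp_id; each step mirrors one stacked join."""
    chain: list[str] = []
    current = emp_id
    for _ in range(max_levels):
        supervisor = roster[current][0]
        if supervisor is None or supervisor not in roster:
            break  # top of the hierarchy, or the supervisor is missing from the roster
        chain.append(supervisor)
        current = supervisor
    return chain


def starting_location(roster: Roster, emp_id: str, company_sites: set[str]) -> Optional[str]:
    """Roll up from the employee's own location through their supervisors' locations
    until a company (non-client) site is found; None means the record needs correction."""
    for person in [emp_id, *supervisor_chain(roster, emp_id)]:
        location = roster[person][1]
        if location in company_sites:
            return location
    return None


roster: Roster = {
    "E1": ("S1", "CLIENT-A"),  # employee placed at a client site
    "S1": ("S2", "CLIENT-B"),  # their supervisor is also at a client site
    "S2": (None, "SITE-01"),   # second-level supervisor sits at a company site
    "E2": ("S9", "CLIENT-C"),  # hypothetical supervisor S9 is missing from the roster
}
company_sites = {"SITE-01"}
print(supervisor_chain(roster, "E1"))                  # ['S1', 'S2']
print(starting_location(roster, "E1", company_sites))  # SITE-01
print(starting_location(roster, "E2", company_sites))  # None
```

Returning `None` rather than raising keeps incomplete records in the flow so they can be routed to a separate file for correction.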

Once the initial location roll-up was completed, we needed to roll up employees until every employee was at a site with at least 40 employees. Simply rolling all employees up at once would be quick, but it would also result in fewer locations, with many employees rolled up too far from their current site, which would undermine the validity and effectiveness of our Affirmative Action plan. Instead, we used a slow-rolling aggregate sort technique: lone employees are rolled up into groups of two, groups of two are rolled up into larger groups, and so on, until every site has a minimum of 40 employees (or whatever number is input). The goal is to aggregate employees effectively while minimizing each employee’s “distance” from their initial site. This sorting was accomplished using custom-built macros with a group size control input that anyone using the workflow can quickly change.
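The slow-rolling idea can be approximated with a greedy loop: repeatedly take the smallest group that is still under the minimum and merge it into its parent site, so no group is rolled up further than it needs to be. This Python sketch is a simplified stand-in for the team's custom macros, not a reconstruction of them; the site tree (`parent`), headcounts, and site names are hypothetical, and it works at the site level rather than pairing individual employees.

```python
from typing import Optional


def slow_roll_up(
    headcount: dict[str, int],
    parent: dict[str, Optional[str]],
    min_size: int = 40,
) -> tuple[dict[str, str], dict[str, int]]:
    """Merge under-sized sites into their parents one at a time, smallest first.

    Returns (original site -> final site, original site -> number of roll-up steps).
    Top-level sites (no parent) are never rolled up, even if still under min_size.
    """
    counts = dict(headcount)                    # current size of each surviving group
    final = {site: site for site in headcount}
    levels = {site: 0 for site in headcount}
    absorbed: dict[str, str] = {}               # group -> group it was merged into

    def resolve(site: str) -> str:
        # Follow earlier merges so we never roll into a group that no longer exists.
        while site in absorbed:
            site = absorbed[site]
        return site

    while True:
        small = [s for s, n in counts.items() if n < min_size and parent.get(s) is not None]
        if not small:
            break
        site = min(small, key=lambda s: (counts[s], s))  # smallest group first
        target = resolve(parent[site])
        counts[target] = counts.get(target, 0) + counts.pop(site)
        absorbed[site] = target
        for original, current in final.items():
            if current == site:
                final[original] = target
                levels[original] += 1
    return final, levels


final, levels = slow_roll_up({"A": 10, "B": 25, "C": 50}, {"A": "B", "B": "C", "C": None})
print(final)   # {'A': 'C', 'B': 'C', 'C': 'C'}
print(levels)  # {'A': 2, 'B': 1, 'C': 0}
```

Changing `min_size` plays the role of the group size control input described above.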

The end result was the employee roster with the original data plus new fields identifying each employee's roll-up location and the level of roll-up from their initial location that was needed. A small offshoot “error” population (usually due to missing or incorrect data) is put into a separate file for later iterative correction.

Previously, this process was done through trial and error in Access and Excel. That process was not only much slower and more painstaking, but it also tended to result in larger “distances” of employees from their initial sites than was necessary. As a result, our new process is quicker, less error-prone, and arguably more defensible than its predecessor.

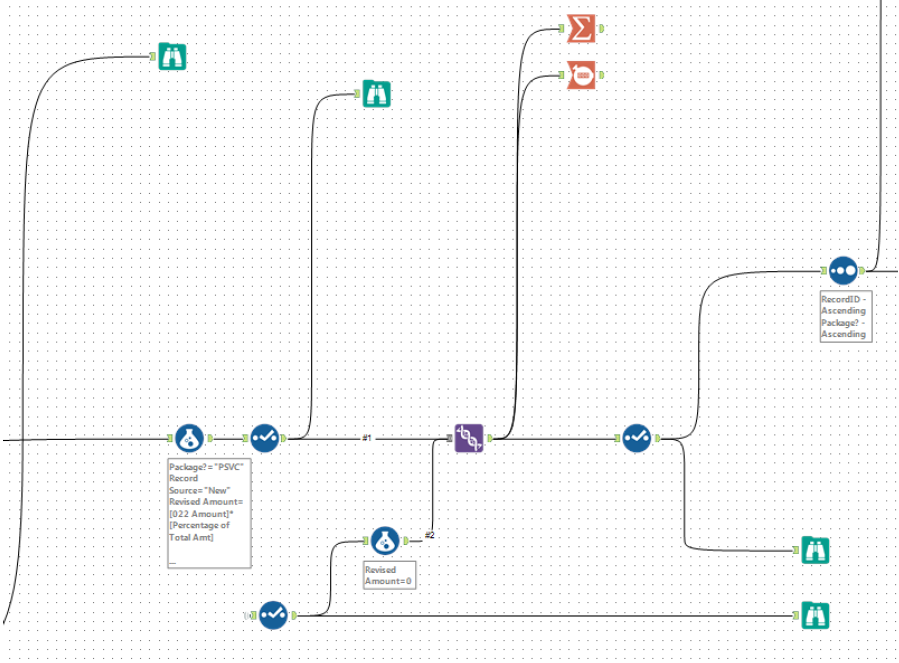

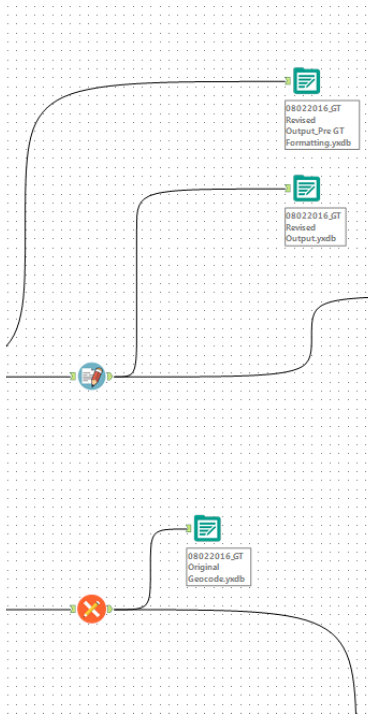

One of the Macros used in AAP:

Describe the benefits you have achieved

Alteryx has enabled our relatively small analytics shop (three people) to build a powerful, flexible, and scalable analytics infrastructure without working through our IT support. We are independent and can therefore respond to users' custom requests in a timely fashion. We are seen as agile and responsive, creating forecasting workflows in a few days to preview for our CEO and CHRO instead of creating PowerPoint slides to preview a concept. This way, we can show them what we expect it to look like and how it will work, and we can incorporate any feedback they give us as we go to meet their requirements. The possibilities of Alteryx, in our eyes, are endless, and for a minimal investment we are constantly "wowing" our customers with the services and products we provide. In the end, we have been successful in showing that HR can leverage the latest technologies to become more responsive to business needs without IT or developer involvement.

- 2016 Entries
- Human Resources
- Most Unexpected Insight
- Wildcard

Author: Alexandra Wiegel, Tax Business Intelligence Analyst

Company: Comcast Corp

Awards Category: Best Business ROI

A corporate tax department is not typically associated with a business intelligence team sleekly manipulating and mining large data sources for insights. Alteryx has allowed our Tax Business Intelligence team to provide incredibly useful insight to several branches of our larger Tax Department. Almost all of our data is in Excel or CSV format, so data organization, manipulation, and analysis were previously done within the confines of Excel, with occasional use of Tableau for visualization. Alteryx has given us the ability to analyze, organize, and manipulate very large amounts of data from multiple sources. Alteryx is exactly what we need to solve our colleagues’ problems.

Describe the problem you needed to solve

Several weeks ago, we were approached about using Alteryx for a discovery project that would hopefully give our colleagues further insight into how tax codes are applied to customer bills. Currently, our Sales Tax Team uses two different methods to apply taxes, one for each of two of our main products. The first method is to apply tax codes to customer bill records and then run those codes through software that generates and applies taxes to each record. The second method is more home-grown and appears to be leading to less consistent taxability on that side of our business.

Given that we sell services across the entire country, we wanted to explore standardization across all our markets. So, our Sales Tax team tasked us with creating a workflow that would compare the two methods, and with developing a plan toward standardization that shows the effect it would have on every customer’s bill.

Describe the working solution

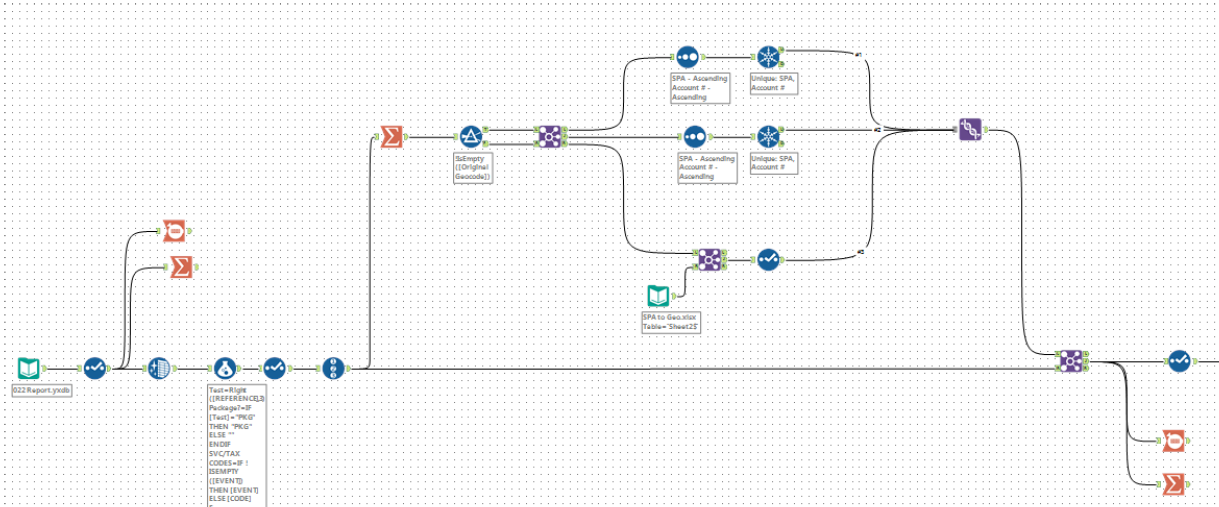

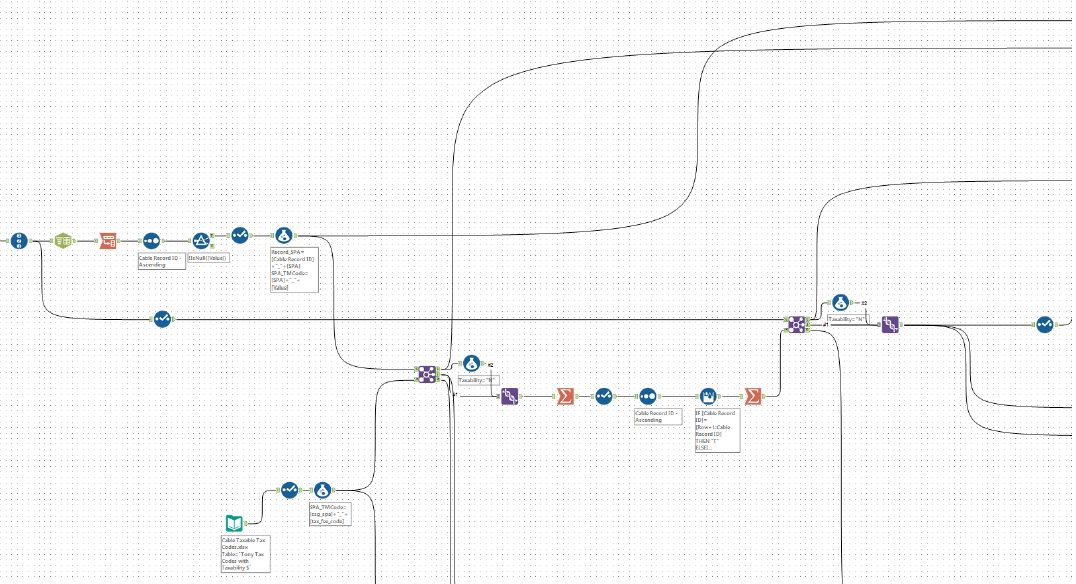

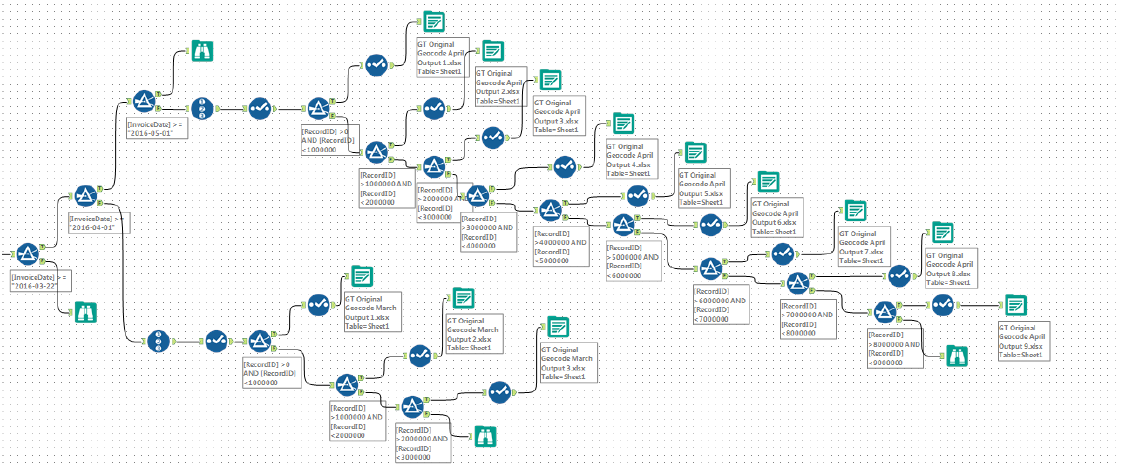

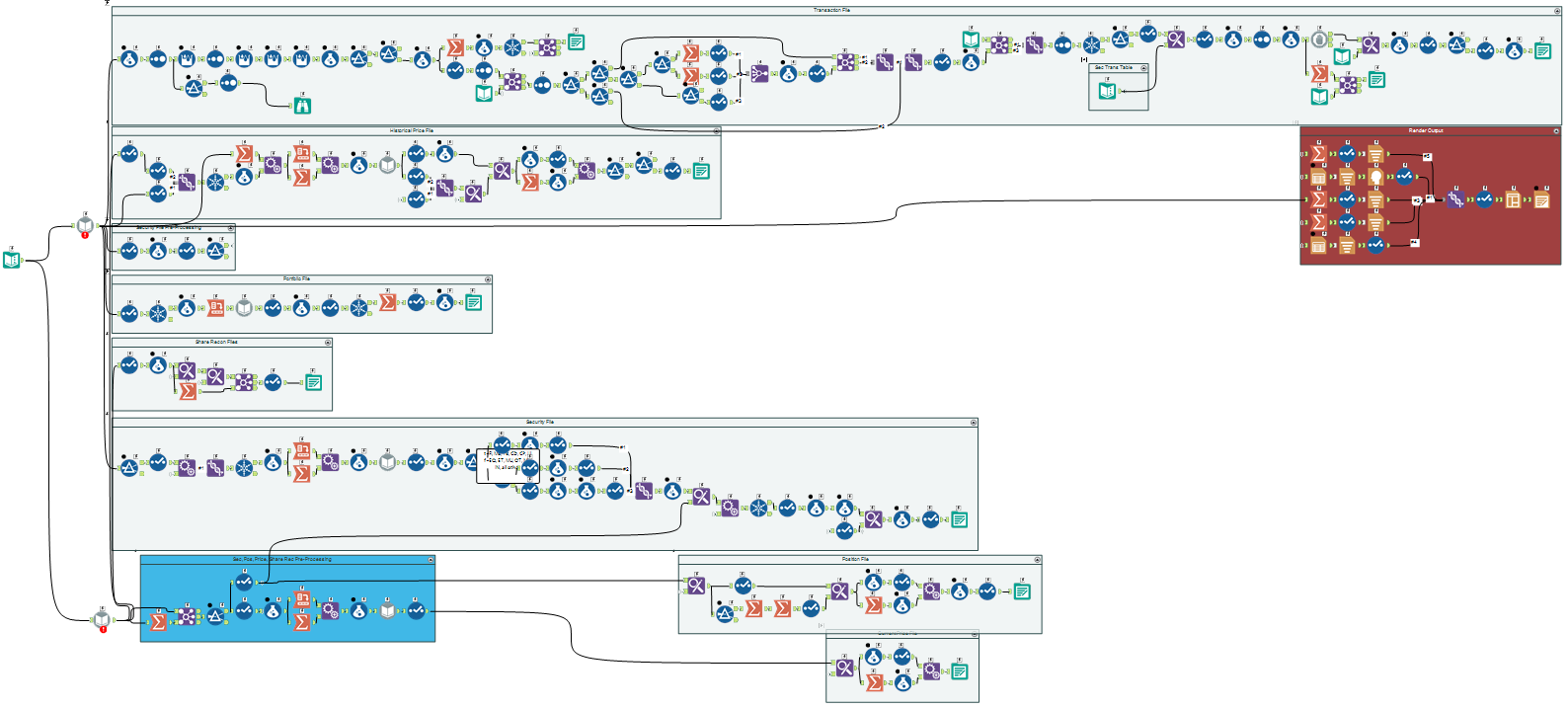

Our original source file was a customer-level report in which each record was one item (product, fee, tax, etc.) on a customer’s bill, for every customer in a given location. As with any data project, our first task was to cleanse, organize, and append the data to make it uniform.

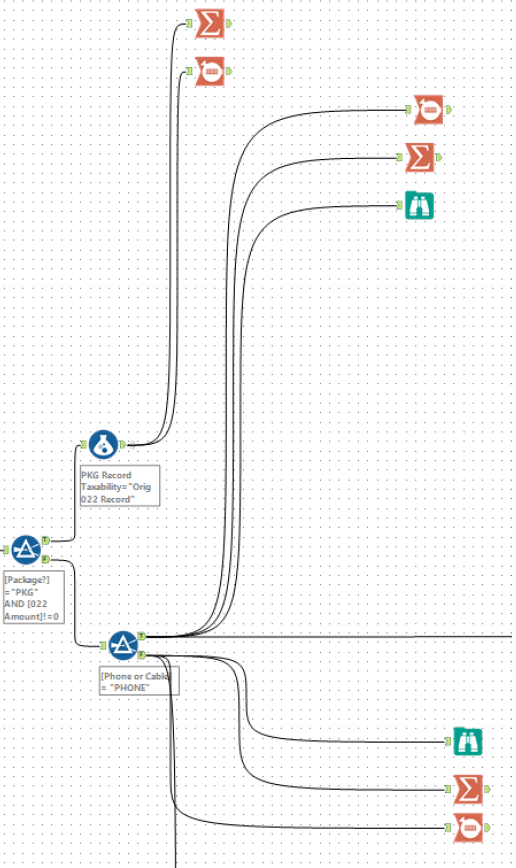

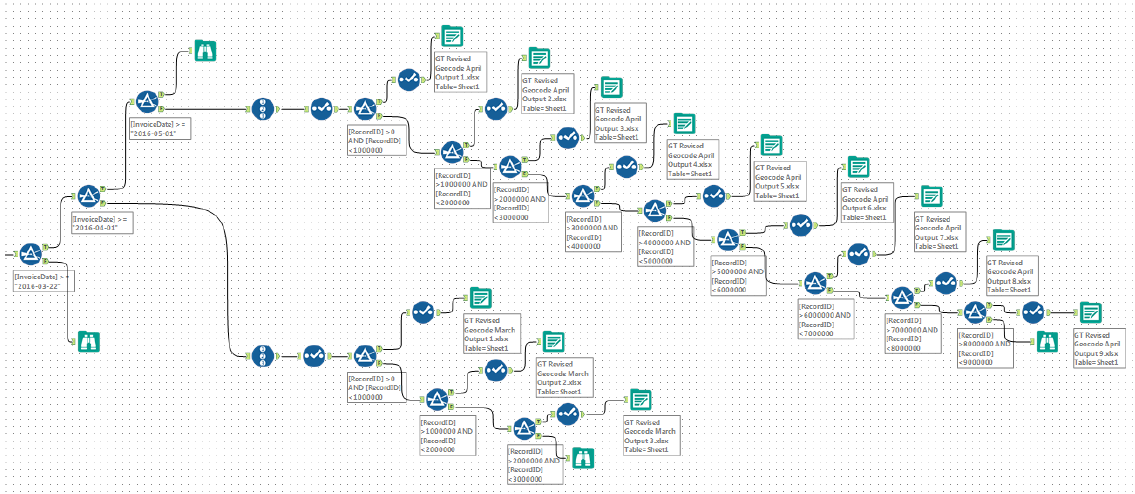

The next step was to add data from several sources that we would ultimately need in order to show the different buckets of customers according to the monetary changes on their bills. These sources were all formatted differently, and there was often no unique identifier we could use to join them to our original report, so we had to create a method to ensure we did not create duplicate records when using the Join tool. We ended up using this process multiple times (pictured below).
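The write-up shows this de-duplication method only as a picture, so the following Python sketch illustrates one common approach rather than the team's exact steps: normalize the join key, collapse exact duplicates on the lookup side, and set aside keys that still match more than one distinct value. The field names (`account`, `region`) and sample rows are hypothetical.

```python
from collections import defaultdict


def safe_left_join(left, right, key_fields, value_fields):
    """Left-join value_fields from `right` onto `left` without multiplying left rows.

    Keys are normalized (trimmed, upper-cased) and exact duplicate lookup rows are
    collapsed; keys that still map to more than one distinct value set are reported
    as conflicts instead of being joined.
    """
    def make_key(row):
        return tuple(str(row[f]).strip().upper() for f in key_fields)

    candidates = defaultdict(set)
    for row in right:
        candidates[make_key(row)].add(tuple(row[f] for f in value_fields))

    conflicts = {key for key, values in candidates.items() if len(values) > 1}
    joined = []
    for row in left:
        key = make_key(row)
        out = dict(row)  # one output row per input row, so the row count never grows
        if key in candidates and key not in conflicts:
            (values,) = candidates[key]  # exactly one distinct value set remains
            out.update(zip(value_fields, values))
        joined.append(out)
    return joined, conflicts


bill_items = [{"account": "a1 ", "item": "Video"}, {"account": "A2", "item": "Voice"},
              {"account": "A3", "item": "Fee"}]
regions = [{"account": "A1", "region": "East"}, {"account": "A1", "region": "East"},
           {"account": "A2", "region": "West"}, {"account": "A2", "region": "North"}]
joined, conflicts = safe_left_join(bill_items, regions, ["account"], ["region"])
print(len(joined))  # 3
print(conflicts)    # {('A2',)}
```

Reporting conflicts separately, instead of picking one value, leaves the judgment about ambiguous matches to a person.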

The rest of the workflow followed from there. We added tax descriptions, new codes, and other information, along with calculated fields to determine the amount of tax each customer should owe today based on our current coding methods.

After we had layered in all the extra data needed to create our buckets, we distinguished between the two lines of business and added the logic to determine which codes are currently taxable.

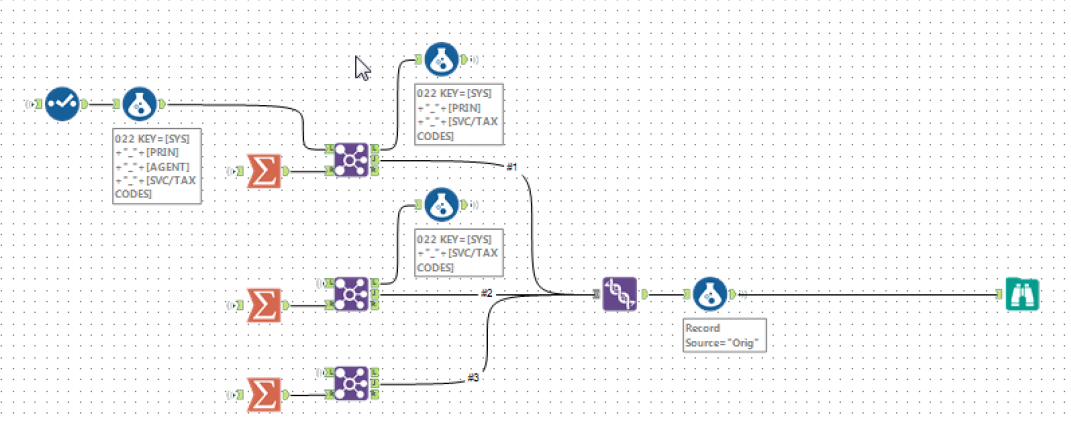

For the side of our business whose taxability is determined by software, you will notice that the logic is relatively simple. We added our tax codes using the same joining method as above and then used a single join to a table that lists the taxable codes.

For the side of our business whose taxability is determined by our home-grown method, you can see below that the logic is more complicated. Currently, the tax codes for this line of business are listed in a way that requires us to parse a field and stack the resulting records in order to isolate individual codes. Once we have done this, we can apply the taxability portion. We then use the result as a lookup against the original records to determine whether a record's code column contains a tax code that has been marked as taxable. In other words, applying our home-grown taxability logic is complicated, time-consuming, and leaves much room for error.
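A minimal Python sketch of the home-grown path, assuming (hypothetically) that each record has a `record_id` and a semicolon-delimited `tax_codes` field: split the field, stack one row per code, check each code against the taxable-code table, and carry the result back to the original record. The software-driven path reduces to a single membership test of one code against the same kind of table.

```python
def stack_codes(records, code_field="tax_codes", sep=";"):
    """Split each record's multi-code field into one (record_id, code) row per code."""
    stacked = []
    for record in records:
        for code in str(record[code_field]).split(sep):
            code = code.strip()
            if code:  # skip empty pieces such as a blank field or trailing delimiter
                stacked.append((record["record_id"], code))
    return stacked


def flag_taxable(records, taxable_codes, code_field="tax_codes", sep=";"):
    """Mark a record taxable if any of its parsed codes appears in the taxable-code table."""
    taxable_ids = {
        record_id
        for record_id, code in stack_codes(records, code_field, sep)
        if code in taxable_codes
    }
    return [{**record, "taxable": record["record_id"] in taxable_ids} for record in records]


records = [
    {"record_id": 1, "tax_codes": "A10; B20"},
    {"record_id": 2, "tax_codes": "C30"},
    {"record_id": 3, "tax_codes": ""},
]
print(stack_codes(records))                                   # [(1, 'A10'), (1, 'B20'), (2, 'C30')]
print([r["taxable"] for r in flag_taxable(records, {"B20"})])  # [True, False, False]
```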

Once we stacked all this data back together, we joined it with the new tax code table. This gives us the new codes, so that the software can be used for both lines of business. Once we know these new codes, we can simulate the software's process and determine which of the new codes will be taxable.
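The remapping step amounts to a lookup from old code to new code followed by the same kind of taxability test. In this Python sketch the mapping table, code values, and field names are hypothetical, and records with no mapping are returned separately rather than silently dropped, which is an added assumption.

```python
def remap_and_simulate(records, code_map, new_taxable_codes):
    """Attach each record's new tax code and predict whether it will be taxable.

    Records whose current code has no entry in code_map are returned separately.
    """
    mapped, unmapped = [], []
    for record in records:
        new_code = code_map.get(record["tax_code"])
        if new_code is None:
            unmapped.append(record)
            continue
        mapped.append({**record, "new_code": new_code, "new_taxable": new_code in new_taxable_codes})
    return mapped, unmapped


records = [{"record_id": 1, "tax_code": "A10"}, {"record_id": 2, "tax_code": "ZZ9"}]
mapped, unmapped = remap_and_simulate(records, {"A10": "N100"}, {"N100"})
print(mapped)    # [{'record_id': 1, 'tax_code': 'A10', 'new_code': 'N100', 'new_taxable': True}]
print(unmapped)  # [{'record_id': 2, 'tax_code': 'ZZ9'}]
```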

Knowing whether or not codes are taxable helps us hypothesize about how problematic a geographic location may end up being for our team, but it does not tell us the dollar amount of taxes that will be changing. To know this we must output files that will be run through the real software.

Hence, once we have completed the above data manipulation, cleansing, and organization, we extract the data that we want to have run through the software and reformat the records to match the necessary format for the software recognition.

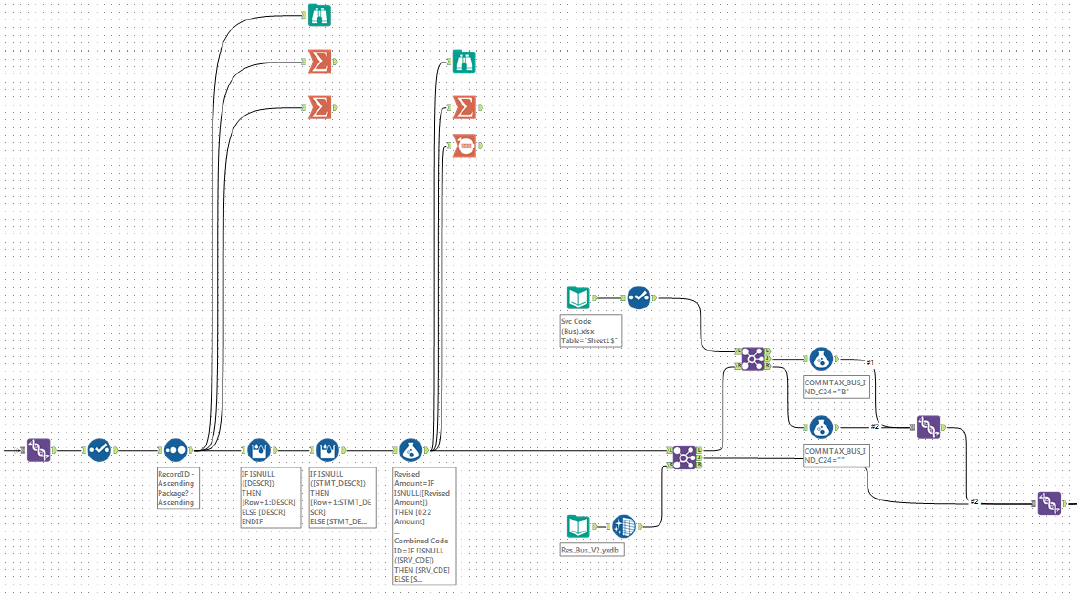

We created the two macros above to reformat the columns and simplify this extensive workflow. Pictured below is the top macro. The two differ only in the first Select tool, where we specified different fields to be output.
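Because the two macros differ only in which fields the first Select tool keeps, the same idea can be expressed as one routine parameterized by a field list. This Python sketch assumes a CSV layout and hypothetical column names; the tax software's actual input format is not described in the write-up.

```python
import csv
import io


def reformat_for_export(records, field_spec):
    """Select, rename, and order fields for an export layout.

    field_spec is an ordered list of (source_field, output_column) pairs; passing a
    different spec plays the role of the second macro's different Select tool.
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([column for _, column in field_spec])
    for record in records:
        writer.writerow([record.get(source, "") for source, _ in field_spec])
    return out.getvalue()


records = [{"acct": "A1", "new_code": "N100", "amount": 12.5, "note": "internal only"}]
spec = [("acct", "ACCOUNT"), ("new_code", "TAX_CODE"), ("amount", "AMOUNT")]
print(reformat_for_export(records, spec), end="")
# ACCOUNT,TAX_CODE,AMOUNT
# A1,N100,12.5
```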

After the reformatting, we output the files and send them to the software team.

When the data is returned to us, we will be able to determine the amount of tax currently being charged to each customer as well as the amount that will be charged once the codes are remapped. The difference between these two amounts will define our buckets of customers, and our Vice President can begin to understand how the code changes will affect our customers’ bills.
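Once current and remapped tax amounts are available per customer, bucketing is a comparison of the two. This Python sketch assumes hypothetical customer IDs, a half-cent tolerance for treating amounts as unchanged, and an "unresolved" bucket for customers missing from the returned data; the team's real bucket definitions are not given.

```python
from collections import Counter


def bucket_customers(current_tax, remapped_tax, tolerance=0.005):
    """Classify each customer by how their tax would change after remapping.

    Both inputs map customer_id -> total tax amount; customers missing from the
    remapped results are marked unresolved rather than treated as no change.
    """
    buckets = {}
    for customer, now in current_tax.items():
        if customer not in remapped_tax:
            buckets[customer] = "unresolved"
            continue
        diff = remapped_tax[customer] - now
        if diff > tolerance:
            buckets[customer] = "increase"
        elif diff < -tolerance:
            buckets[customer] = "decrease"
        else:
            buckets[customer] = "no change"
    return buckets


def bucket_sizes(buckets):
    """Count how many customers fall into each bucket."""
    return Counter(buckets.values())


current = {"C1": 10.00, "C2": 5.00, "C3": 7.25, "C4": 3.00}
remapped = {"C1": 12.40, "C2": 4.10, "C3": 7.25}
buckets = bucket_customers(current, remapped)
print(buckets)  # {'C1': 'increase', 'C2': 'decrease', 'C3': 'no change', 'C4': 'unresolved'}
```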

Describe the benefits you have achieved

Although this project took several weeks to build in Alteryx, it was well worth the time invested, as we will be able to reuse it for other locations. We have gained incredible efficiency in acquiring insight on this standardization project using Alteryx. Another benefit we have seen is the flexibility to make minor changes to our workflow, which has helped us easily customize it for different locations. Alteryx's various tools have made it possible for the Tax Business Intelligence team to help the Tax Department accomplish large data discovery projects such as this.

Further, we have begun creating an Alteryx app that can be run by anyone in our Tax Department. This frees up the Tax Business Intelligence team to work on other important projects that are high priority.

A common theme among Alteryx users is that Alteryx workflows save companies large amounts of time in data manipulation and organization. Moreover, Alteryx has made it possible to handle large and complex data, which is impossible in Excel, in a very user-friendly environment. Alteryx will continue to be a very valuable tool that the Tax Business Intelligence team will use to help transform the Tax Department into a more efficient, more powerful, and more unified organization in the coming years.

How much time has your organization saved by using Alteryx workflows?

We could never have done this data discovery project without using Alteryx. It was impossible to create any process within Excel given the quantity and complexity of the data.

In other projects, we are able to replicate Excel reconciliation processes that are run annually, quarterly, and monthly in Alteryx. The Alteryx workflows have saved our Tax Department weeks of manual Excel pivot table work. Time savings on individual projects can range from a few hours to several weeks.

What has this time savings allowed you to do?

The time savings has been invaluable. The Tax Department staff are now able to free themselves of the repetitive tasks in Excel, obtain more accurate results and spend time doing analysis and understanding the results of the data. The “smarter” time spent to do analyses will help transform the Tax Department with greater opportunities to further add value to the company.

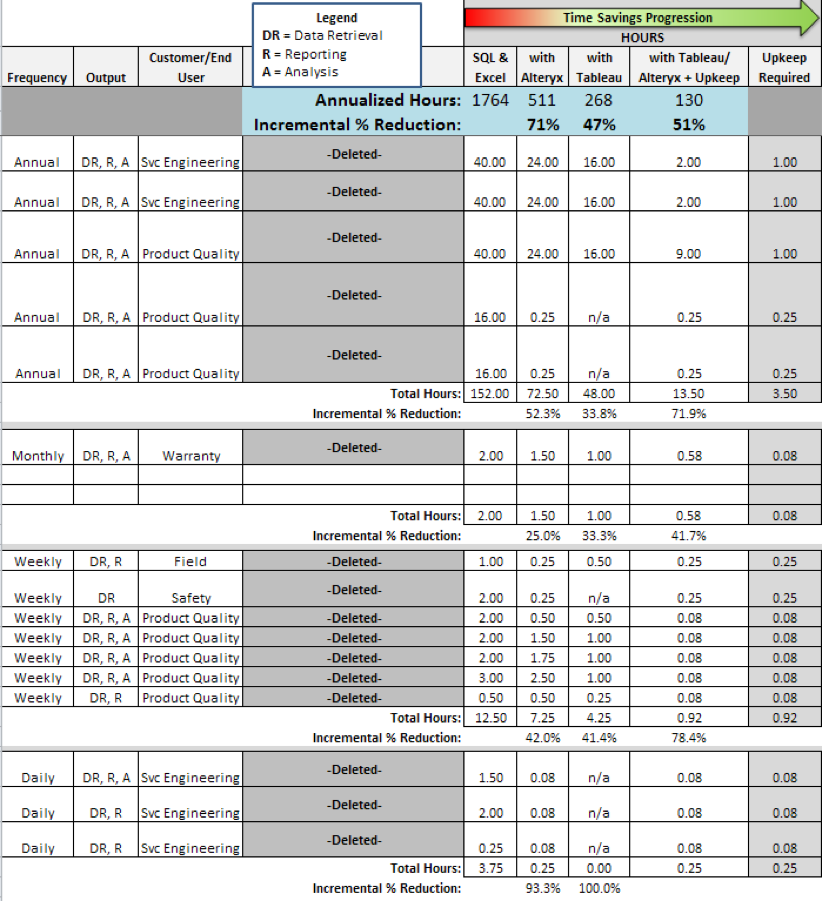

Author: Jack Morgan (@jack_morgan), Project Management & Business Intelligence

Awards Category: Most Time Saved

After adding up the time savings for our largest projects, we came up with an annual savings of 7,736 hours - yeah, per year! In that time, you could run 1,700 marathons, fill 309,000 gas tanks or watch 3,868 movies!! Whaaaaaaaaaaaaat! With that time, we have not done any of the things listed above. Instead, we've leveraged it to take advantage of our otherwise unrealized potential for more diverse projects and to support departments in need of more efficiency. Other users who were previously responsible for running these processes now work on optimizing other items that are long overdue and add value in other places by acting as project managers for other requests.

Describe the problem you needed to solve

As the old saying goes, "Time is of the essence," and there are no exceptions here! More holistically, we brought Alteryx into our group to better navigate disparate data and to build one-time workflows that create sustainable processes with a heightened level of accuracy. In a constraint-driven environment, my team is continuously looking for ways to do things better. Whether that means working faster, more accurately, or with less oversight is up to our team. The bottom line is that Alteryx provides speed, accuracy, and agility that we never thought would be possible. Cost, including the most expensive resource of all, human time, has been a massive driver for us throughout our Alteryx journey, and I expect these drivers to continue as time passes.

Describe the working solution

Our processes vary from workflow to workflow; overall, however, we use a lot of SQL, Oracle, Teradata, and SharePoint. In some workflows we blend two sources; in others we blend all of them, depending on the needs of the business we are working with on any given day. Once the blending is done, we do a variety of things with the data: sometimes it goes to apps for self-service consumption, and other times we push it into a data warehouse. However, one thing that is consistent in our process is final data visualization in Tableau! Today, upwards of 95% of our workflows end up in Tableau, allowing us to empower our users with self-service and analytics reporting. When using databases like SQL and Oracle, we see MASSIVE gains from the In-Database tools. Giving our Alteryx users such a strong no-code solution creates an advantage for us in the customer service and analytics space: they already understand the data, and now they have a means to get to it.
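The gain from in-database processing comes from pushing work such as aggregation to the database so that only the results travel to the workflow. Alteryx's In-Database tools themselves are not shown here; as a generic illustration, this Python sketch uses the standard-library `sqlite3` module as a stand-in database with a hypothetical `support_tickets` table, and compares aggregating in SQL with pulling every row into memory first.

```python
import sqlite3


def summarize_in_database(conn: sqlite3.Connection) -> list[tuple]:
    """Aggregate inside the database so only one row per region is returned."""
    query = """
        SELECT region, COUNT(*), ROUND(AVG(handle_minutes), 2)
        FROM support_tickets
        GROUP BY region
        ORDER BY region
    """
    return conn.execute(query).fetchall()


def summarize_in_memory(conn: sqlite3.Connection) -> list[tuple]:
    """Same summary, but every raw row is fetched into Python first (more data moved)."""
    minutes_by_region: dict[str, list[float]] = {}
    for region, minutes in conn.execute("SELECT region, handle_minutes FROM support_tickets"):
        minutes_by_region.setdefault(region, []).append(minutes)
    return [
        (region, len(values), round(sum(values) / len(values), 2))
        for region, values in sorted(minutes_by_region.items())
    ]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE support_tickets (region TEXT, handle_minutes REAL)")
conn.executemany(
    "INSERT INTO support_tickets VALUES (?, ?)",
    [("East", 10), ("East", 20), ("West", 30)],
)
print(summarize_in_database(conn))  # [('East', 2, 15.0), ('West', 1, 30.0)]
```

Both functions return the same summary; the difference is how many rows cross from the database to the client, which is what grows painful with large tables.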

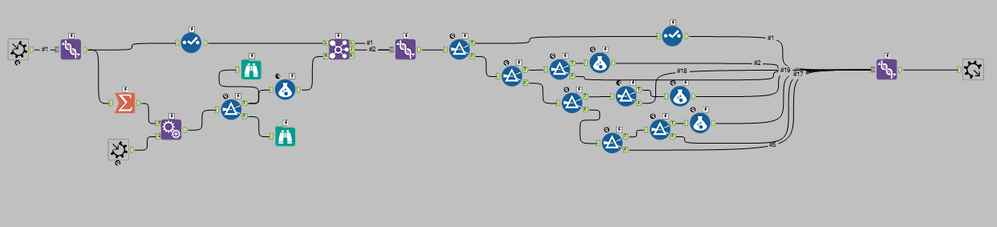

Audit Automation:

Billing:

File Generator:

Market Generator:

Parse:

Describe the benefits you have achieved

The 7,736 hours mentioned above is the cumulative total of seven different processes that we rely on regularly.

- One prior process took about 9 days/month to run - we've dropped that to 30s/month!

- Another process required 4 days/quarter that our team was able to cut to 3 min/quarter.

- The third and largest workflow would have taken an estimated 5,200 hours to complete, and our team took 10.4 hours to do the same work!

- The next project was a massive one: we needed to create a tool to parse XML data into a standardized Excel format. This process once took 40 hrs/month (non-standard PDF to Excel), and we can now run it in less than 5s/month!

- Less impressive, but still a great deal of time, was when our systems and QA team contracted us to rebuild their daily reporting for Production Support Metrics. This process took them about 10 hours/month, and we got it down to less than 15 sec/day.

- One of our internal QA teams asked us to help speed up the pre-work for their weekly audit process. We automated a process that took them upwards of 65 hours/month; it now takes us 10 sec/week!

- The last of the seven processes mentioned in our write-up above is a survey data process that took a team 2 hours/week. That same process takes our team about 20 sec/week.
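Because the figures above mix units (per day, week, month, quarter, and one-off), putting them on a common basis makes them easier to compare. This Python sketch assumes 52 weekly runs and 12 monthly runs per year; the write-up does not say how working days were converted to hours, so the day-based items are left out and the sketch does not attempt to reproduce the 7,736-hour total.

```python
HOUR = 3600  # seconds


def annual_hours_saved(before_seconds: float, after_seconds: float, runs_per_year: float) -> float:
    """Hours saved per year when one run drops from before_seconds to after_seconds."""
    return (before_seconds - after_seconds) * runs_per_year / HOUR


# Survey processing: 2 hours/week before, about 20 seconds/week after (52 runs assumed).
survey = annual_hours_saved(2 * HOUR, 20, runs_per_year=52)
# XML parsing tool: 40 hours/month before, under 5 seconds/month after (12 runs assumed).
parsing = annual_hours_saved(40 * HOUR, 5, runs_per_year=12)
# One-off workflow: an estimated 5,200 hours versus 10.4 hours; runs_per_year=1 means a single run.
one_off = annual_hours_saved(5200 * HOUR, 10.4 * HOUR, runs_per_year=1)
print(round(survey, 1), round(parsing, 1), round(one_off, 1))  # 103.7 480.0 5189.6
```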

We hope you've found our write-up compelling and win-worthy!

Author: Jennifer Jensen, Sr. Analyst and team members Inna Meerovich, RJ Summers

Company: mcgarrybowen

mcgarrybowen is a creative advertising agency that is in the transformation business. From the beginning, mcgarrybowen was built differently, on the simple premise that clients deserve better. So we built a company committed to delivering just that. A company that believes, with every fiber of its being, that it exists to serve clients, build brands, and grow businesses.

Awards Category: Best Business ROI

Describe the problem you needed to solve

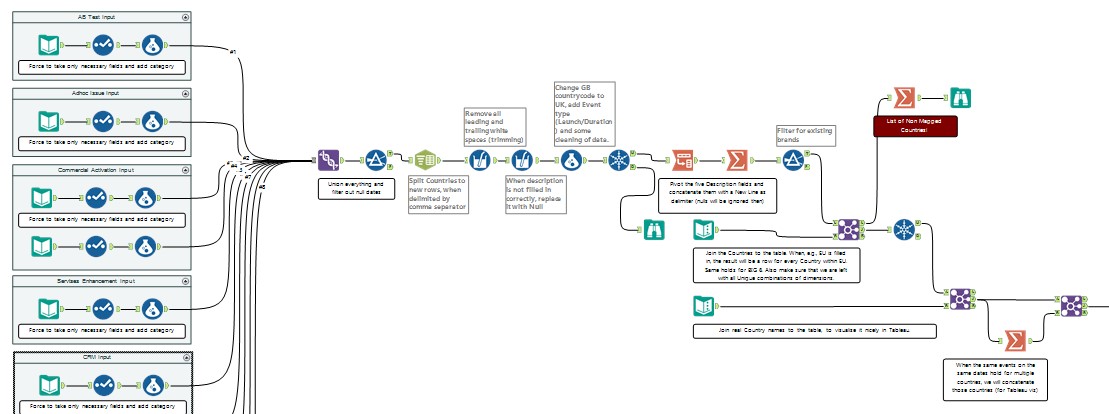

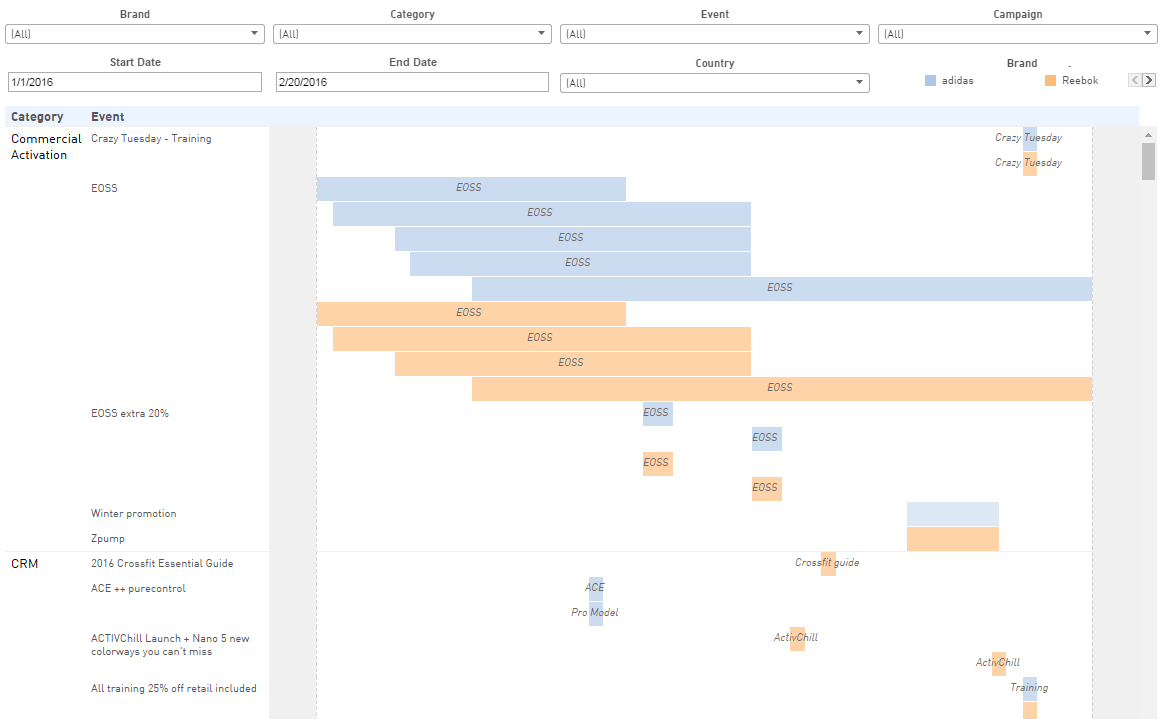

mcgarrybowen creates hundreds of pieces of social creative per year for Fortune 500 CPG and Healthcare brands on platforms including Facebook and Twitter. The social media landscape is constantly evolving, especially with the introduction of video, a governing mobile-first mindset, and interactive ad units like carousels, yet the capabilities for measuring performance on the platforms have not kept pace.

Our clients constantly want to know: Which creative is the most effective, drives the highest engagement rates, and delivers most efficiently? What time of day and day of week are best for posting content? What copy and creative work best? What have you learned on other brands you manage?

But therein lies the challenge. Answers to these questions aren’t readily available in the platforms, which export post-level data in raw spreadsheets with many tabs of information, and both Facebook and Twitter can only export 90 days of data at a time. So, to look at client performance over longer periods of time and against their respective categories, and to derive performance insights that drive cyclical improvements in creative, we turned to Alteryx.
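Working around the 90-day export limit starts with splitting the reporting period into consecutive windows that can each be exported and later blended. A minimal Python sketch, assuming the limit counts both the start and end dates; the platforms' exact rules may differ.

```python
from datetime import date, timedelta


def export_windows(start: date, end: date, max_days: int = 90) -> list[tuple[date, date]]:
    """Split [start, end] (inclusive) into consecutive windows of at most max_days days."""
    windows = []
    cursor = start
    while cursor <= end:
        window_end = min(cursor + timedelta(days=max_days - 1), end)
        windows.append((cursor, window_end))
        cursor = window_end + timedelta(days=1)
    return windows


windows = export_windows(date(2015, 1, 1), date(2015, 12, 31))
print(len(windows))  # 5
print(windows[0])    # (datetime.date(2015, 1, 1), datetime.date(2015, 3, 31))
```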

Describe the working solution

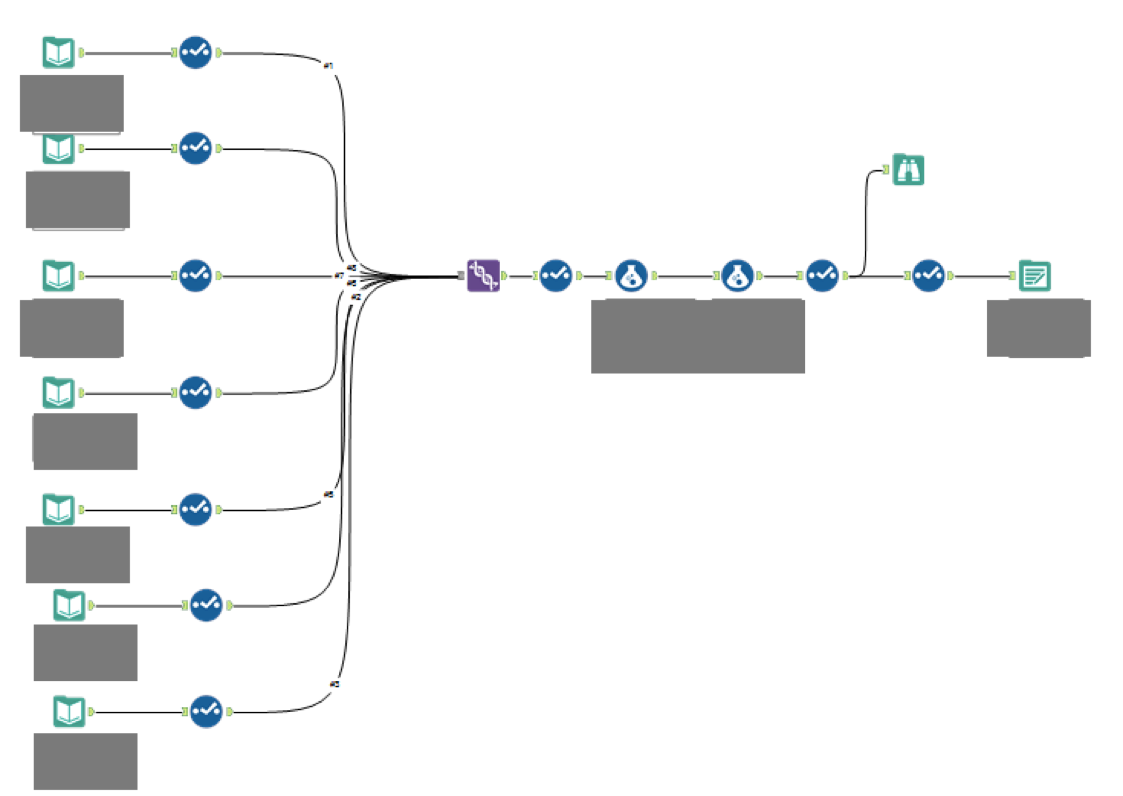

Our Marketing Science team first created Alteryx workflows that blended multiple quarters and spreadsheet tabs of social data for each individual client. The goal was to take many files spanning several years, each containing many tabs of information, and organize them onto one single spreadsheet that could easily be visualized and manipulated in Excel and Tableau for client-level understanding. In Alteryx, it is easy to filter out all of the unnecessary data in order to focus on the KPIs that will help drive the success of the campaigns. We used “Post ID,” each post’s unique identifying number, as the unifier for all of the data coming in from all tabs, so all data associated with a single Facebook post was organized onto a single row. Once all of the inputs were combined, the data could be exported onto a single tab in Excel.
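Using Post ID as the unifier means every tab's rows are folded into one row per post, keeping only the KPI columns. In this Python sketch the tab names and column names are hypothetical stand-ins for the platforms' export headers, and the rule that the first value seen wins when two tabs report the same field is an added assumption.

```python
def merge_tabs_by_post_id(tabs: dict[str, list[dict]], kpis: set[str]) -> list[dict]:
    """Combine rows from several export tabs (or quarterly files) into one row per post.

    Only the KPI fields are kept; if two tabs report the same field for a post,
    the first value seen wins.
    """
    posts: dict[str, dict] = {}
    for rows in tabs.values():
        for row in rows:
            post_id = str(row["Post ID"])
            merged = posts.setdefault(post_id, {"Post ID": post_id})
            for field, value in row.items():
                if field in kpis and field not in merged:
                    merged[field] = value
    return [posts[post_id] for post_id in sorted(posts)]


tabs = {
    "Key Metrics": [{"Post ID": "111", "Reach": 500, "Type": "Photo"}],
    "Engagement": [{"Post ID": "111", "Engaged Users": 40}, {"Post ID": "222", "Engaged Users": 7}],
}
rows = merge_tabs_by_post_id(tabs, kpis={"Reach", "Engaged Users"})
print(rows)
# [{'Post ID': '111', 'Reach': 500, 'Engaged Users': 40}, {'Post ID': '222', 'Engaged Users': 7}]
```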

After each client’s data was cleansed and placed into a single Excel file, another workflow was made that combined every client’s individual data export into a master file that contained all data for all brands. From this, we can easily track performance over time, create client and vertical-specific benchmarks, and report on data efficiently and effectively.
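Combining per-client files into a master table and deriving vertical benchmarks can be sketched as two small steps. This Python sketch assumes hypothetical `engagements` and `impressions` columns, a hypothetical client-to-vertical mapping, and engagement rate defined as engagements divided by impressions with the median as the benchmark; the team's actual KPI definitions are not stated.

```python
from statistics import median


def build_master(client_rows: dict[str, list[dict]], client_vertical: dict[str, str]) -> list[dict]:
    """Stack each client's cleansed rows into one table, tagging client and vertical."""
    master = []
    for client, rows in client_rows.items():
        for row in rows:
            master.append({**row, "client": client, "vertical": client_vertical[client]})
    return master


def vertical_benchmarks(master: list[dict]) -> dict[str, float]:
    """Median engagement rate per vertical across all brands in the master table."""
    rates: dict[str, list[float]] = {}
    for row in master:
        if row["impressions"] > 0:  # skip rows that would divide by zero
            rates.setdefault(row["vertical"], []).append(row["engagements"] / row["impressions"])
    return {vertical: median(values) for vertical, values in sorted(rates.items())}


client_rows = {
    "BrandA": [{"engagements": 50, "impressions": 1000}, {"engagements": 30, "impressions": 1000}],
    "BrandB": [{"engagements": 20, "impressions": 500}],
    "BrandC": [{"engagements": 10, "impressions": 1000}],
}
master = build_master(client_rows, {"BrandA": "CPG", "BrandB": "CPG", "BrandC": "Healthcare"})
print(vertical_benchmarks(master))  # {'CPG': 0.04, 'Healthcare': 0.01}
```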

Single Client Workflow

Multi-Client Workflow

Describe the benefits you have achieved

Without Alteryx, it would take countless hours to manually work with the social data in 90-day increments and manipulate it within Excel to mimic what the Alteryx workflow export does in seconds. With all of the saved time, we are able to spend more time on the analysis of these social campaigns. Since we are able to put more time into thoughtful analysis, client satisfaction with deeper learnings has grown exponentially. Not only do we report on past performance, but we can also look toward the future and use more real-time information to better analyze and optimize.

- 2016 Entries
- Best Business ROI
- Business Intelligence
- Media

Author: Kiran Ramakrishnan

Awards Category: Most Time Saved

Through automating processes we received a lot of management attention and a desire to create more automated and on-demand analysis, dashboards and reports.

Another area where we have benefited significantly is training and process consistency. We are no longer reliant on training new staff on the systems and processes, nor are we critically affected by the sudden departure of a team member.

Describe the problem you needed to solve

We are a semiconductor company located in Silicon Valley. We have been in business for more than 30 years, with 45 locations globally and about 5,000 employees. We are in business to solve our customers' challenges. We are a leader in driving innovation, particularly in microcontrollers. The company focuses on the embedded processing, security, wireless, and touch technology markets. In automotive, we provide solutions beyond touch, such as remote keyless entry and networking. Our emphasis is on IoT applications: we see potential in the Internet of Things market for combining our products, especially MCUs, security, and wireless technologies.

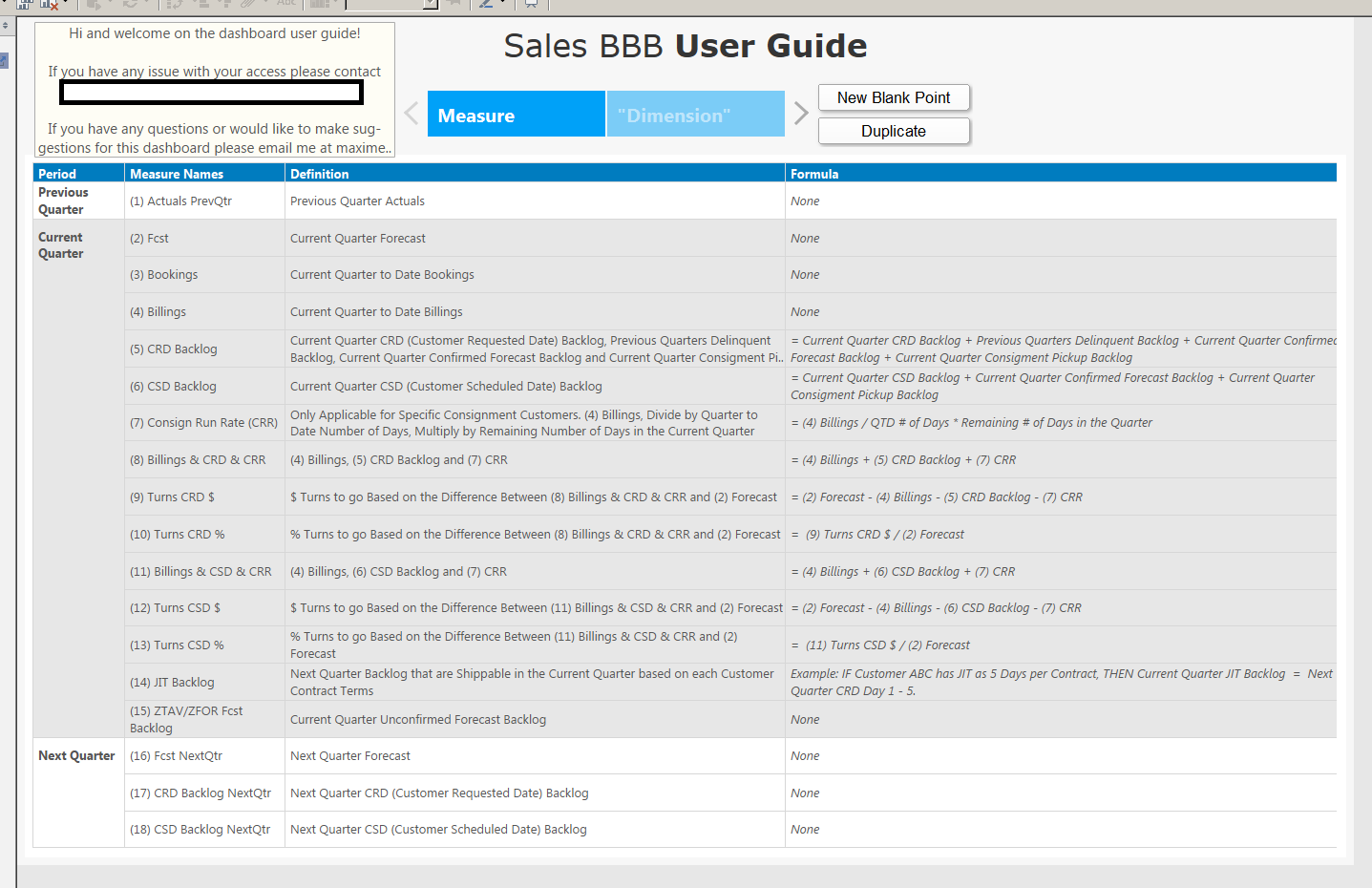

In this industry, planning is essential as the market is very dynamic and volatile but manufacturing cycles are long. Most electronic applications have comparatively short product life cycles and sharp production ramp cycles. Ignoring these ramps could result in over/under capacity. For a semiconductor company it is key to clearly understand these dynamics and take appropriate actions within an acceptable time.

To forecast and make appropriate predictions, organizations need critical information such as actual forecast, billing, backlog, and bookings. Based on this information, Sales, BUs, and Finance are able to build models. Because End of Life (EOL) parts convert immediately into revenue, we need to treat them separately. Semiconductor sales are typically commission-based, and sales commissions are calculated by product category and type. Therefore, each line item needs to be matched to a salesperson by product life cycle. In public companies this is done quarterly, and regular updates increase an organization's confidence in achieving its goals. As electronics companies demand ever-stricter security for data access, the consolidated dataset needs to be protected to ensure compliance with customer agreements. Large organizations also require data security to ensure data is only accessible on a need-to-know basis.

Historically, people from these different groups manually created, cleansed, and merged data from various files and sources to gain insight. It is common to use different environments such as Oracle DBs, SAP, ModelN, SharePoint, Salesforce, Excel, and Access. This is extremely time-consuming and requires a huge manual effort. Data consistency between sources is usually not guaranteed and requires additional cleansing and manipulation. Because every person or group has their own way of gathering and consolidating this information, results and layouts typically differ, and it is hard for someone outside the group to understand another person's approach. These reports are needed regularly and must be compiled weekly or daily, depending on their refresh frequency. We also wanted to be able to update dashboards on demand without depending on specific people; the current process makes reporting heavily reliant on manual effort.

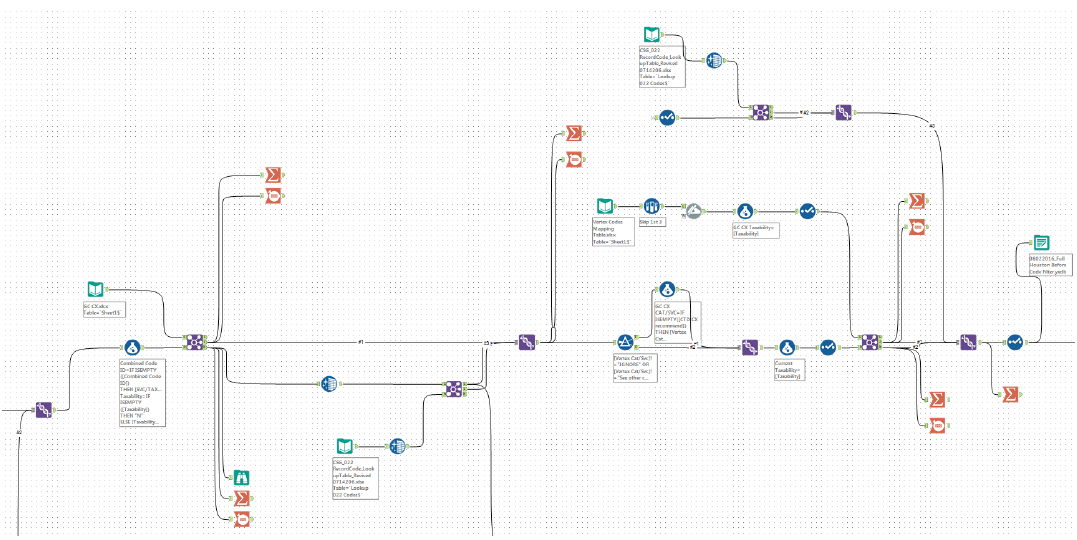

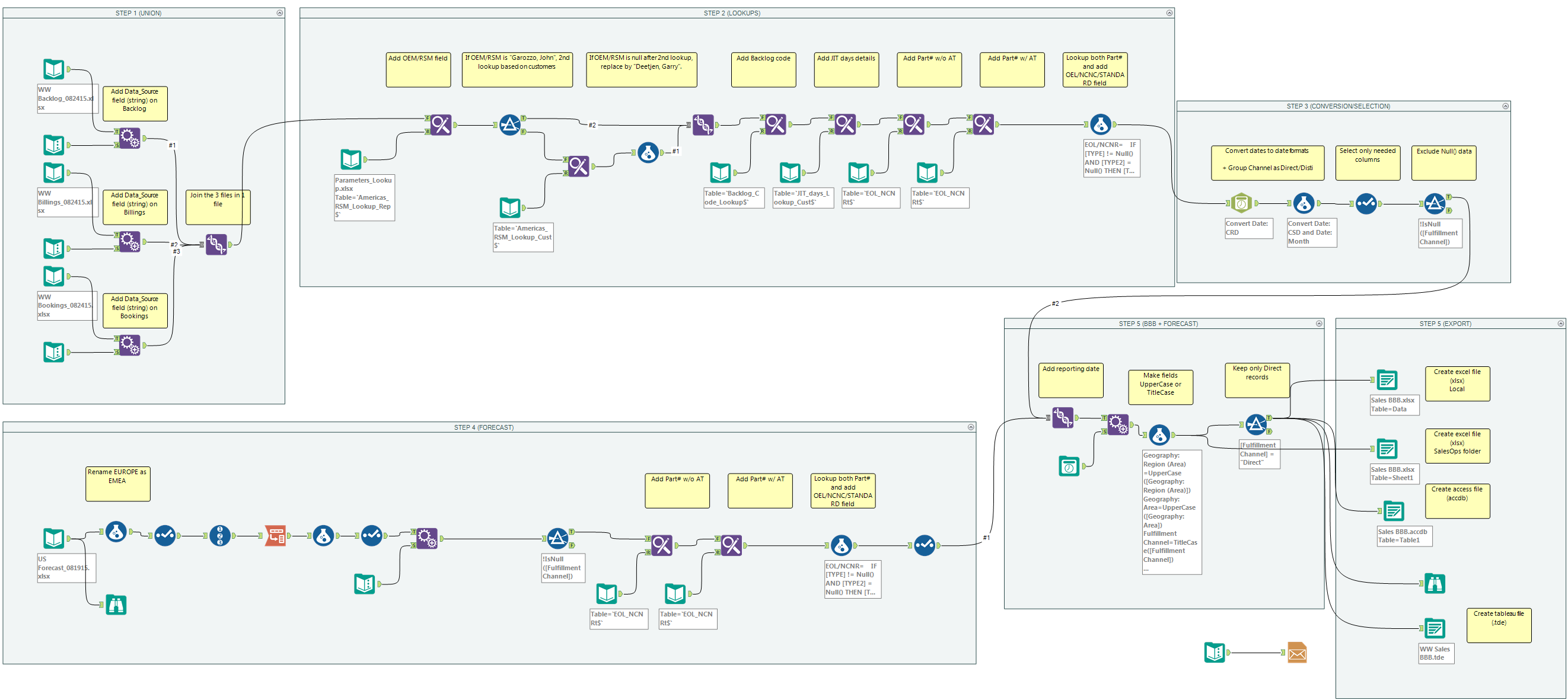

Describe the working solution

In Alteryx we found the solution to our problem. Alteryx was used to join data sources in different formats and environments, gathered from departments including Sales, Finance, Operations/Supply Chain, and Human Resources.

- The Sales department provides the Forecast in an Excel worksheet. Because the worksheet is accessed and edited by more than 500 individuals, data inconsistency between fields (such as the time dimension) is an ongoing issue, and the data architecture needs to be reorganized and consolidated.

- The Finance department provides Billings in Oracle Hyperion format, and there are data inconsistencies between Billings and Backlog & Bookings due to system differences. Billings need to be merged with Backlog & Bookings so that EOL parts can be identified for commissions and forecasting.

- The Operations/Supply Chain department provides Backlog & Bookings through SAP, which also has data inconsistencies with Billings due to system differences. Backlog & Bookings need to be merged with Billings so that EOL parts can be identified for commissions and forecasting.

- The HR department provides the Organization Hierarchy through SAP HANA, which is used to apply row-level security to the dashboards later on.
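The merge described above (Billings from Hyperion joined to Backlog & Bookings from SAP, then EOL parts flagged) can be sketched in plain Python. This is a minimal sketch, not the team's Alteryx workflow: the field names, the `norm_part` cleanup rule, and the sample rows are all hypothetical, and a full outer join on part number and quarter is assumed to be the right grain.

```python
# Hypothetical record layouts: the real Hyperion (Billings) and SAP
# (Backlog & Bookings) extracts will have different fields.

def norm_part(part):
    """Normalize a part number so both systems share one join key (assumed rule)."""
    return part.strip().upper()


def merge_billings_backlog(billings, backlog_bookings):
    """Full outer join on (part, quarter); flag End of Life (EOL) parts."""
    merged = {}

    def record(row):
        key = (norm_part(row["part"]), row["quarter"])
        return merged.setdefault(
            key, {"billed": 0.0, "backlog": 0.0, "bookings": 0.0, "lifecycle": None}
        )

    for row in billings:
        record(row)["billed"] += row["billed"]
    for row in backlog_bookings:
        rec = record(row)
        rec["backlog"] += row["backlog"]
        rec["bookings"] += row["bookings"]
        rec["lifecycle"] = row["lifecycle"]

    return [
        {"part": part, "quarter": quarter, **rec, "is_eol": rec["lifecycle"] == "EOL"}
        for (part, quarter), rec in sorted(merged.items())
    ]


billings = [
    {"part": " mcu-100", "quarter": "2016Q1", "billed": 1200.0},
    {"part": "SEC-7", "quarter": "2016Q1", "billed": 300.0},
]
backlog_bookings = [
    {"part": "MCU-100", "quarter": "2016Q1", "backlog": 500.0, "bookings": 800.0, "lifecycle": "Active"},
    {"part": "sec-7", "quarter": "2016Q1", "backlog": 0.0, "bookings": 50.0, "lifecycle": "EOL"},
]

for row in merge_billings_backlog(billings, backlog_bookings):
    print(row)
```

Parts that appear in only one system keep `lifecycle` as `None`, which makes unmatched records easy to route to a cleansing step rather than silently dropping them.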

To resolve these issues, all relevant data is structured according to the overall data architecture defined in Alteryx. First, Alteryx pulls the relevant data from the various sources and stores it in a shared drive/folder. Then, Alteryx runs its algorithms based on our definitions. A special script was developed to publish the dashboard and trigger a daily refresh with the latest data. Finally, once the refreshed data is published, an email notification with a hyperlink is sent to all users (more than 500).

Describe the benefits you have achieved

Prior to the Alteryx implementation, a lot of time was spent downloading, storing, and consolidating files, which resulted in many unexpected errors that were hard to identify. Because of these human errors, the accuracy of, and confidence in, the manually created dashboards were not very high. Very often, the dashboards required so much preparation that they were already outdated by the time they were published.

With the Alteryx approach, we have eliminated manual intervention and reduced the effort to prepare, publish, and distribute the reports to less than 1% of what the previous approach required. In addition, this streamlined approach has stimulated collaboration on a global basis.

Departments such as IT, Finance, and Sales are able to work much more closely together, as they see results within a very short period of time.

Another advantage of this solution is that it is now used broadly throughout the organization, from the CEO to analysts, according to the defined security model.
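The security model relies on the Organization Hierarchy from SAP HANA. One way to express a need-to-know filter is to let each user see only rows owned by themselves or by anyone below them in the hierarchy. The sketch below assumes each row carries a hypothetical `owner` employee ID and that the hierarchy is available as an employee-to-manager mapping; it illustrates the idea and is not the company's actual row-level security configuration.

```python
def visible_employees(user, manager_of):
    """Return `user` plus everyone below them (direct or indirect reports)."""
    reports = {}
    for employee, manager in manager_of.items():
        reports.setdefault(manager, []).append(employee)
    allowed, stack = {user}, [user]
    while stack:  # depth-first walk down the hierarchy
        for report in reports.get(stack.pop(), []):
            if report not in allowed:
                allowed.add(report)
                stack.append(report)
    return allowed


def filter_rows(rows, user, manager_of):
    """Keep only the rows whose hypothetical `owner` the user is entitled to see."""
    allowed = visible_employees(user, manager_of)
    return [row for row in rows if row["owner"] in allowed]


# Hypothetical hierarchy: employee -> manager (None for the CEO).
manager_of = {"ceo": None, "vp_sales": "ceo", "rep_a": "vp_sales", "rep_b": "vp_sales", "analyst": "ceo"}
rows = [
    {"owner": "rep_a", "amount": 10},
    {"owner": "rep_b", "amount": 20},
    {"owner": "analyst", "amount": 5},
]
print(filter_rows(rows, "vp_sales", manager_of))
```

The CEO sees every row because every employee is an indirect report, while an individual salesperson sees only their own lines.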

It took us one week to create and develop the workflow. The biggest challenge we faced was determining the individual steps and the person responsible for each, as various resources and departments had to contribute.

With the Alteryx workflow, we save more than 15 hours per week in data merging alone, and we are now able to publish the reports and analyses daily. Overall, Alteryx now saves various departments more than 75 hours in running the process end to end on a daily basis.

What has this time savings allowed you to do?

Automating the process drew a lot of management attention and created a desire for more automated, on-demand dashboards and reports.

Another area where we have benefited significantly is training and process consistency. We are no longer reliant on training new staff on the systems and process, nor critically affected by the sudden departure of a team member.

- 2016 Entries

- Manufacturing

- Most Time Saved

- Sales

Author: Omid Madadi, Developer

Company: Southwest Airlines Co.

Awards Category: Best Business ROI

Describe the problem you needed to solve

Fuel consumption expense is a major challenge for the airline industry. According to the International Air Transport Association, fuel represented 27% of the total operating costs for major airlines in 2015. For this reason, most airlines attempt to improve their operational efficiency in order to stay competitive and increase revenue. One way to improve operational efficiency is to increase the accuracy of fuel consumption forecasting.

Currently, Southwest Airlines serves 97 destinations with an average of 3,500 flights a day. Not having enough fuel at an airport is extremely costly and may disrupt flights. Conversely, ordering more fuel than an airport needs results in high inventory and storage costs. The objective of this project was therefore to develop proper forecasting models and methods for each of these 97 airports, in order to increase the accuracy and speed of fuel consumption forecasting using historical monthly consumption data.

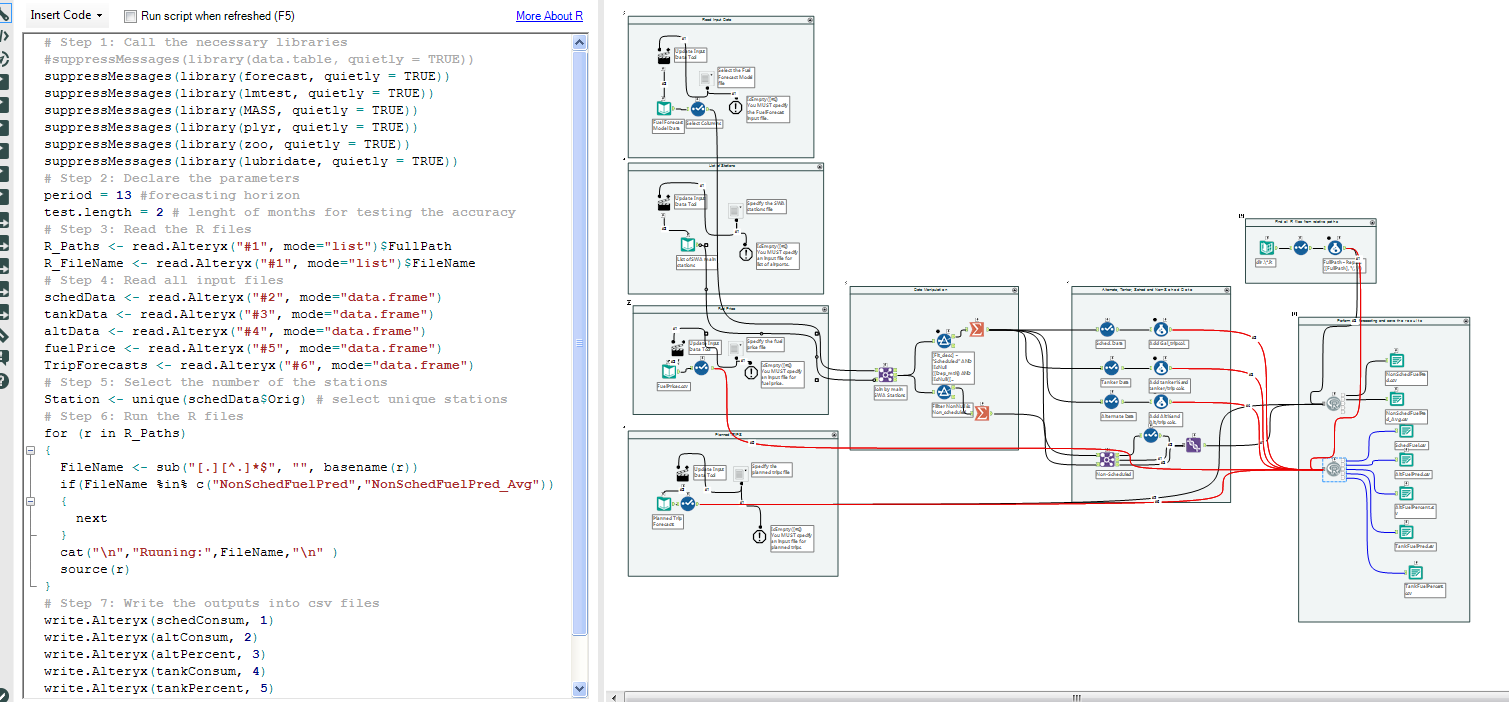

Describe the working solution

The data used in this project came from historical Southwest Airlines monthly fuel consumption reports. Datasets were gathered from each of the 97 airports as well as from various Southwest departments, such as finance and network planning. Forecasting was performed on four categories: scheduled flights consumption, non-scheduled flights consumption, alternate fuel, and tankering fuel. The total consumption for each airport was then obtained by aggregating these four categories. Since the data were monthly, time series forecasting and statistical models, such as autoregressive integrated moving average (ARIMA), time series linear and non-linear regression, and exponential smoothing, were used to predict future consumption based on previously observed consumption. To select the best forecasting model, an algorithm was developed that compares the accuracy of the various statistical models and selects the best-fitting model for each category at each airport. Ultimately, this model will be used every month by the Southwest Airlines Fuel Department.
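The per-category, per-airport model selection can be illustrated with a small holdout comparison. The project itself ran ARIMA, regression, and exponential smoothing models in R through the R Tool; to stay self-contained, this hedged sketch swaps in four simple stdlib forecasters (naive, seasonal naive, simple exponential smoothing with an assumed `alpha`, and a least-squares linear trend), scores each on the last six months by mean absolute error, and sums the chosen forecast of each category into an airport total. The model set, holdout length, and error metric are all assumptions.

```python
from statistics import mean


def naive(history, h):
    """Repeat the last observed month."""
    return [history[-1]] * h


def seasonal_naive(history, h, season=12):
    """Repeat the value from the same month one season earlier."""
    return [history[len(history) - season + (i % season)] for i in range(h)]


def simple_exp_smoothing(history, h, alpha=0.3):
    """Flat forecast from an exponentially weighted level (alpha is assumed)."""
    level = history[0]
    for y in history[1:]:
        level = alpha * y + (1 - alpha) * level
    return [level] * h


def linear_trend(history, h):
    """Least-squares straight line through the history, extended h months ahead."""
    n = len(history)
    x_bar, y_bar = (n - 1) / 2, mean(history)
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(history))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return [intercept + slope * (n + i) for i in range(h)]


MODELS = {
    "naive": naive,
    "seasonal_naive": seasonal_naive,
    "ses": simple_exp_smoothing,
    "linear_trend": linear_trend,
}


def select_model(series, holdout=6):
    """Fit each candidate on all but the last `holdout` months; keep the lowest MAE."""
    train, test = series[:-holdout], series[-holdout:]
    scores = {
        name: mean(abs(a - f) for a, f in zip(test, model(train, holdout)))
        for name, model in MODELS.items()
    }
    return min(scores, key=scores.get), scores


def forecast_airport(categories, horizon=12, holdout=6):
    """Pick a model per consumption category, forecast, and sum into an airport total."""
    chosen, total = {}, [0.0] * horizon
    for category, series in categories.items():
        best, _ = select_model(series, holdout)
        chosen[category] = best
        total = [t + f for t, f in zip(total, MODELS[best](series, horizon))]
    return chosen, total
```

In practice each of the four categories (scheduled, non-scheduled, alternate, tankering) would be passed in `categories`, and the same call would run once per airport, which yields the 12-month horizon mentioned below.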

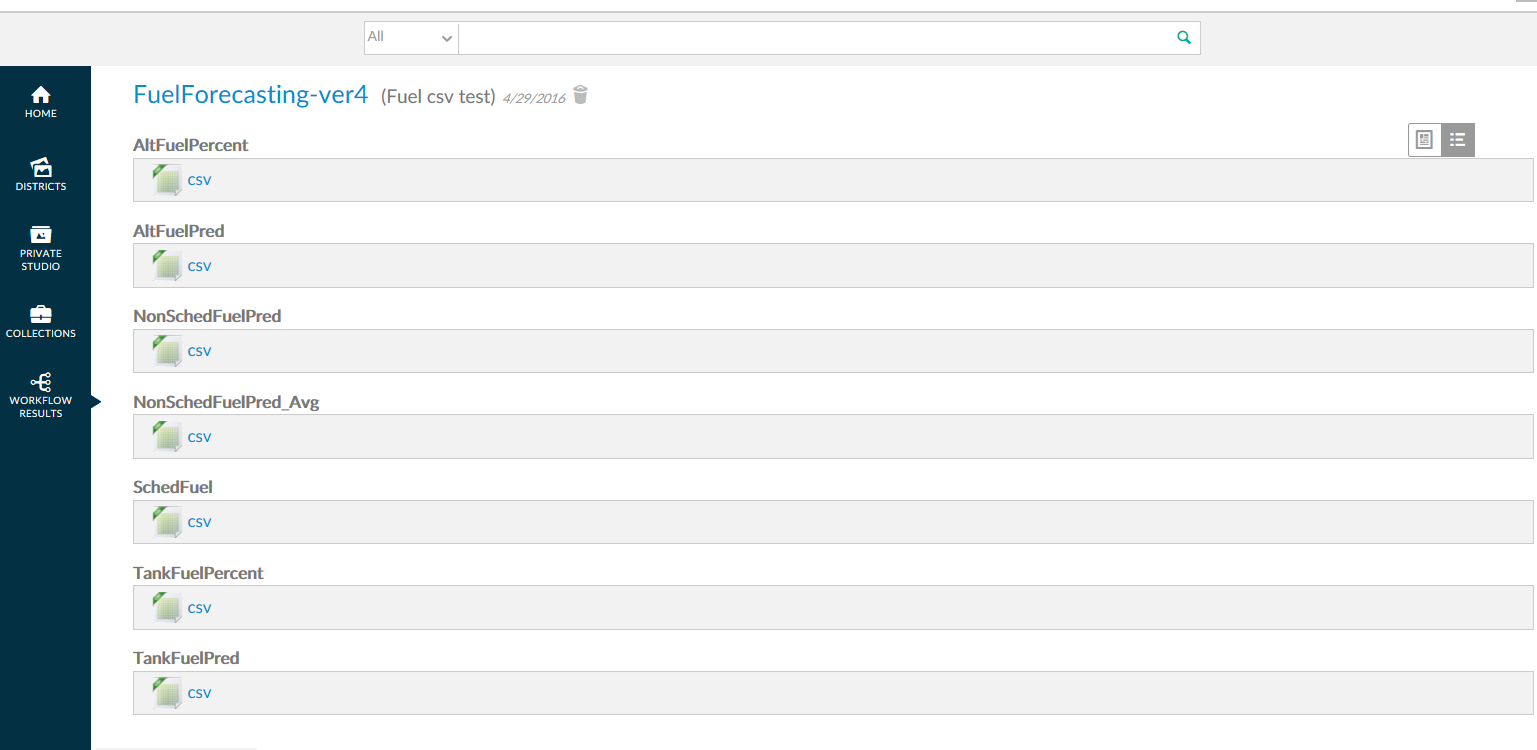

In addition to the consumption forecast, which increases fuel efficiency, a web application was developed. It enables the Fuel Department to browse for input data files, upload them, and run the application easily and efficiently. Data visualization tools were also added to give the Fuel Department better insight into trends and seasonality. The statistical models have been finalized and will soon be pushed to production for use by the Southwest Airlines Fuel Department.

Describe the benefits you have achieved

Previously, the forecasting process for all 97 Southwest Airlines airports was conducted in approximately 150 Excel spreadsheets. This was an extremely difficult, time-consuming, and disorganized process. Consumption forecasts would normally take up to three days and had to be performed manually. Furthermore, accuracy was unsatisfactory, since Excel's capabilities are inadequate for statistical and mathematical modeling.

For these reasons, a decision was made to use CRAN R and Alteryx for data processing and development of the forecasting models. Alteryx offers many benefits, since it can execute R scripts through the R Tool. Moreover, Alteryx makes data preparation, manipulation, processing, and analysis fast and efficient for large datasets. Multiple data sources and data types were used in the workflow, and Alteryx made it convenient to select and filter input data, as well as to join data from multiple tables and file types. In addition, the Fuel Department needed a web application that would allow multiple users to run the consumption forecast without the help of any developers. Alteryx was a simple solution to this need, since it let us build an interface and publish the workflow as a web application (through the Southwest Airlines gallery).

In general, the benefits of the consumption forecast include (but are not limited to) the following:

- The forecasting accuracy improved by approximately 70% for non-scheduled flights and 12% for scheduled flights, resulting in considerable fuel cost savings for Southwest Airlines.

- Execution time dropped dramatically, from 3 days to 10 minutes. Developers are working to reduce this time even further.

- The consumption forecast provides a 12-month forecasting horizon for the Fuel Department. Due to the complexity of the process, this could not be conducted previously using Excel spreadsheets.

- The Fuel Department is able to identify seasonality and estimate trends at each airport. This provides invaluable insights for decision-makers on the fuel consumption at each airport.

- The consumption forecast identifies and flags outliers and problematic airports, enabling decision-makers to prepare for unexpected conditions.

- 2016 Entries

- Best Business ROI

- Transportation & Logistics

Author: Mark Frisch (@MarqueeCrew), CEO

Company: MarqueeCrew

Awards Category: Name Your Own - Macros for the Good of All Alteryx Users

Describe the problem you needed to solve

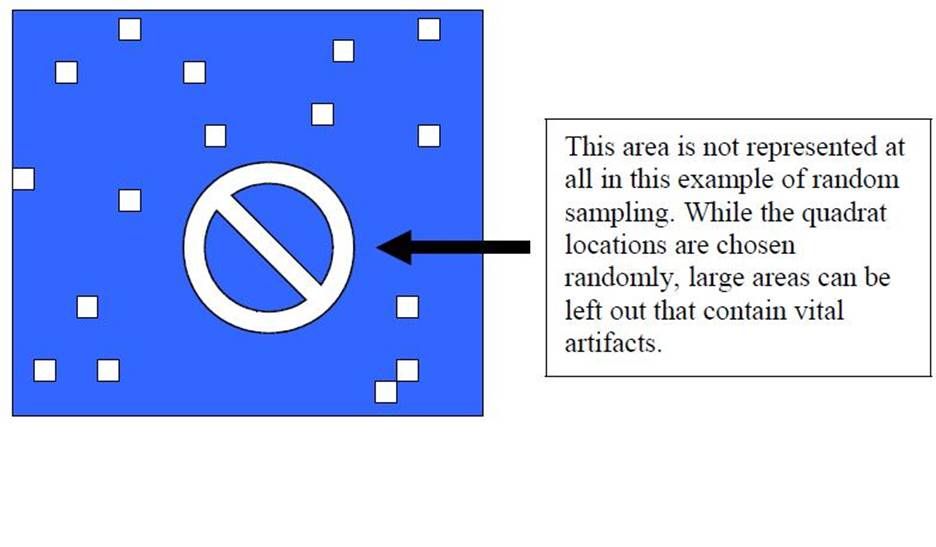

Creating samples goes beyond random selection and picking every Nth record. It is crucial that samples be representative of their source populations if you are going to draw any meaningful truth from your marketing or other use cases. After creating a sample set, how would you verify that you didn't select too many of one segment versus another? If you're using Mosaic® data and there are 71 types to consider, did you get enough of each type?

Describe the working solution

Using a chi-squared test, we created a macro and published it to the Alteryx Macro District as well as to the CReW macros (www.chaosreignswithin). There are two input anchors (Population and Sample), and the configuration requires you to select a categorical variable from both inputs (the same variable content). The output is a report that tells you whether or not your sample is representative (including the degrees of freedom and the chi-squared result at a 95% confidence level).
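The macro's core check is a chi-squared goodness-of-fit test: expected counts come from the population's category shares scaled to the sample size, and the statistic is compared with the critical value for k - 1 degrees of freedom, where k is the number of categories. This stdlib-only sketch is not the published macro. To avoid a SciPy dependency it approximates the critical value with the Wilson-Hilferty formula, which is very close for many categories (such as 71 Mosaic types) but slightly low for 1 or 2 degrees of freedom.

```python
from collections import Counter
from statistics import NormalDist


def chi_square_representativeness(population, sample, confidence=0.95):
    """Goodness-of-fit test: does `sample` follow the category mix of `population`?"""
    pop_counts = Counter(population)
    sample_counts = Counter(sample)
    unknown = set(sample_counts) - set(pop_counts)
    if unknown:
        raise ValueError(f"sample categories missing from population: {sorted(unknown)}")
    df = len(pop_counts) - 1
    if df < 1:
        raise ValueError("need at least two categories")

    n_pop, n_sample = len(population), len(sample)
    statistic = 0.0
    for category, pop_count in pop_counts.items():
        expected = n_sample * pop_count / n_pop  # population share scaled to sample size
        observed = sample_counts.get(category, 0)
        statistic += (observed - expected) ** 2 / expected

    # Wilson-Hilferty approximation of the chi-squared critical value (stdlib only).
    z = NormalDist().inv_cdf(confidence)
    critical = df * (1 - 2 / (9 * df) + z * (2 / (9 * df)) ** 0.5) ** 3
    return {
        "chi_square": statistic,
        "df": df,
        "critical_value": critical,
        "representative": statistic <= critical,
    }


population = ["A"] * 500 + ["B"] * 300 + ["C"] * 200
print(chi_square_representativeness(population, ["A"] * 90 + ["B"] * 5 + ["C"] * 5))
```

As with any chi-squared test, expected counts below about 5 in a category make the result unreliable, so sparse segments may need to be grouped before testing.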

Describe the benefits you have achieved

My client was able to avoid the costly mistake that had plagued their prior marketing initiative and was set up for success. I wanted to share this feature with the community. It would be awesome if it ended up helping my charity, the American Cancer Society. Although this isn't quite as sexy as my competition, it is sexy in its simplicity and geek factor.

- 2016 Entries

- Business Intelligence

- Consulting

- Wildcard

Author: Shelley Browning, Data Analyst

Company: Intermountain Healthcare

Awards Category: Most Time Saved

Describe the problem you needed to solve

Intermountain Healthcare is a not-for-profit health system based in Salt Lake City, Utah, with 22 hospitals, a broad range of clinics and services, about 1,400 employed primary care and secondary care physicians at more than 185 clinics in the Intermountain Medical Group, and health insurance plans from SelectHealth. The entire system has over 30,000 employees. This project was proposed and completed by members of the Enterprise HR Employee Analytics team who provide analytic services to the various entities within the organization.

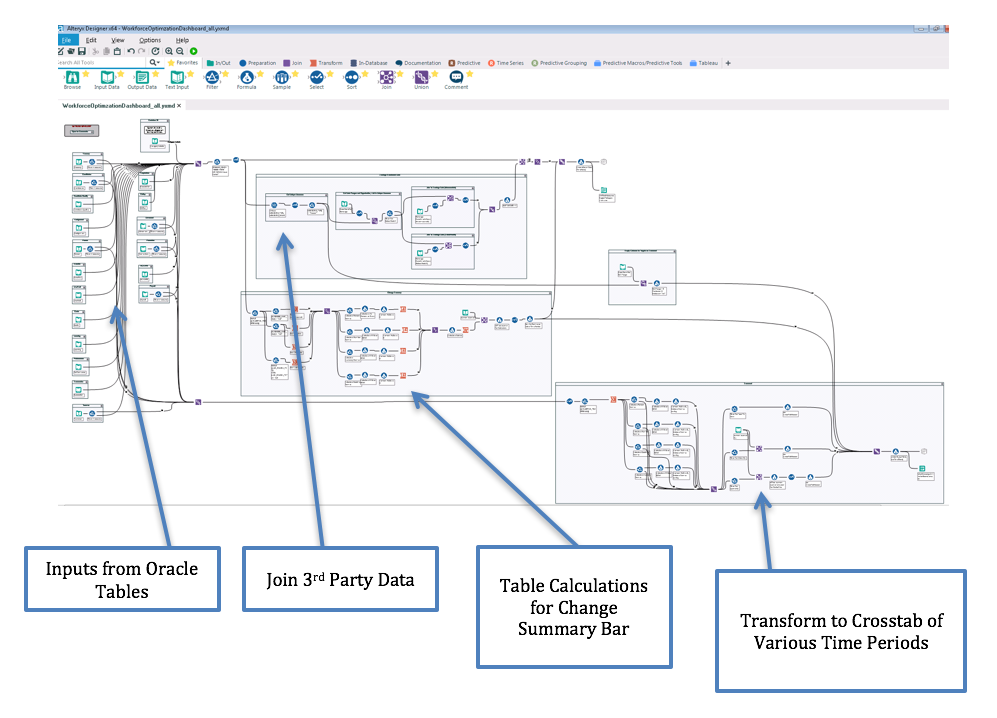

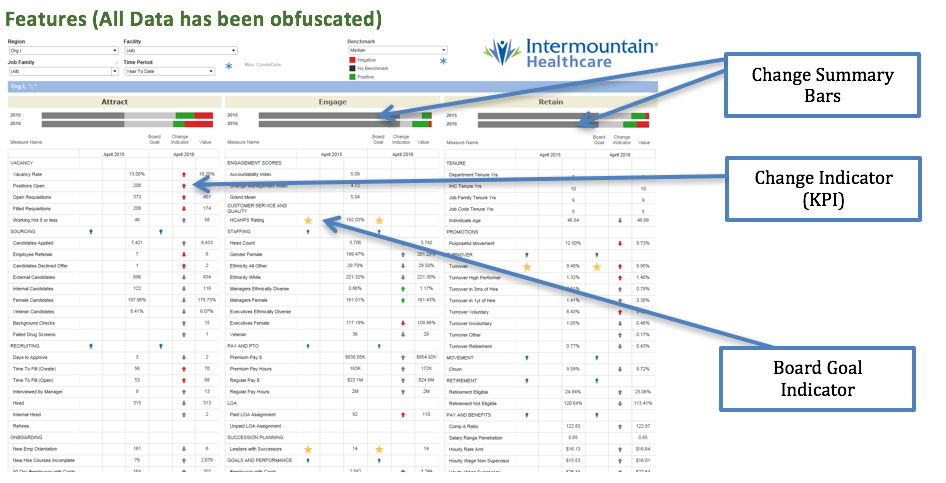

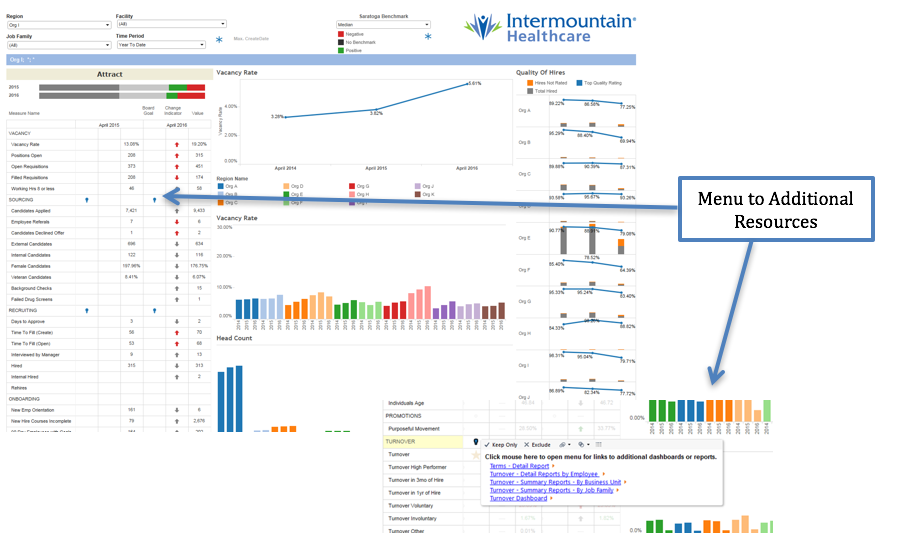

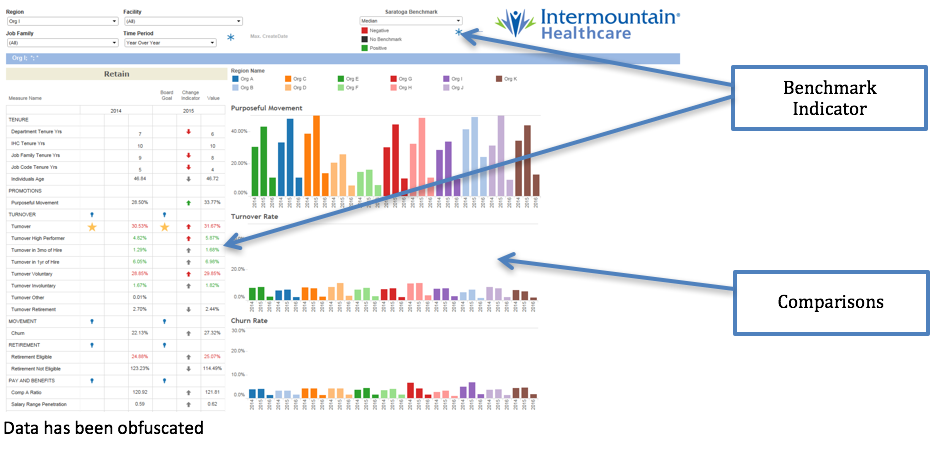

The initial goal was to create a data product utilizing data visualization software. The Workforce Optimization Dashboard and Scorecard is to be used throughout the organization by employees with direct reports. The dashboard provides a view of over 100 human resource metrics on activities related to attracting, engaging, and retaining employees at all levels of the organization. Some of the features in the dashboard include: drilldown to various levels of the organization, key performance indicators (KPI) to show change, options for various time periods, benchmark comparison with third-party data, and links to additional resources such as detail reports. Prior to completion of this project, the data was available to a limited set of users in at least 14 different reports and dashboards, making it difficult and time-consuming to get a complete view of workforce metrics.

During initial design and prototyping it was discovered that in order to meet the design requirements and maintain performance within the final visualization it would be necessary for all the data to be in a single data set. The data for human resources is stored in 17 different tables in an Oracle data warehouse. The benchmark data is provided by a third party. At the time of development the visualization software did not support UNION or UNION ALL in the custom SQL function. During development the iterative process of writing SQL, creating an extract file, and creating and modifying calculations in the visualization was very laborious. Much iteration was necessary to determine the correct format of data for the visualization.

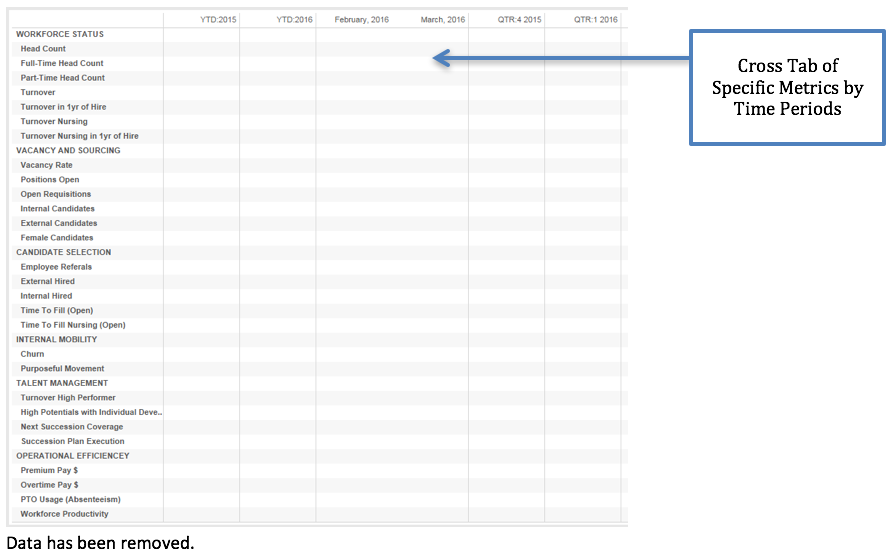

Other challenges arose. For example, it was discovered that the visualization software does not support dynamic field formatting: the data values are reported as percent, currency, decimal, and numeric, all within the same data column. While the dashboard was in final review, it was determined that a summary of the KPI indicators would be another useful visualization on the dashboard. However, the KPI indicators (red and green arrows) were built with table calculations, and the visualization software cannot create additional calculations based on the results of table calculations. The business users also requested another cross-tab view of the same data showing multiple time periods.

Describe the working solution

Alteryx was instrumental in the designing and development of the visualization for the workforce dashboard. Without Alteryx the time to complete this project would have easily doubled. By using Alteryx, a single analyst was able to iterate through design and development of both the data set and the dashboard.

The final dashboard includes both tabular and graphic visualizations, all displayed from the same data set. The Alteryx workflow uses 19 individual Input Data tools to retrieve data from the 17 tables in Oracle and unions this data into the single data set. Excel spreadsheets are the source for joining the third-party benchmark data to the existing data. The extract is output from Alteryx directly to a Tableau Server. Because a single data set is used, filtering and rendering in the visualization perform very well on 11 million rows of data. (Development included testing data sets of over 100 million rows, with acceptable but slower performance. The project was scaled back until Alteryx Server is available for use.)
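The UNION ALL step that the visualization software could not do in custom SQL amounts to stacking every input's rows and aligning columns by name. A minimal sketch, assuming each query result arrives as a list of dicts; the `source` column and the example metric names are hypothetical additions for traceability, not part of the described workflow.

```python
def union_all(named_tables):
    """Stack rows from several inputs like SQL UNION ALL.

    Columns are aligned by name (missing values become None), duplicate rows
    are kept, and a `source` column records which input each row came from.
    """
    columns = ["source"]
    for rows in named_tables.values():
        for row in rows:
            for col in row:
                if col not in columns:
                    columns.append(col)
    return [
        {col: (name if col == "source" else row.get(col)) for col in columns}
        for name, rows in named_tables.items()
        for row in rows
    ]


tables = {
    "turnover": [{"org": "A", "period": "2016-01", "value": 0.1}],
    "premium_pay": [{"org": "A", "period": "2016-01", "value": 1200.0, "currency": "USD"}] * 2,
}
for row in union_all(tables):
    print(row)
```

Unlike `UNION`, nothing is deduplicated here, which matches `UNION ALL` semantics and avoids silently losing legitimately repeated rows.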

Describe the benefits you have achieved

The initial reason for using Alteryx was the ability to perform a UNION ALL on the 19 input queries. By caching queries, outputting directly to .tde files, and working iteratively to find the data format that best met the design requirements and gave the best filtering and rendering performance in the visualization, we saved months of development time. The 19 data inputs contain over 7,000 lines of SQL code combined, and storing this code in Alteryx improves reproducibility and documentation. During the later stages of the project, it was fairly straightforward to use the various tools in Alteryx to transform the data for the additional cross-tab view and to recreate the table calculations so they mimic the calculations in the visualization. Without Alteryx, it would have taken a significant amount of time to recreate these calculations in SQL and rewrite the initial input queries.
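Recreating the KPI table calculations upstream means computing the period-over-period change per metric in the data set itself, so the dashboard can summarize it further. The same pass can add a pre-formatted display string, which works around the lack of dynamic field formatting. This sketch assumes hypothetical `metric`, `period`, `value`, and `format` columns and a per-metric `higher_is_better` setting that decides whether an increase earns a green or a red arrow.

```python
FORMATTERS = {
    "percent": lambda v: f"{v:.1%}",
    "currency": lambda v: f"${v:,.2f}",
    "decimal": lambda v: f"{v:,.2f}",
    "numeric": lambda v: f"{v:,.0f}",
}


def add_kpi_columns(rows, higher_is_better=None):
    """Add period-over-period change, arrow direction/colour, and a display string."""
    higher_is_better = higher_is_better or {}
    previous, out = {}, []
    for row in sorted(rows, key=lambda r: (r["metric"], r["period"])):
        prior = previous.get(row["metric"])
        change = None if prior is None else row["value"] - prior
        if change is None:  # first period: nothing to compare against
            direction, colour = None, None
        elif change == 0:
            direction, colour = "flat", None
        else:
            direction = "up" if change > 0 else "down"
            # An increase is good only if the metric is flagged higher-is-better.
            good = (change > 0) == higher_is_better.get(row["metric"], True)
            colour = "green" if good else "red"
        out.append({**row, "change": change, "direction": direction,
                    "colour": colour, "display": FORMATTERS[row["format"]](row["value"])})
        previous[row["metric"]] = row["value"]
    return out


rows = [
    {"metric": "turnover", "period": 1, "value": 0.10, "format": "percent"},
    {"metric": "turnover", "period": 2, "value": 0.12, "format": "percent"},
    {"metric": "premium_pay", "period": 1, "value": 1000, "format": "currency"},
    {"metric": "premium_pay", "period": 2, "value": 900, "format": "currency"},
]
for row in add_kpi_columns(rows, higher_is_better={"turnover": False, "premium_pay": False}):
    print(row["metric"], row["period"], row["display"], row["direction"], row["colour"])
```

Because `direction` and `colour` are ordinary columns, a KPI summary (for example, counting red arrows per department) becomes a plain aggregation instead of a calculation on top of a table calculation.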

Our customers are now able to view their Workforce Optimization metrics in a single location. They can now visualize a scenario in which their premium pay has been increasing the last few pay periods and see that this may be attributed to higher turnover rates with longer times to fill for open positions, all within a single visualization. With just a few clicks our leaders can compare their workforce optimization metrics with other hospitals in our organization or against national benchmarks. Reporting this combination of metrics had not been attempted prior to this time and would not have been possible at this cost without the use of Alteryx.

Cost savings are estimated at $25,000 to date, with additional savings expected in future development and enhancements.

- 2016 Entries

- Healthcare

- Human Resources

- Most Time Saved

Author: Katie Snyder, Marketing Analyst

Company: SIGMA Marketing Insights

Awards Category: Most Time Saved

We've turned a wholly manual process that took 2 hours per campaign and required a database developer into one that takes five minutes per campaign and can be done by an account coordinator. This frees our database developers to work on other projects and drastically reduces the time from data receipt to report generation.

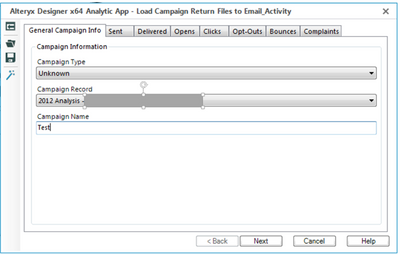

Describe the problem you needed to solve

We process activity files for hundreds of email campaigns for one client alone. The files come in from a number of different external vendors, are never in the same format with the same field names, and never include consistent activity types (bounces or opt-outs might be missing from one campaign, but present in another). We needed an easy, user-friendly way for these files to be loaded in a consistent manner. We also needed to add some campaign ID fields that the end user wouldn't necessarily know - they would only know the campaign name.

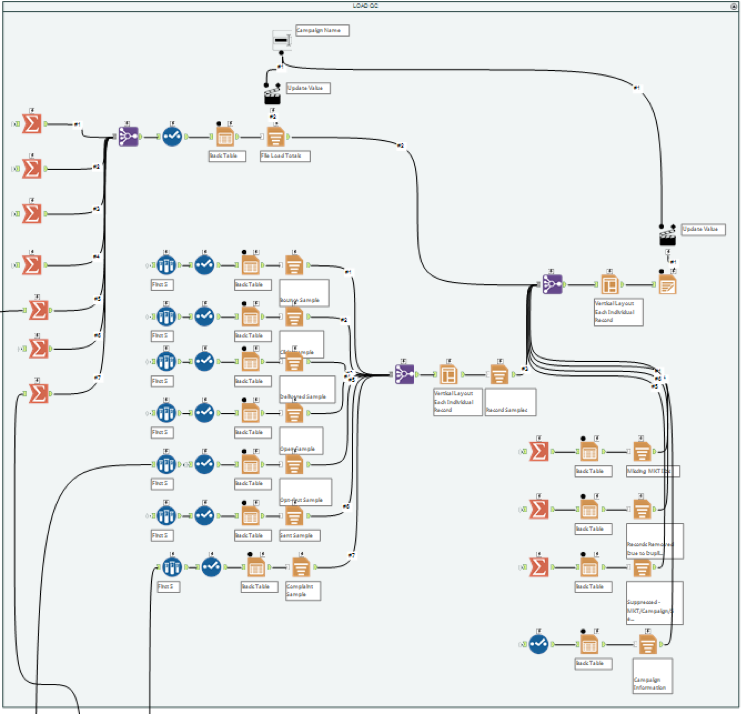

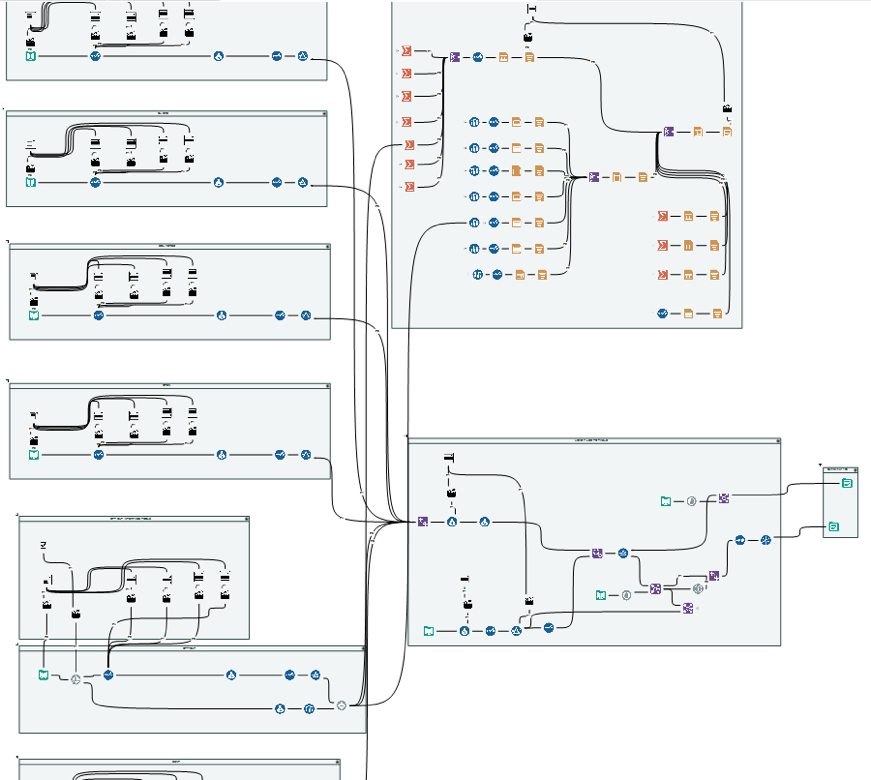

Describe the working solution

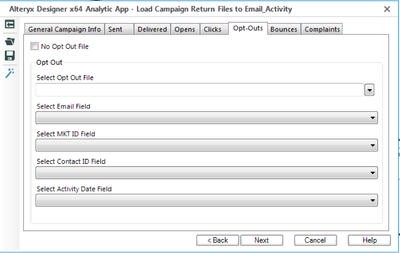

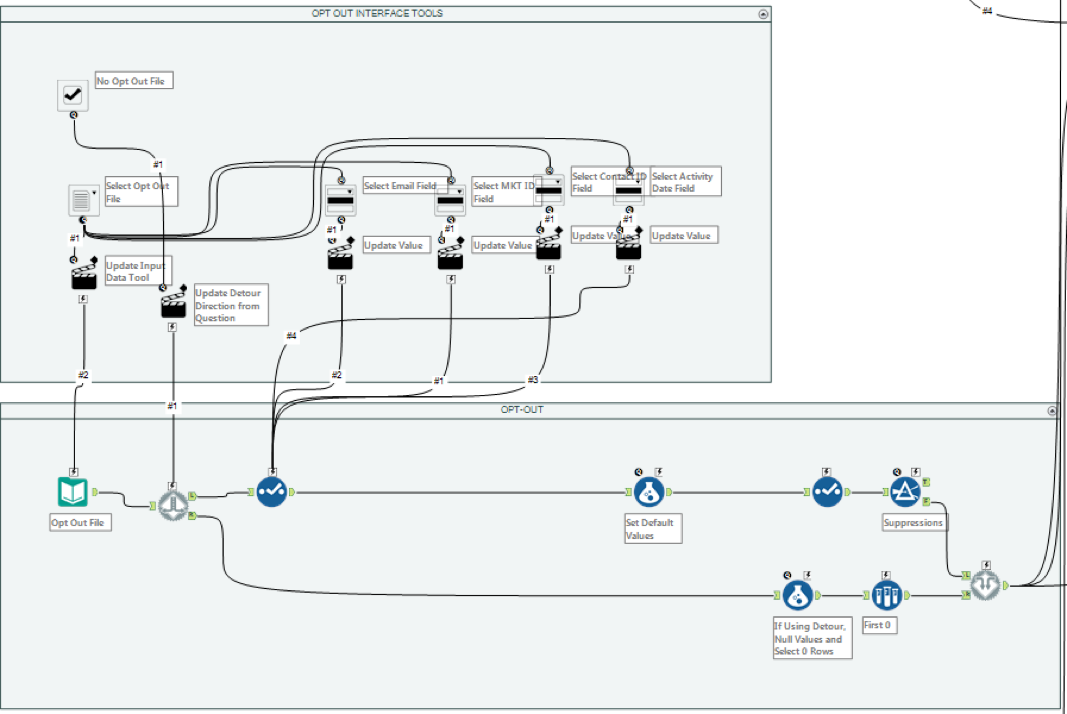

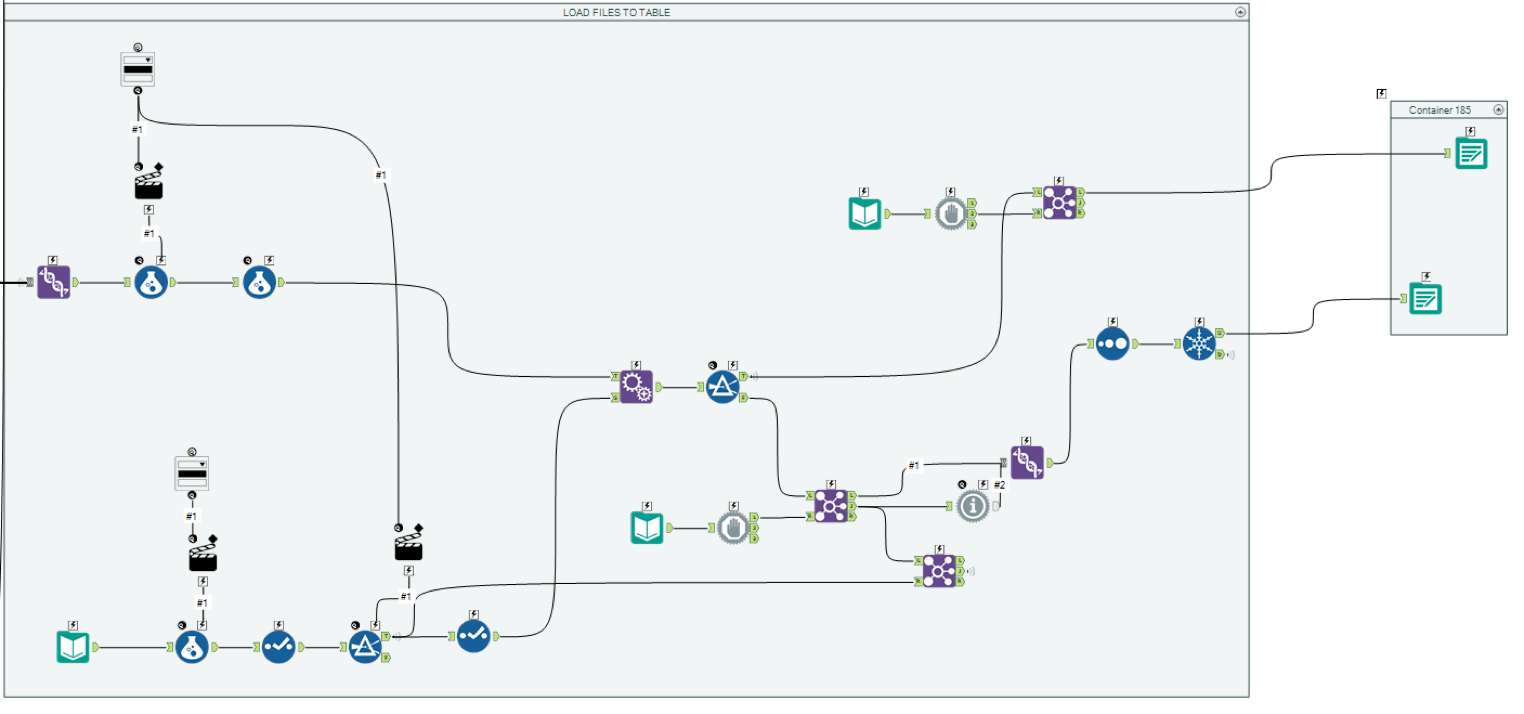

Using interface tools, we created an analytic app that allowed maximum flexibility in this file processing. Using a database query and interface tools, Alteryx displays a list of campaign names that the end user selects. The accompanying campaign ID fields are passed downstream. For each activity type (sent, delivered, bounce, etc), the end user selects a file, and then a drop down will display the names of all fields in the file, allowing the user to designate which field is email, which is ID, etc. Because we don't receive each type of activity every time, detours are placed to allow the analytic app user to check a box indicating a file is not present, and the workflow runs without requiring that data source.
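The field-designation step of the analytic app can be sketched as a column-renaming function: the user's dropdown choices become a mapping from standard field names to the vendor's column names, and a file marked as not present (the detour) simply yields no rows. The `CAMPAIGNS` lookup, field names, and CSV layout below are hypothetical stand-ins for the database query and interface tools.

```python
import csv
import io

# Hypothetical lookup standing in for the database query behind the campaign dropdown.
CAMPAIGNS = {"Spring Promo": {"campaign_id": "C-101", "wave_id": "W-3"}}


def load_activity(csv_text, field_map, activity, campaign_name):
    """Read one vendor file, renaming columns per the user's field choices.

    `field_map` maps standard names (e.g. "email") to the vendor's column names.
    Passing `csv_text=None` models the "file not present" checkbox: the input is
    skipped and the rest of the workflow still runs.
    """
    if csv_text is None:
        return []
    ids = CAMPAIGNS[campaign_name]  # campaign IDs the end user never has to type
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        row = {std: record[vendor].strip() for std, vendor in field_map.items()}
        rows.append({**row, "activity": activity, **ids})
    return rows


text = "Email Address,Subscriber Key\nA@x.com,1\nb@y.com ,2\n"
print(load_activity(text, {"email": "Email Address", "id": "Subscriber Key"}, "sent", "Spring Promo"))
```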

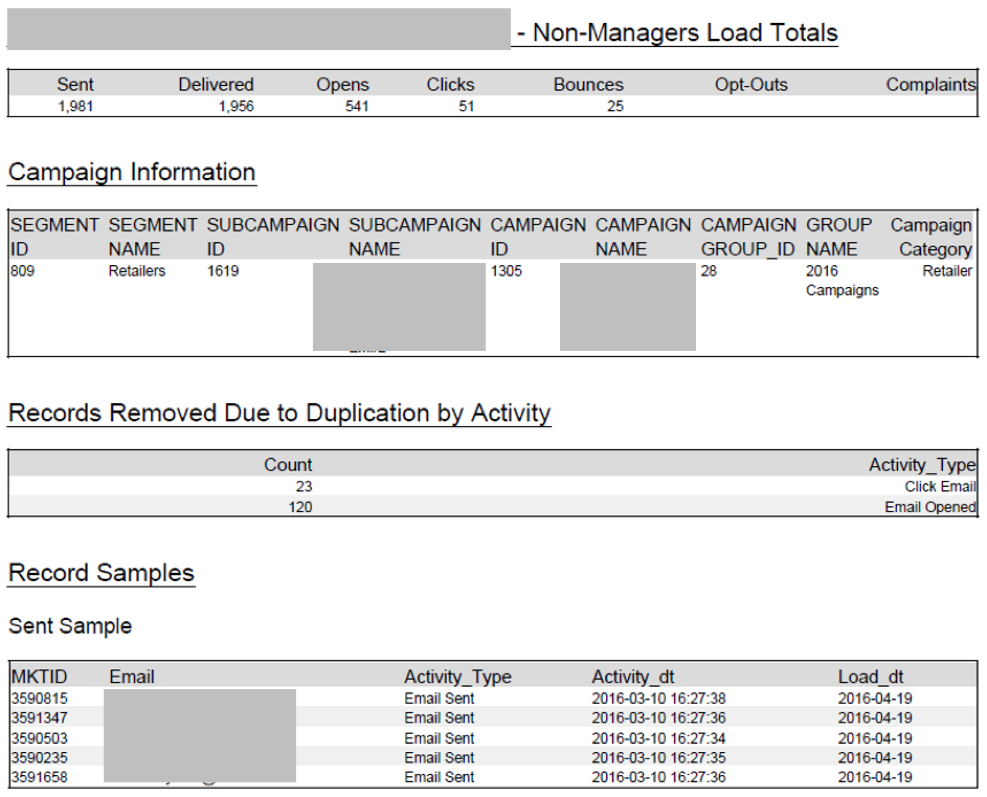

All in all, up to six separate Excel or CSV files are combined together with information already existing in a database, and a production table is created to store the information. The app also generates a QC report that includes counts, campaign information, and row samples that is sent to the account manager. This increases accountability and oversight, and ensures all members of the team are kept informed of campaign processing.
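Joining the activity files, suppressing duplicates, and producing QC counts could look like the following. This minimal sketch assumes a duplicate means the same email (case-insensitive) with the same activity type, and that the QC report needs per-activity counts plus a few sample rows; the real app's rules and report layout may differ.

```python
from collections import Counter


def combine_activities(*activity_lists):
    """Concatenate activity rows, keeping the first row per (email, activity)."""
    seen, combined = set(), []
    for rows in activity_lists:
        for row in rows:
            key = (row["email"].lower(), row["activity"])
            if key not in seen:
                seen.add(key)
                combined.append(row)
    return combined


def qc_report(combined, samples_per_activity=2):
    """Counts per activity type plus a few example rows for the account manager."""
    counts = Counter(row["activity"] for row in combined)
    samples = {
        activity: [row for row in combined if row["activity"] == activity][:samples_per_activity]
        for activity in counts
    }
    return {"total_rows": len(combined), "counts": dict(counts), "samples": samples}


sent = [
    {"email": "a@x.com", "activity": "sent"},
    {"email": "A@x.com", "activity": "sent"},  # duplicate apart from case
    {"email": "b@y.com", "activity": "sent"},
]
bounce = [{"email": "b@y.com", "activity": "bounce"}]
print(qc_report(combine_activities(sent, [], bounce)))
```

An empty list stands in for an activity file that was not received, so missing inputs need no special handling downstream.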

Process Opt Out File - With Detour:

Join All Files, Suppress Duplicates, Insert to Tables:

Generate QC Report:

Workflow Overview:

QC Report Example:

Describe the benefits you have achieved

By making this process quicker and easier to access, we save almost two hours of database developer time per campaign, which adds up to at least 100 hours over the course of the year. The app can be used by account support staff who don't have coding knowledge, or even by staff on other accounts without any client-specific knowledge, which also saves resources. Furthermore, the app can be easily adapted for other clients, increasing time savings across our organization. Our developers can spend their time on far more complex work rather than routine coding, and because the process is automated, we avoid the rework that coding mistakes would otherwise cause. And the client is thrilled because campaign reporting now takes us less time.

- 2016 Entries

- Marketing

- Most Time Saved

Author: Jim Kunce, SVP & Chief Actuary

Company: MedPro Group

Awards Category: Best Use of Server

Describe the problem you needed to solve

MedPro Group, Berkshire Hathaway's dedicated healthcare liability solution, is the nation's highest-rated healthcare liability carrier, according to A.M. Best (A++ as of 5/27/2015). We have been providing professional liability insurance to physicians, dentists, and other healthcare providers since 1899. Today, we have insurance operations in all 50 states and the District of Columbia, and we are growing internationally. With operations of this size and such a diverse range of insurance products, it is a challenge to connect systems, processes, and employees with one another.

Regardless of an insurance carrier's size and scale, its long-term success depends on:

- Continued new business growth

- Consistent pricing and risk-evaluation

- Unified internal operations

Our challenge was to lay the analytical foundation necessary for an ever-growing insurance company to execute on these three objectives. We identified the following three action items and linked them to the drivers of long-term success.

- Fuel new business growth: Centralize processes, remove system silos & link manual processes together.

- Drive consistent pricing and risk-evaluation: Remove data supply bottle-necks & empower business analysts to self-serve.

- Unify internal operations: Accelerate modernization & facilitate enterprise-wide legacy system integration.

Describe the working solution

"Fuel new business growth: Centralize processes, remove system silos & link manual processes together."

This solution has three parts to it.

- First, we programmed our pricing algorithm using Alteryx to "learn" the insurability of a prospective customer.

- Second, we overlaid this system on our CRM data to create sales recommendations nationwide.

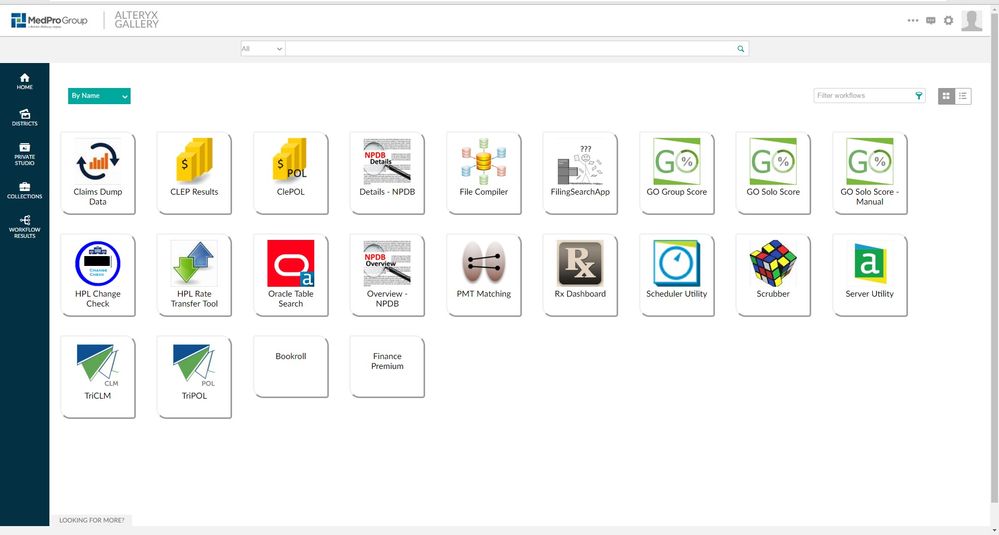

- Third, we deployed this recommender system with our Alteryx private gallery to provide real-time access to our sales teams.

Today, from anywhere in the country, our sales personnel can request a report for a customer they are prospecting and receive a consistent, reliable recommendation in a matter of seconds with little manual intervention.

"Drive consistent pricing and risk-evaluation: Remove data supply bottle-necks & empower business analysts to self-serve."

In an insurance company, actuaries and underwriters are responsible for pricing insurance policies and evaluating insurance risks of applicants. These complex decisions rely on many data inputs - some of which are internally available, but in other cases come from external sources (e.g. government websites, third party resources).

Today, we have significantly reduced the data supply bottle-necks by configuring the Alteryx server as the bridge between the data sources and our actuaries and underwriters. Each person along the pricing and risk evaluation process now gets “analysis-ready” data consistently and in a timely manner from the private gallery, a virtual buffet of self-serve apps for all data needs.

"Unify internal operations: Accelerate modernization & facilitate enterprise-wide legacy system integration."

In 2015, MedPro Group decided to scale up investments in modernizing legacy systems to a new web-based system. The challenge was to move data from our legacy systems into the new web-based system and vice versa. Additionally, the software solution needed to have a short learning curve and be flexible and transparent enough that key business leaders managing this modernization would be able to perform the data migration tasks.

Alteryx was a great fit in this case. Not only were business leaders able to program processes in Alteryx in a relatively short timeframe, but we also scaled up with ease and accelerated modernization by deploying on the private server, giving analysts a self-serve, reliable environment.

Describe the benefits you have achieved

"We have connected systems, processes and employees to one another and made the benefits of that interconnectivity available to every employee."

We have been using the private gallery and server since July 2015. What started as a proof of concept and an experiment is now a fully functional, production-grade experience. The list of systems that have been connected, processes that have been automated, and employees who are finding value in our private gallery and server is growing rapidly.

Here's a view into some of the measurable benefits we have achieved in just nine months:

- 94: The number of apps published to the private gallery to date.

- 6951: The number of times an app has run on the gallery. That's more than 25 runs a day over 9 months!

- 15: The percentage of employees who are served with this consistent, reliable self-serve platform.

And our goal? Move that needle to 100% with Alteryx in the months to come!

- 2016 Entries

- Best Use of Alteryx Server

- Insurance

- Other

Author: Scott Elliott (@scott_elliott), Senior Consultant

Company: Webranz Ltd

Awards Category: Best Use of Alteryx Server

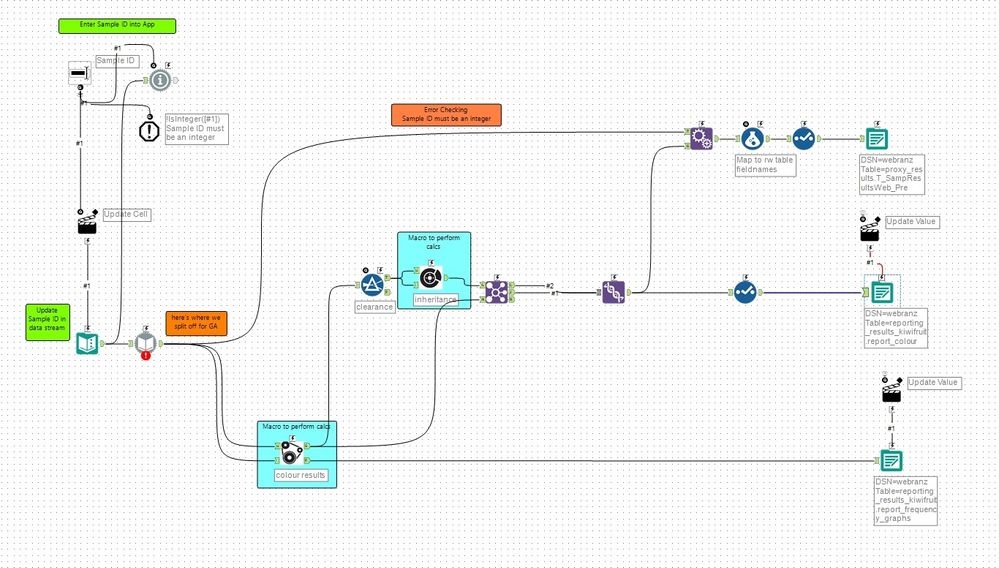

We use the server to host Alteryx apps that are called by the "service bus". The apps perform calculations and write the results into a warehouse, and growers can then log into a web portal and check their sample results.

Describe the problem you needed to solve

Agfirst BOP is an agricultural testing laboratory business that performs scientific measurements on kiwifruit samples it receives from 2,500 growers around New Zealand. In peak season, it tests up to 1,000 samples of 90 fruit per day. The sample test results trigger picking of the crop, cool storage, shipping, and sales to foreign markets. When the laboratory completes a sample test, the grower receives a notification and logs into a portal to check the results. Agfirst BOP was looking for a new technology to transform the results from the service bus for the web portal, one that would give them agility in modifying or adding tests.

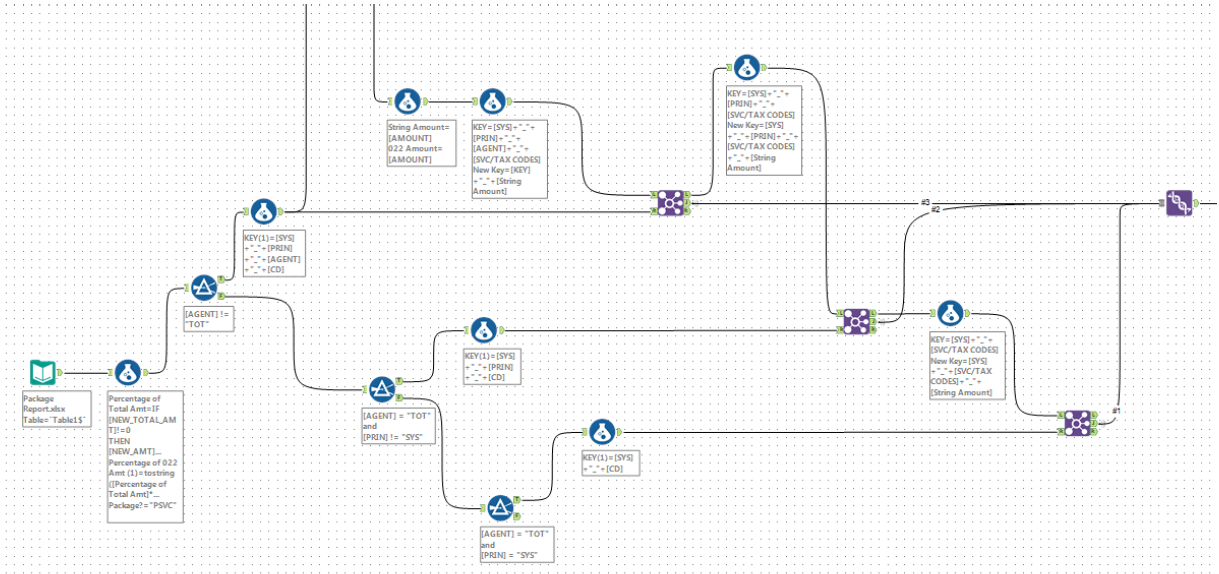

Describe the working solution

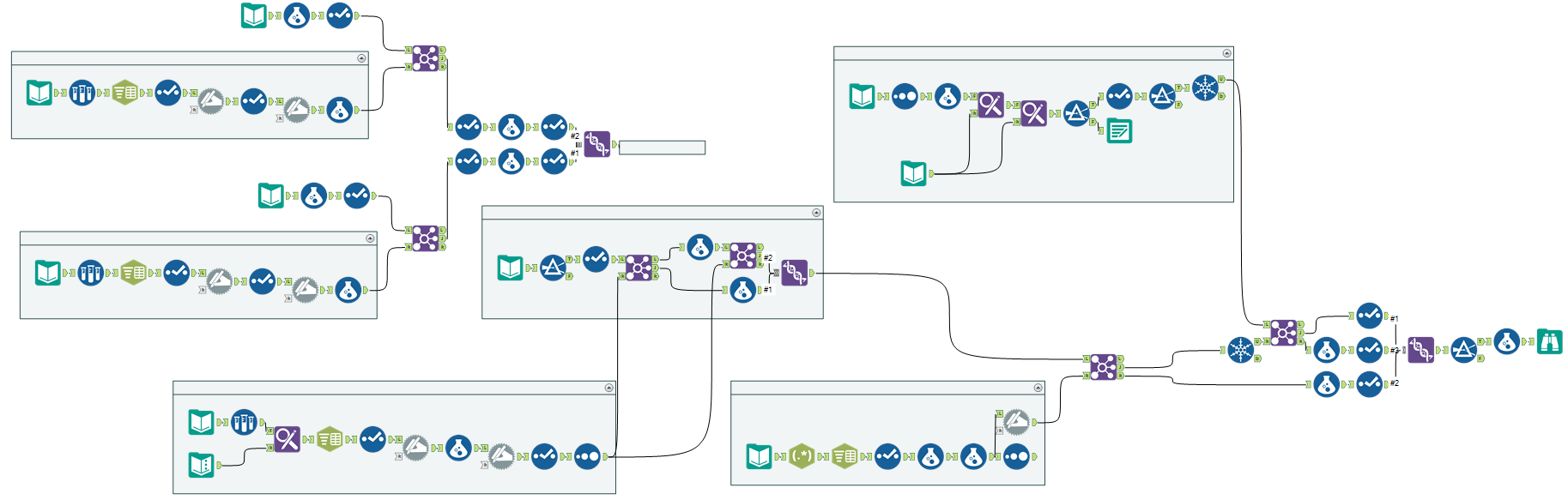

We take sample measurement results from capture devices, and these are shipped to a landing warehouse. A trigger then calls the Alteryx app residing on the Alteryx server for each sample and test type. The app performs a series of calculations and publishes the results into the results warehouse, after which the grower can log in to the web portal and check their sample. Each app contains multiple batch macros, which allow processing sample by sample. Some of the tests require advanced analytics; these tests call R as part of the app. Using macros provides great flexibility and agility to plug new tests or calculations in or out. Running it on Alteryx Server makes the solution enterprise class: it can scale while staying flexible, and it is fully supported by the infrastructure team because it is managed within the data centre rather than on a local desktop.
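The batch-macro pattern, where each sample is processed on its own and tests can be plugged in or out, maps naturally onto a registry of calculation functions keyed by test type. This is a sketch of the pattern only: the two tests and their formulas below are illustrative placeholders, not Agfirst BOP's actual laboratory calculations, and the R-based advanced analytics are not shown.

```python
from statistics import mean

TESTS = {}


def register(test_type):
    """Decorator that plugs a calculation into the registry under `test_type`."""
    def wrap(fn):
        TESTS[test_type] = fn
        return fn
    return wrap


@register("dry_matter")
def dry_matter_pct(sample):
    # Illustrative formula: mean dry weight as a percentage of fresh weight per fruit.
    return mean(dry * 100 / fresh for fresh, dry in zip(sample["fresh_g"], sample["dry_g"]))


@register("brix")
def mean_brix(sample):
    # Illustrative: average reading across the fruit in the sample.
    return mean(sample["brix"])


def process_samples(samples):
    """Run each sample through its registered test, one sample at a time."""
    results = []
    for sample in samples:
        calc = TESTS.get(sample["test_type"])
        if calc is None:
            results.append({"sample_id": sample["sample_id"], "status": "unknown test type"})
            continue
        results.append({
            "sample_id": sample["sample_id"],
            "test_type": sample["test_type"],
            "result": round(calc(sample), 2),
            "status": "ok",
        })
    return results


print(process_samples([
    {"sample_id": 1, "test_type": "dry_matter", "fresh_g": [100, 100], "dry_g": [16, 18]},
    {"sample_id": 2, "test_type": "brix", "brix": [7.0, 8.0]},
]))
```

Adding a test means registering one more function; removing one means deleting it, and the per-sample loop never changes.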

App:

Batch Macro:

Describe the benefits you have achieved

The benefits realised include greater agility in adding and removing sample tests through the use of macros. We are able to perform advanced analytics by calling R, and the solution future-proofs the business: because Alteryx can blend multiple sources, they can choose among any number of vendors without being limited by technology. This gives them great flexibility in future technology choices, and it is all supported and backed up by the infrastructure team, who take comfort in knowing it sits within the datacentre rather than under someone's desk.

- 2016 Entries

- Best Use of Alteryx Server

- Consulting

- Operations

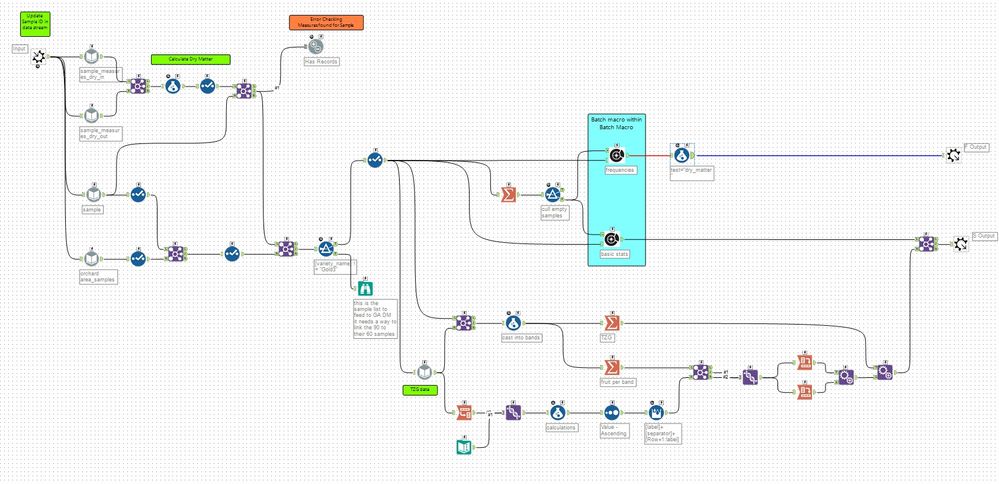

Author: Brodie Ruttan (@BrodieR), Lead Analytics & Special Projects

Company: Downer New Zealand

Awards Category: Name Your Own - Best Use of Alteryx SharePoint Integration

Describe the problem you needed to solve

I work for the largest services company in New Zealand, Downer NZ Ltd, which operates across water services, telecommunications, power, gas, mining, roads, rail, airports, marine, defense, and more. Our work streams are business-to-business and business-to-government, so there are many different, disparate, aged data sources to work with. While we are moving work streams onto new platforms, many of the databases and information systems we use are very dated, and continuing to develop them is cost prohibitive.

To keep providing our customers with the increased level of service they desire, we need to keep capturing new metrics, but we can't spend the money to further develop aged systems. How can we implement a solution to capture these new metrics without additional costs, and can we use what we learn from capturing this data to develop the new information systems for these work streams?

Describe the working solution

What we have implemented at Downer is a solution in which we develop SharePoint lists that sit alongside our current information systems to gather supplementary data about the work we do and report on it seamlessly. For example, one of our technicians may be at a Cell Mast Site (think cell/mobile phone transmitting tower) and need to report that the work cannot be completed but the site has been "Made Safe." "Made Safe" is not a Boolean field available in our current information systems. This is where Alteryx comes in and provides the value: Alteryx can pull the data out of the aged system and push the required job details into SharePoint. Once data has been added to the SharePoint list, Alteryx can then blend it seamlessly back into exports for reporting and monitoring purposes.
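The two-way flow (push the required job details from the legacy system into a SharePoint list, then blend the captured fields back for reporting) can be sketched as two small functions over plain records. Reading from and writing to SharePoint is deliberately left out, and the `job_id`, `status`, `made_safe`, and `comment` fields and the "Incomplete" status value are hypothetical.

```python
def jobs_to_push(legacy_jobs, sharepoint_items):
    """Incomplete legacy jobs not yet on the SharePoint list."""
    listed = {item["job_id"] for item in sharepoint_items}
    return [job for job in legacy_jobs
            if job["status"] == "Incomplete" and job["job_id"] not in listed]


def blend(legacy_jobs, sharepoint_items):
    """Left join: every legacy job, enriched with fields captured in SharePoint."""
    by_job = {item["job_id"]: item for item in sharepoint_items}
    blended = []
    for job in legacy_jobs:
        extra = by_job.get(job["job_id"], {})
        blended.append({**job,
                        "made_safe": bool(extra.get("made_safe", False)),
                        "site_comment": extra.get("comment")})
    return blended


legacy_jobs = [
    {"job_id": 1, "site": "Mast A", "status": "Incomplete"},
    {"job_id": 2, "site": "Mast B", "status": "Complete"},
    {"job_id": 3, "site": "Mast C", "status": "Incomplete"},
]
sharepoint_items = [{"job_id": 1, "made_safe": True, "comment": "Barrier installed"}]
print(jobs_to_push(legacy_jobs, sharepoint_items))
print(blend(legacy_jobs, sharepoint_items))
```

The left join keeps every legacy job in the export, so jobs without a SharePoint entry simply report `made_safe` as `False` rather than disappearing.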

Describe the benefits you have achieved

Our business can now expand legacy systems seamlessly using Alteryx and SharePoint. The cost of implementing the solution is limited to the licensing costs of Alteryx and a SharePoint environment. Since both of these licensing costs are sunk, we can expand systems at the cost of time alone, which is minimal when using Alteryx and SharePoint. The cost benefit is immense: upgrading or expanding a legacy information system is a hugely expensive effort with little benefit to show. Legacy information systems in our environment mostly need to be migrated rather than upgraded. While we build these lists to expand our capability and keep our customers satisfied, we also benefit from lessons learned for developing the new platform. Any information gathered in SharePoint using Alteryx must be planned for when the new information system is stood up, which saves the effort and cost of additional business analyst work.

We have also expanded this capability using Alteryx to pull out multi-faceted work projects for display in Gantt views in SharePoint and then to pull the updated information back into the host systems.

- 2016 Entries
- Business Intelligence
- Energy & Utilities
- Wildcard

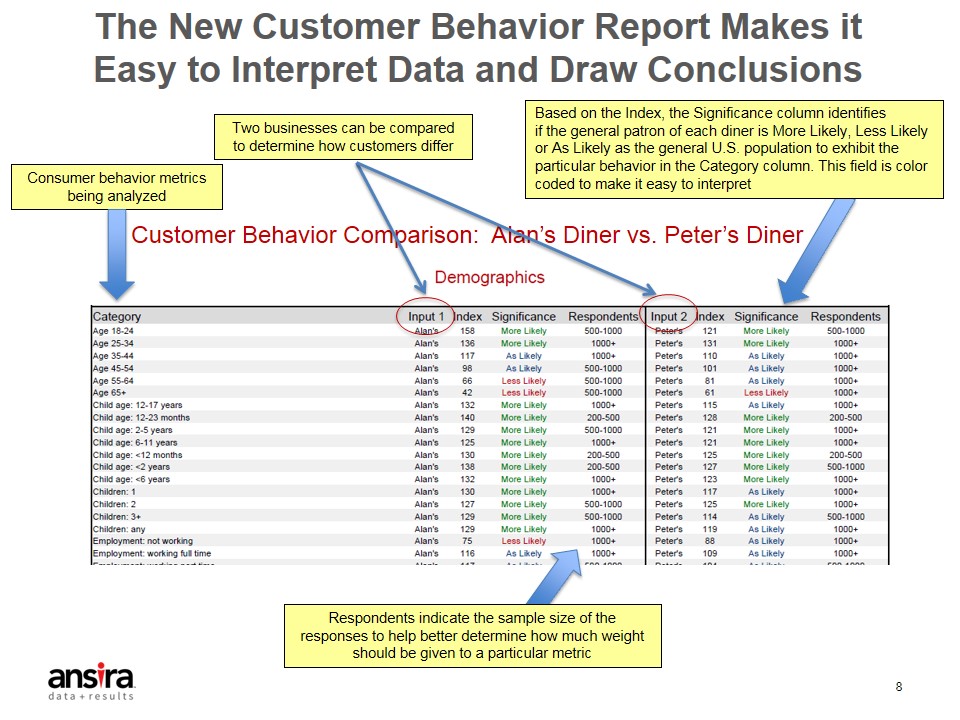

Company: B.I. Spatial

Awards Category: Best Use of Spatial

With Spatial in our company name, we use spatial analytics every day. We use spatial analytics to better understand consumer behavior, especially relative to the retail stores, restaurants and banks consumers use. We are avid proponents and users of customer segmentation, and we rely on Experian's Mosaic within ConsumerView. In the last two years we have invested heavily in understanding the appropriate use of mobile device location data. We help our clients use mobile data to better understand their customers as well as their competitors' customers and trade areas.

Describe the problem you needed to solve

Among retail, restaurant and financial services location analysts, one of the hottest topics is using mobile device location data as a surrogate for customer intercept studies. The beauty of this data, when used properly, is that it provides incredible insight. We can define home and work trade areas, differentiate between a shopping center’s trade areas and those of its anchors, understand shopping preferences, identify positive co-tenancies and perform customer segmentation studies.

The problem, or opportunity, we wanted to solve was to:

1. Develop a process that would allow us to clean/analyze each mobile device’s spatial data in order to determine its most probable home location

2. Build a new, programmatic trade area methodology that would best represent the mall/shopping center visitors’ distribution

3. Easily deliver the trade areas and their demographic attributes

And it had to scale. You see, our company entered into a partnership with UberMedia and the Directory of Major Malls to develop residence-based trade areas for every mall and shopping center in the United States and Canada – about 8,000 locations. We needed to get from 100 billion rows of raw data to 8,000 trade areas.

Describe the working solution

Before I get into the details, I’d like to thank Alteryx for bringing Paul DePodesta back as a keynote speaker at Inspire this year. Paul spoke at a previous Inspire, and his advice to keep a journal was critical to the success of this project. I kept track of CPU and memory usage as I worked to make each workflow as efficient as possible. Thanks for the advice, Paul.

Using only Alteryx Spatial, we were able to accomplish our goal. Without giving away the secret sauce, here’s what we did. We divided the task into three parts which I will describe below.

1. Data Hygiene and Analysis (8 workflows for each state and province) – The goal of this portion was to identify the most likely home location for each unique device. It is important to note that the raw data is fraught with bad data, including common device identifiers, false location data and location points that could not be a home location. To clean the data, nearly all of the 100 billion rows of data were touched dozens of times. Here are some of the details.

a. Common Device Identifiers

i. The Summarize tool was used to identify those common device IDs, which were then excluded with a Filter tool

ii. Devices with improper lengths were also removed using the Filter tool

b. False Location Data – every now and again there is a lat/long with an inexplicably high number of devices (think tens or hundreds of thousands). These points were eliminated using algorithms built with the Create Points, Summarize and Formula tools, coupled with spatial filtering.

c. Couldn’t be a Home Location – For a point to be considered as a likely home location, it had to be within a populated Census Block and not within other spatial features. We downloaded the Census Blocks from the Census and, utilizing the TomTom data included within Alteryx Spatial, built a series of spatial filter files for each US state and Canadian province. To build the spatial filters (one macro with 60+ tools), we used the following spatial tools:

i. Create Points

ii. Trade Area

iii. Buffer

iv. Spatial Match

v. Distance

vi. Spatial Process Cut

vii. Summarize - SpatialObj Combine

Once the filters were built all of the data was passed through the filters, yielding only those points that could possibly be a home location.
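The entry deliberately keeps its exact rules private, so the following Python sketch is only a hypothetical illustration of the kinds of filters described in a, b and c above, not B.I. Spatial's method. The thresholds, the 36-character ID length and the rounding of coordinates to five decimal places are assumptions; observations are assumed to be `(device_id, lat, lon, timestamp)` tuples, and polygons are lists of `(lon, lat)` vertices.

```python
from collections import Counter, defaultdict


def clean_observations(obs, id_length=36, max_obs_per_id=10_000,
                       max_devices_per_point=1_000, precision=5):
    """Drop common device IDs, malformed IDs and 'false' lat/longs.

    obs: list of (device_id, lat, lon, timestamp) tuples.
    All thresholds are hypothetical defaults.
    """
    # a.i: summarize observation counts per ID; implausibly busy IDs are "common" IDs.
    obs_per_id = Counter(o[0] for o in obs)
    common_ids = {d for d, n in obs_per_id.items() if n > max_obs_per_id}

    # b: summarize distinct devices per (rounded) point; flag hotspot lat/longs.
    devices_per_point = defaultdict(set)
    for device_id, lat, lon, _ in obs:
        devices_per_point[(round(lat, precision), round(lon, precision))].add(device_id)
    false_points = {p for p, ids in devices_per_point.items()
                    if len(ids) > max_devices_per_point}

    # Filter: keep rows that pass every check (a.ii adds the ID-length test).
    return [o for o in obs
            if o[0] not in common_ids
            and len(o[0]) == id_length
            and (round(o[1], precision), round(o[2], precision)) not in false_points]


def point_in_polygon(x, y, polygon):
    """Ray-casting test: is (x, y) inside the polygon [(x1, y1), (x2, y2), ...]?"""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def could_be_home(lat, lon, populated_blocks, excluded_features):
    """c: inside some populated block and outside every excluded feature polygon."""
    return (any(point_in_polygon(lon, lat, b) for b in populated_blocks)
            and not any(point_in_polygon(lon, lat, f) for f in excluded_features))
```

At 100 billion rows, a production pipeline would use spatial indexes rather than a brute-force polygon loop; the sketch only shows the filtering logic.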

Typically, there are over one thousand observations per device, so even after the filtering there was work left to be done. We built a series of workflows that took advantage of the Calgary tools so that we could analyze each device individually. Since every device record was timestamped, our workflows were able to identify clusters of activity over time and calculate the most likely home location. Tools critical to this process included:

- Sort

- Tile

- Multi-row Formula

- Calgary Join and Input

- Formula

- Create Points

- Trade Area

- Distance

The Hygiene portion of this process reduced 100 billion rows of raw data to about 45 million likely home locations.
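Because the home-location logic is the "secret sauce" the entry does not disclose, the Python sketch below shows one simple, commonly used heuristic instead, explicitly not the authors' method. It keeps only night-time observations (the hour window is an assumption), snaps them to a grid by rounding coordinates (three decimals is roughly 100 m of latitude), and picks the cell observed on the most distinct dates. Sorting by device and time mirrors the Sort step, and grouping per device plays the role the Calgary tools play in the workflow.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical definition of "night": 22:00 to 05:59.
NIGHT_HOURS = set(range(0, 6)) | {22, 23}


def likely_home(observations, precision=3):
    """observations: list of (lat, lon, datetime) for one device.

    Returns the mean (lat, lon) of the night-time grid cell seen on the most
    distinct dates, or None if the device has no night-time observations.
    """
    cells = defaultdict(list)
    for lat, lon, ts in observations:
        if ts.hour in NIGHT_HOURS:
            cells[(round(lat, precision), round(lon, precision))].append((lat, lon, ts.date()))
    if not cells:
        return None
    # Score cells by distinct dates observed, breaking ties by observation count.
    best = max(cells.values(), key=lambda pts: (len({d for _, _, d in pts}), len(pts)))
    return (sum(p[0] for p in best) / len(best), sum(p[1] for p in best) / len(best))


def home_locations(obs):
    """obs: (device_id, lat, lon, datetime) tuples -> {device_id: (lat, lon)}."""
    by_device = defaultdict(list)
    for device_id, lat, lon, ts in sorted(obs, key=lambda o: (o[0], o[3])):
        by_device[device_id].append((lat, lon, ts))
    homes = {}
    for device_id, pts in by_device.items():
        home = likely_home(pts)
        if home is not None:
            homes[device_id] = home
    return homes
```

Counting distinct dates rather than raw pings keeps a single long evening at a friend's house from outweighing a place the device returns to night after night.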

2. Trade Area Delineation (4 workflows/macros for each mall and shopping center, run iteratively until the capture rate was achieved) – We didn’t want to manually delineate thousands of trade areas; we wanted a consistent, programmatic methodology that could be run within Alteryx. In short, we wanted the trade area method to produce polygons that depicted concentrations of visitors without including areas that didn’t contribute. We also didn’t want to predefine the extent of the trade areas (e.g., a 20-minute drive time). We wanted the data to drive the result. This is what we did.

a. Devised a Nearest Neighbor Methodology and embedded it within a Trade Area Macro – Creates a trade area based on each visitor’s proximity to other visitors. Tools used in this Macro include:

i. Calgary

ii. Calgary Join

iii. Distance

iv. Sort

v. Running Total

vi. Filter

vii. Find Nearest

viii. Tile

ix. Summarize – SpatialObj Combine

x. Poly-Split

xi. Buffer

xii. Smooth

xiii. Spatial Match

b. Nest the Trade Area Macro within an Iterative Macro – Placing the Trade Area Macro within an Iterative Macro allows Alteryx to run the Trade Area Macro under multiple scenarios until the trade area capture rate is achieved

c. Nest the Iterative Macro within a Batch Macro – Nesting the Iterative Macro within the Batch Macro allows us to run an entire state at once

The resultant trade areas do a great job of depicting where the visitors live. Although rings and drive times are great tools, especially when considering new sites, trade areas based on behavior are superior. For the shopping center below, a ring would have included areas with low visitor concentrations, but high populations.
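The nearest-neighbor methodology itself is not published, so the following Python sketch is a hypothetical stand-in that only illustrates the loop structure. Each visitor's distance to its k-th nearest fellow visitor measures local concentration, and, like the Iterative Macro, the neighbor radius is widened step by step until the target capture rate is met. It assumes home points are already projected into planar coordinates (for example kilometers), uses a brute-force O(n²) distance calculation, and omits the polygon steps (Buffer, Smooth, Poly-Split and so on). The parameter names and defaults are assumptions.

```python
import math


def kth_neighbor_distance(points, k):
    """For each point, the distance to its k-th nearest other point (brute force)."""
    dists = []
    for i, (x1, y1) in enumerate(points):
        others = sorted(math.hypot(x1 - x2, y1 - y2)
                        for j, (x2, y2) in enumerate(points) if j != i)
        dists.append(others[k - 1] if len(others) >= k else math.inf)
    return dists


def grow_trade_area(points, capture_rate=0.7, k=3, start_radius=1.0,
                    step=1.0, max_iter=100):
    """Widen the neighbor radius until at least capture_rate of visitors qualify.

    A visitor qualifies when its k-th nearest neighbor lies within the radius,
    i.e. it sits in a concentration of visitors. Returns (radius, included points).
    """
    kth = kth_neighbor_distance(points, k)
    radius = start_radius
    for _ in range(max_iter):  # each pass is one "iteration" of the macro
        included = [p for p, d in zip(points, kth) if d <= radius]
        if len(included) >= capture_rate * len(points):
            return radius, included
        radius += step
    raise RuntimeError("capture rate not reached within max_iter iterations")
```

Isolated visitors far from any others only join the trade area once the radius becomes large, so a moderate capture rate naturally leaves out areas that contribute few visitors.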

3. Trade Area Attribute Collection and Preparation (15 workflows) – Not everyone in business has mapping software, but many are using Tableau. We decided that we could broaden our audience if we simply made our trade areas available within Tableau.

Using Alteryx, we were able to easily export our trade areas for Tableau.

We also built ZIP Code maps.

For our clients that use Experian’s Mosaic or PopStats demographics, Alteryx allows us to attach the trade area attributes.

Describe the benefits you have achieved

The benefits we have achieved are incredible.

The impact to our business is that both our client list and industry coverage have more than doubled without having to add headcount. By year end, we expect our clients’ combined annual sales to top $250 billion. Our own revenues are on pace to triple.

Our clients are abandoning older customer intercept methods and depending on us.

Operationally, we have repeatable processes that are lightning fast. We can now produce a store or shopping center’s trade area in minutes. Our new trade area methodology has been very well received and is in demand.

Personally, Alteryx has allowed me to harness my nearly 30 years of spatial experience and create repeatable processes and to continually learn and get better. It’s fun to be peaking almost 30 years into my career.

Since we have gone to market with the retail trade area product we have heard “beautiful”, “brilliant” and “makes perfect sense.” Everyone loves a pat on the back, but, what we really like hearing is “So, what’s Alteryx?” and “Can we get pricing?”

- 2016 Entries
- Best Use of Spatial Analytics
- Business Intelligence

Awards Category: Best 'Alteryx For Good' Story

On December 1st, 2015, which was "Giving Tuesday", a global day dedicated to giving back, B2E Direct Marketing announced a newly created grant program for 2016 called 'Big Data for Non-Profits'. B2E Direct Marketing is a business offering Big Data, Visual Business Intelligence and Database Marketing solutions.

Non-profit organizations are a crucial part of our society, providing help to the needy, education for a lifetime, social interactions and funds for good causes.

Describe the problem you needed to solve

While serving on three non-profit boards, Keith Snow, President of B2E, became aware that data is among the most important, under-used and least maintained assets of a non-profit.

The grant program includes the following services free of charge to the winning organization in the month for which they are selected:

- Data Hygiene (clean up donor file)

- Data Append (age, income, gender, marital status, lifestyle segmentation, and more)

- Detailed donor analysis and overview reports

Each month in 2016, B2E will choose one non-profit from those that apply through www.nonprofit360marketing.com. Applications are reviewed by a panel selected by B2E, and awards are given based on how the services will be used to further the organization's goals. The grant program began accepting applications from eligible 501(c)(3) non-profits at the end of December and has completed work for three organizations so far this year.

"We are excited about using Alteryx to help non-profits expand their mission and to better serve our communities," says Snow.

Describe the working solution

B2E holds an initial consultation meeting with each non-profit, where the goals and takeaways of the 'Big Data for Non-Profits' program are discussed.

We identify the data sources the non-profit currently has available and request up to 48 months of donor contact and giving information. Only minimal information is requested from the non-profit, because we know Alteryx Designer can add great value:

- Name

- Address, City, State, Zip

- Phone

- Date of Donation

- Amount of Donation

- Campaign

- Donation type (e.g., cash, check, soft credit)

B2E has created Alteryx workflows to perform donor file hygiene. Because we have licensed the data package, we take advantage of the CASS, Zip4 Coder, Experian geodemographic append and TomTom capabilities.

All donor addresses are standardized to meet postal standards, and duplicates within the donor database are identified. Once the data meets our standards, we process the files against the National Change of Address (NCOA) database and the National Deceased database.
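Real address standardization relies on USPS-certified processing such as the CASS capability mentioned above, so the Python sketch below is only a simplified, hypothetical illustration of the duplicate-identification idea. It normalizes names and addresses into a match key (uppercasing, stripping punctuation, abbreviating a small assumed subset of street suffixes, truncating ZIP+4 to five digits) and groups records that share a key. The record fields `name`, `address` and `zip` are assumptions.

```python
import re
from collections import defaultdict

# Small, hypothetical subset of street-suffix abbreviations.
SUFFIXES = {"STREET": "ST", "AVENUE": "AVE", "ROAD": "RD",
            "DRIVE": "DR", "BOULEVARD": "BLVD", "LANE": "LN"}


def _clean(text):
    """Uppercase, replace punctuation with spaces and split into words."""
    return re.sub(r"[^\w\s]", " ", text.upper()).split()


def normalize_address(address):
    return " ".join(SUFFIXES.get(w, w) for w in _clean(address))


def donor_key(donor):
    """Match key: normalized name, normalized address and 5-digit ZIP."""
    return (" ".join(_clean(donor["name"])),
            normalize_address(donor["address"]),
            donor["zip"][:5])


def find_duplicates(donors):
    """Return lists of record indexes that share the same match key."""
    groups = defaultdict(list)
    for idx, donor in enumerate(donors):
        groups[donor_key(donor)].append(idx)
    return [ids for ids in groups.values() if len(ids) > 1]


donors = [
    {"name": "Jane Doe", "address": "12 Main Street", "zip": "50309-1234"},
    {"name": "JANE DOE", "address": "12 Main St.", "zip": "50309"},
    {"name": "John Roe", "address": "4 Oak Avenue", "zip": "50310"},
]
duplicate_groups = find_duplicates(donors)  # records 0 and 1 share a key
```

Exact-key matching like this only catches duplicates that normalize identically; fuzzy matching on names would be a further step.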

The next step is taking the donor's contact information and appending demographics at the individual and household level (age, income, gender, marital status, age of home, Mosaic segmentation, etc.) using the Alteryx Experian add-on product. Alteryx Designer is invaluable for this process, as we manipulate the donor data to make it more useful for the non-profit.

a. Consumer demographics

b. Mosaic marketing segmentation

c. Campaign or donation source

d. Donation seasonality / giving analysis

e. Pareto (80/20 Rule): to identify and profile the 20% of the donors who contribute 80% of the revenue

f. Geography (city, zip, county, metro area)
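The Pareto profile in item e can be sketched in a few lines of Python: total each donor's gifts, rank donors by total, and take the smallest top group whose cumulative giving reaches the chosen share of revenue. This is a hypothetical illustration, not B2E's workflow; the `(donor_id, amount)` record shape and the function names are assumptions, and the resulting split will not necessarily be exactly 80/20.

```python
from collections import defaultdict


def totals_by_donor(donations):
    """donations: iterable of (donor_id, amount) -> {donor_id: total given}."""
    totals = defaultdict(float)
    for donor_id, amount in donations:
        totals[donor_id] += amount
    return dict(totals)


def pareto_donors(totals, revenue_share=0.8):
    """Return (top donor IDs, share of all donors) covering revenue_share of revenue."""
    if not totals:
        return [], 0.0
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
    target = revenue_share * sum(totals.values())
    running, top = 0.0, []
    for donor_id, amount in ranked:
        if running >= target:  # enough revenue already covered
            break
        top.append(donor_id)
        running += amount
    return top, len(top) / len(totals)
```

The returned donor IDs can then be joined back to the appended demographics to profile the top donors.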

Once the data is in the Tableau Extract, business intelligence analysis is performed with visualization that is easy to understand and immediately actionable by the non-profit. Tableau packaged workbooks are created for each non-profit so they have access to interactive analytics to help them make quick and immediate business decisions for their organization.

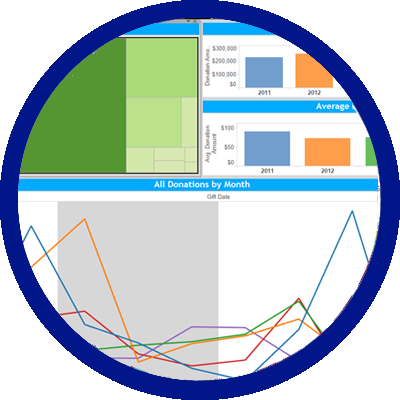

Describe the benefits you have achieved

B2E provides a niche service that many non-profits do not have the knowledge, tools or budget to complete on their own.

The benefits to each non-profit include the following:

- The donor data from each non-profit can now be processed in days instead of weeks using Alteryx, which allows B2E to help more organizations. In the past, we only worked with one non-profit per year. Our 2016 goal is to work with twelve.

- A clean donor contact file, with updated addresses, deceased individuals flagged and duplicates merged, is returned to the organization. Many non-profits send out direct mail; those that do immediately see their deliverability rates increase by more than 15% and their return mail rates decrease. Printing and postage costs are optimized as well.

- The best way to get your current donors to give more is to truly understand what they look like. Understanding a donor's life stage, giving history, demographics, lifestyle characteristics, media preferences and digital behavior is key to success. Targeting donors in a way that resonates with them has led to an increase in giving.

- All non-profits want access to new donors. A profile identifies what the best donor characteristics look like. Since B2E can also acquire direct mail and email lists, we help the non-profit find "look-alike" individuals who have never donated to their organization.

- B2E's goal is to help each non-profit maximize the donations coming into their organization so they can keep their expenses and overhead lower, and to offer them a free service they would not otherwise have acquired.

The impact on each non-profit is huge, but the impact on B2E is just as great, as we are able to use a great tool to be a leader in Iowa as a company that truly gives back to our community all year long. As of April 2016, we have provided services for:

- Big Brothers Big Sisters of Iowa