Getting Audio Features From the Spotify API

This article originally appeared on the Information Lab blog. Re-posted with permission.

Recently, Hanna Nykowska wrote an excellent blog showcasing how we can use Alteryx to authenticate against the Spotify API, which itself opens up endless possibilities of fun.

In this blog I’m going to highlight how we can use Alteryx to get audio features for a series of my favourite tracks (you will probably be questioning my music taste by the end, but hey ho!).

So What Are ‘Audio Features’?

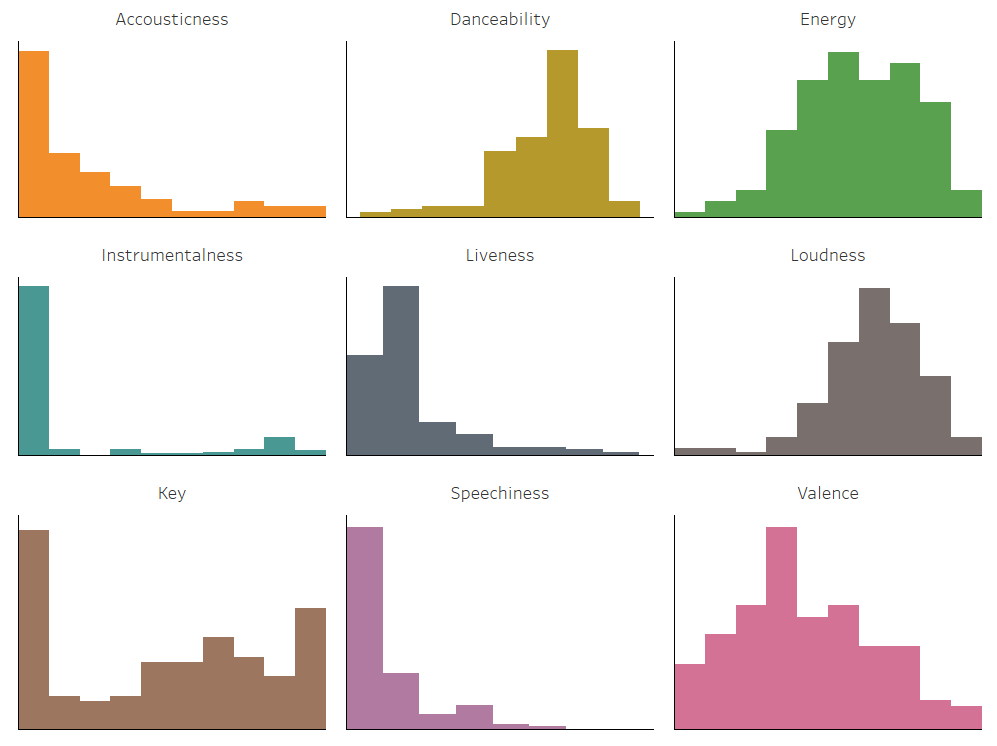

Audio Features is the term assigned to a range of quantitative metrics that are believed to create a profile of a song that is relatable and relevant; for example the metric ‘Danceability’ is supposed to give an indication, through analysing aspects such as tempo, rhythm and beat strength, of how suitable a song is for dancing.

Spotify uses these audio features, along with additional metrics about us as individuals, to help generate the content that is recommended to us via curated playlists; I find this super interesting.

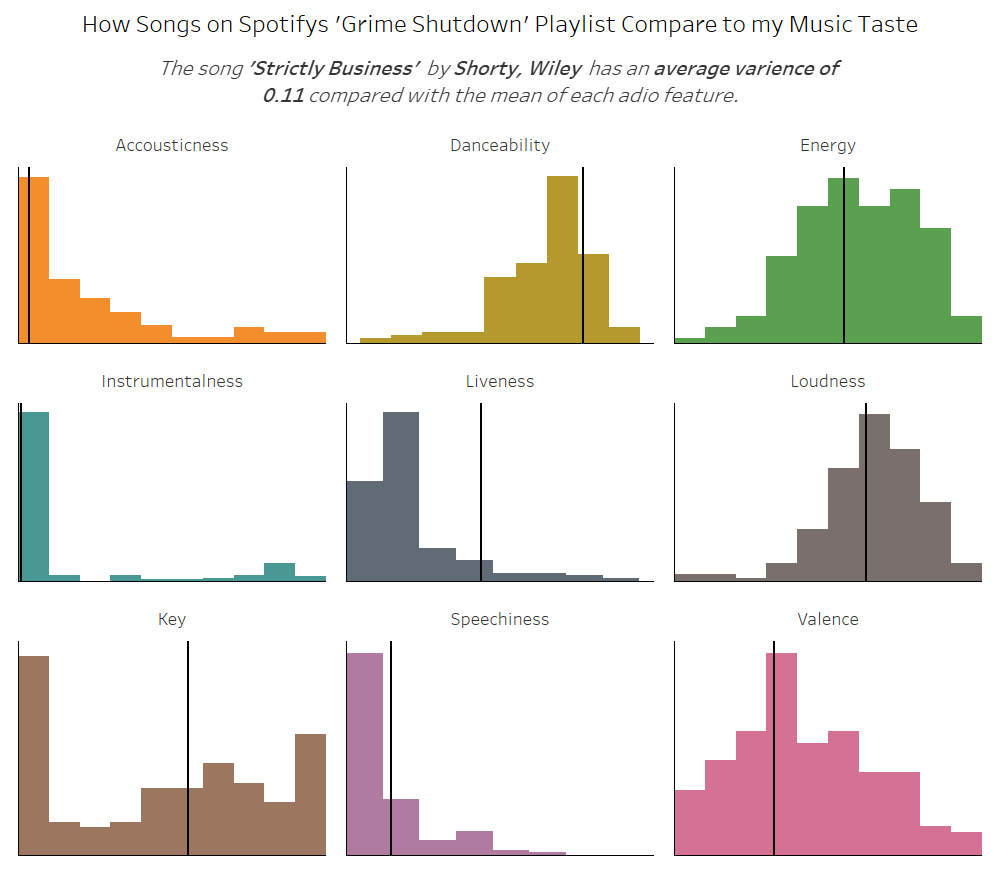

In the image above I have created a profile of all of my favourited/liked/loved music against all of these audio features, and you can instantly see how this data may be useful to a company (Spotify) whose best interest it is to recommend music that I may like, in order to keep me as a paying customer.

Note, in the image above, the audio features ‘Mode’, ‘Tempo’ and ‘Time Signature’ are not in the view, but they are available values and are just as important as the metrics shown in the visualisation above.

For example, I clearly do not like loud music. I’m not a rock fan at all, and I’d be seriously questioning my subscription if they ever suggested songs of that genre to me!

Of course, as mentioned, other attributes are important here, but these attributes are key to understanding my music taste.

‘Authorization’

As mentioned at the beginning of this blog, Spotify requires us to authenticate against their API before we actually go and get any data. This makes total sense, as it lets them control the amount of data we are retrieving from their API, and also tell us off should we begin to abuse our access rights.

I pointed you earlier to the blog of my colleague Hanna. In the workflow that we will create in this blog, we will utilise the macro that she has created, which provides an output field titled ‘Authorization’ containing an authentication token we can use in our requests.

Getting Track IDs

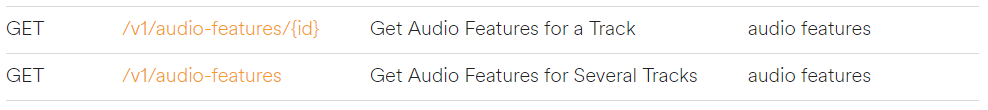

In order to retrieve (or GET) audio feature information we can take one of two routes: we can query one track at a time, or we can use an end point that allows us to query multiple tracks at the same time. Given that we are likely to want audio information for multiple tracks at once, this is the method that I will demonstrate. It’s also worth noting that you can get a single track from the ‘several tracks’ end point.

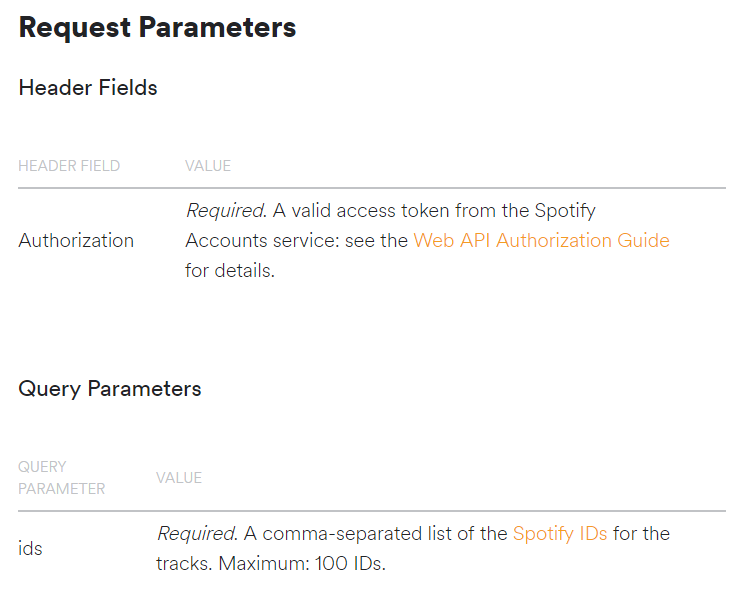

When reading the documentation for the end point, we can see that two parameters are needed for the request. The first is a header parameter, which is our authorization field generated via Hanna’s macro. The second is a ‘query parameter’, and the documentation shows that we need a comma-separated list of Spotify Track IDs, with a maximum of 100 tracks per request.

We can get the track IDs relevant to us using an array of methods. We can use the API to get all track IDs on a specific playlist for instance, or use the search end-point to try and find the relevant track IDs too. Alternatively, we can use third-party applications to get all track IDs from our playlists and favourite songs.

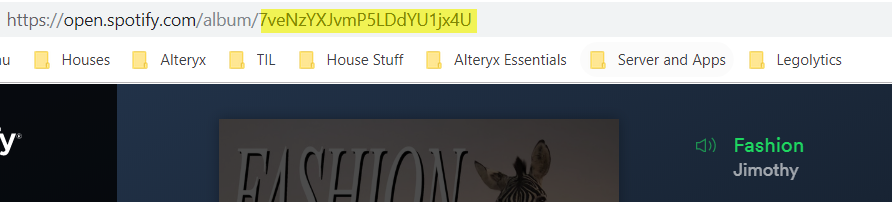

If you are really patient, you can search for all the songs you wish to get these attributes for via your web browser, and copy the track ID from the web URL.

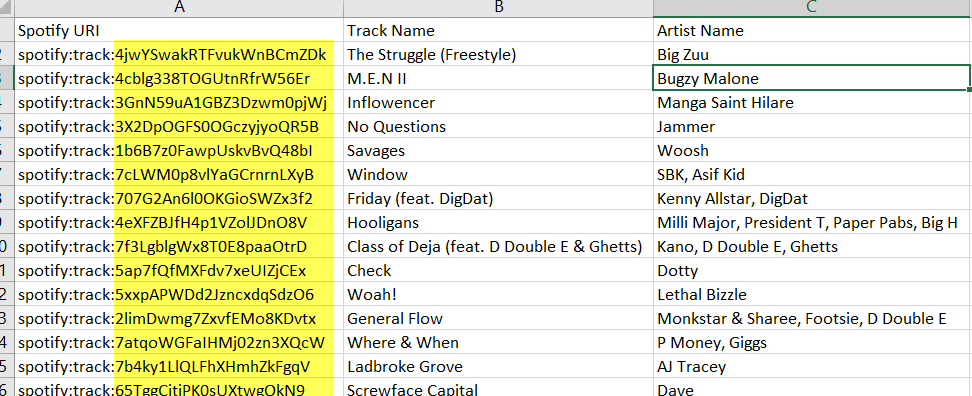

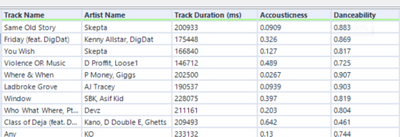

In this case, I used this third-party application to get songs from the ‘Grime Shutdown’ playlist which I follow. This gives me a data source that looks something like this, with the track ID easy to pull out from the ‘SpotifyURI’ field by using either the Text to Columns or RegEx tools.
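For anyone who wants to see the extraction outside of Alteryx, here is a minimal Python sketch. It assumes the ‘SpotifyURI’ field holds values of the form `spotify:track:<id>`; the function name and the sample URI are made up for illustration and are not taken from the playlist data.

```python
import re

# Assumed shape of the 'SpotifyURI' field: "spotify:track:<id>".
TRACK_URI = re.compile(r"^spotify:track:([A-Za-z0-9]+)$")


def track_id_from_uri(uri):
    """Return the track ID from a Spotify track URI, or None if it does not match."""
    match = TRACK_URI.match(uri.strip())
    return match.group(1) if match else None


# Hypothetical sample value, used only to show the call.
print(track_id_from_uri("spotify:track:0123456789abcdefghijkl"))
```

Anything that is not a track URI (an album or playlist URI, say) comes back as `None`, which makes it easy to filter out before building the request.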

Analysing Our Tracks (or Getting Our Audio Features)

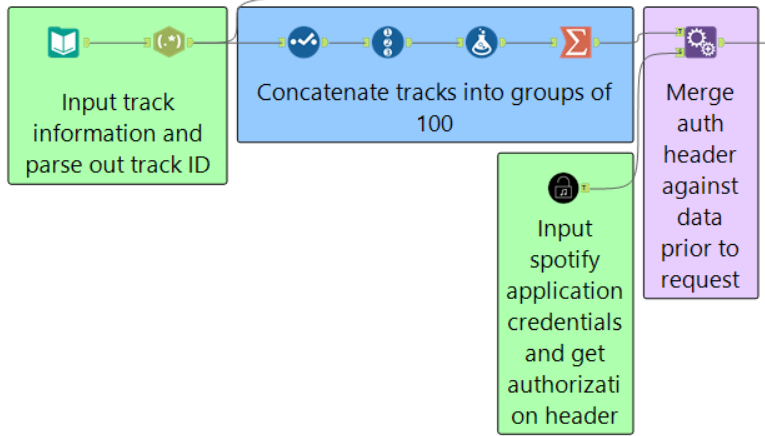

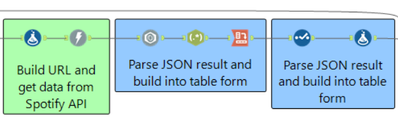

Now that we have both our authorization token and our track IDs, let’s cook up some magic.

First things first, we need to bring our Track IDs into the comma-separated format required by the end point. This is very easily done by using the Summarize tool. HOWEVER, the API mentions that it will only accept a maximum of 100 track IDs per query. So I’ll use a little trick to build our track IDs into chunks of 100:

- Create a RecordID (using the RecordID tool), for each track, which should start at 1.

- Add a formula and create a column, say ‘Group’, with the formula

FLOOR(([RecordID]-1)/100)

Subtracting 1 before dividing means the first track lands in Group 0; the floor then rounds our division down, so any track with a RecordID between 1 and 100 will be given the Group 0, between 101 and 200, Group 1, etc, etc. We can then include this Group field as a Group By clause in our Summarize tool.
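The same chunking idea can be sketched in Python. This is only an illustration under assumptions: the function name and sample IDs are made up, and the record ID is shifted by one before the integer division so that records 1 to 100 share a group.

```python
def chunk_track_ids(track_ids, size=100):
    """Group IDs into comma-separated strings of at most `size` IDs each."""
    groups = {}
    # Record IDs start at 1, mirroring the RecordID tool.
    for record_id, track_id in enumerate(track_ids, start=1):
        group = (record_id - 1) // size  # records 1-100 -> 0, 101-200 -> 1, ...
        groups.setdefault(group, []).append(track_id)
    # Concatenate each group, as the Summarize tool would with a Group By.
    return [",".join(ids) for _, ids in sorted(groups.items())]


# Hypothetical IDs: 250 tracks should give groups of 100, 100 and 50.
chunks = chunk_track_ids([f"id{i}" for i in range(1, 251)])
print([len(chunk.split(",")) for chunk in chunks])
```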

Once we have done this, we also need to bring our Authorization field against our request(s), which can be done with an Append Fields tool.

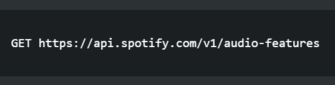

The final thing we need to do before bringing our Download tool onto the canvas and getting our data is to build out the URL which we want to make the request against. In this case, the URL is clearly documented on the right side panel.

However, it’s not just this. As mentioned before, when we are getting a series of tracks, rather than a single track, we do this by using a ‘query parameter’. Parameters of this type are added to the end of the end point URL; the initial query parameter is separated from the end point URL by a question mark (?), and any further parameters by an ampersand (&), so…

https://api.service.com/v1/endpoint?queryparameterone=queryvalueone&queryparametertwo=queryvaluetwo

In our case, we only have a single parameter, which is our concatenated list of Track IDs, so our URL will look something like…

https://api.spotify.com/v1/audio-features?ids=TrackID1,TrackID2,TrackIDN…

Which can be easily generated via a formula tool in Alteryx with a statement like…

"https://api.spotify.com/v1/audio-features?ids=" + [IDs]

Where the field [IDs] is the field containing our concatenated track IDs.
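As a rough Python equivalent of that formula, the sketch below builds the query string with the standard library. The function name and the placeholder IDs are assumptions for illustration; `safe=","` is passed so that the commas between track IDs are left as they are rather than percent-encoded.

```python
from urllib.parse import urlencode

AUDIO_FEATURES_ENDPOINT = "https://api.spotify.com/v1/audio-features"


def audio_features_url(ids):
    """Attach a comma-separated list of track IDs as the 'ids' query parameter."""
    # The first (and here only) parameter follows a '?'; further ones would be joined with '&'.
    return AUDIO_FEATURES_ENDPOINT + "?" + urlencode({"ids": ids}, safe=",")


print(audio_features_url("TrackID1,TrackID2"))
# https://api.spotify.com/v1/audio-features?ids=TrackID1,TrackID2
```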

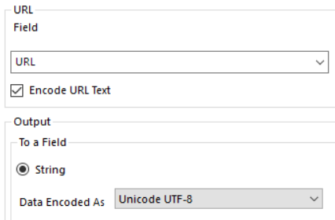

Now we can plug our Download tool into the workflow, and it doesn’t take too much configuration (we’ve already done the hard stuff getting the authorization header and building our query URL).

In the first tab of our Download tool, we should specify the field which contains the URL that we have just created; we also want to download the data to a field as a string, so that we can parse the result immediately in this workflow.

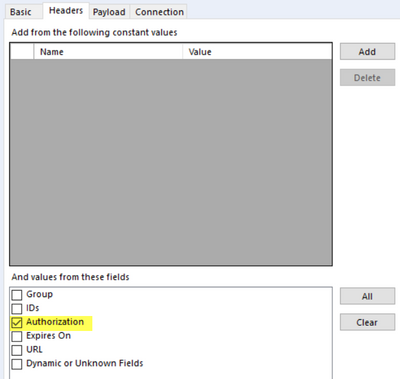

The only other tab where we must change the default configuration is the header tab. Here we should select the ‘Authorization’ field which is generated as part of Hanna’s macro; this authenticates our query and allows us to get our data.

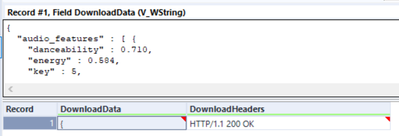

We can now run our workflow, and hopefully we will get the response ‘HTTP/1.1 200 OK’ in the DownloadHeaders field, which is what we want. Whilst the ‘DownloadData’ field looks empty, it does in fact contain data in a JSON format, which we can now begin to parse to build our data table.
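For comparison, the Download tool configuration described above corresponds roughly to the Python sketch below. It only prepares the request and does not send it. The token is a placeholder, and the assumption that the ‘Authorization’ value takes the form `Bearer <token>` is mine rather than something shown in this blog.

```python
from urllib.request import Request


def build_request(url, authorization):
    """Prepare (but do not send) a GET request carrying the Authorization header."""
    return Request(url, headers={"Authorization": authorization}, method="GET")


request = build_request(
    "https://api.spotify.com/v1/audio-features?ids=TrackID1,TrackID2",
    "Bearer PLACEHOLDER_TOKEN",  # placeholder, not a real token
)
print(request.get_method(), request.full_url)
print(request.get_header("Authorization"))
```

Sending it would be a matter of passing `request` to `urllib.request.urlopen` and decoding the body as a string, which plays the role of the ‘DownloadData’ field.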

Creating a Structured Data Table

There are a huge number of resources on the Alteryx Community which highlight the process for bringing JSON data into a nicely formatted table, and the process is always very similar (though never exactly the same).

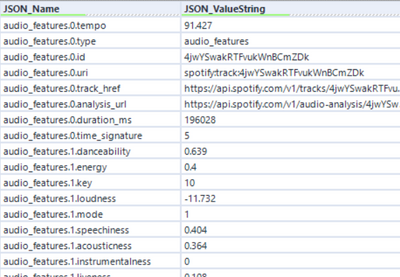

We start with a JSON Parse tool, parsing our ‘DownloadData’ column, which brings our data into a longggggggggggggggggggggggggggggggg format.

The parsed data is formatted in a way in which the JSON_Name field contains the ‘Row ID’ if you will, which is the numeric value in the middle of our string, whilst all text to the right of the final full stop represents a field name.

We can parse this out using one of two methods, either via RegEx, or via the ‘Text to Columns’ tool. In this case, I will go the RegEx route. The image below shows how the configuration panel can be set up to extract our two pieces of information from our JSON_Name column.

The statement .+\.(.+)\.(.+) essentially means ignore everything up to the 1st full stop, then return everything between the 1st and 2nd full stop as one field, and return everything after the 2nd full stop in a second field (marked by the brackets).
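To see the expression at work outside Alteryx, here is a small Python sketch using the same pattern. The sample JSON_Name value is an assumption about what the parsed names look like (a prefix, a row number, then a field name), and the function name is made up.

```python
import re

# Same expression as in the RegEx tool: two capture groups split by full stops.
JSON_NAME = re.compile(r".+\.(.+)\.(.+)")


def split_json_name(json_name):
    """Return (row_id, field_name) from a JSON_Name value, or None if it does not match."""
    match = JSON_NAME.fullmatch(json_name)
    return match.groups() if match else None


# Assumed example of a parsed name.
print(split_json_name("audio_features.0.danceability"))
# ('0', 'danceability')
```

Names with fewer than two full stops do not match and return `None`, so they can be filtered out before the cross-tab.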

The final step is to use the cross-tab tool to bring our data into our table structure. In this case, our group by field should be the ‘Row ID’, whilst our new column headers are of course in our created ‘Field Name’ column. Finally our values are in our ‘JSON_ValueString’ field.

The one further group by field I would add in the cross-tab is the URL. This is a safety measure in case you are making requests for more than 100 tracks; if you do not check this option, then track 1 from your 1st group of 100 and track 1 from your 2nd group of 100 will be merged into a single line.
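The effect of grouping by both the URL and the ‘Row ID’ can be sketched in Python as a simple pivot. The function name, URLs and values below are invented purely for illustration.

```python
from collections import defaultdict


def cross_tab(rows):
    """Pivot (url, row_id, field_name, value) records into one dict per track."""
    table = defaultdict(dict)
    for url, row_id, field_name, value in rows:
        # Keying on (url, row_id) keeps row 0 of each request separate.
        table[(url, row_id)][field_name] = value
    return dict(table)


# Made-up rows: two requests, each with a track at Row ID 0.
rows = [
    ("request_a", "0", "danceability", "0.71"),
    ("request_a", "0", "energy", "0.83"),
    ("request_b", "0", "danceability", "0.42"),
]
for key, fields in cross_tab(rows).items():
    print(key, fields)
```

Keying on the ‘Row ID’ alone would collapse the two `"0"` rows into one, which is exactly the merge described above.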

We can then just add a select tool to rename the attributes and remove fields should we wish. You can use the reference guide to dictate the data types you should use for each of the fields. It’s worth noting that the ‘Key’ and ‘Mode’ fields use numeric references which need to be overwritten by their true values (again detailed in the reference guide), which is what I have done in the formula tool to the very right of the view above.
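As an illustration of that overwrite, the Python sketch below maps the numeric references to labels. It follows the pitch class numbering in the reference guide (0 is C, 1 is C♯/D♭, and so on, with -1 meaning no key was detected) and treats mode 1 as major and 0 as minor; the label spellings and the function name are my own choices.

```python
# Pitch classes in order, so the list index is the numeric 'Key' value.
PITCH_CLASSES = ["C", "C#/Db", "D", "D#/Eb", "E", "F",
                 "F#/Gb", "G", "G#/Ab", "A", "A#/Bb", "B"]
MODES = {0: "Minor", 1: "Major"}


def describe_key(key, mode):
    """Translate numeric key and mode values into readable labels."""
    # A key of -1 (or anything else out of range) is reported as 'Unknown'.
    key_name = PITCH_CLASSES[key] if 0 <= key < len(PITCH_CLASSES) else "Unknown"
    mode_name = MODES.get(mode, "Unknown")
    return key_name, mode_name


print(describe_key(9, 0))
# ('A', 'Minor')
```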

The one mild annoyance is that you may have to make another call using the ‘Get Several Tracks’ end point to go and get information about the actual track name, and the artists of the track, which of course provide useful context. In this case, the data table that we downloaded from our third party site already contains this information, so we can simply join back against this dataset to pull through these attributes.

And now we have a nice structured table which we can use for those quantitative self projects!

In the example below I have layered the data from the ‘Grime Shutdown’ playlist tracks over the profile of audio features for songs in my Library, which allows me to understand tracks on this playlist that I am most likely going to enjoy!

The workflow used in this example can be found here, and I have also created a macro which will take a field containing a list of track IDs, and an authentication token, and return a nice structured data table with our audio features (so essentially you’ve just read a ‘how to’ blog for no reason!).

Ben
