Part I of this series explains how I set up the experiment to emulate Dorian's original tests. Now on to the results...

Experiment 1: Complex Joins

The first experiment took the data samples and attempted to answer the following business question:

“I want to see Passenger Cars whose make is either Mercedes-Benz or BMW and where the type is Hatchback”

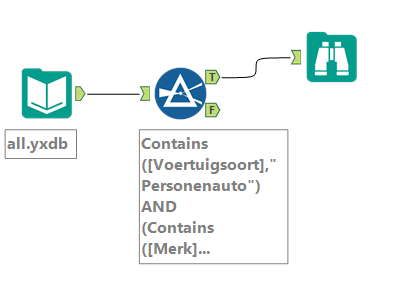

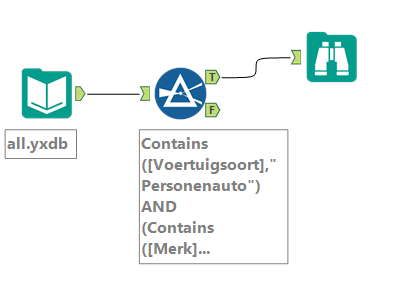

The general structure of the query will look as follows:

select * from df where Voertuigsoort like '%Personenauto%' and (Merk like 'MERCEDES-BENZ' or Merk like 'BMW') and Inrichting like '%hatchback%'

Or, as represented in an Alteryx workflow:

The mean timings are as follows (in seconds, to one decimal place):

| SAMPLE |

ALTERYX LOADING CSV |

ALTERYX LOADING YXDB |

ALTERYX LOADING CYDB |

PANDAS ANALYSIS-ONLY |

PANDAS FROM COLD-START |

| 100k |

0.7 |

0.2 |

0.2 |

0.1 |

1.3 |

| 1m |

5.6 |

1.4 |

0.8 |

0.1 |

12.7 |

| 5m |

27.3 |

6.3 |

3.7 |

0.1 |

65.8 |

| 10m |

54.1 |

12.0 |

7.1 |

0.1 |

129.2 |

| 13.6m |

73.3 |

16.3 |

9.3 |

8.8 |

239.9 |

Experiment 2: Sorting

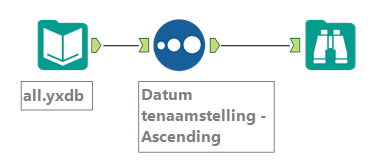

The second experiment took the data samples and attempted to answer the following business question:

“Sort the data by registration date”

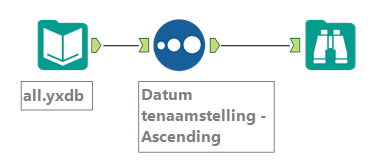

The general structure of the query will look as follows:

select * from df order by 'Datum tenaamstelling'

The mean timings are as follows:

| SAMPLE |

ALTERYX LOADING CSV |

ALTERYX LOADING YXDB |

ALTERYX LOADING CYDB |

PANDAS ANALYSIS-ONLY |

PANDAS FROM COLD-START |

| 100k |

1.0 |

0.6 |

0.3 |

0.2 |

1.5 |

| 1m |

7.7 |

3.4 |

1.8 |

3.4 |

16.1 |

| 5m |

36.6 |

15.9 |

8.1 |

26.5 |

92.3 |

| 10m |

73.7 |

30.7 |

14.7 |

59.5 |

188.6 |

| 13.6m |

123.0 |

64.3 |

19.2 |

240.1 |

471.2 |

Experiment 3: Joining

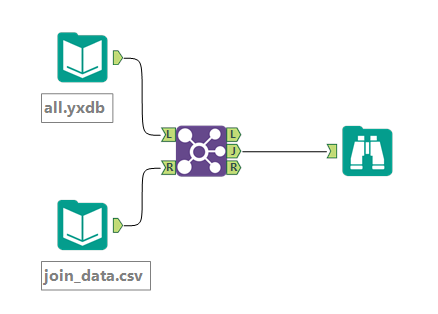

The third experiment took the data samples and attempted to answer the following business question:

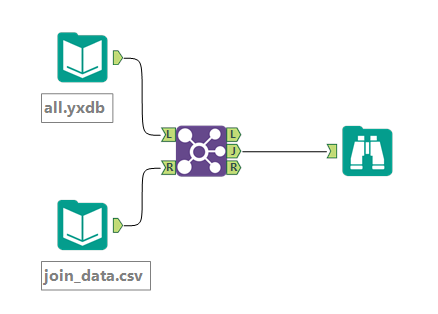

“Join the data to another file using the Type field”

The general structure of the query will look as follows:

select * from df join join_df on df.Inrichting = join_df.type

The mean timings are as follows:

| SAMPLE |

ALTERYX LOADING CSV |

ALTERYX LOADING YXDB |

ALTERYX LOADING CYDB |

PANDAS ANALYSIS-ONLY |

PANDAS FROM COLD-START |

| 100k |

1.1 |

0.7 |

0.3 |

0.2 |

1.5 |

| 1m |

9.0 |

4.8 |

2.2 |

1.5 |

14.1 |

| 5m |

51.5 |

31.0 |

10.3 |

7.3 |

73.1 |

| 10m |

105.3 |

65.0 |

20.3 |

16.0 |

145.1 |

| 13.6m |

140.7 |

85.3 |

28.0 |

119.4 |

350.4 |

Experiment 4: Self-Joins

The fourth experiment took the data samples and attempted to answer the following business question:

“Join the data to itself, using the Licence Plate field”

The general structure of the query will look as follows:

select * from df join df on df.Kenteken = df.Kenteken

The mean timings are as follows:

| SAMPLE |

ALTERYX LOADING CSV |

ALTERYX LOADING YXDB |

ALTERYX LOADING CYDB |

PANDAS ANALYSIS-ONLY |

PANDAS FROM COLD-START |

| 100k |

1.6 |

1.3 |

0.5 |

0.3 |

1.6 |

| 1m |

12.0 |

8.6 |

3.9 |

2.8 |

15.5 |

| 5m |

57.6 |

42.4 |

19.2 |

17.9 |

83.7 |

| 10m |

129.0 |

97.3 |

33.5 |

116.9 |

246.0 |

| 13.6m |

189.3 |

143.0 |

45.6 |

380.9 |

612.0 |

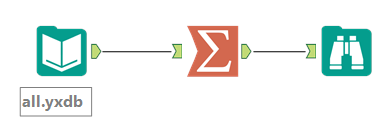

Experiment 5: Grouping

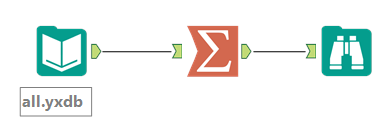

The fifth and final experiment took the data samples and attempted to answer the following business question:

“Tell me how many license plates have been registered, by Make”

The general structure of the query will look as follows:

select count(*) from df group by Merk

The mean timings are as follows:

| SAMPLE |

ALTERYX LOADING CSV |

ALTERYX LOADING YXDB |

ALTERYX LOADING CYDB |

PANDAS ANALYSIS-ONLY |

PANDAS FROM COLD-START |

| 100k |

0.7 |

0.3 |

0.1 |

0.1 |

1.3 |

| 1m |

6.7 |

2.4 |

0.2 |

0.1 |

12.8 |

| 5m |

33.0 |

12.3 |

0.7 |

0.5 |

66.3 |

| 10m |

59.7 |

17.8 |

1.4 |

1.0 |

130.1 |

| 13.6m |

85.0 |

27.0 |

2.1 |

2.3 |

233.4 |

Experimental Analysis

We’ve got a wealth of data to pore over and analyse from these experiments.

Firstly, from a pure Alteryx perspective, there’s an amazing difference in performance even by changing the Alteryx input format from raw CSV to YXDB and then to Calgary! (If nothing else, this blog should act as a call-to-action to always think about using the Calgary format if you work with larger data sets and need to squeeze every ounce of query performance from that data!)

The Python results also demand a comparative analysis and commentary. Pandas (as a relatively simple scripting language) is extremely fast for processing filters and groupings, but seems less efficient at sorting and joining. Interestingly, using a lower-specification machine than the original author I didn’t see Pandas failing against any of these experiments, but I did notice that it appears to struggle as the amount of memory required reaches the machine’s physical limit. Indeed, it seems that its performance gets exponentially worse close to the memory limit. (Hence the original author’s comments about potentially running analysis against larger datasets by simply scaling up the laboratory environment to much larger capacities of RAM).

If you include the time needed to populate the initial Pandas data-frame, the time required for a ‘cold-start’ analysis is considerably slower than the Alteryx equivalent.

Given that these benchmarks represent common data science preparation operations (e.g. prior to a machine-learning analysis) – these results really showcase Alteryx as a high-performing alternative to Python in citizen data science teams!

Potential next steps

This experiment provides an interesting foundation for future data preparation and blending performance benchmarking tests. As Alteryx (and Python, and the industry in general) evolves, these tests (and the open data that they’re based upon) can be easily reused to test new algorithms and new data processing infrastructure.

Given the popularity of Python as an open-source platform for data analytics, it would be valuable to compare equivalent R code against both Alteryx and Python against this dataset, as well as demonstrating the performance of the Alteryx in-database tools (e.g. Spark, SQL Server, etc.) to deliver the same analytic steps but leveraging the power of an underlying data platform without the need to write code.

I'd love to see comments if there are any ideas to squeeze even more performance from the Alteryx Platform.