Alteryx Success Stories

Learn how Alteryx customers transform their organizations using data and analytics.- Community

- :

- Public Archive

- :

- Success Stories

- :

- A Particularly Problematic Parsing Example

A Particularly Problematic Parsing Example

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Printer Friendly Page

2018 Excellence Awards Entry: A Particularly Problematic Parsing Example

An unusual text file parsing situation requiring dynamic parsing rules to be applied to each line of a large data file, with different parsing logic for each line determined by that line’s record type.

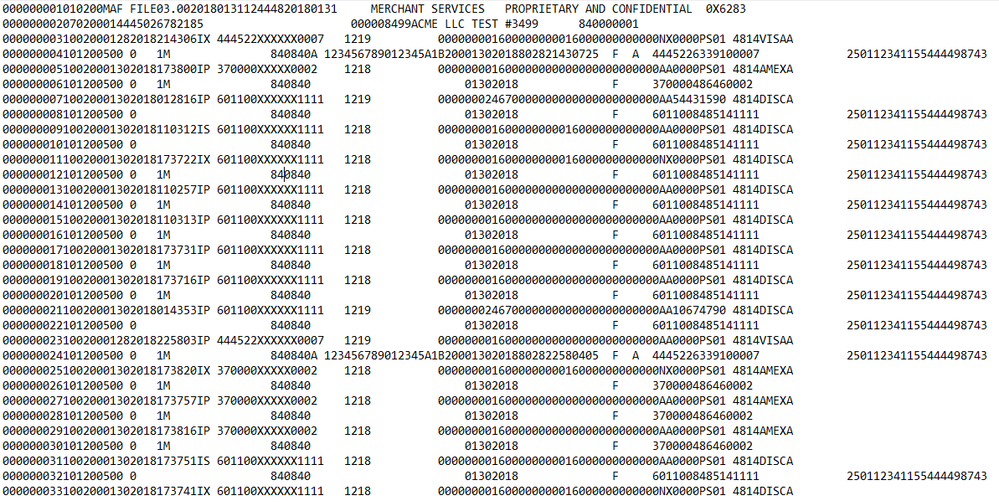

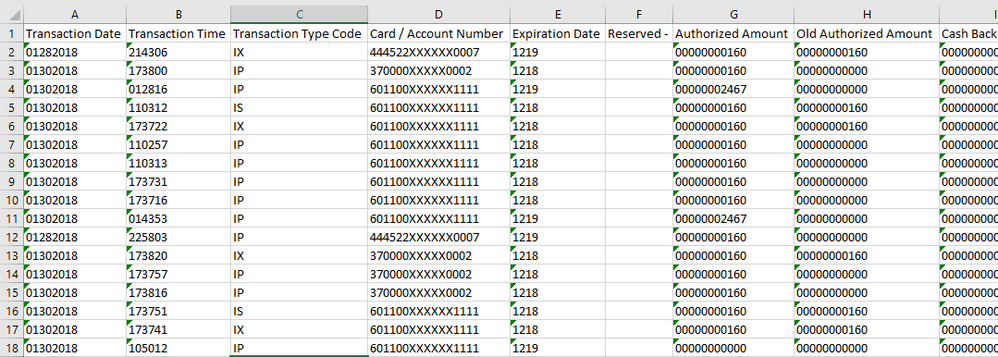

The Accounting Department at T-Mobile was presented with an unique challenge in trying to parse large text files received every day by our credit card processing company: these text files contain data about credit card transactions from ALL T-Mobile retail stores, but every line of the file contains different mapping rules, based on that item’s record type… so while one line might have 3 characters in the first column and 13 in the next, the second line might have two columns of 8 characters each. Without any of the usual suspects available for delimiters (tabs, commas, pipe-delimiters…), I needed to find a solution that would dynamically adjust the way each line of the file was parsed based on its record type.

Describe your working solution:

To begin the process of resolving this challenge, I looked through the documentation provided by the credit card processing company and determined that while the rules were different for every line of the file, there was a set logic based upon the record type (a 3-digit code near the beginning of every record). So, for example, every record type 100 had the characters-per-field pattern of 8-6-3-19-4-10-11-11-11-2-6-3-4-4-4-79… and every record type 361 had the characters-per-field pattern of 1-3-4-9-3-8-9-14-1-8-19-8-98… etc.

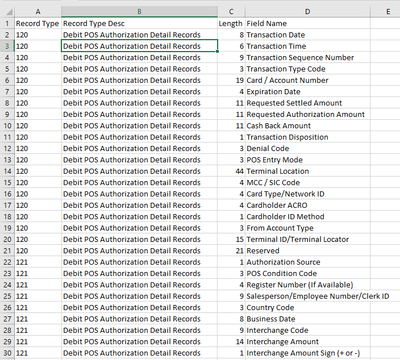

The first step then was to create the File Mapping document (I used an Excel file, in case future mapping changes needed to be made) that contained 4 fields – record type, record type description, length of the field, and the field name (field names were different for every record type as well). This would be used in the Alteryx workflow both to split out each line of data into its appropriate number of fields (with the right number of characters in each) as well as to name these fields when the output data was written to separate tabs in the output Excel file.

NOTE: A later modification to this workflow also created an additional layer of complexity when I was asked to limit the output to specific fields (different for every record type) in conjunction with summary file information… but because I already had the fields & headers mapped for each record, it was very simple to just select the fields we needed and output that smaller, summarized subset.

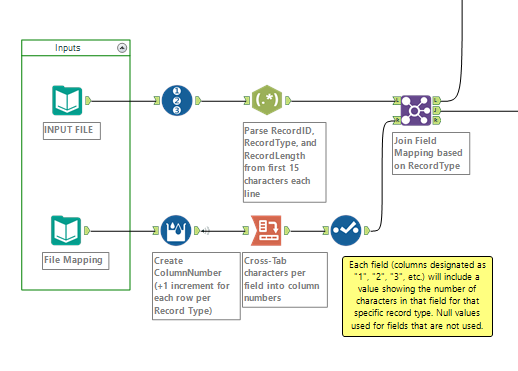

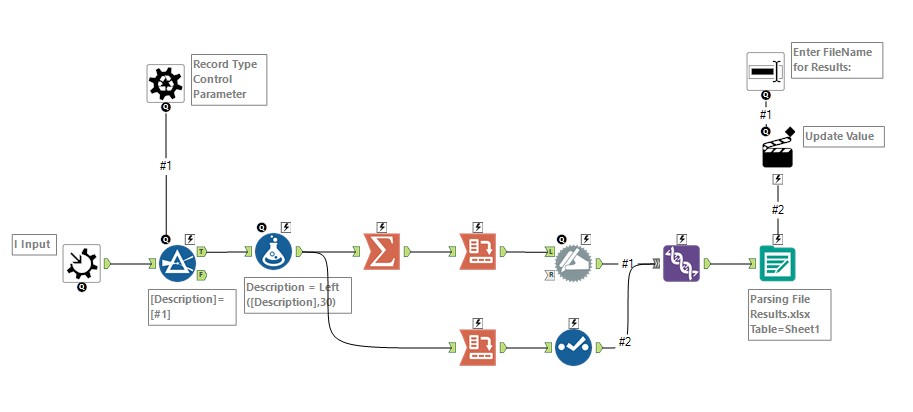

The second step was to create the workflow to pull in the mapping information and apply it to each record based on record type. I used Multi-Row tool to create column numbers, then pivoted the information so that the column #’s were headers with the number of characters per field shown in each line, per record type.

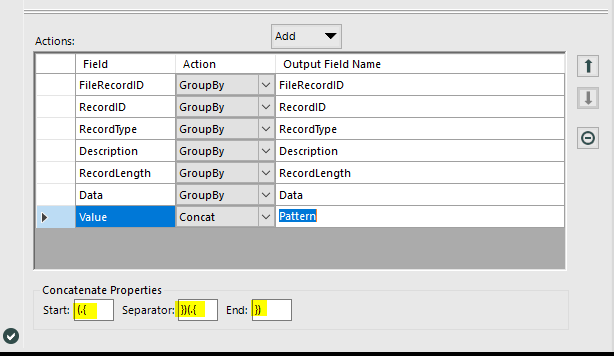

With the joined data showing number of characters per field, I transposed the information and then summarized using the concat function, configured as follows:

This created a pattern that could be used in RegEx formulas that looked something like this:

(.{8})(.{5})(.{8})(.{6})(.{8})(.{5})(.{20})(.{30})(.{6})(.{4})(.{4})(.{6})(.{7})(.{7})(.{4})(.{5})(.{10})(.{42})

This formula would bring back 8 characters for the first field, 5 characters for the second field, etc. so that each field could be parsed into the correct number of characters based on that record type.

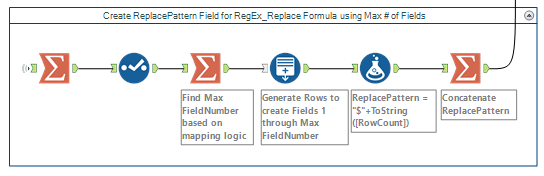

I then created a ReplacePattern based on the number of fields being used by the data in the file… for example, if there were 10 fields being used, I found the max field number (10), used Generate Rows to create fields 1-10, and then concatenated that list to create “$1|$2|$3|$4|$5|$6|$7|$8|$9|$10”. This would be used in the RegEx formula in the next step as the output, effectively adding a delimiter in between each of the fields based on the mapping logic.

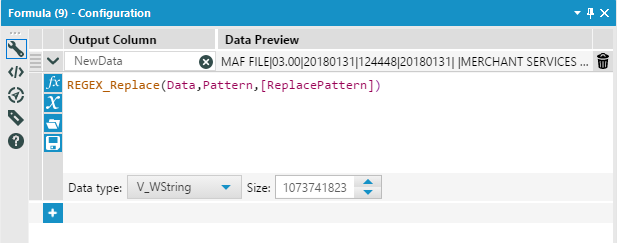

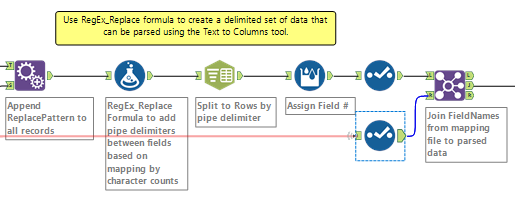

By appending this pattern on to the transaction data, I could now create a RegEx formula as follows:

Now that the data had been parsed into the correct number of fields per record type, I could join the Field Names from the original mapping file.

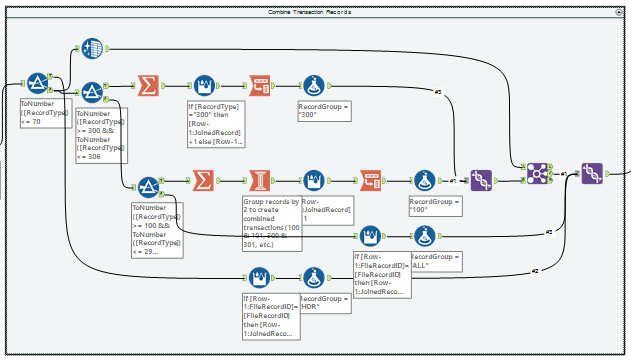

Some additional data prep was needed to combine some of the records… for example, record type 100 & 101 needed to be combined to create the full set of fields for that transaction. Using several of the tools in the transform category (plus one of my favorite secret weapons, Make Columns tool), I was able to join those related records together and create a final version of the data that contained the basic fields for output: Record ID, Record Type, Column Number, Field Name, and Data.

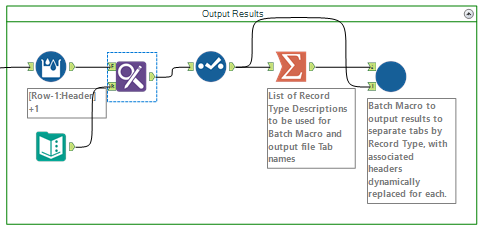

The final step in this solution was to output the records to an Excel file with a different tab for each record type (since the field names would vary for each, I could not output the results all to one output). By creating a batch macro that processed each record type one at a time and output the results to its own tab in the Excel output, I was able to apply the dynamic column names based on the mapping for that Record Type.

One of the coolest benefits of this workflow is that it not only solved what seemed to be an impossible parsing problem for this specific use case related to credit card processing, but it is also a repeatable and adaptable workflow that could be used for any number of other unusual parsing situations with very little modification. This could easily be adapted for use by departments outside of the Accounting Department that deal with similar data processing scenarios as well, making it a potentially valuable tool for T-Mobile as a whole!

The sheer quantity of data that would have needed to be processed manually made this a daunting task, but with this flexible Alteryx workflow, we are able to choose whether we process records daily, weekly, monthly… the quantity of data is no longer a factor because it can be run through this workflow in a matter of seconds. The time it took to develop a fully functional solution was incredibly fast as well – I had a working draft done in a couple hours, and have made only minor revisions to it since that initial pass. Without the flexibility of this Alteryx solution, we would likely have been looking at an expensive, custom-developed program from an outside source. In this case, the hours saved, both in processing as well as solution-development, are almost immeasurable – it would have been a monumental task to even parse 100 records with the dynamic nature of the file structure, let alone the thousands (or even millions) of records that can now be processed in this manner every day. With the data attached to this use case, it takes just 5.7 seconds to process almost 4000 records. Even with a simple delimited text file, we wouldn’t have been able to parse and output that much data so quickly!

Packaged workflow (include sample text file with dummy data, example mapping file, and output macro) attached.

-

Adobe

2 -

Alteryx Connect

5 -

Alteryx Designer

202 -

Alteryx for Good

1 -

Alteryx Promote

2 -

Alteryx Server

66 -

Alteryx using Alteryx

29 -

Americas

158 -

Analyst

108 -

Analytics Leader

54 -

Asia Pacific

29 -

AWS

9 -

BI + Analytics + Data Science

100 -

Business Leader

37 -

C-Leader

18 -

Data Prep + Analytics

230 -

Data Science + Machine Learning

113 -

Data Scientist

14 -

Department: Other

14 -

Education

18 -

Energy + Utilities

5 -

Europe + Middle East + Africa

58 -

Experian

2 -

finance

29 -

Financial Services

33 -

Healthcare + Insurance

21 -

Human Resources

19 -

Information Technology

25 -

IT

31 -

Life Sciences + Pharmaceuticals

3 -

Manufacturing

20 -

Marketing

16 -

Media + Entertainment

12 -

Microsoft

52 -

Operations

38 -

Other

10 -

Process Automation

60 -

Professional Services

69 -

Public Sector

15 -

Qlik

1 -

Retail + CPG

32 -

Sales and Service

24 -

Salesforce

9 -

SAP

11 -

Snowflake

6 -

Tableau

71 -

Tech Partner: Other

86 -

Technology

34 -

Telecommunications

5 -

Teradata

5 -

Thomson Reuters

1 -

Transportation + Logistics

25 -

Travel + Hospitality

4 -

UiPath

1

- « Previous

- Next »