Alteryx Designer Desktop Discussions

Delete before insert in Output Tool

Hi All,

I am trying to write data from the Alteryx Output tool to a SQL Server database. Before the insert, I would like to delete the existing records in the database for the period I am about to load.

Labels: Database Connection, Input, Output

Hi Jeeva,

There is no direct way to parameterize your Pre or Post SQL in Alteryx, but there are some workarounds to achieve this.

What you can do is create a control table in your database, something like the one below, and insert the name of the file you are loading (one row per file if you are dealing with multiple source files):

create table ETLcontrol (filename varchar(50),filedate date)

insert ETLcontrol values('Sample',null)

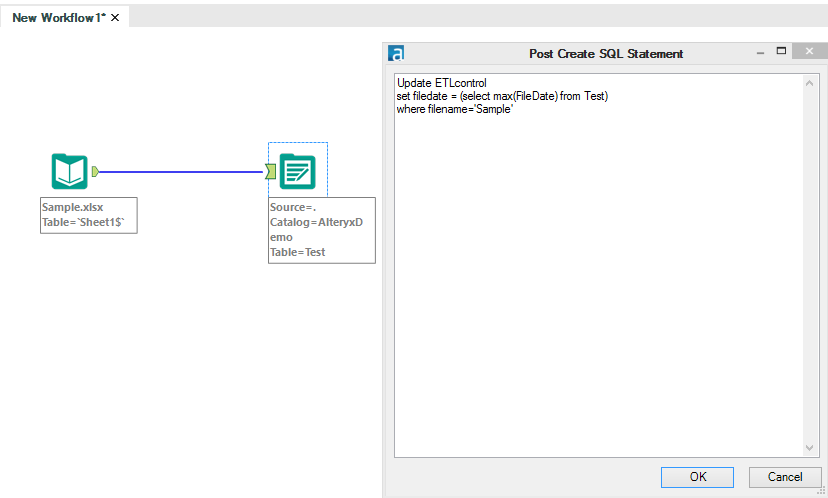

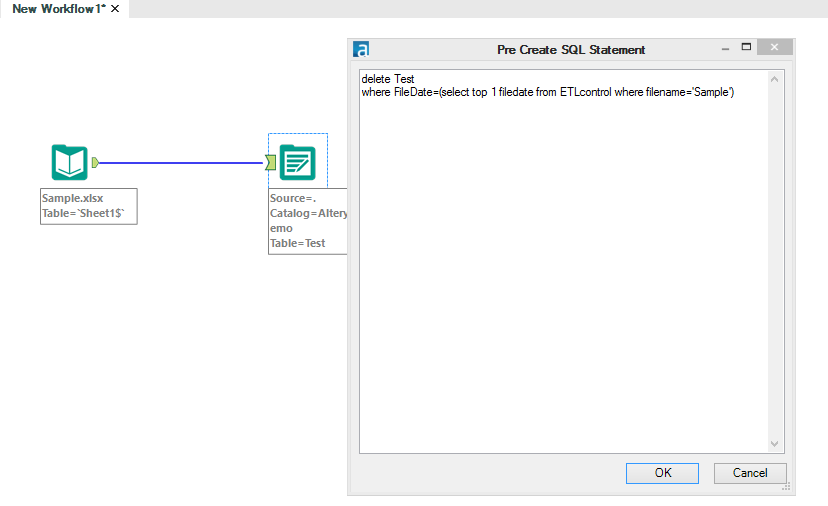

Then configure both the Post SQL and Pre SQL statements of the Output tool with the SQL below:

--post SQL

Update ETLcontrol

set filedate = (select max(FileDate) from Test)

where filename='Sample'

--Pre SQL

delete Test

where FileDate=(select top 1 filedate from ETLcontrol where filename='Sample')

After you configure this, the first run will load the data into your destination table, and the Post SQL will update the control table with the latest date processed for each file.

On the next run, the Pre SQL will look up the date to be deleted and delete those rows before the load. You will need to customize the SQL for the control table to suit your requirements.
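The sequence described above (Pre SQL, load, Post SQL) can be sketched outside Alteryx to see how the control table behaves across runs. This is only an illustration under assumptions: it uses Python's built-in `sqlite3` in place of SQL Server, so `top 1` becomes `LIMIT 1`; the `Amount` column and the `run_load` helper are hypothetical; `Test`, `FileDate`, and `ETLcontrol` are taken from the statements above.

```python
import sqlite3

def run_load(conn, rows):
    """Simulate one Output tool run: Pre SQL, then the insert, then Post SQL."""
    cur = conn.cursor()
    # Pre SQL: delete the rows for the date recorded by the previous run.
    cur.execute(
        "DELETE FROM Test WHERE FileDate = "
        "(SELECT filedate FROM ETLcontrol WHERE filename = 'Sample' LIMIT 1)"
    )
    # The load itself (what the Output tool does with the incoming records).
    cur.executemany("INSERT INTO Test (FileDate, Amount) VALUES (?, ?)", rows)
    # Post SQL: remember the latest date now present in the destination table.
    cur.execute(
        "UPDATE ETLcontrol SET filedate = (SELECT MAX(FileDate) FROM Test) "
        "WHERE filename = 'Sample'"
    )
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ETLcontrol (filename TEXT, filedate TEXT)")
conn.execute("INSERT INTO ETLcontrol VALUES ('Sample', NULL)")
conn.execute("CREATE TABLE Test (FileDate TEXT, Amount REAL)")  # Amount is hypothetical

january = [("2015-01-31", 10.0), ("2015-01-31", 20.0)]
run_load(conn, january)  # first run: control date is NULL, so nothing is deleted
run_load(conn, january)  # rerun: the two January rows are deleted, then reloaded

row_count = conn.execute("SELECT COUNT(*) FROM Test").fetchone()[0]
print(row_count)  # 2, not 4: the rerun did not duplicate the period
```

Note that in this sketch the Pre SQL deletes whatever date the previous run recorded, so it suits reloading the same period; if the next load carries a different date, the delete condition would need to be adapted.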

I hope this helps you get started with SQL in Alteryx.

Please find attached a sample for your testing.

Hi. Thanks for your reply. But my file name will be different every month and so I cannot depend on that. My only option here is to use the date column from the incoming data.. :(

Jeeva,

I think there is still a workaround.

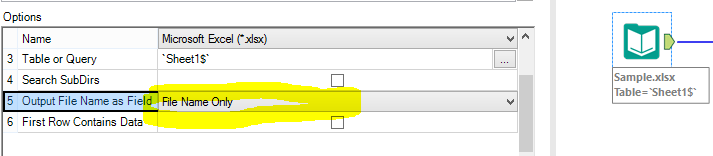

What you can do is grab the file name from your input as well, pass it through your workflow, add a column for it to your destination table, and populate that column with the file name.

Then modify both your Post SQL and Pre SQL statements accordingly.

You can see the same in the file that I attached in my earlier reply.

Hi,

Thanks for your reply. Yes that makes sense. I tried to implement this, but wanted to just optimize and have one single workflow instead of one to update the min and max date in ETLcontrol table and another one to run the actual workflow.

Since the input data is huge, having two workflows read the same input takes twice the time. Is it possible to read the input, write the min and max date with the file name to ETLcontrol, and make the rest of the workflow wait until that has happened, then fetch the date from the ETLcontrol table and update accordingly? I thought of using Block Until Done, but I am not sure whether it waits until the date insert has happened, or whether I can pass data out of the Block Until Done tool.

Regards,

Jeeva.

Hi,

Yes, you can try Block Until Done.

You could also try another macro developed by Adam, "ParallelBlockUntilDone.yxmc", which you can download from the link below:

http://www.chaosreignswithin.com/p/macros.html

The solution here is to use a Batch Macro. The Batch Macro, rather than doing any batching, is just used to make the SQL statement dynamic - this is a fantastic use of batch macro and is often overlooked.

Configure the output module and connect two control parameters with actions to update the start and end dates of the preSQL statement. The macro itself can consist of just two "data" tools, a macro input and an output tool - as well as the 4 interface tools (2 actions and 2 control parameters).

Now in the parent module add the macro (which will have two inputs - the control parameters and the main data feed) and connect the min and max values from a Summarise to the control parameters, and feed the data into the macro input.
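The idea behind the macro, substituting the min and max dates of the incoming data into the preSQL before the load, can be sketched in plain Python. This is only an illustration of the logic, not the macro itself: it uses `sqlite3` instead of SQL Server, the `Test` table and `FileDate` column are borrowed from the earlier replies, and `PRE_SQL_TEMPLATE`, `build_pre_sql`, `load`, and the `Amount` column are hypothetical names. Dates are assumed to be ISO-formatted strings so that `min` and `max` order them correctly.

```python
import sqlite3

# Stand-in for the preSQL whose start and end dates the two Action tools update.
PRE_SQL_TEMPLATE = "DELETE FROM Test WHERE FileDate BETWEEN '{start}' AND '{end}'"

def build_pre_sql(rows):
    """Fill in the template with the min and max date of the incoming rows
    (the values the Summarise feeds to the control parameters)."""
    dates = [row[0] for row in rows]
    return PRE_SQL_TEMPLATE.format(start=min(dates), end=max(dates))

def load(conn, rows):
    """Run the dynamic preSQL, then insert the incoming rows."""
    conn.execute(build_pre_sql(rows))
    conn.executemany("INSERT INTO Test (FileDate, Amount) VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Test (FileDate TEXT, Amount REAL)")  # Amount is hypothetical

load(conn, [("2015-01-15", 1.0), ("2015-01-31", 2.0)])  # January
load(conn, [("2015-02-10", 3.0)])                        # February, first attempt
load(conn, [("2015-02-10", 4.0), ("2015-02-20", 5.0)])  # February, corrected reload

total_rows = conn.execute("SELECT COUNT(*) FROM Test").fetchone()[0]
print(total_rows)  # 4: January is untouched, February was replaced
```

The text substitution mirrors how the statement is rewritten before it runs; in ordinary application code, bound parameters would be the safer choice than string formatting.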

Hope that makes sense, I'm on the road today but I can build an example module tonight if needed.

Regards

Chris

Great idea, Chris.

I am looking forward to your example :-) as this is a common need for every developer who works with databases in Alteryx :)

Attached.

Typically I'd go a step further and have a log table updated in the Post SQL script.

In a lot of cases I'd also use a control table to control which tables I load and the changes to the database, but it looks unnecessary in this case. Alteryx makes a great ETL tool for these situations with the right implementation.

That is perfect. It works well. Thanks a lot Chris and pichaipillai for your ideas.
