Alteryx Designer Desktop Discussions

Zendesk JSON export - wrap in array and comma-separate each line

I have exported customer service ticket data from Zendesk in JSON format. The problem is that the Zendesk export is not a single valid JSON document: the objects are neither wrapped in an array nor separated by commas. Before I can use the Alteryx Input Data tool for JSON, I need to wrap the exported data in an array and comma-separate each line.

With small enough export files, I can do this in Notepad++ as follows:

- Add the following as the first line of the file: {"tickets":[

- Add the following as the last line of the file: ]}

- Separate all the objects enclosed in { } with commas using find and replace

For a large file, is there a way for me to replicate the wrapping and comma-separating processes within Alteryx so that I'm able to read in the json file and work with it?

Thanks for the help!

You can use the Sample tool in Alteryx to take the first record and add the first line. Or you can create the first line with a Text Input tool and use a Union tool to stack it on top of the data.

For the last line, the Sample tool can also be used to extract the last record so you can change it, and a Union tool can stack it underneath the data.

For the body, i.e. the objects enclosed in { and }, you can either use a regular expression in the RegEx tool to find these and insert the commas, or you can filter this data out and then use a simple string function to replace spaces with commas.
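As a rough illustration of the regex idea outside Alteryx, here is a minimal Python sketch. It assumes the export holds one object per line; the function name `wrap_with_regex` and the sample data are hypothetical, and the `tickets` key is the one from the question.

```python
import json
import re

def wrap_with_regex(raw: str, key: str = "tickets") -> str:
    """Insert commas between adjacent objects, then wrap them in {"key":[...]}."""
    # A raw newline cannot occur inside a JSON string, so "}" + newline + "{"
    # only appears at the boundary between two exported objects.
    body = re.sub(r"\}[ \t]*\r?\n[ \t]*\{", "},\n{", raw.strip())
    return '{"' + key + '":[\n' + body + "\n]}"

# Hypothetical sample: three objects, one per line, no commas between them
sample = '{"id": 1, "subject": "a b"}\n{"id": 2, "subject": "c {d}"}\n{"id": 3}'
wrapped = wrap_with_regex(sample)
tickets = json.loads(wrapped)["tickets"]
print(len(tickets))
```

Matching on the object boundary rather than on spaces leaves the text inside string values untouched.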

Thank you, @RishiK! Really appreciate the quick and clear answer.

One follow-up: I am currently unable to import the data as JSON because of the formatting issue. How should I configure the Input Data tool so that I can load the data and then use the RegEx tool?

If you are formatting the file as JSON only so that you can parse it with regex, consider skipping the JSON formatting and parsing the file as is. Parsing is parsing, and if the data has recognizable patterns, regex can handle them directly.

The formatting you describe sounds unnecessary if you are just feeding the result into another tool to parse it apart again. If you need to send it to some other application that requires JSON, that is a different matter.

If you want to force the JSON format, an alternative to the Sample tool (which is a good idea) is:

- If the data is in rows and the main task is modifying the first and last rows, use a Record ID tool to number each record and get the total record count in a parallel branch to identify the last record. Then use a Formula tool with string functions or regex to make the changes: RecordID = 1 identifies the first row, RecordID = Count identifies the last row, and for the rows in between you can use string functions or REGEX_Replace to insert commas or other symbols as needed.
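The Record ID logic can be sketched in Python as a per-row function, much like a single Formula expression. This is only an illustration: `edit_row`, `record_id`, and `count` are hypothetical names standing in for the Formula tool, the Record ID field, and the total row count.

```python
import json

def edit_row(line: str, record_id: int, count: int) -> str:
    """Decide the edit for one row from its RecordID and the total row count."""
    out = line.strip()
    if record_id == 1:
        out = '{"tickets":[' + out   # first row: open the wrapper
    if record_id < count:
        out = out + ","              # every row except the last: trailing comma
    else:
        out = out + "]}"             # last row: close the wrapper
    return out

# Hypothetical rows, one JSON object per row
rows = ['{"id": 1}', '{"id": 2}', '{"id": 3}']
count = len(rows)
edited = [edit_row(r, i, count) for i, r in enumerate(rows, start=1)]
parsed = json.loads("\n".join(edited))
```

Because the first-row check and the last-row check are independent, a file with a single row is still opened and closed correctly.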

Hi @laurany

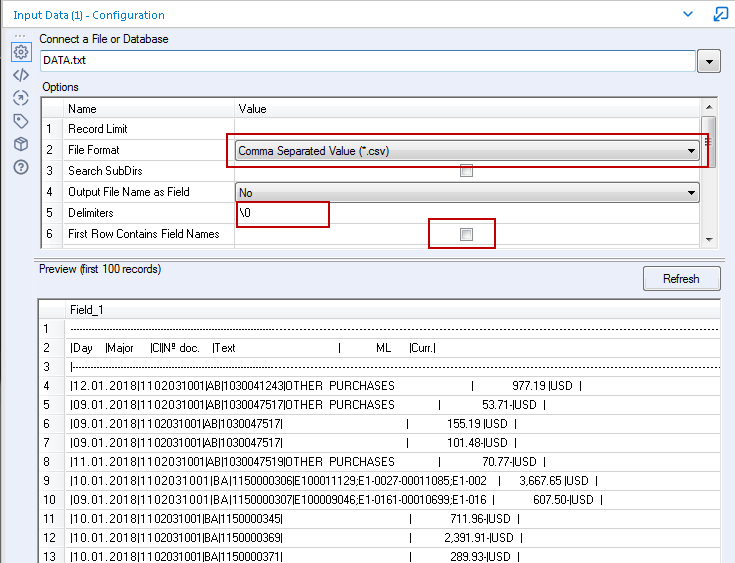

You can use the following config to load any text-based file (XML, JSON, HTML, etc.):

- Format = csv

- Delimiters = \0

- First row contains field names = unchecked

With this config, the input tool will load all lines from the file, one per row exactly as they are. From there, you can process the data as you see fit.

Dan
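Loading every line as one undelimited field is roughly what a plain line-by-line read does in a script. For comparison, here is a minimal Python sketch that streams a large export and writes a wrapped copy without holding the whole file in memory. It assumes one object per line; `wrap_file` and the file names are hypothetical.

```python
import json
import os
import tempfile

def wrap_file(src_path: str, dst_path: str, key: str = "tickets") -> int:
    """Stream src line by line and write a wrapped, comma-separated copy to dst."""
    written = 0
    with open(src_path, encoding="utf-8") as src, \
         open(dst_path, "w", encoding="utf-8") as dst:
        dst.write('{"' + key + '":[\n')
        for line in src:
            line = line.strip()
            if not line:
                continue              # skip blank lines
            if written:
                dst.write(",\n")      # comma before every object except the first
            dst.write(line)
            written += 1
        dst.write("\n]}\n")
    return written

# Demo on a throwaway file with hypothetical contents
with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "export.json")
    dst = os.path.join(tmp, "wrapped.json")
    with open(src, "w", encoding="utf-8") as f:
        f.write('{"id": 1}\n{"id": 2}\n\n{"id": 3}\n')
    n = wrap_file(src, dst)
    with open(dst, encoding="utf-8") as f:
        result = json.load(f)
```

Writing the comma before each object rather than after it means the code never needs to know in advance which line is the last one.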

Good shout @danilang

Thank you all for the pointers!

In the end, I did the following:

- Loaded the file as a CSV with no headers or delimiters

- Used the Select Records tool to pull out two individual rows (the first and the last) and a middle chunk (the order of the rows didn't matter)

- Used a Formula tool to edit each type of row (first, last, and middle) as needed

- Used a Union tool to recombine them in the specified order

- Was then ready to start using the JSON Parse tool
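For readers who want to see the same split, edit, and recombine sequence as code, here is a minimal Python sketch. It is an analogy to the workflow rather than the workflow itself; the sample rows are hypothetical, and it assumes the file has at least two rows.

```python
import json

# Hypothetical rows as loaded: one JSON object per row, no delimiters
lines = [
    '{"id": 1, "status": "open"}',
    '{"id": 2, "status": "solved"}',
    '{"id": 3, "status": "open"}',
]

# "Select Records": first row, last row, and the middle chunk
first, middle, last = lines[0], lines[1:-1], lines[-1]

# "Formula": a different edit for each type of row
first_edited = '{"tickets":[' + first + ","
middle_edited = [row + "," for row in middle]
last_edited = last + "]}"

# "Union": recombine in first, middle, last order
combined = "\n".join([first_edited, *middle_edited, last_edited])

# "JSON Parse": the recombined text is now one valid JSON document
tickets = json.loads(combined)["tickets"]
statuses = [t["status"] for t in tickets]
```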
