Alteryx Designer Desktop Discussions


Can you write data to Databricks using the Output tool?


Hi all,

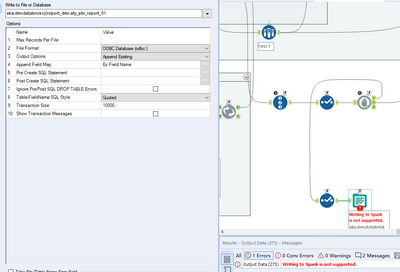

Can you write data to Databricks using the Output tool? How do I write the result data to Databricks?

I ask because I ran into a problem: I tried to use the Output tool to write the resulting data to Databricks, but I got a message saying it is not supported. Do you have any solutions?

Thanks, all.

- Labels: Designer Cloud, Developer, Error Message


Hi @pg_two

It doesn't look like you can use the Output tool to write to Databricks; you need to use the Data Stream In tool. More information can be found here (for Alteryx version 22.1): https://help.alteryx.com/20221/designer/databricks


Hi @davidskaife

When I use the Data Stream In tool to write to Databricks, it reports a bug in Alteryx itself:

Error: Data Stream In (5): You have found a bug. Replicate, then let us know. We shall fix it soon.


Hi @pg_two

I can suggest the following, if you haven't already:

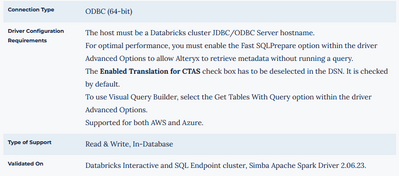

Ensure your drivers are fully up to date

Ensure your driver configuration is set up correctly as per the below

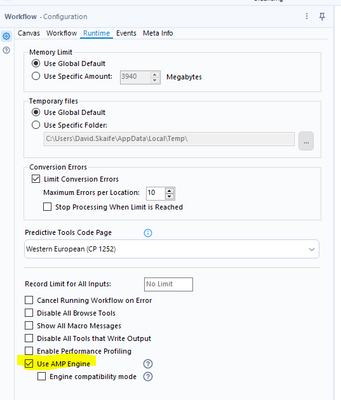

If you created the workflow in Alteryx 2022.1+, try running it with the AMP Engine off, just to see if that makes a difference

If none of these makes a difference and you still get the message, I'd raise a support request with Alteryx so they can look into it for you.


Hey - not sure if you got a satisfactory solution to this - I have never successfully written to Databricks (AWS) using the Output Data tool. There are multiple write options listed (Databricks CSV, for example), all of which error somewhere along the way.

I do, however, successfully write to Databricks using Data Stream In all of the time. If you need help setting this up (there's some nuance here), feel free to drop me a line. Basically, you have to have the SQL endpoint set up in your ODBC, and you need your token/PAT in both the read and write In-DB connections.
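For reference, here is a rough sketch of the settings a Databricks ODBC connection with a personal access token usually carries. The key names follow the Databricks ODBC driver documentation as I understand it; the driver name, host, HTTP path, and token in the usage line are placeholders, not values from this thread. In Alteryx you would normally enter the same values in the ODBC DSN dialog rather than build a string, so treat this as a checklist of settings, not the way the In-DB connection is created.

```python
def databricks_odbc_connection_string(driver: str, host: str, http_path: str, token: str) -> str:
    """Assemble a DSN-less ODBC connection string for a Databricks SQL endpoint.

    driver    : name of the ODBC driver installed on your machine (placeholder).
    host      : workspace host name, without https:// (placeholder).
    http_path : HTTP path of the SQL endpoint (placeholder).
    token     : personal access token (PAT).
    """
    settings = {
        "Driver": driver,
        "Host": host,
        "Port": "443",
        "HTTPPath": http_path,
        "SSL": "1",
        "ThriftTransport": "2",  # HTTP transport
        "AuthMech": "3",         # user name / password authentication
        "UID": "token",          # literal word "token" when using a PAT
        "PWD": token,
    }
    return ";".join(f"{key}={value}" for key, value in settings.items())


print(databricks_odbc_connection_string(
    "Simba Spark ODBC Driver", "example.cloud.databricks.com",
    "/sql/1.0/endpoints/abc123", "dapi-placeholder"))
```

The same token has to appear on both the read and the write side of the In-DB connection, as noted above.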

I hit that "you have found a bug" error a bunch of times. I vaguely remember getting it extensively when I used Summarize In-DB on all of the fields prior to Data Stream Out. On the write side, this may have had to do with an improperly configured write connection string. My notes say the write endpoint needs to start with https:// and must not end in /. It should also be set to the Databricks bulk loader CSV option.
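A minimal sketch of that endpoint check, in case you want to sanity-check the value before pasting it into the write connection. The function name and the example host are made up for illustration; nothing here is an Alteryx API.

```python
from urllib.parse import urlparse


def normalize_write_endpoint(endpoint: str) -> str:
    """Return the endpoint with an https:// scheme and no trailing slash.

    Raises ValueError if the endpoint uses a non-https scheme or has no host.
    """
    cleaned = endpoint.strip().rstrip("/")
    if "://" not in cleaned:
        # No scheme given: assume https, which is what the notes above call for.
        cleaned = "https://" + cleaned
    parsed = urlparse(cleaned)
    if parsed.scheme != "https":
        raise ValueError(f"endpoint must use https://, got {parsed.scheme}://")
    if not parsed.netloc:
        raise ValueError("endpoint has no host name")
    return cleaned


# Hypothetical workspace URL with a stray trailing slash.
print(normalize_write_endpoint("https://example.cloud.databricks.com/"))
```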

And while I'm commenting: Alteryx does NOT support append to existing with Databricks. You can set up a Spark job to merge or something, but append to existing is not supported. The product team told me last year they were working on incorporating Merge into it.
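One way that merge workaround could look: have Alteryx write to a fresh staging table, then run a Delta Lake `MERGE INTO` from a Databricks job or notebook. The sketch below only builds the SQL text; the table and key column names are hypothetical, and the statement runs on the Databricks side, not in Alteryx.

```python
import re
from typing import List

# Conservative identifier checks so names can be placed into the SQL text safely.
_TABLE = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*){0,2}$")
_COLUMN = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def build_merge_sql(target: str, staging: str, key_columns: List[str]) -> str:
    """Build a MERGE statement that upserts the staging table into the target."""
    if not key_columns:
        raise ValueError("at least one key column is required")
    for table in (target, staging):
        if not _TABLE.match(table):
            raise ValueError(f"unexpected table name: {table!r}")
    for column in key_columns:
        if not _COLUMN.match(column):
            raise ValueError(f"unexpected column name: {column!r}")
    on_clause = " AND ".join(f"t.{c} = s.{c}" for c in key_columns)
    return (
        f"MERGE INTO {target} AS t\n"
        f"USING {staging} AS s\n"
        f"ON {on_clause}\n"
        "WHEN MATCHED THEN UPDATE SET *\n"
        "WHEN NOT MATCHED THEN INSERT *"
    )


# Hypothetical tables: Alteryx overwrites orders_staging, the job merges it into orders.
print(build_merge_sql("main.sales.orders", "main.sales.orders_staging", ["order_id"]))
```

`UPDATE SET *` and `INSERT *` assume the staging and target tables share the same columns; list the columns explicitly if they don't.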


Thank you so much @apathetichell. Following your advice literally brought three days of work to an end, even with an open case with Alteryx support, so thank you!


Have you had any issues writing larger datasets? I have no issues writing smaller tables using what you outlined here with the In-DB Data Stream In tool; however, anytime I write anything over 2 GB, it errors out. Is there a limit on Databricks that is causing the issue?


@jheck honestly it could be a bunch of things, but the first place I'd look is your cluster timeout policy and where you are staging your data. If you are using a bulk loader (which you pretty much have to), you are moving the data into a single location in your system and then pushing it to Databricks (vs. incremental API calls). This can save time, but if your cluster goes to sleep during the inactivity, it won't work. You can enable driver logging in your ODBC, but I'd look at the cluster timeout first. If I were pushing large data, I'd probably push to S3 for staging.


I'm far from an expert on this, so I'm not entirely sure what you mean by push to S3 for staging. Is this something that can be done in Alteryx? The other limitation I have is that I'm unable to receive a shared key for security reasons.


@jheck - sounds like my company. Usually (always) there's a storage system intertwined with your lakehouse (i.e. your lake), which should be S3 or Azure Blob Storage. You can use this to stage (write the files to) if you have keys. If you don't have access, I'd recommend:

1) Request a longer timeout on the cluster (say 30 minutes vs. 15).

2) Batch your writes (write every x minutes).

3) If you can authenticate to your cloud (AWS/Azure) via CLI, you can transfer the file via CLI instead of internally in Alteryx.
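To illustrate option 2, here is a rough sketch that splits one large CSV export into smaller part files, so each part can be written in its own, shorter run. It batches by row count rather than by time, which is a simplification of "write every x minutes"; the function name, file naming, and row limit are all made up for the example.

```python
import csv
from pathlib import Path
from typing import List


def split_csv(source: Path, out_dir: Path, rows_per_part: int) -> List[Path]:
    """Split source into part files of at most rows_per_part data rows each.

    Every part repeats the header row so it can be loaded on its own.
    """
    if rows_per_part < 1:
        raise ValueError("rows_per_part must be at least 1")
    out_dir.mkdir(parents=True, exist_ok=True)
    parts: List[Path] = []
    handle = None
    writer = None
    with source.open(newline="") as src:
        reader = csv.reader(src)
        header = next(reader, None)
        if header is None:
            return parts  # empty input: nothing to write
        try:
            for index, row in enumerate(reader):
                if index % rows_per_part == 0:
                    # Start a new part file once the previous one is full.
                    if handle is not None:
                        handle.close()
                    part_path = out_dir / f"{source.stem}_part{len(parts) + 1:04d}.csv"
                    handle = part_path.open("w", newline="")
                    writer = csv.writer(handle)
                    writer.writerow(header)
                    parts.append(part_path)
                writer.writerow(row)
        finally:
            if handle is not None:
                handle.close()
    return parts
```

Pick a row count that keeps each part comfortably below the size where your writes start failing, then feed the parts to the write step one at a time.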
