Alteryx Designer Desktop Discussions

Web scraping including embedded pdf documents from a website

Hi all,

I am unsure whether this can be done using Alteryx, but perhaps one of you has an ingenious solution.

What I want to achieve is to scrape information from the website below into a readable Excel table (i.e. list all announcements in a tabular format).

It does not stop there, though. At the same time, I want Alteryx to download the corresponding PDF files and store them in a certain folder on my laptop. If the file names of these PDF files could be the concatenation of the columns "Date" and "Headline", that would be perfection. However, I would already be very happy if the workflow could automatically extract all PDF files.

https://www.asx.com.au/asx/statistics/announcements.do?by=asxCode&asxCode=TLS&timeframe=Y&year=2019

I have a good idea on how to create the table, however, downloading the corresponding PDF files might be too challenging for Alteryx.

Apologies if this request is too far-fetched... but I've seen some geniuses around here, so perhaps you can astonish me again :).

https://www.thedataschool.co.uk/nick-jastrzebski/the-dark-arts-of-alteryx-reporting-tools/

Hi @Liline008

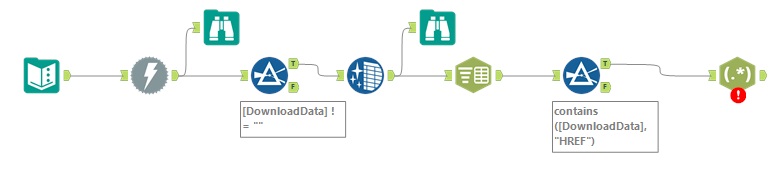

You were close. Your filter needed to be changed from [DownloadData]="" to [DownloadData]!="". From there, I added a couple of tools that have worked for me in the past.

First split to rows and then find all the lines that contain HREF. The rest is left as an exercise for the student.
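For readers who think in code, the split-to-rows-then-filter step could be sketched in Python roughly as follows. This is only an illustration, not the attached workflow: the function name `lines_with_href` and the sample HTML are made up for the example, and the real page markup may differ.

```python
def lines_with_href(download_data: str) -> list[str]:
    """Split the downloaded page into rows and keep the rows that mention href."""
    rows = download_data.splitlines()
    # Case-insensitive check, since HTML attribute names may be upper or lower case.
    return [row.strip() for row in rows if "href" in row.lower()]


# Hypothetical sample standing in for the DownloadData field.
sample_page = """<table>
<tr><td>05/11/2019</td>
<td><a href="/asx/statistics/displayAnnouncement.do?display=pdf&idsId=02169254">Some headline</a></td>
</tr>
</table>"""

href_rows = lines_with_href(sample_page)
print(href_rows)
```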

One thing I did notice is that the HREFs for the PDFs don't actually point to a PDF file, e.g. "https://www.asx.com.au/asx/statistics/displayAnnouncement.do?display=pdf&idsId=02169254". Each is actually a link to a license page that you'll have to navigate before you get to the actual file, "https://www.asx.com.au/asxpdf/20191105/pdf/44b8x1lk4hbb07.pdf". I haven't come across this kind of situation before and I'm not sure how you'll be able to work around it. Reaching out to @Claje, @jdunkerley79, @MarqueeCrew to see if they've come across similar situations.

Dan

Had a quick nosey.

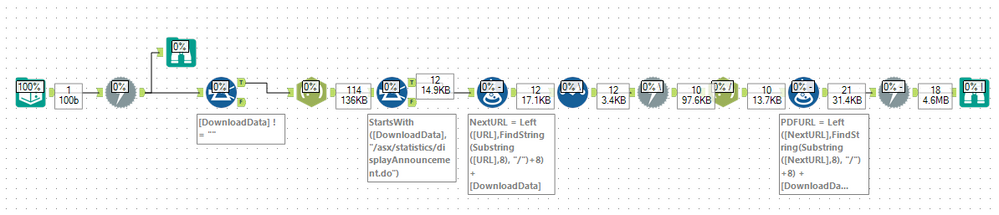

Need to do a multistage download:

First, download the listing page and pick the links out (I chose to just use a RegEx tokenise to Rows).

Then download each of those pages (which are all accept pages in my case).

Extract the pdfURL from the hidden input.

Then download that to a blob.

You then have all the PDFs. How you process those is a different issue!

Hi all,

You guys are amazing, the solution works like a charm!

Just for educational purposes, can you please explain why you used href="([^"]+)" in the RegEx tool? (especially the ([^"]+) part)

As the cherry on the cake I was hoping to incorporate the part in red in the file name of the PDFs that get extracted, but first I'd need to fully understand your great solution. If I add the description in the split, then I can parse it into a new column, and then use it in the file names.

| href="/asx/statistics/displayAnnouncement.do?display=pdf&idsId=02165227"> 2020 First Quarter Sales Results |

The regex is looking for hrefs in the text and then identifying the link contained between the double quotes that follow.

The "([^"]+)" picks out characters until it encounters another ". The brackets make it a marked group. The RegEx tool in tokenise mode will pick this marked group out and make it the value.
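The same pattern behaves the same way in most regex engines, so a quick Python check can make the explanation concrete. This is just an illustration using the snippet quoted earlier in the thread; Python's `re.findall` returns the marked group when the pattern contains exactly one, which is roughly what tokenise mode does.

```python
import re

# Snippet quoted earlier in the thread.
snippet = (
    'href="/asx/statistics/displayAnnouncement.do?display=pdf&idsId=02165227">'
    " 2020 First Quarter Sales Results"
)

# [^"]+ means "one or more characters that are not a double quote",
# so the group stops right before the closing quote of the attribute.
matches = re.findall(r'href="([^"]+)"', snippet)
print(matches)
# → ['/asx/statistics/displayAnnouncement.do?display=pdf&idsId=02165227']
```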

To do the latter request we need to do a little extra work.

1. Change the Regex to match the whole <a> tag: <a[^>]*href="[^"]+"[^>]*>.*?</a

2. Add a second Regex in Parse mode to pick URL and text description out

Have attached new version for you to look at
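As a rough Python equivalent of those two steps (not the attached workflow), the sketch below first matches whole `<a>` tags and then parses each one into a URL and a description. The pattern here ends with the full closing `</a>` tag. The helper `safe_file_name`, the date format, and the sample HTML are assumptions added for illustration, showing one way the description could feed into a file name.

```python
import re

# Step 1: match the whole <a> tag, including its text.
TAG_PATTERN = re.compile(r'<a[^>]*href="[^"]+"[^>]*>.*?</a>', re.DOTALL)
# Step 2: parse a matched tag into two marked groups: URL and description.
PARSE_PATTERN = re.compile(r'href="([^"]+)"[^>]*>(.*?)</a>', re.DOTALL)


def parse_links(html: str) -> list[tuple[str, str]]:
    """Return (url, description) pairs for every <a> tag in the HTML."""
    results = []
    for tag in TAG_PATTERN.findall(html):
        match = PARSE_PATTERN.search(tag)
        if match:
            # Collapse line breaks and repeated spaces in the link text.
            description = " ".join(match.group(2).split())
            results.append((match.group(1), description))
    return results


def safe_file_name(date: str, headline: str) -> str:
    """Hypothetical helper: join date and headline, dropping characters Windows rejects."""
    cleaned = re.sub(r'[\\/:*?"<>|]+', " ", f"{date} {headline}")
    return " ".join(cleaned.split()) + ".pdf"


# Hypothetical sample row from the listing page.
sample = (
    '<td><a href="/asx/statistics/displayAnnouncement.do?display=pdf&idsId=02165227">\n'
    " 2020 First Quarter Sales Results\n</a></td>"
)

pairs = parse_links(sample)
file_name = safe_file_name("05/11/2019", pairs[0][1])
print(pairs)
print(file_name)
```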

Is it possible to search the ASX website for "Annual Reports" released by a company and then within the annual reports the term "amended assessment" or "amended assessments"?

(Apologies for reaching out unintroduced, you just seem like the experts! ;))
