Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Question&Problem -> Python Tool : Cannot write Output data from tool


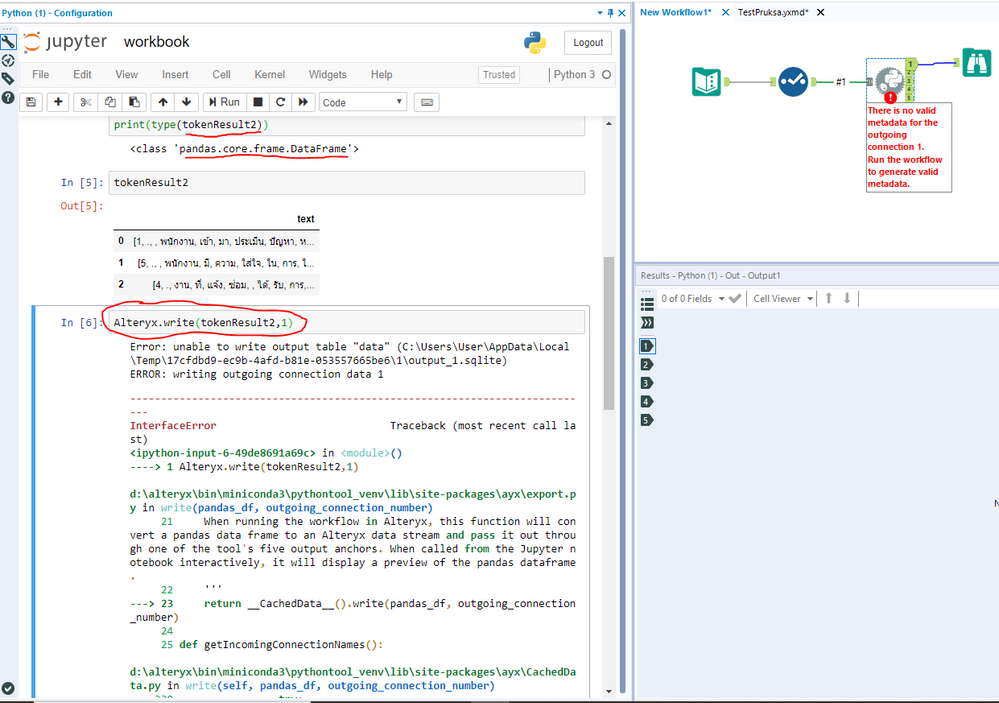

Python tool: I cannot write output data from the tool.

My variable is a pandas DataFrame, but I still cannot write it to the output!

How can I fix this? Please help T^T

Error message:

""

Error: Python (1): [NbConvertApp] Converting notebook C:\Users\User\AppData\Local\Temp\17cfdbd9-ec9b-4afd-b81e-053557665be6\1\workbook.ipynb to html

[NbConvertApp] Executing notebook with kernel: python3

2018-09-18 15:30:26.755508: I C:\tf_jenkins\workspace\rel-win\M\windows\PY\36\tensorflow\core\platform\cpu_feature_guard.cc:137] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX AVX2

[NbConvertApp] ERROR | Error while converting 'C:\Users\User\AppData\Local\Temp\17cfdbd9-ec9b-4afd-b81e-053557665be6\1\workbook.ipynb'

Traceback (most recent call last):

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\nbconvertapp.py", line 393, in export_single_notebook

output, resources = self.exporter.from_filename(notebook_filename, resources=resources)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\exporters\exporter.py", line 174, in from_filename

return self.from_file(f, resources=resources, **kw)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\exporters\exporter.py", line 192, in from_file

return self.from_notebook_node(nbformat.read(file_stream, as_version=4), resources=resources, **kw)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\exporters\html.py", line 85, in from_notebook_node

return super(HTMLExporter, self).from_notebook_node(nb, resources, **kw)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\exporters\templateexporter.py", line 280, in from_notebook_node

nb_copy, resources = super(TemplateExporter, self).from_notebook_node(nb, resources, **kw)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\exporters\exporter.py", line 134, in from_notebook_node

nb_copy, resources = self._preprocess(nb_copy, resources)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\exporters\exporter.py", line 311, in _preprocess

nbc, resc = preprocessor(nbc, resc)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\preprocessors\base.py", line 47, in __call__

return self.preprocess(nb, resources)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\preprocessors\execute.py", line 262, in preprocess

nb, resources = super(ExecutePreprocessor, self).preprocess(nb, resources)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\preprocessors\base.py", line 69, in preprocess

nb.cells[index], resources = self.preprocess_cell(cell, resources, index)

File "d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\nbconvert\preprocessors\execute.py", line 286, in preprocess_cell

raise CellExecutionError.from_cell_and_msg(cell, out)

nbconvert.preprocessors.execute.CellExecutionError: An error occurred while executing the following cell:

------------------

Alteryx.write(tokenResult2,1)

------------------

---------------------------------------------------------------------------

InterfaceError Traceback (most recent call last)

<ipython-input-4-49de8691a69c> in <module>()

----> 1 Alteryx.write(tokenResult2,1)

d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\ayx\export.py in write(pandas_df, outgoing_connection_number)

21 When running the workflow in Alteryx, this function will convert a pandas data frame to an Alteryx data stream and pass it out through one of the tool's five output anchors. When called from the Jupyter notebook interactively, it will display a preview of the pandas dataframe.

22 '''

---> 23 return __CachedData__().write(pandas_df, outgoing_connection_number)

24

25 def getIncomingConnectionNames():

d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\ayx\CachedData.py in write(self, pandas_df, outgoing_connection_number)

330 try:

331 # get the data from the sql db (if only one table exists, no need to specify the table name)

--> 332 data = db.writeData(pandas_df, 'data')

333 # print success message

334 print(''.join(['SUCCESS: ', msg_action]))

d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\ayx\CachedData.py in writeData(self, pandas_df, table)

170 print('Attempting to write data to table "{}"'.format(table))

171 try:

--> 172 pandas_df.to_sql(table, self.connection, if_exists='replace', index=False)

173 if self.debug:

174 print(fileErrorMsg(

d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\core\generic.py in to_sql(self, name, con, schema, if_exists, index, index_label, chunksize, dtype)

2128 sql.to_sql(self, name, con, schema=schema, if_exists=if_exists,

2129 index=index, index_label=index_label, chunksize=chunksize,

-> 2130 dtype=dtype)

2131

2132 def to_pickle(self, path, compression='infer',

d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\io\sql.py in to_sql(frame, name, con, schema, if_exists, index, index_label, chunksize, dtype)

448 pandas_sql.to_sql(frame, name, if_exists=if_exists, index=index,

449 index_label=index_label, schema=schema,

--> 450 chunksize=chunksize, dtype=dtype)

451

452

d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\io\sql.py in to_sql(self, frame, name, if_exists, index, index_label, schema, chunksize, dtype)

1479 dtype=dtype)

1480 table.create()

-> 1481 table.insert(chunksize)

1482

1483 def has_table(self, name, schema=None):

d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\io\sql.py in insert(self, chunksize)

639

640 chunk_iter = zip(*[arr[start_i:end_i] for arr in data_list])

--> 641 self._execute_insert(conn, keys, chunk_iter)

642

643 def _query_iterator(self, result, chunksize, columns, coerce_float=True,

d:\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\io\sql.py in _execute_insert(self, conn, keys, data_iter)

1268 def _execute_insert(self, conn, keys, data_iter):

1269 data_list = list(data_iter)

-> 1270 conn.executemany(self.insert_statement(), data_list)

1271

1272 def _create_table_setup(self):

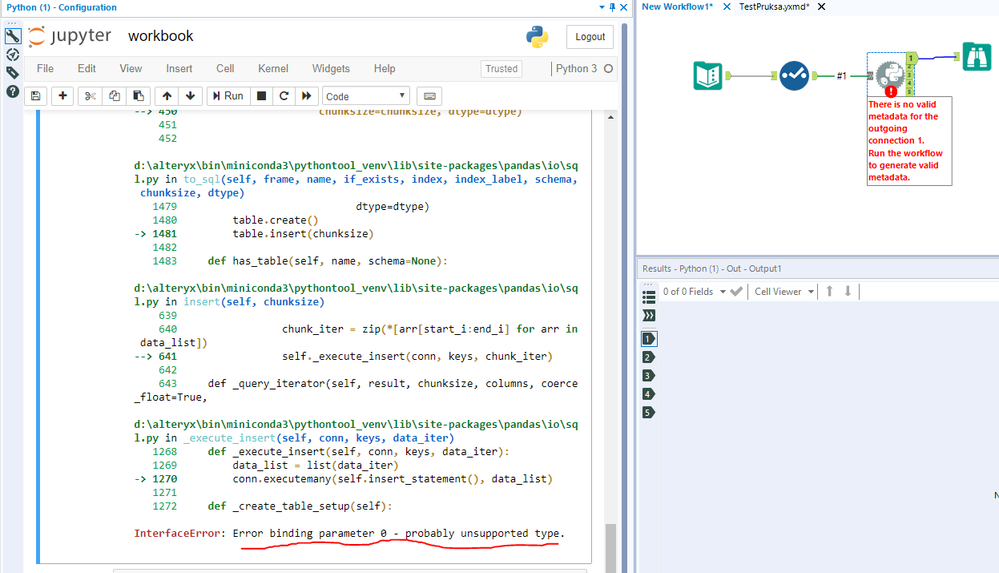

InterfaceError: Error binding parameter 0 - probably unsupported type.


""
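When the traceback only says which parameter failed to bind, it can help to list which columns hold values that Python's standard sqlite3 module cannot bind on its own. This is a minimal, conservative sketch, assuming the Python tool writes through pandas to_sql into SQLite as the traceback above shows. unbindable_columns is a hypothetical helper, and it may flag types that your pandas or Python version can in fact adapt.

```python
import pandas as pd
from pandas.api.types import is_datetime64_any_dtype, is_scalar

# Python types the standard sqlite3 module binds without a registered adapter
SQLITE_NATIVE = (int, float, str, bytes)


def unbindable_columns(df: pd.DataFrame) -> dict:
    """Map column position -> (column name, sorted type names sqlite3 may reject)."""
    problems = {}
    for position, (name, col) in enumerate(df.items()):
        if is_datetime64_any_dtype(col):
            continue  # pandas converts datetime64 columns itself before inserting
        bad_types = set()
        for value in col.astype(object):
            if is_scalar(value) and pd.isna(value):
                continue  # missing values are written as NULL
            if not isinstance(value, SQLITE_NATIVE):
                bad_types.add(type(value).__name__)
        if bad_types:
            problems[position] = (name, sorted(bad_types))
    return problems


# Hypothetical example frame: a Period column, a float column, a column of lists
example = pd.DataFrame({
    "date": pd.period_range("2014-01", periods=2, freq="M"),
    "value": [251.1, float("nan")],
    "tags": [["a", "b"], None],
})
print(unbindable_columns(example))  # {0: ('date', ['Period']), 2: ('tags', ['list'])}
```

Any column it reports can then be cast (for example with astype(str)) before calling Alteryx.write.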


Thanks, Paul!

The commands below, which you pointed me to, worked fine:

if not exist %userprofile%\.jupyter mkdir %userprofile%\.jupyter

echo c.ExecutePreprocessor.timeout = None > %userprofile%\.jupyter\jupyter_nbconvert_config.py

Regards,

Yann
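For anyone who prefers to do the same thing from Python instead of cmd, here is a minimal sketch. It assumes Jupyter's default config directory (~/.jupyter, which is %USERPROFILE%\.jupyter on Windows) and does not handle a JUPYTER_CONFIG_DIR override; write_nbconvert_timeout_config is a hypothetical helper name.

```python
from pathlib import Path


def write_nbconvert_timeout_config(config_dir=None) -> Path:
    """Create jupyter_nbconvert_config.py that disables the nbconvert cell timeout."""
    # Default: ~/.jupyter (Path.home() resolves to %USERPROFILE% on Windows)
    directory = Path(config_dir) if config_dir is not None else Path.home() / ".jupyter"
    directory.mkdir(parents=True, exist_ok=True)  # same as "if not exist ... mkdir ..."
    config_file = directory / "jupyter_nbconvert_config.py"
    # Overwrites any existing file, just like "echo ... >" does
    config_file.write_text("c.ExecutePreprocessor.timeout = None\n", encoding="utf-8")
    return config_file
```

Calling write_nbconvert_timeout_config() with no argument writes to the default location.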


Hi Paul, I'm having the same problem, and it doesn't seem to be related to either of the problems above. I'm using the BLS API, so my Python tool isn't connected to anything; it makes an API call to retrieve the data. It's currently the only tool in my workflow. Here's my script:

from ayx import Package

from ayx import Alteryx

import requests

import pandas as pd

import bls

bls_API_key = '14f4fc6cad9d4317a5e21842a53c2604'

sm_employees_thousands = pd.DataFrame(bls.get_series('SMS01000003000000001', 2014, 2018, bls_API_key))

sm_employees_thousands.columns

sm_employees_thousands.reset_index(inplace=True)

sm_employees_thousands.head()

| 2014-01 | 251.1 |

| 2014-02 | 250.9 |

| 2014-03 | 251.6 |

| 2014-04 | 251.8 |

| 2014-05 | 252.6 |

Alteryx.write(sm_employees_thousands,1)

Error: unable to write output table "data" (C:\Users\nspare\AppData\Local\Temp\0c571dd40d9cd7d1d88a1a9c97070ebd\1\output_1.sqlite) ERROR: writing outgoing connection data 1

---------------------------------------------------------------------------

InterfaceError Traceback (most recent call last)

<ipython-input-6-51e8a29ee518> in <module>

----> 1 Alteryx.write(sm_employees_thousands,1)

c:\program files\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\ayx\export.py in write(pandas_df, outgoing_connection_number, columns, debug, **kwargs)

78 When running the workflow in Alteryx, this function will convert a pandas data frame to an Alteryx data stream and pass it out through one of the tool's five output anchors. When called from the Jupyter notebook interactively, it will display a preview of the pandas dataframe.

79 '''

---> 80 return __CachedData__(debug=debug).write(pandas_df, outgoing_connection_number, columns=columns, **kwargs)

81

82 def getIncomingConnectionNames(debug=None, **kwargs):

c:\program files\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\ayx\CachedData.py in write(self, pandas_df, outgoing_connection_number, columns)

1354 try:

1355 # get the data from the sql db (if only one table exists, no need to specify the table name)

-> 1356 data = db.writeData(pandas_df_out, 'data', dtype=dtypes)

1357 # print success message

1358 print(''.join(['SUCCESS: ', msg_action]))

c:\program files\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\ayx\CachedData.py in writeData(self, pandas_df, table, dtype)

957 print('Attempting to write data to table "{}"'.format(table))

958 try:

--> 959 pandas_df.to_sql(table, self.connection, if_exists='replace', index=False, dtype=dtype)

960 if self.debug:

961 print(fileErrorMsg(

c:\program files\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\core\generic.py in to_sql(self, name, con, schema, if_exists, index, index_label, chunksize, dtype)

2128 sql.to_sql(self, name, con, schema=schema, if_exists=if_exists,

2129 index=index, index_label=index_label, chunksize=chunksize,

-> 2130 dtype=dtype)

2131

2132 def to_pickle(self, path, compression='infer',

c:\program files\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\io\sql.py in to_sql(frame, name, con, schema, if_exists, index, index_label, chunksize, dtype)

448 pandas_sql.to_sql(frame, name, if_exists=if_exists, index=index,

449 index_label=index_label, schema=schema,

--> 450 chunksize=chunksize, dtype=dtype)

451

452

c:\program files\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\io\sql.py in to_sql(self, frame, name, if_exists, index, index_label, schema, chunksize, dtype)

1479 dtype=dtype)

1480 table.create()

-> 1481 table.insert(chunksize)

1482

1483 def has_table(self, name, schema=None):

c:\program files\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\io\sql.py in insert(self, chunksize)

639

640 chunk_iter = zip(*[arr[start_i:end_i] for arr in data_list])

--> 641 self._execute_insert(conn, keys, chunk_iter)

642

643 def _query_iterator(self, result, chunksize, columns, coerce_float=True,

c:\program files\alteryx\bin\miniconda3\pythontool_venv\lib\site-packages\pandas\io\sql.py in _execute_insert(self, conn, keys, data_iter)

1268 def _execute_insert(self, conn, keys, data_iter):

1269 data_list = list(data_iter)

-> 1270 conn.executemany(self.insert_statement(), data_list)

1271

1272 def _create_table_setup(self):

InterfaceError: Error binding parameter 0 - probably unsupported type.


Hi @nafferly,

Thank you for posting!

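Looking at your example, I believe the error is caused by the date column: its values are pandas Period objects, a type the Alteryx Engine does not recognise. (The traceback shows that Alteryx.write goes through pandas to_sql into a SQLite file, and Python's sqlite3 module cannot bind a Period value, hence "Error binding parameter 0 - probably unsupported type".)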

Example:

If we check the type of the first value in the date column:

type(sm_employees_thousands.iloc[0][0])

> pandas._libs.tslibs.period.Period

As a workaround, you can cast date to str, or to any other type Alteryx recognises.

Example:

sm_employees_thousands = sm_employees_thousands.astype({"date":str})

Alteryx.write(sm_employees_thousands,1)
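To reproduce this outside Alteryx, here is a minimal sketch that writes through pandas to_sql into an in-memory SQLite database, the same route the traceback above shows the ayx package taking. Treating Alteryx.write as a plain to_sql call is a simplification; the try_to_sql helper is hypothetical, and the sample values are taken from the head() output above. The exact sqlite3 exception class and message vary by Python version, so the sketch catches sqlite3.Error.

```python
import sqlite3

import pandas as pd

# Monthly series with a PeriodIndex, like the one returned in this thread
monthly = pd.Series(
    [251.1, 250.9, 251.6],
    index=pd.period_range("2014-01", periods=3, freq="M", name="date"),
    name="value",
)
df = monthly.reset_index()  # the "date" column now holds pandas Period objects


def try_to_sql(frame: pd.DataFrame) -> bool:
    """Write frame to a throwaway in-memory SQLite table; report whether it worked."""
    with sqlite3.connect(":memory:") as conn:
        try:
            frame.to_sql("data", conn, if_exists="replace", index=False)
            return True
        except sqlite3.Error as exc:  # InterfaceError or ProgrammingError, by Python version
            print(f"write failed: {exc}")
            return False


print(type(df.loc[0, "date"]))  # <class 'pandas._libs.tslibs.period.Period'>
print(try_to_sql(df))           # False: sqlite3 cannot bind a Period value

fixed = df.astype({"date": str})  # the workaround above: cast the Period column to str
print(try_to_sql(fixed))          # True
```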

Thanks,

Paul Noirel

Sr Customer Support, Alteryx


Works perfectly now - thank you so much!


Old question, but I ran into a similar problem and solved it by converting every value in the DataFrame to a string:

df = df.applymap(str)
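Two notes on this approach, as a hedged sketch: DataFrame.applymap was renamed DataFrame.map in pandas 2.1 (applymap now emits a deprecation warning), and casting everything also turns numeric columns into text downstream. The helpers below are hypothetical; the second one only stringifies columns that are neither numeric nor datetime64, and assumes unique column names. In both, missing values become text such as "nan" or "None".

```python
import pandas as pd
from pandas.api.types import is_datetime64_any_dtype, is_numeric_dtype


def stringify_all(df: pd.DataFrame) -> pd.DataFrame:
    """Cast every cell to str, as df.applymap(str) does."""
    # DataFrame.map exists from pandas 2.1; fall back to applymap on older versions
    if hasattr(pd.DataFrame, "map"):
        return df.map(str)
    return df.applymap(str)


def stringify_non_numeric(df: pd.DataFrame) -> pd.DataFrame:
    """Cast only columns that are neither numeric nor datetime64 to str."""
    out = df.copy()
    for name in out.columns:
        col = out[name]
        if not (is_numeric_dtype(col) or is_datetime64_any_dtype(col)):
            out[name] = col.astype(str)  # e.g. Period("2014-01") -> "2014-01"
    return out


sample = pd.DataFrame({
    "date": pd.period_range("2014-01", periods=2, freq="M"),
    "value": [1.5, 2.0],
})
print(stringify_non_numeric(sample).dtypes)  # date becomes object (str), value stays float64
```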


For anyone else who comes across this thread, here's one more thing to check...

I also had this error occur, and the issue was that my DataFrame had two columns with the same name. Changing one of the column names (before outputting) resolved the issue.
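A minimal sketch for catching this before calling Alteryx.write. dedupe_columns is a hypothetical helper, and the numeric suffix scheme (_1, _2 and so on) is an arbitrary choice; it also converts every column label to str.

```python
import pandas as pd


def dedupe_columns(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy whose column names are unique, suffixing repeats with _1, _2, ..."""
    used = set()
    new_names = []
    for name in map(str, df.columns):
        candidate, n = name, 1
        while candidate in used:  # keep trying suffixes until the name is free
            candidate = f"{name}_{n}"
            n += 1
        used.add(candidate)
        new_names.append(candidate)
    out = df.copy()
    out.columns = new_names
    return out


dupes = pd.DataFrame([[1, 2, 3]], columns=["id", "id", "id_1"])
print(dupes.columns.duplicated().any())     # True
print(list(dedupe_columns(dupes).columns))  # ['id', 'id_1', 'id_1_1']
```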


Hello PaulN,

I have also been having trouble outputting a list from inside the Python tool to Alteryx.

If I understand you correctly, you cannot output a list, even inside a DataFrame, because Alteryx does not recognize lists.

I first tried to fix it in a way that made sense to me:

1) Since Alteryx doesn't recognize lists, convert the list to a string.

2) Turn that string into a DataFrame so it can be output. I did this by converting it to a Series with pd.Series() and then to a DataFrame with the .to_frame() method.

...although this did produce a DataFrame, I got an error when I tried to output it. Do you know why?

Then I tried your method. It is more advanced, and I don't fully understand what it is doing. Could you please explain it, and why it did not work the way I used it?

I have attached the input file and my workflow with notes.

Thank you in advance!


@G1 - a coworker and I just ran into this last week. The issue is that you're changing the working directory within the Python tool.

When you open the workflow, a temp directory is created, and that directory contains a .json file that has some configuration data needed when writing the data back out to the workflow. Since you're changing the working directory within your Python code, Alteryx is trying to look for that .json file in the changed directory (and not the original temp directory where the .json file lives).

Option 1 - at the beginning of your code, capture the current working directory, and then reset the working directory at the end of your code (before you write out to the workflow).

from ayx import Alteryx

from os import getcwd, chdir

# import whatever other modules you need...

# get the current (temp) directory

original_directory = getcwd()

# the rest of your code goes here...

# at the end, change the working directory back

# to the temp directory before writing out to the workflow

chdir(original_directory)

Alteryx.write(list_as_pandas_df,1)
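The same idea can be wrapped in a context manager so the original (temp) directory is restored even if the code in between raises an error. This is a minimal sketch; working_directory is a hypothetical helper name. On Python 3.11 or newer, the standard library's contextlib.chdir does the same job.

```python
import os
from contextlib import contextmanager


@contextmanager
def working_directory(path):
    """Temporarily chdir into path, always restoring the original directory."""
    original_directory = os.getcwd()
    os.chdir(path)
    try:
        yield
    finally:
        # Runs even if the code inside the with block raises
        os.chdir(original_directory)


# Usage inside the Python tool (path is a placeholder):
# with working_directory(r"C:\your-file-path-goes-here"):
#     ...read the files you need...
# Alteryx.write(list_as_pandas_df, 1)  # back in the temp directory here
```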

Option 2 - instead of changing the working directory, just use the full path when you read in the file. I would recommend using the pandas read_csv function to read the file directly into a dataframe (unless there is some specific reason that you need a file object).

from ayx import Alteryx

from pandas import read_csv

# read in the file as newline delimited

list_as_pandas_df = read_csv('C:\\your-file-path-goes-here\\where.txt', header=None, sep='\n')

Alteryx.write(list_as_pandas_df,1)
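Depending on your pandas version, read_csv may refuse sep='\n', since newer releases do not allow the line terminator to be used as the separator. A minimal sketch that builds a similar one-column DataFrame without read_csv; the function name, the "line" column name and the UTF-8 encoding are all assumptions. Blank lines are dropped, roughly like read_csv's default skip_blank_lines=True.

```python
from pathlib import Path

import pandas as pd


def read_lines_as_frame(path, column="line"):
    """One row per non-blank line of a text file."""
    text = Path(path).read_text(encoding="utf-8")  # assumes a UTF-8 text file
    lines = [line for line in text.splitlines() if line.strip()]
    return pd.DataFrame({column: lines})


# Usage inside the Python tool (path is a placeholder):
# list_as_pandas_df = read_lines_as_frame(r"C:\your-file-path-goes-here\where.txt")
# Alteryx.write(list_as_pandas_df, 1)
```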


Hey @G1,

Thanks for posting!

There are a few things in your code that may lead to errors.

1. Python tool scope

cd C:\Users\GWallace2\Box\Gemma_Box\Alteryx\Alteryx_References\Python\Ch16_Visualizations\Files\geodata\geodata

This will cause Alteryx.read() and Alteryx.write() to fail, because the Python tool will no longer be able to find its configuration file, jupyterPipes.json.

Example:

Config file error -- C:\Users\GWallace2\Box\Gemma_Box\Alteryx\Alteryx_References\Python\Ch16_Visualizations\Files\geodata\geodata\jupyterPipes.json"

[...]

FileNotFoundError: Cached data unavailable -- run the workflow to make the input data available in Jupyter notebook (C:\Users\GWallace2\Box\Gemma_Box\Alteryx\Alteryx_References\Python\Ch16_Visualizations\Files\geodata\geodata\jupyterPipes.json)

2. Python tool output data type

list_to_contain_file_info = []

[...]

test = pd.DataFrame(list_to_contain_file_info[list_to_contain_file_info.columns[0]].values.tolist(), index=list_to_contain_file_info.index)

Alteryx.write(test,1)

list_to_contain_file_info is a list, so it has no "columns" attribute.

The following should be enough:

test = pd.DataFrame(list_to_contain_file_info)

Alteryx.write(test,1)

Your list contains only strings, which the Alteryx Engine recognises without any problem (unlike the Period dates in the example posted earlier in this thread).
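To show the difference between the two routes discussed here, a minimal sketch with a hypothetical stand-in list: pd.DataFrame on the list gives one row per item, while converting the list to a single string first (the str, pd.Series, .to_frame() route described earlier) gives one row holding the whole list as text. The "file_info" column name is an assumption.

```python
import pandas as pd

# Hypothetical stand-in for list_to_contain_file_info
file_info = ["where.txt", "geodata.csv", "notes.txt"]

# One row per list item, with an explicit column name
per_item = pd.DataFrame({"file_info": file_info})

# list -> one string -> Series -> DataFrame: a single row
single_string = pd.Series(str(file_info)).to_frame()

print(per_item.shape)             # (3, 1)
print(single_string.shape)        # (1, 1)
print(single_string.iloc[0, 0])   # ['where.txt', 'geodata.csv', 'notes.txt']
print(hasattr(file_info, "columns"))  # False: a plain list has no .columns
```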

3. Misc

As a side note, your code opens the file 'where.txt' but never closes it:

open_file = open('where.txt', 'r')

print(open_file)

Call the following when you are done with the file, or use a "with" statement instead:

open_file.close()

Feel free to refer to https://docs.python.org/3.6/tutorial/inputoutput.html (section 7.2 Reading and Writing files).
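A minimal sketch of both patterns, using a hypothetical stand-in for 'where.txt' written to a temporary folder. Note that print(open_file) prints the file object itself, not the file's contents; read() returns the contents.

```python
import os
import tempfile

# Hypothetical stand-in for 'where.txt', created in a temp folder for the demo
path = os.path.join(tempfile.mkdtemp(), "where.txt")
with open(path, "w", encoding="utf-8") as f:
    f.write("geodata.csv\n")

# Explicit open/close: try/finally guarantees close() runs even on an error
open_file = open(path, "r", encoding="utf-8")
try:
    print(open_file.read())  # prints the contents, unlike print(open_file)
finally:
    open_file.close()

# Equivalent "with" statement: the file is closed when the block ends
with open(path, "r", encoding="utf-8") as open_file:
    contents = open_file.read()
print(open_file.closed)  # True
```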

Hope that helps. Do not hesitate to create your own thread for better visibility.

Thanks,

PaulN


@kelly_gilbert thank you! Your explanation is easy to understand.

I am using a file object because that is part of the exercise (I am learning Python), but I have made notes on your other options for future reference.
