Alteryx Designer Desktop Discussions

Processing time question on a very simple query

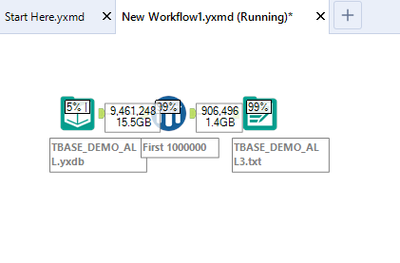

I have a large database with 180 million records that I put into a table called [TBASE_DEMO_ALL.yxdb].

The query I built below selects the first 1 million records and outputs the data into a .txt file. That's pretty much it.

My question: the SAMPLE tool and OUTPUT tool hit 99% in 2 minutes. The INPUT tool is slowly creeping up and, if my math is correct, will take a couple of hours to hit 100%.

Here's the kicker - if I cancel the workflow once the SAMPLE tool and OUTPUT tool hit 99% (so after 2 minutes), the 1 million record .txt file is created.

So in my head I'm thinking that the INPUT tool is still trying to read the rest of the file. Is there any way I can bypass reading the rest of the file? Am I not building the query correctly? Any thoughts would be appreciated. Thanks. Javier

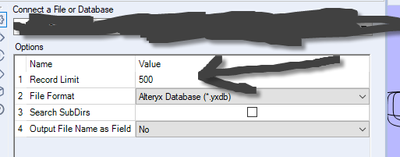

The Input tool configuration has a record limit setting. I don't know if it will read in the same 1,000,000 records each time, but it is worth testing (maybe test with something less than a million records, though). 🙂

So for this particular case - yep that worked! Thank you!

I have one more scenario:

My current .yxdb database has 300 fields and 180 million records.

If I write a query to get a count on just one (1) of the fields, it has to read the entire INPUT file and all 300 fields, even though I am interested in only one of them.

Is there a way, right from the beginning, to say: from my Input file, only read in the fields I specify?

Thanks again for any insight on this.

Javier

If you are using the Sample tool as a way to limit downstream records, you may be better off putting the record limit in the Input tool directly. Most data sources allow that option, and the Input tool should stop reading once it hits the limit. With the Sample tool approach, the Input tool still reads the file in its entirety, even though only the first records are kept.
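To make the difference concrete, here is a minimal Python sketch of the two approaches. It is only an analogy, not how the Alteryx engine works internally: `read_records`, `limit_at_source`, and `sample_downstream` are hypothetical names, and the "file" is a generator that counts how many records it was asked to produce.

```python
from itertools import islice


def read_records(total, counter):
    """Hypothetical stand-in for a file reader: yields one record at a time."""
    for i in range(total):
        counter["read"] += 1  # track how many records were actually read
        yield {"RecordID": i + 1}


def limit_at_source(total, limit):
    """Analogy for a record limit on the input: stop pulling after `limit` records."""
    counter = {"read": 0}
    rows = list(islice(read_records(total, counter), limit))
    return rows, counter["read"]


def sample_downstream(total, limit):
    """Analogy for sampling after the input: keep `limit` records, read everything."""
    counter = {"read": 0}
    rows = []
    for record in read_records(total, counter):
        if len(rows) < limit:
            rows.append(record)
        # no early exit: the reader keeps going until the source is exhausted
    return rows, counter["read"]


rows_a, read_a = limit_at_source(total=10_000, limit=100)
rows_b, read_b = sample_downstream(total=10_000, limit=100)
print(len(rows_a), read_a)
print(len(rows_b), read_b)
```

Both functions return the same 100 records; the only difference is how many records the reader had to produce to get there.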

Another trick when dealing with those volumes is to right-click and Cache and Run workflow so it only reads it once as you are building. You only feel the pain once that way.

I don't know how to actually do this, as I have never had the need, but I think you can use a Dynamic Input tool and modify the SQL that brings in the data so it selects only the columns you want.

Check out the tool help page https://help.alteryx.com/20212/designer/dynamic-input-tool

And the examples available within designer might help too.

And I am sure there are other threads in the community that would cover this topic. Hopefully someone else with actual experience with it can post in here too. 🙂
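As a rough illustration of "only select the columns you want", here is a small Python sketch using the standard-library `sqlite3` module. It assumes a SQL data source; whether the same approach applies to a .yxdb file is not confirmed in this thread. The table `demo`, its columns, and the helper `count_field` are all made up for the example.

```python
import sqlite3

# Hypothetical in-memory table standing in for a wide source table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE demo (id INTEGER, state TEXT, notes TEXT)")
conn.executemany(
    "INSERT INTO demo VALUES (?, ?, ?)",
    [(1, "TX", "a"), (2, "CA", "b"), (3, None, "c")],
)


def count_field(conn, table, field):
    """Count non-NULL values of one column without selecting the others."""
    # Identifiers cannot be bound as parameters, so they are quoted inline.
    # Only pass trusted table and column names to this helper.
    sql = f'SELECT COUNT("{field}") FROM "{table}"'
    return conn.execute(sql).fetchone()[0]


state_count = count_field(conn, "demo", "state")
print(state_count)
```

The query names a single column, so the database only has to return that one value instead of every field in the row.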

I totally missed DanielG's post in my last response. I have found that working with those volumes takes more care during development so you don't pull your hair out. For example, I just wrote a test workflow that pulls 2.6M records from Snowflake and does a count distinct on my PK. This takes 42 seconds.

The trick I have been using is to put a sacrificial Select tool (or any tool with a single output anchor) after the Input tool and then do Right-Click --> Cache and Run Workflow. In many cases you can cache the Input tool's results directly, but not when using a YXDB + Input. You can, however, set a cache point using the Select right after it. The second pull, reading from that cache once it was in memory, took 7 seconds, BUT I still have that output, with all my columns, to use later on.

A YXDB is basically a text file with no indexes, so reading it is a brute-force procedure. You can also use AMP to help the read times, if you have created the YXDB to be AMP-friendly.

@dataguyW -- I agree completely that, for testing purposes, Cache and Run is a fantastic time saver.

Caching literally creates a YXDB file within the temp folders on your computer, so I wonder if just dropping his actual YXDB into that same folder would give the same improvement in run time? Does the Cache and Run process do anything under the covers to optimize the file beyond the standard YXDB creation process of an Output tool in another workflow?

Not that dropping a Select after the Input isn't easier than finding the cache folder and moving the YXDB there. I am just curious now that we are talking about it. Haha.

I haven't done anything with AMP yet either, but I will try to get into it a bit more when my time frees up.

@jdelaguila - hopefully something in here is helpful to you 🙂

DanielG - You are correct that the Input tool's "Cache Input" and the Cache and Run Workflow option both actually create a local .yxdb. I thought the latter was going straight into memory.
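The general pattern of caching to a local file can be sketched in Python as below. This only illustrates the idea of "read the slow source once, then reuse a local copy"; it says nothing about how Designer builds or names its cache files. `slow_read`, `cached_read`, and the `.pkl` cache file are hypothetical.

```python
import os
import pickle
import tempfile


def slow_read(counter):
    """Hypothetical expensive read; counts how often the source is hit."""
    counter["reads"] += 1
    return [{"RecordID": i + 1} for i in range(1000)]


def cached_read(cache_path, counter):
    """Return rows from the local cache file, creating it on the first call."""
    if os.path.exists(cache_path):
        with open(cache_path, "rb") as fh:
            return pickle.load(fh)
    rows = slow_read(counter)
    with open(cache_path, "wb") as fh:
        pickle.dump(rows, fh)
    return rows


counter = {"reads": 0}
with tempfile.TemporaryDirectory() as tmp:
    cache_path = os.path.join(tmp, "input_cache.pkl")
    first = cached_read(cache_path, counter)   # hits the slow source
    second = cached_read(cache_path, counter)  # served from the cache file

print(counter["reads"])
```

The second call never touches the slow source, which is the "you only feel the pain once" effect described earlier in the thread.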

Another option I've used when data gets into the hundreds of millions of records is to create a Calgary output file. This makes the "temp" database behave more like a normal indexed database and, from what the help file says, its sweet spot is between 100M and 300M records. I just built one. Even if you just create a RecordID and filter the Calgary output for RecordID <= 1,000,000, it returns nearly instantly.
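Why an index helps can be shown with a small Python sketch. This is a generic illustration of indexed versus brute-force filtering, assuming records are stored in RecordID order; it is not a description of the Calgary file format. `scan_filter` and `indexed_filter` are hypothetical names.

```python
from bisect import bisect_right


def scan_filter(records, max_id):
    """Brute force: test every record, as with an unindexed file."""
    return [r for r in records if r[0] <= max_id]


def indexed_filter(records, sorted_ids, max_id):
    """Indexed: binary-search the cutoff position, then slice the matches."""
    cutoff = bisect_right(sorted_ids, max_id)
    return records[:cutoff]


# Records stored in RecordID order; sorted_ids plays the role of the index.
records = [(i, f"row {i}") for i in range(1, 50_001)]
sorted_ids = [r[0] for r in records]

by_scan = scan_filter(records, 1_000)
by_index = indexed_filter(records, sorted_ids, 1_000)
print(len(by_scan), len(by_index))
```

Both functions return the same rows, but the indexed version finds the cutoff in a handful of comparisons instead of checking all 50,000 records.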

Thanks everyone! I never knew about "Cache and Run". That has been very helpful. Also, the Calgary idea was great! Game changer. Thanks everyone for all the great advice!
