Alteryx Designer Desktop Discussions

Lookup Value in Matrix Range

Hello,

I need help structuring a workflow that looks up a value stored in a matrix.

Essentially, based on two different performance metrics, I need a "score" returned. An example of the matrix is below.

| Metric 2 \ Metric 1 | 10-15% | 15-20% | 20-25% | 25-30% |
| --- | --- | --- | --- | --- |
| 5-10% | 1 | 1.5 | 2 | 3 |
| 10-15% | 1.5 | 2 | 3 | 4 |
| 15-20% | 2 | 3 | 4 | 5 |
| 20-25% | 3 | 4 | 5 | 6 |

So someone who scored 10.3% on Metric 1 and 15.7% on Metric 2 would get a score of 2.

I know I could write a complicated if/then statement, but I have 6 unique matrices depending on which department a person works in, so it would be pretty exhausting to write out.

Also, I'm not sure whether I could use the Generate Rows tool, as the data is continuous, but maybe that is an option?

I can't share the actual data, but each record is a single person and the two metrics are two columns.

Input:

| Name | Metric 1 | Metric 2 |
| --- | --- | --- |
| Jane Doe | 10.7% | 15.3% |

Desired Output:

| Name | Metric 1 | Metric 2 | Score |
| --- | --- | --- | --- |
| Jane Doe | 10.7% | 15.3% | 2 |

Thank you!

Hi @atperry30

Assuming you don't have hundreds of thousands of records.

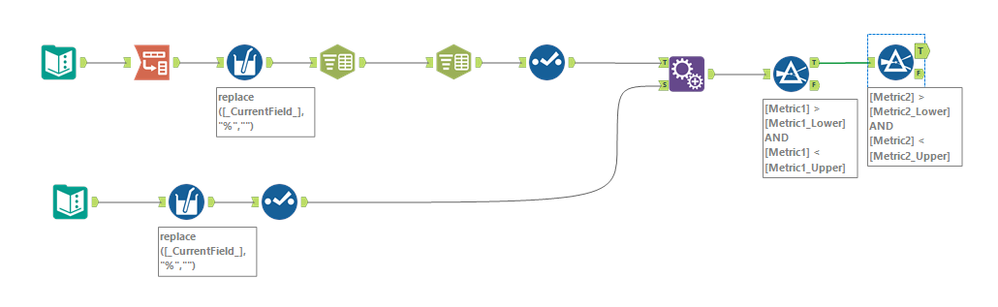

This workflow should work well for you:

In the top branch, I create every combination of the matrix bands, each with its lower and upper bounds.

I then append all the people to the matrix and filter down to the row where both metrics fall inside their bands.
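For anyone who wants to check the logic outside Alteryx, the append-then-filter idea can be sketched in plain Python. This is only an illustration: the function names are made up, the band edges and scores are copied from the example matrix in the question, and treating each band as lower-inclusive and upper-exclusive is an assumption, since the thread does not say which band a boundary value such as 15% belongs to.

```python
# Hypothetical sketch of the append-then-filter approach in plain Python.
# Band edges and scores come from the example matrix in the question.
# Assumption: each band is lower-inclusive and upper-exclusive.

M1_BANDS = [(10, 15), (15, 20), (20, 25), (25, 30)]   # matrix columns
M2_BANDS = [(5, 10), (10, 15), (15, 20), (20, 25)]    # matrix rows
SCORES = [
    [1, 1.5, 2, 3],
    [1.5, 2, 3, 4],
    [2, 3, 4, 5],
    [3, 4, 5, 6],
]


def unpivot_matrix():
    """Return one row per matrix cell with both bands' bounds and the score."""
    rows = []
    for r, (m2_lo, m2_hi) in enumerate(M2_BANDS):
        for c, (m1_lo, m1_hi) in enumerate(M1_BANDS):
            rows.append((m1_lo, m1_hi, m2_lo, m2_hi, SCORES[r][c]))
    return rows


def score_people(people):
    """Pair every person with every cell (the append), then keep the match."""
    cells = unpivot_matrix()
    matched = []
    for name, m1, m2 in people:
        for m1_lo, m1_hi, m2_lo, m2_hi, score in cells:
            if m1_lo <= m1 < m1_hi and m2_lo <= m2 < m2_hi:
                matched.append((name, m1, m2, score))
    return matched


print(score_people([("Jane Doe", 10.7, 15.3)]))
# prints [('Jane Doe', 10.7, 15.3, 2)]
```

Every person is compared against all 16 cells, which is why the intermediate record count grows as people x cells. For the six department matrices, the same idea would carry a department key on both sides, which is not shown here.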

As mentioned, though, the Append Fields tool will not be the best choice if you do have large data volumes. That said, Alteryx will churn through the data and make it work if you have the PC resources.

Let me know if you need a more efficient workflow and I'll see if I can come up with one.

Joe,

This is fantastic, thank you!

Unfortunately yes, in terms of the number of records, about 1.1 million go through the whole workflow (records are unique per person, per month). However, we only need to apply the score to the previous 3 months' worth of data, so that's approximately 150,000 records. So perhaps we could branch off that data and apply the methodology only to those records.

But if you do have a more efficient way, that could be helpful! It's already a very long workflow and we're always trying to shave minutes off the run time.

Best,

Alex

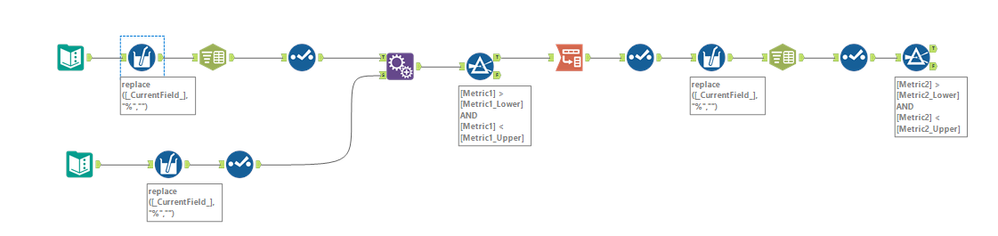

Hi Alex,

Same logic, but applied in a slightly different order. It may run faster, because we only transpose on the second metric's bands after finding which band the first metric falls into (if that makes sense).

On the small sample data it runs in 0.3 seconds, compared with 0.4 seconds for the workflow above.

The record counts shouldn't get as big, because it's only ever going to be the number of person-month records times the number of metric bands, and not people x metric1 x metric2.
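As a rough illustration of that ordering, here is a plain-Python sketch that matches the Metric 1 band first and only then looks along the matched column for the Metric 2 band. It is my reading of the description, not a transcription of the workflow in the screenshot. The function names are hypothetical, and the lower-inclusive, upper-exclusive band edges are an assumption.

```python
# Hypothetical sketch of the two-stage lookup described above.
# Band edges and scores come from the example matrix in the question.
# Assumption: each band is lower-inclusive and upper-exclusive.

M1_BANDS = [(10, 15), (15, 20), (20, 25), (25, 30)]   # matrix columns
M2_BANDS = [(5, 10), (10, 15), (15, 20), (20, 25)]    # matrix rows
SCORES = [
    [1, 1.5, 2, 3],
    [1.5, 2, 3, 4],
    [2, 3, 4, 5],
    [3, 4, 5, 6],
]


def find_band(value, bands):
    """Return the index of the band containing value, or None if no band fits."""
    for index, (lower, upper) in enumerate(bands):
        if lower <= value < upper:
            return index
    return None


def score_two_stage(people):
    """Stage 1 picks the Metric 1 column; stage 2 picks the Metric 2 row in it."""
    results = []
    for name, m1, m2 in people:
        col = find_band(m1, M1_BANDS)
        if col is None:
            continue  # Metric 1 falls outside the matrix, so there is no score
        column = [row[col] for row in SCORES]  # one value per Metric 2 band
        row_index = find_band(m2, M2_BANDS)
        if row_index is None:
            continue  # Metric 2 falls outside the matrix
        results.append((name, m1, m2, column[row_index]))
    return results


print(score_two_stage([("Jane Doe", 10.7, 15.3)]))
# prints [('Jane Doe', 10.7, 15.3, 2)]
```

With the 4 x 4 example matrix, each stage compares a person against 4 bands rather than all 16 cells at once.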

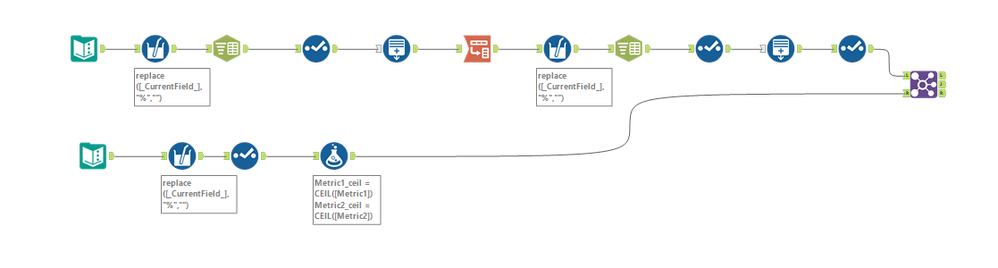

The other way would be to round the metric values and prepare the matrix file a lot more, so that it holds one row per rounded value.

That is a fair bit more work on the matrix file, but I assume it has much less volume. You then only have a join between the 150k records and however many rows the matrix grows to.
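To make the rounding idea concrete, here is a small plain-Python sketch in which a dictionary lookup stands in for the join. It assumes the metrics are rounded to one decimal place, so each key is an integer number of tenths of a percent. That precision, the function names, and the lower-inclusive, upper-exclusive band edges are all assumptions for illustration.

```python
# Hypothetical sketch of the rounding approach: expand the matrix to one
# entry per rounded value pair so an exact-match join replaces the range filter.
# Assumptions: metrics are rounded to one decimal place; bands are
# lower-inclusive and upper-exclusive.

M1_BANDS = [(10, 15), (15, 20), (20, 25), (25, 30)]   # matrix columns
M2_BANDS = [(5, 10), (10, 15), (15, 20), (20, 25)]    # matrix rows
SCORES = [
    [1, 1.5, 2, 3],
    [1.5, 2, 3, 4],
    [2, 3, 4, 5],
    [3, 4, 5, 6],
]


def build_lookup():
    """Map (metric1_tenths, metric2_tenths) to the score of the enclosing cell."""
    lookup = {}
    for r, (m2_lo, m2_hi) in enumerate(M2_BANDS):
        for c, (m1_lo, m1_hi) in enumerate(M1_BANDS):
            for k1 in range(m1_lo * 10, m1_hi * 10):
                for k2 in range(m2_lo * 10, m2_hi * 10):
                    lookup[(k1, k2)] = SCORES[r][c]
    return lookup


def to_key(value):
    """Round a percentage to the nearest tenth and express it as an integer."""
    return round(value * 10)


def score(lookup, m1, m2):
    """Exact-match lookup; returns None when the pair is outside the matrix."""
    return lookup.get((to_key(m1), to_key(m2)))


lookup = build_lookup()
print(len(lookup), score(lookup, 10.7, 15.3))
# prints 40000 2
```

The expanded example matrix has 40,000 entries, so the join is between the people records and a table of that size. One caveat of rounding is that a value just under a band edge, such as 14.97%, rounds up into the next band.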

I may have to bow out with the above and see if anyone else can think of a more efficient flow.

Joe,

This also works well. We were able to narrow the data that needs to be processed enough that I think your first solution will be perfect, but if not, I'll try the second. I'm so grateful for such a quick reply!

Great stuff, always good to have multiple choices.

I think option 3 may be the most efficient on a large data set.
