Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.


Join with wildcard for some fields


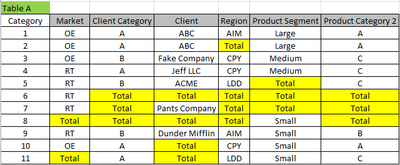

I would like to join 2 tables together and I don't know a good way to do it. Table A has all the real possible combinations of categories that I need, which is about 50,000 possibilities. Any "Total" in the data in Table A (highlighted in yellow) means any value will match.

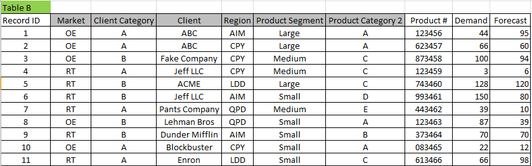

Table B has actual demand and forecast data at the lower product # level with about 25,000 records that I will later use to run some forecast accuracy calculations.

I want to join the two tables using the grey-headed columns as key fields (right join), but with a sort of wildcard: if a key field in Table A contains "Total", it should match any value in Table B.

For example, Records 1 and 2 from Table B should join with Category row 2 of Table A because any region matches "Total". However, only record 1 from Table B should join with Category row 1 of Table A because that category row needs region AIM specifically. Obviously, the table will expand into millions of records when this join is done.
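The matching rule described above can be written as a small predicate. This is a minimal Python sketch of the logic, not an Alteryx workflow; the field names `Category` and `Region` and the sample values (apart from AIM) are hypothetical stand-ins for the grey key columns in the screenshots.

```python
# Hypothetical key-field names and values; the real columns are in the screenshots above.
KEY_FIELDS = ("Category", "Region")
WILDCARD = "Total"


def matches(a_row: dict, b_row: dict, keys=KEY_FIELDS) -> bool:
    """A Table A row matches a Table B row when every key field is equal
    or the Table A value is the "Total" wildcard."""
    return all(a_row[k] == WILDCARD or a_row[k] == b_row[k] for k in keys)


# Two Table A rows: one needs region AIM, the other accepts any region.
a_row_1 = {"Category": "Widgets", "Region": "AIM"}
a_row_2 = {"Category": "Widgets", "Region": "Total"}

# Two Table B records in different regions.
b_rec_1 = {"Category": "Widgets", "Region": "AIM"}
b_rec_2 = {"Category": "Widgets", "Region": "EMEA"}

print(matches(a_row_1, b_rec_1), matches(a_row_1, b_rec_2))  # True False
print(matches(a_row_2, b_rec_1), matches(a_row_2, b_rec_2))  # True True
```

Note that the wildcard only works in one direction: "Total" in Table A matches anything, while a literal "Total" in Table B would only match "Total" in Table A.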

I don't know if this can be done with some iterative macro or some other trick. Can someone help?


Labels: Iterative Macro, Join


Hi there!

I would love to help with this situation - it sounds like a really interesting challenge. I have a couple of questions about the data before I can think about the best way forward:

1) First of all, what are you planning to use this data for once the join is completed? Will the totals (with their multiple rows) be used to sum the values in Table B and get back to one row for your original Table A, or do you plan to have a final data set with multiple rows for each of those totals? Once the data is created, will you be using it for reporting, and in what format/software?

2) Is your Table A meant to contain all possible combinations of totals, or will you only need a subset of the possible roll-up totals? I ask because I would anticipate the total number of possible combinations is very large (probably larger than 50,000 - there are 52 combinations of different totals/non totals for a single row alone). It is important to know whether we are dealing with very large data volumes before we consider an iterative approach.


Hey @TLyle

This should do it. Might not be the best approach once the data gets really big, but I think this gives you the idea. You could turn this into a batch macro to do this join row by row so you don't have to do the append. Wanted to give you something that you could start with. Let me know if this works for you!
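The attached workflow isn't reproduced in this thread, but the post implies an append-then-filter design: append every Table B record to every Table A row, then keep only the pairs that satisfy the "Total" wildcard rule. A rough Python sketch of that idea, with hypothetical field names and data, is below. With the sizes in the question, the append step alone produces 50,000 × 25,000 = 1.25 billion candidate pairs before the filter runs, which is why this approach may struggle as the data grows.

```python
from itertools import product

WILDCARD = "Total"


def append_then_filter(table_a, table_b, keys):
    """Cross join every Table A row with every Table B record (the append
    step), then keep only pairs where each key field matches or the
    Table A value is the "Total" wildcard."""
    joined = []
    for a_row, b_row in product(table_a, table_b):
        if all(a_row[k] == WILDCARD or a_row[k] == b_row[k] for k in keys):
            joined.append((a_row, b_row))
    return joined


# Hypothetical sample data with two key fields.
keys = ("Category", "Region")
table_a = [
    {"Category": "Widgets", "Region": "AIM"},
    {"Category": "Widgets", "Region": "Total"},
    {"Category": "Total", "Region": "Total"},
]
table_b = [
    {"Category": "Widgets", "Region": "AIM", "Demand": 10},
    {"Category": "Widgets", "Region": "EMEA", "Demand": 7},
    {"Category": "Gadgets", "Region": "AIM", "Demand": 3},
]

result = append_then_filter(table_a, table_b, keys)
print(len(result))  # 1 + 2 + 3 = 6 matching pairs
```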

Joshua


Of course I couldn't help myself and went ahead and created the batch version, which I think should perform better as it limits the number of appends that need to happen by batching by RecordID. Spot check to make sure this gives you what you need 🙂
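As a sketch of what batching by RecordID could mean, assuming each batch-macro iteration receives one Table A row and filters Table B on that row's non-"Total" fields, a Python generator can play the role of the macro. Names and data are hypothetical, and this does not claim to mirror the internals of the attached macro.

```python
WILDCARD = "Total"


def batched_wildcard_join(table_a, table_b, keys):
    """Process Table A one row (RecordID) at a time, like one batch-macro
    iteration per RecordID, yielding that row's matches before moving on."""
    for record_id, a_row in enumerate(table_a, start=1):
        # Only the non-"Total" fields act as filter conditions for this batch.
        conditions = {k: a_row[k] for k in keys if a_row[k] != WILDCARD}
        for b_row in table_b:
            if all(b_row[k] == v for k, v in conditions.items()):
                yield record_id, b_row


keys = ("Category", "Region")
table_a = [
    {"Category": "Widgets", "Region": "AIM"},
    {"Category": "Widgets", "Region": "Total"},
]
table_b = [
    {"Category": "Widgets", "Region": "AIM", "Demand": 10},
    {"Category": "Widgets", "Region": "EMEA", "Demand": 7},
]

for record_id, b_row in batched_wildcard_join(table_a, table_b, keys):
    print(record_id, b_row["Region"])
# 1 AIM
# 2 AIM
# 2 EMEA
```

The number of comparisons is the same as in a full append; the likely gain is that only one RecordID's matches are produced at a time instead of materializing the whole cross join at once.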


@joshuaburkhow Excellent solution. This could be the next Weekly Challenge! I think the macro is not attached.


Thanks Joshua, that was definitely the simple logic I thought might exist but couldn't figure out. This solution works, but I think your macro solution will be more scalable, as the records will expand to a huge number before I pare them down a couple of steps later.
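One further option, which was not suggested in this thread, is to group the Table A rows by which key fields contain "Total" and then run an ordinary equi-join on the remaining fields for each group. With k key fields there are at most 2^k such patterns, so Table B is scanned once per pattern rather than once per Table A row. The following is a minimal Python sketch of that swapped-in technique with hypothetical field names and data; how well it maps onto Designer tools is an untested assumption.

```python
from collections import defaultdict

WILDCARD = "Total"


def pattern_grouped_join(table_a, table_b, keys):
    """Wildcard join that groups Table A rows by which key fields are "Total",
    then runs an ordinary hash join on the remaining fields for each group."""
    # 1. Group Table A rows by the tuple of key fields that are NOT wildcards.
    groups = defaultdict(list)
    for a_row in table_a:
        fixed = tuple(k for k in keys if a_row[k] != WILDCARD)
        groups[fixed].append(a_row)

    joined = []
    for fixed, a_rows in groups.items():
        # 2. Index Table B on just this group's non-wildcard fields.
        index = defaultdict(list)
        for b_row in table_b:
            index[tuple(b_row[k] for k in fixed)].append(b_row)
        # 3. Equi-join: each Table A row looks up its exact key tuple.
        for a_row in a_rows:
            for b_row in index.get(tuple(a_row[k] for k in fixed), []):
                joined.append((a_row, b_row))
    return joined


# Hypothetical sample data with two key fields.
keys = ("Category", "Region")
table_a = [
    {"Category": "Widgets", "Region": "AIM"},
    {"Category": "Widgets", "Region": "Total"},
    {"Category": "Total", "Region": "Total"},
]
table_b = [
    {"Category": "Widgets", "Region": "AIM", "Demand": 10},
    {"Category": "Widgets", "Region": "EMEA", "Demand": 7},
    {"Category": "Gadgets", "Region": "AIM", "Demand": 3},
]

result = pattern_grouped_join(table_a, table_b, keys)
print(len(result))  # 6
```

It returns the same matching pairs as a full cross join followed by a filter, only in a different order. The all-"Total" pattern uses an empty key tuple, so every Table B record lands in one bucket and matches.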


@joshuaburkhow could you post the macro as well? I can't load the workflow without the macro.


Also I know a lot of us here would love to know how it actually performs on the large dataset. If you remember/can post how the macro did against the larger data I would love to know!

thanks!

JB
