Alteryx Designer Desktop Discussions


How to force a type but leave byte size unfixed?


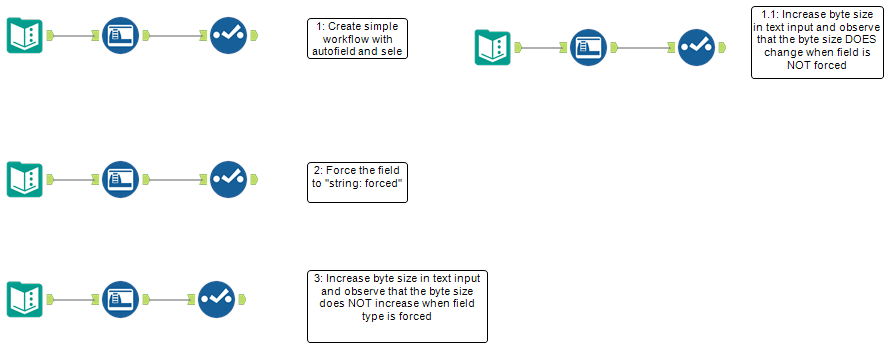

The post title and workflow comments describe the situation exactly.

The fact that forcing a data type locks in the byte size, regardless of whether there is an autofield, is ridiculous and causing a lot of problems for me.

I NEED the data type to be forced; otherwise Auto Field mucks it up. But I also need the byte size to be set dynamically (minimized via Auto Field) for each field on each run of the workflow, for optimization.

How do I do this?

EDIT:

Furthermore, it is NOT warning about data truncation. Why is this? I've just spent days uploading millions of records to a table, and now I see I could easily have tons of truncations without even getting a warning? Why?


Hi @Joshman108

You can try changing the sequence. First use a Select tool to force the data type, but give the field a very large size so that it can accommodate long strings. Then use an Auto Field tool on the required fields to reduce the string size.
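To illustrate what the size-minimizing step is doing, here is a minimal plain-Python sketch (not Alteryx code). It assumes every field has already been forced to a string, and it only computes the longest value per field, which is the smallest size that avoids truncation. The function name `minimal_string_sizes` and the sample rows are made up for illustration.

```python
from typing import Dict, List


def minimal_string_sizes(rows: List[Dict[str, str]]) -> Dict[str, int]:
    """Return, per field, the length of the longest value seen."""
    sizes: Dict[str, int] = {}
    for row in rows:
        for field, value in row.items():
            # Keep the largest length observed so far for this field.
            sizes[field] = max(sizes.get(field, 0), len(value))
    return sizes


# Hypothetical sample data: every value is already a string.
rows = [
    {"zip": "02134", "name": "Ann"},
    {"zip": "10001", "name": "Bartholomew"},
]
sizes = minimal_string_sizes(rows)
print(sizes)  # {'zip': 5, 'name': 11}
```

Because the sizes are recomputed from the data on every run, they shrink or grow with the input instead of staying fixed at the large size set upstream.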

Workflow:

Hope this helps 🙂


I actually accepted this too soon; it does not fully solve the issue.

My data differs from these examples: I have string fields that look like numbers. In your example, Auto Field will still obliterate the field type if it thinks the field is a number, regardless of whether the upstream Select specifies string: forced.

Unfortunately my data must remain this way.

So it's still unresolved, but... I think we're going to use a different tool for this.

Very frustrating though.


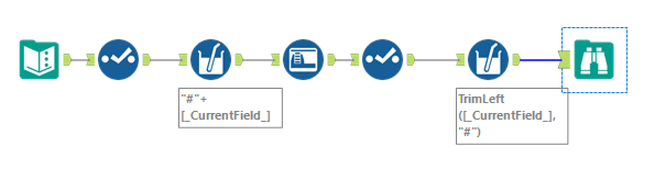

Hi @Joshman108

Here is what you can do. To prevent strings from being converted to numbers, prepend "#" (it could be any character) before sending them into Auto Field, and remove it afterwards. We can prepend "#" to multiple columns at once using the Multi-Field Formula tool.

Workflow:

1. Use a Select tool to force the type and give a large size.

2. Use a Multi-Field Formula tool to prepend "#" to all string fields.

3. Use Auto Field to minimize the size. Since "#" is prepended, the fields won't be converted to numbers.

4. Use a Multi-Field Formula tool to remove the leading "#" and get the values back to normal.
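The four steps above can be sketched in plain Python (not Alteryx code) to show why the sentinel character works. Here `auto_field` is a hypothetical stand-in for automatic type inference, not the actual Auto Field logic, and the helper names and sample values are assumptions made for illustration.

```python
from typing import List, Tuple

SENTINEL = "#"  # any character that stops a value from parsing as a number


def looks_numeric(value: str) -> bool:
    """True if the string parses as a number."""
    try:
        float(value)
        return True
    except ValueError:
        return False


def auto_field(values: List[str]) -> Tuple[str, int]:
    """Hypothetical stand-in for type inference: returns (type, size)."""
    if values and all(looks_numeric(v) for v in values):
        return ("Number", 8)
    return ("String", max((len(v) for v in values), default=0))


def protect(values: List[str]) -> List[str]:
    """Step 2: prepend the sentinel to every value."""
    return [SENTINEL + v for v in values]


def restore(values: List[str]) -> List[str]:
    """Step 4: strip exactly one leading sentinel from every value."""
    return [v[len(SENTINEL):] for v in values]


ids = ["00123", "04567"]               # strings that look like numbers
unprotected = auto_field(ids)          # ('Number', 8): the type is lost
protected = auto_field(protect(ids))   # ('String', 6): the type survives
round_trip = restore(protect(ids))     # ['00123', '04567']
print(unprotected, protected, round_trip)
```

Note that in this sketch the inferred size includes the sentinel, so the reported size is one character larger than the longest restored value. Stripping exactly one character, rather than trimming every leading "#", keeps values that genuinely start with "#" intact.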

Hope this helps 🙂
