Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

How to execute different instances of same workflow in parallel


Hello - I have a use case that requires executing an Alteryx workflow in parallel. The workflow is parameterized with a single element; it pulls data from Postgres and Teradata, performs a comparison, and creates an output file. The workflow is highly optimized, but the comparison still takes about 1 hour because the average data volume is 50M. We now want to run the workflow in parallel, say as 5 parallel processes, each with a different parameter, so that we can perform 5 comparisons in 1 hour rather than spending 5 hours. Could you please let me know how to do this in Alteryx?
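One way to fan out the runs is to start several engine processes at once from a script. The sketch below is a minimal Python illustration, assuming the workflow has been saved as an analytic app (`compare.yxwz`, a hypothetical name) and that your license lets you run it through `AlteryxEngineCmd.exe`, which, as far as I know, accepts an app file followed by an app-values XML file. The paths and the `values_<run id>.xml` naming are hypothetical; check the command-line documentation for your Designer version before relying on this.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List, Sequence

# Hypothetical paths: adjust to your Alteryx installation and job folder.
ENGINE_CMD = r"C:\Program Files\Alteryx\bin\AlteryxEngineCmd.exe"
APP_PATH = r"D:\jobs\compare.yxwz"


def build_command(run_id: str) -> List[str]:
    """Assumed invocation: engine, app file, then one app-values XML per run ID."""
    return [ENGINE_CMD, APP_PATH, rf"D:\jobs\values_{run_id}.xml"]


def run_one(cmd: Sequence[str]) -> int:
    """Block until this engine process exits and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def run_parallel(
    run_ids: Iterable[str],
    build: Callable[[str], List[str]] = build_command,
    max_workers: int = 5,
) -> Dict[str, int]:
    """Run up to max_workers processes at the same time; map run ID -> exit code."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {rid: pool.submit(run_one, build(rid)) for rid in run_ids}
        return {rid: fut.result() for rid, fut in futures.items()}
```

Usage would be something like `run_parallel([f"R{i}" for i in range(1, 6)])`. Threads are enough here because each one only waits on an external process. Keep in mind that five engines on one machine share CPU, memory, disk, and the database connections, so five parallel runs will not necessarily finish in the time of one.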

- Labels: Best Practices, Dynamic Processing


Have you tried turning it into a macro with application tools inside? You can then call the macro as many times as you want and change the parameters each time.

Take a look at the Data Cleansing tool, which is itself a macro, to get an idea :)

Hope this helps,

Regards


Hi @Dkundu,

I would not agree with @messi007: when you set up a macro or any tool in a workflow, it will not necessarily run in parallel, and it depends a lot on the content of the macro (the tools inside, etc.). Most of the time, the longest part of the process is extracting data from the servers (in your case Teradata and Postgres) into your environment. In your case, a few possibilities come to mind if the extracted data is the same each time:

- Extract the raw data and store it in a database first, then run your tests against it so they take less time. You can then run as many different tests as you want, since the data is already stored.

- Use the In-Database tools if you are joining Postgres to Postgres and currently doing it locally; they let you build a SQL query without coding. BUT you won't be able to join Teradata and Postgres with those tools; for that, you would have to extract from one system into a temporary table in the other.
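The "extract once, test many times" idea from the first bullet can be sketched outside Alteryx. This is a minimal Python illustration of the pattern, not an Alteryx workflow: an in-memory SQLite table stands in for the local store, the `raw` table and its `key`/`amount` columns are hypothetical, and the rows passed to `cache_extract` stand in for the slow Teradata/Postgres pull.

```python
import sqlite3
from typing import Dict, Iterable, Tuple

Row = Tuple[str, float]  # hypothetical (key, amount) record


def cache_extract(conn: sqlite3.Connection, rows: Iterable[Row]) -> None:
    """Store the raw extract once; this is the expensive step you do not repeat."""
    conn.execute("CREATE TABLE IF NOT EXISTS raw (key TEXT, amount REAL)")
    conn.executemany("INSERT INTO raw VALUES (?, ?)", rows)
    conn.commit()


def run_tests(conn: sqlite3.Connection, tests: Dict[str, str]) -> Dict[str, object]:
    """Run every test query against the cached table; no re-extraction needed."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in tests.items()}
```

Adding another test only means adding another query to the `tests` dictionary; the extraction cost is paid once.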

If your data is different every time, I don't really see another way, since the most time-consuming step will be extracting the data.

Generally, I would advise you to run your workflow with Enable Performance Profiling turned on (Runtime settings) to see which action in your workflow takes the most time. Once you have found it, you may be able to rework it easily!




Thanks for your reply. Each run uses different data, so we make the database call well ahead of time and place the result in a file on the Alteryx server. Our requirement is simply to call the same workflow multiple times, maybe 5, once for each data set or input. Please note that we receive the input in a spreadsheet. Do you think your macro will work for my use case?

I am thinking of saving the job 5 times under different names and linking each copy to a different spreadsheet so that they can run in parallel. Since the data is kept in files, our goal is to use AMP on top of it.
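Rather than maintaining five saved copies of the workflow, one option is to keep a single workflow and give each run its own input file. A minimal Python sketch of the splitting step, assuming the spreadsheet has been exported to CSV and has a column identifying the run (called `run_id` here, a hypothetical name):

```python
import csv
from collections import defaultdict
from pathlib import Path
from typing import Dict, List


def split_by_run(src: Path, out_dir: Path, key: str = "run_id") -> Dict[str, Path]:
    """Write one CSV per distinct value of `key`; return run ID -> file path."""
    groups: Dict[str, List[dict]] = defaultdict(list)
    with src.open(newline="") as f:
        reader = csv.DictReader(f)
        fieldnames = reader.fieldnames  # header row, reused for every output file
        for row in reader:
            groups[row[key]].append(row)

    out_dir.mkdir(parents=True, exist_ok=True)
    paths: Dict[str, Path] = {}
    for run_id, rows in groups.items():
        path = out_dir / f"input_{run_id}.csv"
        with path.open("w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=fieldnames)
            writer.writeheader()
            writer.writerows(rows)
        paths[run_id] = path
    return paths
```

Each run then only differs by which `input_<run id>.csv` it reads, so a fix to the comparison logic has to be made in one place instead of five. How the path is passed in (for example, through an analytic app question) depends on your setup.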


Hi again @Dkundu,

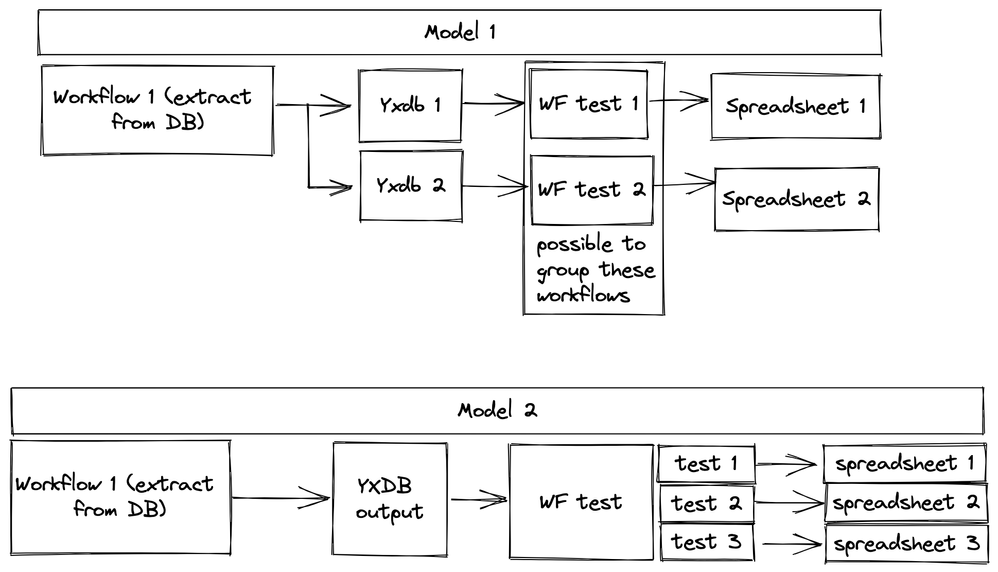

Here is a scheme of how I would do it. The first model is for when you have multiple source files for your tests, meaning multiple workflows to process these tests. The second model is a bit more straightforward: you have only one data source for all the tests, you output it as a .yxdb file to improve performance, and then you run one workflow containing all the tests you need.


My problem is a little different. Let's say I have 10 run IDs, R1, R2, …, R10, and one Alteryx workflow. If I push R1 to the workflow, it takes 2 hours to process. After that I have to push R2 and wait another 2 hours for it to complete, so with 10 run IDs I have to wait 20 hours. All I want is to complete the whole process in 2 hours by running the same workflow 10 times, each with a different run ID, as parallel streams at the same time.
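The arithmetic in the post generalizes as follows: with n runs of t hours each and k runs executing at once, the ideal elapsed time is ⌈n/k⌉ · t. A minimal Python sketch, assuming each run keeps its 2-hour duration when it runs alongside others, which rarely holds exactly when the runs share CPU, memory, disk, or database capacity:

```python
import math


def wall_clock_hours(n_runs: int, hours_per_run: float, parallel_slots: int) -> float:
    """Ideal elapsed time when runs are processed in waves of `parallel_slots`.

    Assumes no slowdown from running several jobs at once.
    """
    waves = math.ceil(n_runs / parallel_slots)
    return waves * hours_per_run
```

For the 10 run IDs, `wall_clock_hours(10, 2, 1)` gives the 20 hours described, `wall_clock_hours(10, 2, 10)` gives the 2-hour target, and 5 parallel slots would give 4 hours.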
