Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.- Community

- :

- Community

- :

- Participate

- :

- Discussions

- :

- Designer Desktop

- :

- Dynamic Input Macro - Handling Different Delimiter...

Dynamic Input Macro - Handling Different Delimiters, Header Rows, File Types, Etc.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hi all,

I need to create a master data input macro that can automatically pull in all zip and csv files: those that are already delimited, those that need to be delimited, and files with header rows where the data doesn’t start until somewhere like row 12 (requiring a dynamic Import Line). Needs to be in a macro as it would involve pulling multiple files at once.

Let’s assume I’m doing all these files separately for now, so I don’t have to stack up these five different files into one output – I just need a macro that I could drop in to take care of each different file.

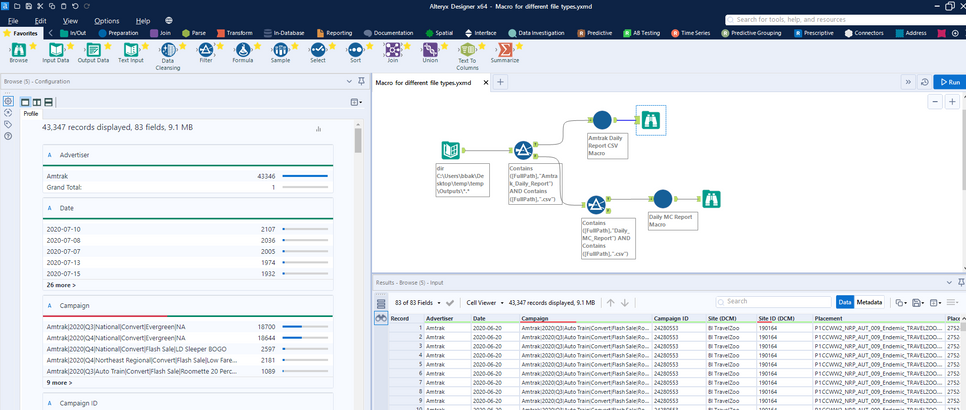

I have five different containers listed in the attached workflow, and they’re all plugged into the same macro with 5 different outputs. Output A was built for the example in Container A, B for B, and so forth. I’m also attaching a zip folder of the files I’m using so if you want to export into your own folder and test, please change the C:\temp\ path in the text inputs to wherever you have the data saved.

When I test some containers on some differently-lettered outputs, some work, some kind of work (the data is there, but still in non-delimited form, or all the data is there but the non-header rows are still in the file) and some don’t work at all. I need a master output from the macro that would be able to take on the different file types and cleanly export as a delimited and properly header-rowed file. None of the 5 Outputs work for all files well enough that I could currently finagle the final pieces on my own.

I’m listing the specific examples of the types of file I want to import and what the constraints are for all of them.

Container A – File is standard csv; Output A is able to take in standardized files.

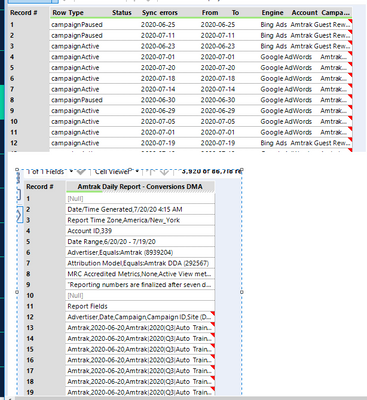

Column B – Zip file with underlying csv. Syntax is already organized into the code you need to feed into the macro (both zip and csv text): C:\temp\Outputs\Report.zip|||Report.csv. This file automatically delimits the columns without me really having to do anything to the underlying Input tool.

Container C- Zip file with an underlying csv BUT this one necessitates that I write in a tab delimiter to the underlying Input tool as \t (Output C). I have no idea why one csv file in a zip would need to be delimited and the one in Container B does not, but I do need a code that’ll be able to treat them both. Might be safer to input it non-delimited and then use a delimiter tool in the next step for files like Container C that still require it?

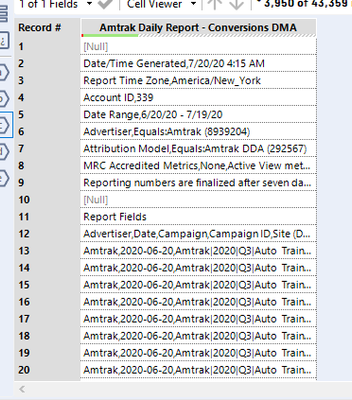

Container D – I previously had a macro where I’d overwrite the XML code for the Import Line portion, saying that if the file name contains DMA Conversions then Import Line 12 because that’s whether the header is, BUT in this example, I want the macro to be able to detect that itself. Let’s assume I have other files not included that start on row 6 or something so I’d need a code that will realize the header row is not line 1 and will find it automatically, instead of me manually writing the Line Imports for certain files into the code.

Might be safest for the input portion to just import the data as below, and then I could write some classic Alteryx code to delimit it in the next tool:

I mean, if I had an input tool that would import regular csv and zip files as is that could also detect if the file needs to be delimited like in Container C and import files where files that begin with header rows on line 12 like above, I could probably automate the rest on my own. But none of my current output options pull in all the files like that.

Container E is like Container D except it’s a direct csv file instead of within a zip but right now, Output D doesn’t work for container E, and vice versa.

Ultimately, it’s a conceptually easy outcome that I’m seeking: for all files - regardless of file type and files that have awkward header rows - to be cleanly parsed into tables. But the input tool isn’t variable enough standalone that it can account for all these different file types. And while a macro can help to overrule some variability, mine still aren’t sophisticated enough to ingest and output all these files.

I’m providing the workflow export with the underlying macros, and the folder that contains all the files I want to dynamically input (please change the file path in the text inputs to whatever folder you load those files into so you can play around with the different file types firsthand).

Please let me know if anything is unclear. Looking forward to hearing from some brilliant minds,

Kieran

Solved! Go to Solution.

- Labels:

-

Batch Macro

-

Dynamic Processing

-

Input

-

Parse

-

Reporting

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Do you know what files need to be parsed in what way based on either the folders that they live in or their file names? You could potentially use a directory tool and some filters to pass different files to different macros.

As for the dynamic import on certain lines, you can actually take a unique approach if you know what word you are looking for in the header row. Take a look at this post I helped out with a while back: https://community.alteryx.com/t5/Alteryx-Designer/Dynamic-quot-Start-data-Import-on-Line-quot/td-p/4...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

I do think that if you can route files accordingly based on their file name and/or extension that would be ideal rather than the open them up and then do something with the data approach.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hey Brandon,

Thanks for getting back to me. The yxzp file won't open for me but per the screenshot, I'm not looking to send some files one way and some the other way, rather I'd like a macro that can tackle them all because there are plenty of other files not included in this example that I would want to send through as well. After I posted yesterday, I actually got a little closer by playing around in the Options section of the Input Data tool and set it up as the screenshot below: Delimiter as \t and Ignore Delimiters in None. This works on the manual input for all five files, but doesn't work on the zip file with header rows as a macro (Container D). Also in container C (the zip file without header rows), the manual input will delimit it, but sending the file path through the macro will not delimit it, which seems like inconsistent logic.

I am attaching the new macro, and in each container I have both the file path going through the macro along with the Input Data tool for the manual file, so you can see how the two differed against each other in C and D as I mentioned.

But the main issue right now is why it won't work for the file in Container D because it says too many fields in most records. Container E has the same file with header rows, but just as a csv without a zip, so it seems weird that the macro will output container E correctly but not container D.

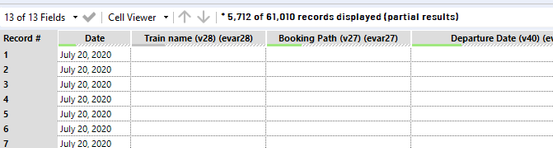

If I can get the macro to pull the files as you see below

then I'll be able to figure out the rest of the parsing on my own :). So no worries on that part. But I need a way to send all five file paths through the macro and get everything outputted, regardless of how sloppy it comes out. Updated macro along with the manual Input Data for each file attached.

Thanks again.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

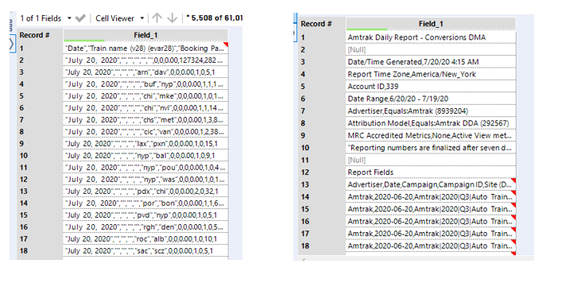

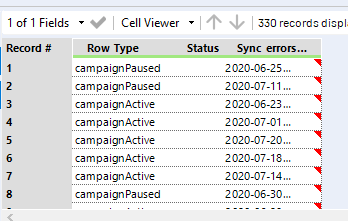

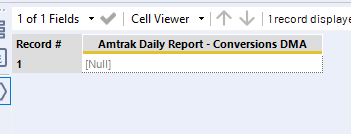

Update: I played around in the Input Data tool some more and the best way to manually do it appears to be to delimit by \0 (i.e. to not delimit at all) and to deselect First Row Contains Field Names. From here on, for all files, I’d be able to parse and cleanse into a neat table on my own, so that’s not a concern, even with header rows. If I select those steps manually in an Input Data tool, they will come out as follows below where I’ll finagle on my own henceforth.

But I’m still looking for a macro to take all file types (csv vs zip, and files with header rows) to be able to output all files as above.

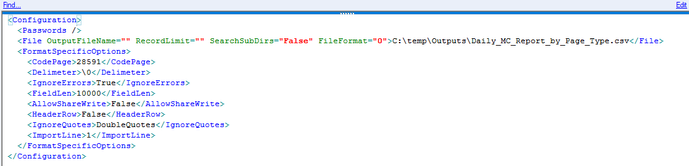

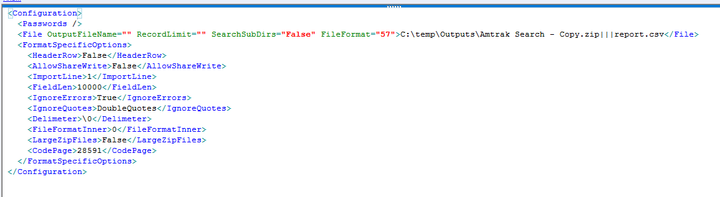

I broke into the XML code in the Input Data tool of uploading a manual zip vs csv to see if there were any differences I’d need to account for in the macro, as sometimes sending a zip file through a macro hardcoded for some features of a csv will only partially work, if at all. Please take a look at the corresponding XML code below for the different file types in that tool:

CSV

ZIP

Despite the difference in order of lines (which I’m assuming for now doesn’t matter), the only difference in the two screenshots above appears to be in the <File> line, with both the FileFormat # and the actual file path. The file path is accounted for in my Text Input tool in the master workflow (to feed through the Action tool in macro where I select the action type Update Input Data Tool) and accounts for the full path of csv vs. zip, whereas the FileFormat portion is where I can conditionally update the XML code to account for csv vs. zip as the file type input.

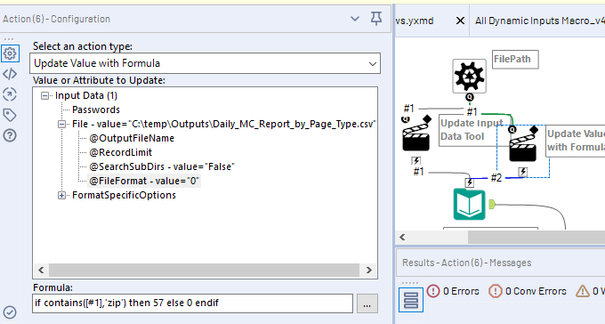

So theoretically, since I’m already inputting each file path into the macro, the only other additional code I’d have to write is to update the FileFormat code based on whether it’s a csv (0) or a zip (57), right? In my previous experience with macros, updating the XML overrules what we hardcode into the inner tool in the macro, so I’ve created an additional Action tool with the formula below.

But it still didn’t yield the results I wanted in the master flow. I’ve also wrapped that formula in a tostring() in case the 0 and 57 yield a number and mess everything up, but that didn’t work either.

Currently the macro works for csvs in both container A and E, but yields problems for all the zip files. Made a quick list below of the problems and screenshots, and some of the logic I hardcoded in (deselecting First Row Contains Field Names) for some reason is still not coming through in the macro:

Container | File Type | Result/Problem w/ Macro |

B | Zip File | Come sout delimited (when I selected it not to via \0) with Column Names as Header Rows |

C | Zip File | Comes out correctly parsed (non-delimited) but Field is nondelimited column names |

D | Zip File with Header Rows | Only comes out with first record, with the rest of the header rows and subsequent data table not coming through). Also, Field name is first row from file, despite me deselecting that in macro). |

Container B file through macro:

Container C file through macro:

Container D file through macro:

So to recap, the stuff that’s hardcoded into the Input Data tool in the macro is still in the XML code, which you see below:

<Delimeter>\0</Delimeter>

<HeaderRow>False</HeaderRow>

This will be true for all containers if I input those files manually, but the variable aspects of input are FileFormat and file path, which I have Action tools to update the XML:

<File OutputFileName="" RecordLimit="" SearchSubDirs="False" FileFormat="0">C:\temp\Outputs\Daily_MC_Report_by_Page_Type.csv</File>

So even though the file paths in containers B, C and D don’t work through the macro, I feel like the variable portions (i.e. Action tools) of the macro should update the XML code to mirror exactly what’s in the XML code when I manually input these files in their respective containers, and yet still, aspects of both the constant portions and variable portions are not working consistently.

Any thoughts are appreciated. New workflow attached with each container having both a Text Input with the file path and the corresponding file being manually input via Input Data tool in the same container. Folder attached as well, if you can reset the file path to whatever folder you store it in. Thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

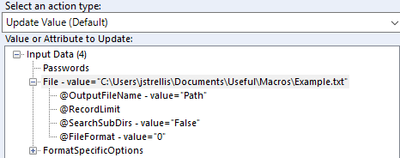

OK, I’m going to hat tip @JoeS whose InputBatch Macro post here helped me solve the final mystery https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Input-Batch-Macro-AKA-the-Extra-Dynami.... Looks like the Input Batch Submacro in this workflow had similar logic regarding dynamically changing FileFormat # based on file type, but what was different is that this workflow took the FileName in the master workflow and updated as below via the action Update Value (Default):

Whereas in my previous post, I had selected as an action type Update Input Data Tool. I couldn’t have initially told you what the exact difference is between Update Input Data Tool and Update Value (Default) action types but it looks like one way works, and the other doesn’t, so here we are with the result of one output regardless of it’s a csv or zip. It appears if I select Update Input Data Tool, the file type that I feed into the inner macro is grandfathered regardless of the file name that you put in the master workflow (i.e. if it’s a csv in the inner macro, only a csv file path would work going through the master workflow), whereas the Update Value (Default) action type will regard and overrule file type based on what’s in the master workflow. The matter is closed; solution attached (folders are available for download in previous post, at which point you can change path in Text Inputs). My specific input will always be \0 as Delimiter and deselect First Row Contains Column Names so that the remainder of my ‘flow (which I’m leaving out of the solution) will parse that all out. Thanks to those who commented.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

I am glad my macro proved useful 🙂

Some nice work you have done there.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Thank you, @JoeS - Game recognizes game.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Sorry I should have explained the difference.

When you are using the Update Input Data Tool it will use the default settings for that file type. So it's not going to use the delimiter type \0 and header as false, but the default of , and true.

Update Value (default) is only updating the specific part of the configuration (File path) and leaving the rest of the XML the same, thus leaving you with a working Input tool 🙂

-

Academy

6 -

ADAPT

2 -

Adobe

204 -

Advent of Code

3 -

Alias Manager

78 -

Alteryx Copilot

26 -

Alteryx Designer

7 -

Alteryx Editions

95 -

Alteryx Practice

20 -

Amazon S3

149 -

AMP Engine

252 -

Announcement

1 -

API

1,209 -

App Builder

116 -

Apps

1,360 -

Assets | Wealth Management

1 -

Basic Creator

15 -

Batch Macro

1,559 -

Behavior Analysis

246 -

Best Practices

2,695 -

Bug

719 -

Bugs & Issues

1 -

Calgary

67 -

CASS

53 -

Chained App

268 -

Common Use Cases

3,825 -

Community

26 -

Computer Vision

86 -

Connectors

1,426 -

Conversation Starter

3 -

COVID-19

1 -

Custom Formula Function

1 -

Custom Tools

1,939 -

Data

1 -

Data Challenge

10 -

Data Investigation

3,488 -

Data Science

3 -

Database Connection

2,221 -

Datasets

5,223 -

Date Time

3,229 -

Demographic Analysis

186 -

Designer Cloud

742 -

Developer

4,373 -

Developer Tools

3,531 -

Documentation

528 -

Download

1,037 -

Dynamic Processing

2,940 -

Email

928 -

Engine

145 -

Enterprise (Edition)

1 -

Error Message

2,260 -

Events

198 -

Expression

1,868 -

Financial Services

1 -

Full Creator

2 -

Fun

2 -

Fuzzy Match

713 -

Gallery

666 -

GenAI Tools

3 -

General

2 -

Google Analytics

155 -

Help

4,711 -

In Database

966 -

Input

4,295 -

Installation

361 -

Interface Tools

1,901 -

Iterative Macro

1,095 -

Join

1,959 -

Licensing

252 -

Location Optimizer

60 -

Machine Learning

260 -

Macros

2,864 -

Marketo

12 -

Marketplace

23 -

MongoDB

82 -

Off-Topic

5 -

Optimization

751 -

Output

5,258 -

Parse

2,328 -

Power BI

228 -

Predictive Analysis

937 -

Preparation

5,171 -

Prescriptive Analytics

206 -

Professional (Edition)

4 -

Publish

257 -

Python

855 -

Qlik

39 -

Question

1 -

Questions

2 -

R Tool

476 -

Regex

2,339 -

Reporting

2,434 -

Resource

1 -

Run Command

575 -

Salesforce

277 -

Scheduler

411 -

Search Feedback

3 -

Server

631 -

Settings

936 -

Setup & Configuration

3 -

Sharepoint

628 -

Spatial Analysis

599 -

Starter (Edition)

1 -

Tableau

512 -

Tax & Audit

1 -

Text Mining

468 -

Thursday Thought

4 -

Time Series

432 -

Tips and Tricks

4,187 -

Topic of Interest

1,126 -

Transformation

3,731 -

Twitter

23 -

Udacity

84 -

Updates

1 -

Viewer

3 -

Workflow

9,982

- « Previous

- Next »