Alteryx Designer Desktop Discussions


Delete rows from a database using Alteryx (looking for a fast method)


Hello,

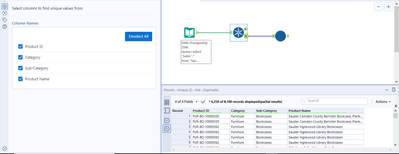

I am working on a workflow that finds duplicates in a database, and now I am trying to delete those duplicates directly from Alteryx.

I know there is a Pre-SQL Statement where I can write a query. So I created my query, then added a Control Parameter to build a macro.

I connected my workflow to the macro to delete the rows. It works, but it is very, very slow.

To give you an idea, I have 500 rows to delete, and it's still running after 1 hour (34% complete).

So I would like to know if there is a faster method, because this one is not usable in practice.

And that is with only 500 rows; I can't even imagine how long it would take with millions of rows.

Thanks for your help.

---

I'm not quite following what you are doing, but it sounds a bit off. Can you sketch out more of your workflow? Are you deleting the rows in a SQL database? In what format? I don't see what the macro is for.

---

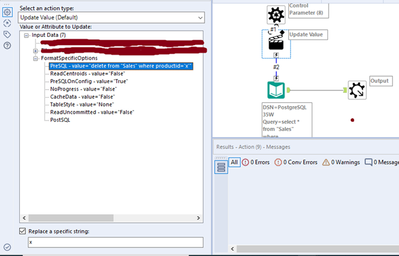

Yes, I want to delete specific rows in a SQL database, using a WHERE condition on an ID to choose the rows to delete.

That's why I am using a macro: a Control Parameter replaces the ID in the query.

I am using exactly the method described in this post:
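For comparison, here is a minimal sketch of the pattern such a macro effectively follows, written in Python with the standard-library `sqlite3` module as a stand-in for PostgreSQL. It is not the linked post's workflow; the `customer` table and its `id` column are hypothetical. What matters is the shape of the work: one DELETE statement, and one commit, per ID. A batch macro also re-runs its tools for every control-parameter row, so any fixed per-iteration overhead is paid 500 times, which is a plausible explanation for the slowness.

```python
import sqlite3


def delete_one_by_one(conn: sqlite3.Connection, ids: list[int]) -> int:
    """Mimic a batch macro: one DELETE statement (and one commit) per ID."""
    statements = 0
    for row_id in ids:
        # Each macro iteration substitutes a single ID into the query.
        conn.execute("DELETE FROM customer WHERE id = ?", (row_id,))
        conn.commit()
        statements += 1
    return statements


# Hypothetical stand-in data: an in-memory SQLite table with 1000 rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO customer VALUES (?, ?)",
                 [(i, f"name{i}") for i in range(1, 1001)])
conn.commit()

n = delete_one_by_one(conn, list(range(1, 501)))
remaining = conn.execute("SELECT COUNT(*) FROM customer").fetchone()[0]
print(n, remaining)  # 500 500
```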

---

OK. Please post screenshots of your Action tool configuration, with samples of the values you are feeding in.

Can you clarify what kind of SQL DB you are using?

---

I am using a PostgreSQL database. I have taken some screenshots of my workflows.

As I said, it works, but execution is very, very slow.

---

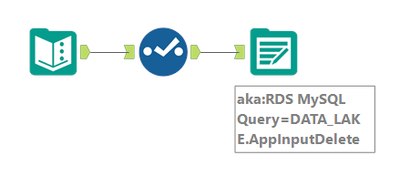

My recommendation for this type of processing is to create a stream of the to-be-deleted IDs and insert them into a database table, then use that table to drive a DELETE with a JOIN in the Post-SQL statement. The delete then runs as a single set-based (bulk) statement instead of one statement per ID, which performs much more efficiently. Here is an example of a MySQL delete statement:

```sql
DELETE c
FROM Customer c
JOIN CustomerDeleteTemp ct
  ON c.customerID = ct.customerID;
```

This video might help.
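A minimal, runnable sketch of the staging-table approach, using Python's standard-library `sqlite3` module as a stand-in for the real database. SQLite does not accept MySQL's `DELETE ... JOIN` syntax, so the sketch uses the equivalent `IN (SELECT ...)` form. On PostgreSQL, the join-style version would be `DELETE FROM Customer c USING CustomerDeleteTemp ct WHERE c.customerID = ct.customerID;`. The table contents are illustrative only.

```python
import sqlite3


def bulk_delete(conn: sqlite3.Connection, ids: list[int]) -> int:
    """Stage the IDs in a table, then delete with one set-based statement."""
    # Step 1 (the Output Data tool's job): write the to-be-deleted IDs.
    conn.execute("DROP TABLE IF EXISTS CustomerDeleteTemp")
    conn.execute("CREATE TABLE CustomerDeleteTemp (customerID INTEGER PRIMARY KEY)")
    conn.executemany("INSERT INTO CustomerDeleteTemp VALUES (?)",
                     [(i,) for i in ids])
    # Step 2 (the Post-SQL): a single DELETE driven by the staging table.
    cur = conn.execute(
        "DELETE FROM Customer "
        "WHERE customerID IN (SELECT customerID FROM CustomerDeleteTemp)"
    )
    conn.commit()
    return cur.rowcount


# Illustrative data: 1000 customers, of which the first 500 are to be deleted.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Customer (customerID INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO Customer VALUES (?, ?)",
                 [(i, f"name{i}") for i in range(1, 1001)])
conn.commit()

deleted = bulk_delete(conn, list(range(1, 501)))
remaining = conn.execute("SELECT COUNT(*) FROM Customer").fetchone()[0]
print(deleted, remaining)  # 500 500
```

The database receives one INSERT batch and one DELETE, however many IDs there are, instead of one round of work per ID.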

---

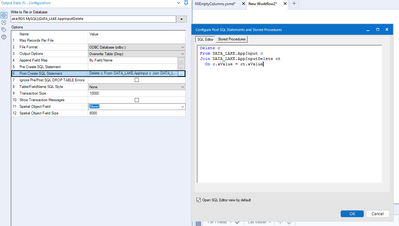

This is the simple workflow: Text Data holds the to-be-deleted IDs, and Output Data holds the Post-SQL.

---

I have a table of 2300 jobs, and I would like to delete 461 of them. They are identified by a filter (isreplacejobs = 1).

The list of jobs to delete goes to the input of the Control Container "tempDelete" to start the process, and also to a Select tool inside that container, which reduces the fields to just jobid. The result is written to a table called "tempdelete", with one field, jobid (from the Select).

Once that table is created, the Control Container is satisfied, and its output starts the next container, "delete jobs". There, the list of jobs is sent to a Select Records tool configured to select record 0, so no records pass through. The Count Records tool then counts zero records and outputs that in a field called Count.

The "jobs then tempdelete" Output Data tool (ODBC) creates a new table called "tempdelete", overwriting the previous tempdelete that held the job IDs. But before that overwrite, its Pre-SQL deletes the records from the jobs table whose IDs match tempdelete.jobid. tl;dr: delete the jobs by ID, then erase your tracks.

Note: the temporary table is only used within this workflow. You might want to rename tempdelete to something more specific, such as tempdeletejobs, to avoid conflicts with other tables in the database.
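The sequence above can be sketched outside Alteryx in Python with the standard-library `sqlite3` module standing in for the ODBC database. This is a hypothetical illustration of the order of operations, not the poster's workflow: the two phases of the function mirror the two containers, and the table is rebuilt to mimic the Output tool's overwrite. The data is made up to match the counts in the post (2,300 jobs, 461 flagged).

```python
import sqlite3


def delete_flagged_jobs(conn: sqlite3.Connection, flagged_ids: list[int]) -> int:
    """Two-phase delete mimicking the two Control Containers (hypothetical sketch)."""
    # Container "tempDelete": write the flagged job IDs to the staging table.
    conn.execute("DROP TABLE IF EXISTS tempdelete")
    conn.execute("CREATE TABLE tempdelete (jobid INTEGER)")
    conn.executemany("INSERT INTO tempdelete VALUES (?)",
                     [(j,) for j in flagged_ids])
    conn.commit()

    # Container "delete jobs", Pre-SQL: one set-based DELETE against jobs.
    cur = conn.execute(
        "DELETE FROM jobs WHERE jobid IN (SELECT jobid FROM tempdelete)")
    deleted = cur.rowcount

    # The output itself: overwrite tempdelete with the single Count row (0),
    # so the job IDs no longer remain in the database ("erase your tracks").
    conn.execute("DROP TABLE tempdelete")
    conn.execute('CREATE TABLE tempdelete ("Count" INTEGER)')
    conn.execute("INSERT INTO tempdelete VALUES (0)")
    conn.commit()
    return deleted


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE jobs (jobid INTEGER PRIMARY KEY, isreplacejobs INTEGER)")
# Illustrative data: 2300 jobs, of which the first 461 are flagged.
rows = [(j, 1 if j <= 461 else 0) for j in range(1, 2301)]
conn.executemany("INSERT INTO jobs VALUES (?, ?)", rows)
conn.commit()

# The Filter tool's job: keep only jobs with isreplacejobs = 1.
flagged = [jobid for jobid, flag in rows if flag == 1]
deleted = delete_flagged_jobs(conn, flagged)
remaining = conn.execute("SELECT COUNT(*) FROM jobs").fetchone()[0]
print(deleted, remaining)  # 461 1839
```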

---

Using the Alteryx Dynamic Input tool to delete rows:

Dynamic Input SQL:

```sql
SELECT 1;
DELETE FROM table WHERE id IN (1,2,3)
```

Then configure the Dynamic Input tool to replace the list of IDs in that query with your own. No Pre-SQL or Post-SQL is needed.

If needed, you can then add a Control Container to append any additional rows, set up so that it doesn't activate until the previous Dynamic Input has finished.
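The quoted query hard-codes the IDs `(1,2,3)`; in Alteryx the Dynamic Input would substitute the real list. As a rough sketch of the same idea outside Alteryx, assuming Python's standard-library `sqlite3` as the database, the function below builds the IN list from a list of IDs and splits long lists into chunks, since databases limit statement size or bound parameters per statement (SQLite caps host parameters per statement). The table `items` and the chunk size of 500 are hypothetical choices.

```python
import sqlite3


def delete_in_chunks(conn: sqlite3.Connection, ids: list[int],
                     chunk_size: int = 500) -> tuple[int, int]:
    """Delete rows with one DELETE ... WHERE id IN (...) per chunk of IDs.

    Returns (rows_deleted, statements_issued).
    """
    deleted = statements = 0
    for start in range(0, len(ids), chunk_size):
        chunk = ids[start:start + chunk_size]
        # Build "?,?,...,?" so the IDs are bound as parameters, not pasted in.
        placeholders = ",".join("?" * len(chunk))
        sql = f"DELETE FROM items WHERE id IN ({placeholders})"
        deleted += conn.execute(sql, chunk).rowcount
        statements += 1
    conn.commit()
    return deleted, statements


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY)")
conn.executemany("INSERT INTO items VALUES (?)", [(i,) for i in range(1, 1001)])
conn.commit()

deleted, statements = delete_in_chunks(conn, list(range(1, 751)))
print(deleted, statements)  # 750 2
```

With a chunk size of 500, deleting 750 IDs costs two statements rather than 750.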

C

---

Thank you! This works great if you know the ID numbers you want, but I did not: the rows to delete were identified by a certain field having the value 1.
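If the flag that identifies the rows is stored as a column of the database table itself (an assumption; in the workflow above it may instead be computed in Alteryx), the DELETE can filter on the flag directly, so the IDs never need to be known. In the Dynamic Input approach, that would presumably mean replacing `WHERE id IN (1,2,3)` with `WHERE isreplacejobs = 1`. A minimal sketch with Python's `sqlite3` as the stand-in database and made-up data:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Assumption: the flag is stored as a column of the jobs table itself.
conn.execute("CREATE TABLE jobs (jobid INTEGER PRIMARY KEY, isreplacejobs INTEGER)")
conn.executemany("INSERT INTO jobs VALUES (?, ?)",
                 [(j, 1 if j <= 461 else 0) for j in range(1, 2301)])
conn.commit()

# No ID list needed: the WHERE clause selects the rows by the flag directly.
cur = conn.execute("DELETE FROM jobs WHERE isreplacejobs = 1")
deleted = cur.rowcount
conn.commit()
remaining = conn.execute("SELECT COUNT(*) FROM jobs").fetchone()[0]
print(deleted, remaining)  # 461 1839
```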
