Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Complicated Excel shaping across multiple files


Hello,

I have a few hundred Excel files, all with about 30 sheets. On the same sheet in every file, in the same place on every sheet, there are some numbers I need to aggregate. I've taken that sheet out and put in some dummy data, and attached it below.

The data I need is the retailer name from column A (not any of the product details), plus, for columns DG to FF, row 3 (the date) and rows 1214 to 1381 (the data).
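For a sense of scale, the column letters can be converted to column numbers. The short Python sketch below is only a side calculation (nothing in it comes from Alteryx, and `column_index` is a hypothetical helper name). It shows that DG:FF spans 52 columns, one per date, and that rows 1214 to 1381 are 168 rows.

```python
def column_index(letters):
    """Convert an Excel column label such as 'DG' to its 1-based column number."""
    number = 0
    for ch in letters.upper():
        number = number * 26 + (ord(ch) - ord("A") + 1)
    return number

first_col = column_index("DG")               # 111
last_col = column_index("FF")                # 162
n_date_columns = last_col - first_col + 1    # 52 columns, one per date
n_data_rows = 1381 - 1214 + 1                # 168 rows of data

print(first_col, last_col, n_date_columns, n_data_rows)  # 111 162 52 168
```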

The output I need is as follows:

| FILENAME | RETAILER | DATE | VALUE |
|---|---|---|---|
| File 1 | Retailer 1 | 15/06/20 | 3000 |
| File 2 | Retailer 1 | 22/06/20 | 2000 |
| File 3 | Retailer 1 | 29/06/20 | 3000 |
| File 1 | Retailer 2 | 15/06/20 | 2000 |
| File 2 | Retailer 2 | 22/06/20 | 20002 |
| File 3 | Retailer 2 | 29/06/20 | 200 |

I've run into so many problems I don't even know where to begin. I can't work out how to carry the Retailer name down, I can't work out how to transpose the date row into a column, I can't work out how to aggregate the numbers, and I can't work out how to get Alteryx to plug through 200 files in one workflow, doing the same thing to each of them and aggregating all the results into a single Excel output.

Any help you could provide on this, my fourth very late night in the office, would be appreciated!!

Thank you

Sam

---

Hi @pupmup,

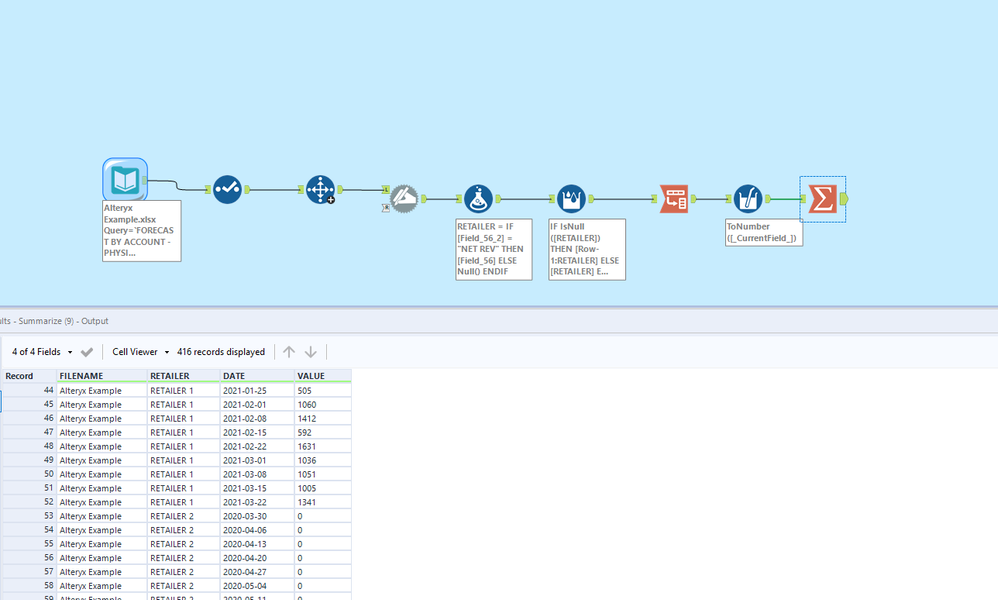

Hopefully this helps you get home earlier tonight. This should work with your real data, and with multiple files brought in at once through the Input Data tool. I started reading the data at the first row instead of row 3, because the repeating dates would otherwise need changing downstream to get them right; a Select tool and a Select Records tool make it easier to keep the data clean from there. The remaining steps are:

1. To identify the retailer, I used the second field, because the retailer name is associated with "NET REV".
2. A Multi-Row Formula tool fills the retailer name down.
3. A Transpose tool, data cleansing, and a change of field type (via a Multi-Field Formula tool) reshape the values.
4. A Summarize tool puts your data in the right format, and the field names are updated.
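To show what the fill-down, transpose and summarize steps do to the rows, here is a minimal Python sketch. It is not T_Willins's workflow: it assumes the sheet has already been read into a list of rows shaped `[column A, second field, value per date...]`, that a retailer's own row is the one whose second field is "NET REV", and that values are summed per file, retailer and date. The function names and sample rows are hypothetical.

```python
from collections import defaultdict

def fill_down_retailer(rows, marker="NET REV"):
    """Like a Multi-Row Formula: carry the current retailer name down to the rows below it.

    Assumes (hypothetically) that the row whose second field equals `marker`
    holds the retailer name in column A.
    """
    current = None
    for row in rows:
        if row[1] == marker:
            current = row[0]
        yield current, row[2:]

def transpose_and_sum(filename, rows, dates):
    """Like Transpose + Summarize: one record per (file, retailer, date), values summed."""
    totals = defaultdict(float)
    for retailer, values in fill_down_retailer(rows):
        if retailer is None:
            continue  # rows above the first retailer have no retailer to attach to
        for date, value in zip(dates, values):
            if value is None or value == "":
                continue  # skip empty cells, as a data-cleansing step would
            totals[(filename, retailer, date)] += float(value)
    return [(f, r, d, v) for (f, r, d), v in totals.items()]

# Hypothetical sample: dates from the header row, then retailer and product rows.
dates = ["15/06/20", "22/06/20"]
rows = [
    ["Retailer 1", "NET REV", None, None],
    ["Product A", "", 1000, 500],
    ["Product B", "", 2000, 1500],
    ["Retailer 2", "NET REV", None, None],
    ["Product A", "", None, 200],
]
result = transpose_and_sum("File 1", rows, dates)
# [('File 1', 'Retailer 1', '15/06/20', 3000.0),
#  ('File 1', 'Retailer 1', '22/06/20', 2000.0),
#  ('File 1', 'Retailer 2', '22/06/20', 200.0)]
```

Whether to sum the product rows or keep a single total row per retailer depends on the real workbook, which the thread does not show.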

---

I think I managed to put something together that you might be able to work with.

The Directory tool loads the list of files from the folder. (I only have one file, so perhaps try it with one first to check the results are what you want.)
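As a rough stand-in for the Directory tool, the Python below lists the workbooks in a folder. The function name and the default `*.xlsx` pattern are assumptions for illustration only.

```python
from pathlib import Path

def list_workbooks(folder, pattern="*.xlsx"):
    """Return the full paths of the workbooks in `folder`, sorted by name.

    Excel's temporary lock files (names starting with '~$') are skipped,
    since they also match '*.xlsx' while a workbook is open.
    """
    return sorted(
        str(path)
        for path in Path(folder).glob(pattern)
        if path.is_file() and not path.name.startswith("~$")
    )

# Hypothetical usage:
# for path in list_workbooks(r"\\network\directory"):
#     print(path)
```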

Three Formula tools feed three Dynamic Input tools, which load three cell ranges separately: A1214:C1389 for finding the retailer, DG3:FF3 for the dates, and DG1214:FF1381 for the data.
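Loading a fixed cell range amounts to slicing rows and columns by position. The sketch below assumes the sheet is already in memory as a list of rows (reading the workbook itself is out of scope); `parse_range` and `read_range` are hypothetical helpers, not Alteryx functions. It also tolerates a stray space such as `A1214: C1389`.

```python
import re

def parse_range(a1_range):
    """Turn an A1-style range such as 'DG1214:FF1381' into zero-based
    (first_row, last_row, first_col, last_col) indices."""
    corners = []
    for cell in a1_range.replace(" ", "").split(":"):
        match = re.fullmatch(r"([A-Za-z]+)(\d+)", cell)
        if match is None:
            raise ValueError(f"not an A1-style cell reference: {cell!r}")
        col = 0
        for ch in match.group(1).upper():
            col = col * 26 + (ord(ch) - ord("A") + 1)
        corners.append((int(match.group(2)) - 1, col - 1))
    if len(corners) != 2:
        raise ValueError(f"expected a range like 'A1:C10', got {a1_range!r}")
    (r1, c1), (r2, c2) = corners
    return r1, r2, c1, c2

def read_range(grid, a1_range):
    """Return only the cells inside `a1_range` from a sheet held as a list of rows."""
    r1, r2, c1, c2 = parse_range(a1_range)
    return [row[c1:c2 + 1] for row in grid[r1:r2 + 1]]

# Hypothetical usage on an in-memory sheet `grid`:
# labels = read_range(grid, "A1214:C1389")
# date_row = read_range(grid, "DG3:FF3")[0]   # a single row
# data = read_range(grid, "DG1214:FF1381")
```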

I then had to do some jiggery-pokery to bring it all together.

There are a lot of nulls in the data, which I filtered out.
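The post doesn't say exactly how the ranges were joined back together, so the following is only one possible approach, sketched in Python: line the ranges up by position, pairing each data row with the label in the same row of the label range and each data column with the date above it, and drop the empty cells. The function name and inputs are hypothetical.

```python
def combine_ranges(filename, label_block, date_row, data_block):
    """Stitch separately loaded ranges back together by position.

    Row i of `label_block` is assumed to line up with row i of `data_block`,
    and column j of `data_block` with `date_row[j]`. Empty (None) cells are dropped.
    """
    records = []
    for label_row, values in zip(label_block, data_block):
        label = label_row[0]  # column A of the label range
        for date, value in zip(date_row, values):
            if value is None:
                continue  # filter out the many null cells
            records.append((filename, label, date, value))
    return records

# Hypothetical two-row, two-date example:
records = combine_ranges(
    "File 1",
    [["Retailer 1", "x"], ["Retailer 2", "y"]],
    ["15/06/20", "22/06/20"],
    [[3000, None], [None, 200]],
)
# [('File 1', 'Retailer 1', '15/06/20', 3000), ('File 1', 'Retailer 2', '22/06/20', 200)]
```

Because `zip` stops at the shorter input, the extra rows in A1214:C1389 (176 rows, against 168 in DG1214:FF1381) are simply ignored in this sketch. The label is whatever sits in column A, so a fill-down step would still be needed to turn product rows into retailer names.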

---

Thank you so much for your help @T_Willins !! I can't express how grateful I am. I've poked through all the various steps to make sure I understand them, and it's a joy indeed to see it working on one file at a time! I only have one question, and that's how to get the process to run through each Excel in the directory.

I have tried replacing the file name in the input file path with a *, so it reads `\\network\directory\*.xlsx`. While this appears to read every file in the directory at the input stage, it only outputs about 1.5 files' worth of data at the other end.

I think this reads all the data into one big table, but the Select Records tool then still keeps only the top few hundred rows and discards the data from all the other files.

Is there a tool that allows me to say "run this entire workflow for a file in the directory, then move to the next file and repeat, until all files are gone", instead of the "read all files at once and then run the workflow once on the aggregate"?

(I will obviously look by myself as well!)

Thank you again
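The difference between the two behaviours can be shown in a few lines of Python. In Alteryx, the "run once per file, then combine" pattern is normally built as a batch macro that receives one file path per iteration; the code below is only a hypothetical illustration of why the order of operations matters, with `select_first` standing in for the Select Records tool.

```python
def select_first(records, n):
    """Stand-in for a Select Records step that keeps only the first n records."""
    return records[:n]

def all_at_once(files, n):
    """What the wildcard input does: union every file first, then run the steps once."""
    combined = [rec for recs in files.values() for rec in recs]
    return select_first(combined, n)

def one_file_at_a_time(files, n):
    """What a per-file loop (e.g. a batch macro) does: run the steps per file, then union."""
    output = []
    for recs in files.values():
        output.extend(select_first(recs, n))
    return output

# Hypothetical input: each file's records, in reading order.
files = {
    "File 1.xlsx": ["f1-r1", "f1-r2", "f1-r3"],
    "File 2.xlsx": ["f2-r1", "f2-r2", "f2-r3"],
}
print(all_at_once(files, 2))         # ['f1-r1', 'f1-r2']
print(one_file_at_a_time(files, 2))  # ['f1-r1', 'f1-r2', 'f2-r1', 'f2-r2']
```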

---

Thank you so much for this, I can't express how grateful I am to have had the assistance!

I only have one question left, and that's how to get the workflow to run on multiple files in sequence. I have tried replacing the file name with an asterisk, but I think that just combines all the data in the directory into one lump, then discards 99% of it with the Select Records tool later on.

Any suggestions you can provide (while I do my own research!) would be lovely.

Thank you so much again!!

Sam

---

Thank you very much for your help @DavidP ! I really appreciate you spending the time helping me out 🙂
