Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.- Community

- :

- Community

- :

- Participate

- :

- Discussions

- :

- Designer Desktop

- :

- Comparing hashes

Comparing hashes

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

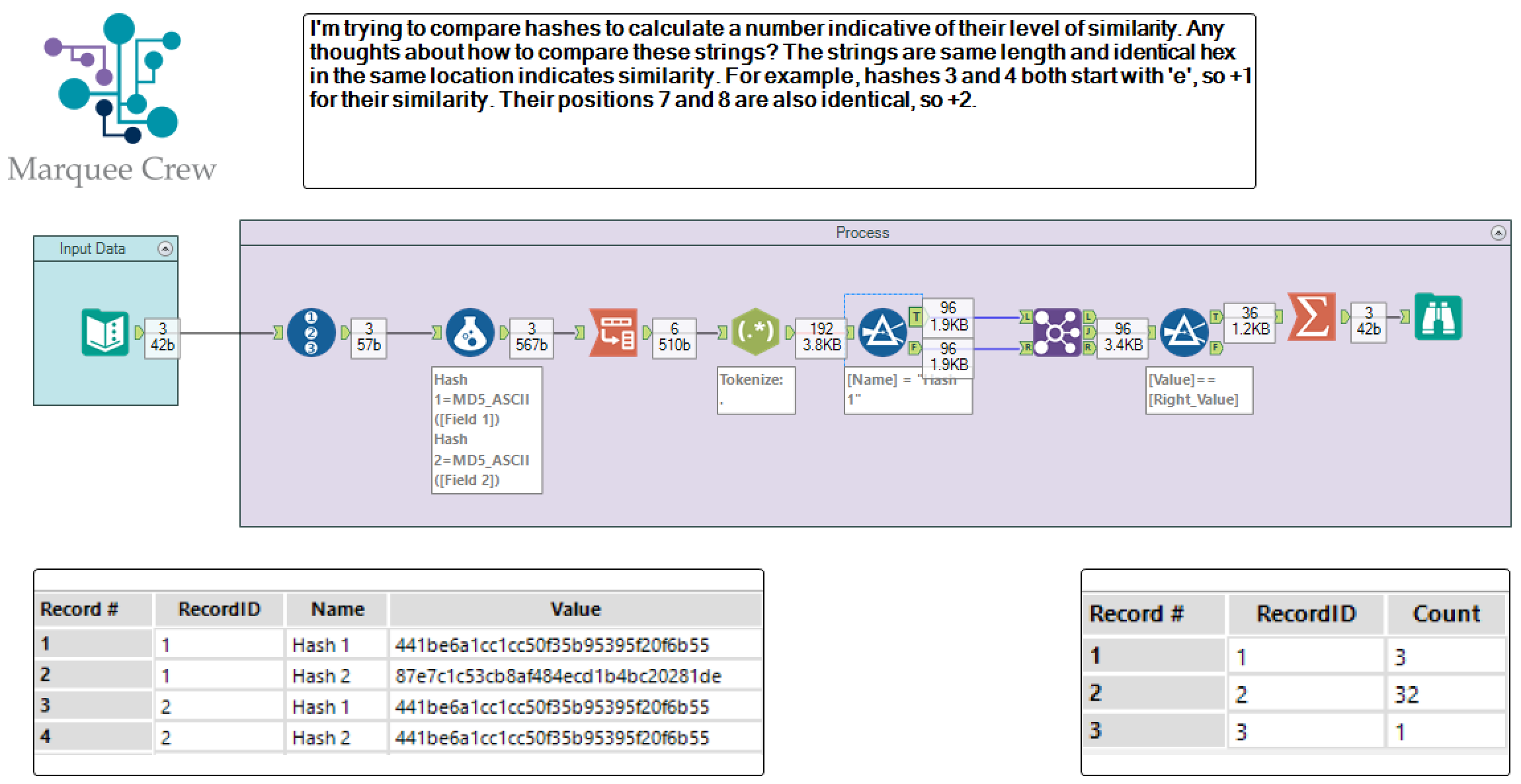

I'm trying to compare hashes to calculate a number indicative of their level of similarity. Any thoughts about how to compare these strings? The strings are same length and identical hex in the same location indicates similarity. For example, hashes 3 and 4 both start with 'e', so +1 for their similarity. Their positions 7 and 8 are also identical, so +2.

| 329036933693649764956c996699e6996b18cf1ce704f719ff19ff19bf39bf3c |

| eff86ff06fe067f0e5c0adc0bd8a9f88df98de885e409ed0dfd047dceff34fe1 |

| ea31de34b874b424be29ee39eb21ed216c616c69c8c9ce49cc61cc39c3c9cdc9 |

| e336e134f070e131e011e210e014e03de831e03af036f058f019f1317907fd83 |

| 870ff603e801e965b8c931e9a1cda1cda58d81e580e5844d880d998509cd036d |

Thoughts?

Solved! Go to Solution.

- Labels:

-

Parse

-

Transformation

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

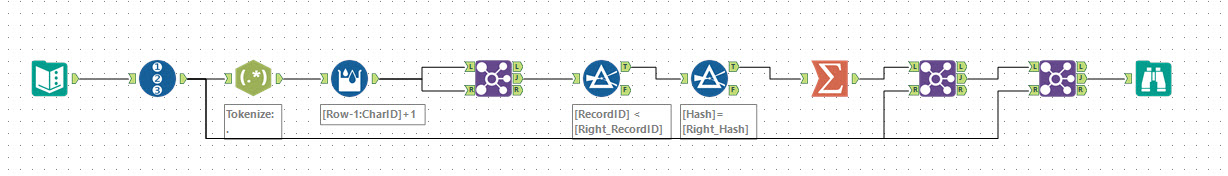

I would break it into character rows.

You can then join it to itself and do a count.

Process something like:

- Add a RecordID

- Use Regex to Tokenise

- Use a multi-row formula to add a CharacterID

- Join self on CharacterID

- Filter so left RecordID < right RecordID and characters equal

- Summarise to group by recordids and count.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

This is an impressive setup, @jdunkerley79. Thanks so much.

Kai :-)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Alas, while elegant, the solution is not practical in my case. I have ten million hashes. Multiplied by 64, that gives me more than half a billion records to join with the same number of records. Do you have any ideas for a more direct comparison of a hash pair? I'm wondering if the Fuzzy Match tool can be used somehow?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Thats a chunky problem - about 5x10¹¹ values to compute///

Think best approach is probably a new formula function and then should be a single c++ call for each function...

Will arr a charmatch function to my formula addin and see if works. Back as soon as have a result. Will be an interesting test of the engine...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Essentially, each row consists of two 64-character hash values, and I need to loop through to compare and count the number of matches, for example:

For x= 1 to 64

If hash1[x] = hash2[x] then count=count+1

This would be trivial and fast in R, but I'm trying to learn Alteryx better, so trying to resist the R-node.

Kai :-)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

I came up with a comparison that essentially doesn't require a JOIN, but does explode columns to rows. It is fairly efficient in that the join that I do use is based upon record position. I understand the simplicity of the array loop. Please do check the timing for this module as I'm interested in knowing if it does improve upon my colleague's solution.

Thanks,

Mark

Chaos reigns within. Repent, reflect and restart. Order shall return.

Please Subscribe to my youTube channel.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Thank you so much, @jdunkerley79. I was able to optimize it down to 35GB and three hours 9 minutes for the whole workflow, which is almost reasonable for this one-off task, but as soon as I'm done with this task on Monday evening, I'm going to try your solution out.

Joining based on position sounds promising, though.

Kai :-)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

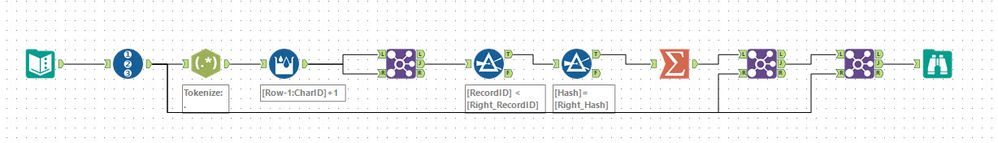

Ah had misunderstood.

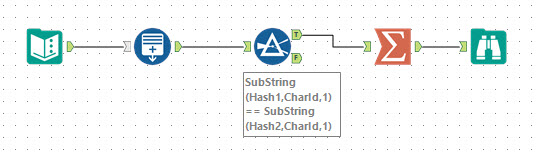

A much simpler problem. I did the following:

Generate a set fo CharID row from 1 to length of the hash.

This will scale it up to 64x original size.

Then Filter where characters at position match

Final group back together counting rows.

Basically same as for loop, I havent dealt with 0 similarity in this case but that could easily done.

Adjusted flow now looks like:

This copes with 0 matches and puts back in input order

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@jdunkerley79 wrote:I would break it into character rows.

You can then join it to itself and do a count.

Process something like:

- Add a RecordID

- Use Regex to Tokenise

- Use a multi-row formula to add a CharacterID

- Join self on CharacterID

- Filter so left RecordID < right RecordID and characters equal

- Summarise to group by recordids and count.

-

Academy

6 -

ADAPT

2 -

Adobe

204 -

Advent of Code

3 -

Alias Manager

78 -

Alteryx Copilot

26 -

Alteryx Designer

7 -

Alteryx Editions

95 -

Alteryx Practice

20 -

Amazon S3

149 -

AMP Engine

252 -

Announcement

1 -

API

1,209 -

App Builder

116 -

Apps

1,360 -

Assets | Wealth Management

1 -

Basic Creator

15 -

Batch Macro

1,559 -

Behavior Analysis

246 -

Best Practices

2,695 -

Bug

719 -

Bugs & Issues

1 -

Calgary

67 -

CASS

53 -

Chained App

268 -

Common Use Cases

3,825 -

Community

26 -

Computer Vision

86 -

Connectors

1,426 -

Conversation Starter

3 -

COVID-19

1 -

Custom Formula Function

1 -

Custom Tools

1,939 -

Data

1 -

Data Challenge

10 -

Data Investigation

3,488 -

Data Science

3 -

Database Connection

2,221 -

Datasets

5,223 -

Date Time

3,228 -

Demographic Analysis

186 -

Designer Cloud

742 -

Developer

4,373 -

Developer Tools

3,531 -

Documentation

528 -

Download

1,037 -

Dynamic Processing

2,940 -

Email

928 -

Engine

145 -

Enterprise (Edition)

1 -

Error Message

2,259 -

Events

198 -

Expression

1,868 -

Financial Services

1 -

Full Creator

2 -

Fun

2 -

Fuzzy Match

713 -

Gallery

666 -

GenAI Tools

3 -

General

2 -

Google Analytics

155 -

Help

4,709 -

In Database

966 -

Input

4,295 -

Installation

361 -

Interface Tools

1,901 -

Iterative Macro

1,095 -

Join

1,959 -

Licensing

252 -

Location Optimizer

60 -

Machine Learning

260 -

Macros

2,864 -

Marketo

12 -

Marketplace

23 -

MongoDB

82 -

Off-Topic

5 -

Optimization

751 -

Output

5,256 -

Parse

2,328 -

Power BI

228 -

Predictive Analysis

937 -

Preparation

5,171 -

Prescriptive Analytics

206 -

Professional (Edition)

4 -

Publish

257 -

Python

855 -

Qlik

39 -

Question

1 -

Questions

2 -

R Tool

476 -

Regex

2,339 -

Reporting

2,434 -

Resource

1 -

Run Command

575 -

Salesforce

277 -

Scheduler

411 -

Search Feedback

3 -

Server

631 -

Settings

936 -

Setup & Configuration

3 -

Sharepoint

628 -

Spatial Analysis

599 -

Starter (Edition)

1 -

Tableau

512 -

Tax & Audit

1 -

Text Mining

468 -

Thursday Thought

4 -

Time Series

432 -

Tips and Tricks

4,187 -

Topic of Interest

1,126 -

Transformation

3,731 -

Twitter

23 -

Udacity

84 -

Updates

1 -

Viewer

3 -

Workflow

9,982

- « Previous

- Next »