Alteryx Designer Desktop Discussions

Building a more "Interactive" Interface

Note: I have not built an interface with Alteryx before, but I am very familiar with the ETL capabilities of Alteryx. So this whole interface side is new to me, but I would like to be able to leverage it to create value.

Here is my situation:

My current workflow takes in a data source with a list of unique identifiers (UIs) (let's call it "Main"). I then join this with another data source that has more specific information on each UI (let's call it "Detail"). However, Detail does not contain every single UI, so I also output a list of all of the UIs from Main that were not found in Detail.

I then manually retrieve the information for each missing UI from a website. The website requires me to search by UI, so I must know the UI before I can get the data (getting all of the data beforehand is not an option). I then add these new UIs to Detail.

I then run the workflow again to link the UIs from Main with the UIs from Detail (which now contains all of the UIs from Main).

Here is what I would like to do. I have two ideas:

1) Use a macro to web scrape the website for the information within the current workflow. However, I cannot find a macro that can take data (the UIs) from within a workflow, search for them on a website, and then return the results. If this exists, please point me to it. If not, I have never made a macro before, so unless it is easy, I would like to avoid it.

2) Create an interface that runs the workflow once and gives the user the list of UIs that need to be searched online. It then prompts the user to enter the information for these UIs. (The user can search for these online outside of the interface, but ideally the search would happen directly inside the interface. I am unsure if that is possible.) The user then enters this information into the interface and clicks a Continue-like button. The interface then runs the workflow again with the new information included.

I hope that this makes sense and is possible to do! Any help is appreciated. Please let me know if I need to be clearer in the explanation. I understand that I am asking for a bit of a complicated process. And if there is a better way to do this, please let me know!

Hi @jedwards543,

If I'm reading it correctly, I think full automation with #1 makes sense, using an iterative macro. For an iterative macro, your input would be the list of UIs: take the first UI from the list, and send all but the first to the "Loop Control" output. Then send that single UI through whatever process you have for scraping, and send the output from that to another Macro Output.

In short, the iterative macro will keep sending "Loop Control" output until that output is empty. Since you remove an item with every iteration, that should work. The rows from the other output will just get unioned (essentially) together across iterations.
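To make the loop concrete, here is a rough Python sketch of the same control flow. It is only an analogy for the macro, not Alteryx code: `run_iteration`, `iterate_until_empty`, and `fake_scrape` are made-up names, and `fake_scrape` is a stand-in for whatever scraping process you end up with.

```python
from typing import Callable, Dict, List, Tuple


def run_iteration(
    pending: List[str], scrape: Callable[[str], Dict[str, str]]
) -> Tuple[Dict[str, str], List[str]]:
    """One pass of the macro: process the first UI, hand back the rest."""
    first, rest = pending[0], pending[1:]
    return scrape(first), rest  # (Macro Output row, "Loop Control" rows)


def iterate_until_empty(
    uis: List[str], scrape: Callable[[str], Dict[str, str]]
) -> List[Dict[str, str]]:
    results = []         # plays the role of the second Macro Output
    pending = list(uis)  # plays the role of the "Loop Control" stream
    while pending:       # the loop ends once "Loop Control" is empty
        row, pending = run_iteration(pending, scrape)
        results.append(row)  # rows from every iteration end up stacked together
    return results


def fake_scrape(ui: str) -> Dict[str, str]:
    # Hypothetical placeholder for the real scraping step.
    return {"UI": ui, "Detail": f"details for {ui}"}
```

Calling `iterate_until_empty(["A", "B", "C"], fake_scrape)` returns one row per UI, in the original order, and an empty input list simply returns no rows.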

Hope that helps!

John

Thank you John!

I'm glad that you support #1 because it is definitely the more automated approach. I have found web scraping macros that can scrape a table from a website. However, I have not found any web scraping macros that can first search in a search bar and then scrape the table that is returned. Could you point me in the direction of resources that will do that or teach me how to do it?

Thank you!

Adding to @JohnJPS's reply:

If, say, you were performing a lookup on Yahoo Finance and needed to look up AYX (a missing value), you could construct the search URL:

https://finance.yahoo.com/quote/AYX?p=AYX

Then send each URL through the download tool.
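Outside of Alteryx, the same URL construction could be sketched in Python as below. This only builds the strings and fetches nothing. `build_lookup_url` is a made-up helper name, and the URL pattern is simply the one from the example above, so treat it as an assumption about how your target site works.

```python
from urllib.parse import quote, urlencode

BASE = "https://finance.yahoo.com/quote"  # pattern taken from the example above


def build_lookup_url(identifier: str) -> str:
    """Build one search URL per missing identifier."""
    symbol = identifier.strip()
    # quote() escapes the path segment; urlencode() escapes the query string.
    return f"{BASE}/{quote(symbol, safe='')}?{urlencode({'p': symbol})}"


missing = ["AYX"]
urls = [build_lookup_url(m) for m in missing]
```

Here `urls[0]` comes out as `https://finance.yahoo.com/quote/AYX?p=AYX`, which is the kind of field you would feed to the Download tool.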

Just a thought,

Mark

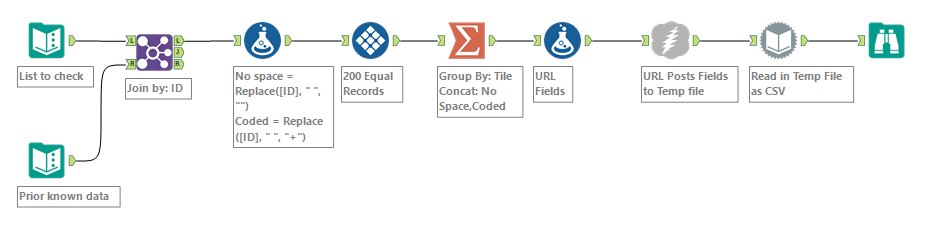

You can use the Inspect feature in Google Chrome prior to clicking on the button in the webpage to see the URL/Headers/Payload that you need to craft. Then with that info, you can make a workflow like:

The attached will:

- Join two data streams to find IDs not in the known file

- Formula to prep the ID: trim stray spaces and replace any remaining spaces with +

- Tile tool to identify batches of 200 records

- Summarize to concatenate, making use of the separator option

- Formula to set URL and concatenate Fields

- Download to a Temp file using Post and passing the Fields as the payload

- Dynamic Input to read in the downloaded CSV file
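For anyone who wants to see the data prep spelled out, the steps before the Download tool can be sketched in Python roughly as follows. This is not what the attached workflow contains: the function names, the `ids` field name, and the comma separator are all assumptions for illustration, and I am reading the ID prep step as "trim, then replace inner spaces with +". Use whatever field name and separator the Inspect panel shows for your site.

```python
from typing import Iterable, List

BATCH_SIZE = 200  # batch size from the Tile step above


def missing_ids(main_ids: Iterable[str], known_ids: Iterable[str]) -> List[str]:
    """IDs in Main that are absent from the known file (the unjoined side)."""
    known = set(known_ids)
    seen = set()
    out = []
    for value in main_ids:
        if value not in known and value not in seen:
            seen.add(value)  # keep each missing ID once, in original order
            out.append(value)
    return out


def prep_id(raw: str) -> str:
    """Trim the ID and replace inner spaces with '+' (assumed reading of the Formula step)."""
    return raw.strip().replace(" ", "+")


def build_payloads(ids: List[str], field: str = "ids", sep: str = ",") -> List[str]:
    """One POST body per batch of up to BATCH_SIZE IDs.

    `field` and `sep` are hypothetical; take the real ones from the site.
    """
    payloads = []
    for start in range(0, len(ids), BATCH_SIZE):
        batch = ids[start:start + BATCH_SIZE]
        payloads.append(f"{field}=" + sep.join(prep_id(i) for i in batch))
    return payloads
```

With 450 missing IDs this produces three payloads (200, 200, and 50 IDs), and with no missing IDs it produces none.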

If all of the equipment IDs match and there are not any Equipment IDs that need to be scraped from the website (which can sometimes happen), how do we prevent the error thrown at the Dynamic Input? Is there a way to say, "If no Equip IDs, do not run this part?"

Thank you!

Yeah, the Dynamic Input tool has a lot of limitations and commonly errors before running. That can make you think there is an error when there is not, and an attempt to fix it can actually break the workflow. The Dynamic Input tool is not resilient.

In the attached, I replaced it with a quick macro that will not error and will not give you an error prior to running.
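The idea behind the replacement is just "only read the file when there is something to read". I can't show the macro internals inline, but the guard can be sketched in Python like this. `read_downloaded_rows` is a made-up name, and the sketch assumes the download is a plain CSV with a header row.

```python
import csv
import os
from typing import Dict, List


def read_downloaded_rows(path: str, requested_ids: List[str]) -> List[Dict[str, str]]:
    """Read the downloaded CSV, or return no rows when there is nothing to read."""
    if not requested_ids:
        return []  # every ID matched, so skip this branch entirely
    if not os.path.isfile(path) or os.path.getsize(path) == 0:
        return []  # nothing was downloaded; pass along an empty result instead of failing
    with open(path, newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))
```

Downstream steps then get an empty list instead of an error when there were no Equipment IDs to scrape.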
