Engine Works

Under the hood of Alteryx: tips, tricks and how-tos.

AMP Engine Technical Deep Dive | Part 1 | Why AMP?

On January 1st 2016, founding CTO Ned Harding, now-former Alteryx software engineer Scott Wiesner and I started work on a proof of concept for a new Alteryx core engine: a project that aimed to reimagine how Alteryx transfers data as a workflow processes it. The project was codenamed e2, and now, after many more person-hours from many more engineers working across Kiev, Ukraine; Cambridge, UK; and Broomfield, Colorado, I am proud to see it released to our users as the AMP engine.

Join me in this blog series for a technical journey through the AMP engine: how does it process records, store data and join data streams? And what does the new engine mean for you as a user?

But before we get there, I want to start with the why of AMP. Why have we spent the last four years reimagining a core piece of the technology behind our product?

Moore's Law

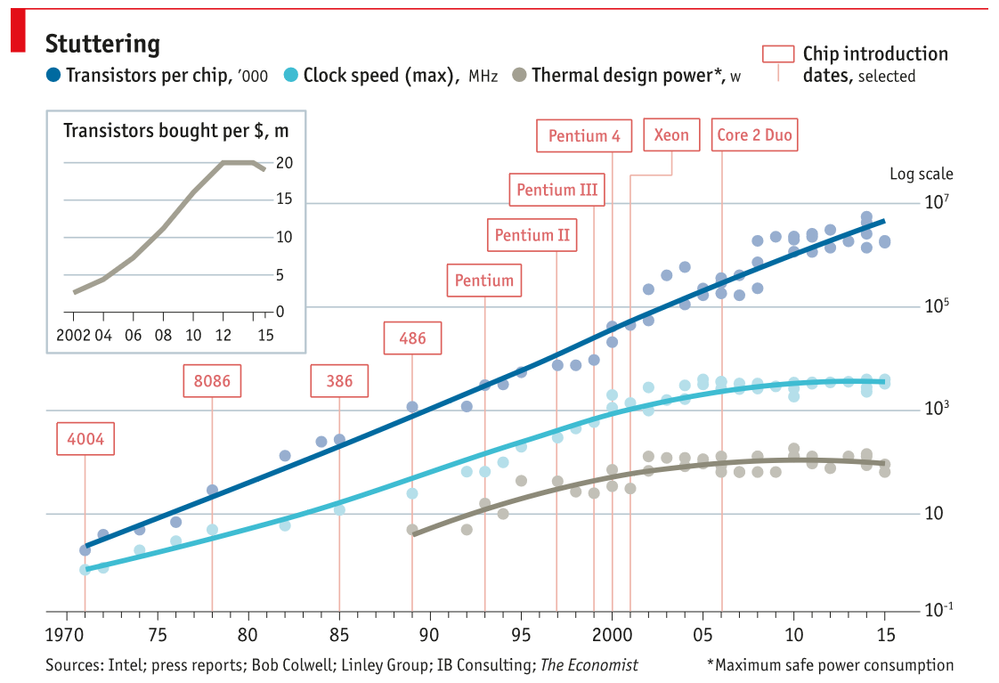

And to tell that story we have to go back quite a bit further than 2016 to 1965 and the very early days of computer science and meet Gordon Moore, one of the co-founders of Intel. In 1965 Moore made an observation that the number of transistors on an integrated circuit was doubling about every year (later revised to every two years). This observation was later coined as Moore's Law and has continued to hold true over the decades that followed. Now being the data driven people that we are Moore's Law can be observed in this chart:

Source: https://www.economist.com/technology-quarterly/2016-03-12/after-moores-law

The chart uses a logarithmic scale (something that has become very familiar with recent world events) and shows Moore's Law in action from 1970 to near the present day. If we look at the dark blue line, we can see it follows a straight upward course. On a logarithmic scale a straight line means exponential growth, which is exactly what Moore's Law describes.
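Why a straight line on a log scale means exponential growth can be made explicit with a short worked formula. This is a sketch that assumes a clean doubling period T (two years, per Moore's revised figure) and a starting count N_0 in year t_0; real chips only approximately follow this idealized curve.

```latex
% Transistor count N(t): N_0 transistors in year t_0, doubling every T years
N(t) = N_0 \cdot 2^{(t - t_0)/T}

% Take log base 10 of both sides: \log N is a linear function of t
\log_{10} N(t) = \log_{10} N_0 + \frac{\log_{10} 2}{T}\,(t - t_0)

% With T = 2 years, the slope is \log_{10} 2 / 2 \approx 0.301 / 2 \approx 0.15,
% i.e. about 0.15 orders of magnitude per year, or a factor of
% 2^{10/2} = 32 every ten years.
```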

Why do we care about Moore's Law as software engineers and as software users? Well, transistors are the fundamental building blocks that perform a computer's calculations, so the more transistors we have, the more calculations we can do and (in theory) the faster our software will run. Moore's Law is great for both software engineers and users, who can simply wait for the hardware manufacturers to build new chips and watch their software run faster. Except, of course, as with all great stories there is a catch, and that catch is the light blue line on that chart: clock speed.

Clock speed measures how many cycles a chip executes per second, a rough proxy for how fast it can perform calculations. Around 2005 (coincidentally, right about the time that Ned started writing the original e1 engine) we begin to see a "flattening of the curve" for clock speed. So what gives? Didn't we say that more transistors means more speed, which means faster software? Well, at about this time the hardware manufacturers began to run into physical limits, thermal limits among them, that stopped them from simply pushing clock speeds ever higher. But they still had a Moore's Law target to hit, and transistor counts could keep climbing, so the answer was for CPUs to go multi-core. This creates a problem for software engineers: if they don't write their applications to be multi-threaded, they can't take advantage of the extra power the newest CPUs provide.

And this brings us back to our Alteryx story, because at its heart the e1 core engine is single-threaded. That's not to say it never uses multiple threads. In fact, many of the individual tools are multi-threaded (the Input and Output tools have background threads for reading from and writing to disk, and the sort algorithm used across many tools is multi-threaded), but the main data pump that moves records between tools is single-threaded (check out the animations in this post). When that engine was written, that was exactly the right choice: most machines were single-core, with perhaps quad-core on servers, so this threading model let us use the CPU power available at the time very efficiently. But times change: the laptop I am currently using as a word processor has 12 logical processors! And the reality is that the e1 engine cannot keep all of those cores busy for sustained periods.
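To make "single-threaded data pump" a little more concrete, here is a deliberately simplified Python model of one. It is a sketch under heavy assumptions, not e1's actual implementation: the `Record` and `Tool` types and the `single_threaded_pump`, `add_total` and `keep_large` functions are hypothetical, and real Alteryx tools do far more than map or filter a single record. What it shows is the shape of the bottleneck: in this model every record passes through every tool on one thread, so extra cores sit idle while the loop runs.

```python
from typing import Callable, Iterable, List, Optional

# Hypothetical, simplified types: a record is one row (column -> value), and
# a tool turns one record into a new record, or returns None to drop it.
Record = dict
Tool = Callable[[Record], Optional[Record]]


def single_threaded_pump(records: Iterable[Record], tools: List[Tool]) -> List[Record]:
    """Push each record through every tool in turn, one record at a time.

    All of the work happens on the calling thread, so no matter how many
    cores the machine has, this loop keeps only one of them busy.
    """
    output: List[Record] = []
    for record in records:
        current = record
        for tool in tools:
            result = tool(current)
            if result is None:  # a filter-like tool dropped this record
                break
            current = result
        else:  # no tool dropped the record, so it reaches the output
            output.append(current)
    return output


def add_total(r: Record) -> Record:
    """Formula-like tool (hypothetical): derive a new column."""
    return {**r, "total": r["qty"] * r["price"]}


def keep_large(r: Record) -> Optional[Record]:
    """Filter-like tool (hypothetical): drop records with total <= 10."""
    return r if r["total"] > 10 else None


rows = [{"qty": 1, "price": 5}, {"qty": 3, "price": 5}]
print(single_threaded_pump(rows, [add_total, keep_large]))
# [{'qty': 3, 'price': 5, 'total': 15}]
```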

Multi-Thread all the Tools!

But why can't we just multi-thread all the existing tools in e1, I hear you ask? Unfortunately, multi-threaded code has overheads: handing work to another thread and coordinating the results both cost time. So if we were to take the Formula tool and evaluate each row of data in its own thread, we would find that, although we were using a lot more CPU, the overall runtime would be slower, because we would pay all of that overhead for every single row.
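A toy cost model shows why per-row threading can lose to a single thread, and why batching helps. Everything here is an assumption for illustration: the `estimated_runtime_ns` function is hypothetical, the hand-off cost is assumed to be paid serially by the dispatching thread, the work is assumed to spread perfectly across threads, and the nanosecond figures are made up rather than measured from Alteryx.

```python
import math


def estimated_runtime_ns(rows: int, work_ns: float, overhead_ns: float,
                         threads: int, batch_size: int) -> float:
    """Toy cost model for fanning rows out to a pool of threads.

    Hypothetical assumptions, for illustration only:
      * each task (one batch of `batch_size` rows) costs `overhead_ns` to
        hand off, and that hand-off is paid serially by one dispatching thread;
      * the per-row work (`work_ns`) divides perfectly across `threads`.
    """
    tasks = math.ceil(rows / batch_size)
    return tasks * overhead_ns + rows * work_ns / threads


# Made-up numbers: cheap per-row work, comparatively expensive hand-off.
ROWS, WORK_NS, OVERHEAD_NS, THREADS = 1_000_000, 100, 1_000, 12

single = ROWS * WORK_NS  # one thread, no hand-off cost at all
per_row = estimated_runtime_ns(ROWS, WORK_NS, OVERHEAD_NS, THREADS, batch_size=1)
batched = estimated_runtime_ns(ROWS, WORK_NS, OVERHEAD_NS, THREADS, batch_size=4096)

for label, ns in [("single thread", single), ("thread per row", per_row),
                  ("batches of 4096", batched)]:
    print(f"{label:>16}: {ns / 1e6:8.1f} ms")
```

With these invented numbers, the thread-per-row version comes out roughly ten times slower than a single thread, even though it keeps twelve threads busy, while batching shrinks the hand-off cost to a small fraction of the total.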

Enter AMP

The answer was a completely new approach to how the engine processes records. No longer would we process records individually; instead we would process them in batches (called Record Packets), and threading would be handled at the engine level rather than by individual tools. Reading data from disk also changes, with AMP optimized for modern solid-state drives (SSDs). Alongside these data transport changes, we needed to reimagine our join algorithm to work efficiently across multiple cores, and we took a fresh look at how we store data in records and yxdb files.
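The two ideas in that paragraph, batching records into packets and moving threading out of the tools and into the engine, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not AMP's implementation: `record_packets`, `run_tool_on_packets` and the default packet size of 4096 are hypothetical, and AMP's real packet sizing and scheduling aren't described here. Note also that in CPython the global interpreter lock means threads like these won't actually speed up pure-Python per-record work; the sketch shows the structure, not the performance.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def record_packets(records: Iterable[T], packet_size: int) -> Iterator[List[T]]:
    """Group a stream of records into batches of at most `packet_size`."""
    if packet_size < 1:
        raise ValueError("packet_size must be at least 1")
    it = iter(records)
    while packet := list(islice(it, packet_size)):
        yield packet


def run_tool_on_packets(records: Iterable[T], per_record: Callable[[T], U],
                        packet_size: int = 4096, max_workers: int = 4) -> List[U]:
    """Apply `per_record` to every record, submitting one packet per task.

    The "engine" (this function) owns the threading; the "tool"
    (`per_record`) only ever sees one record at a time. The task hand-off
    cost is paid once per packet rather than once per row, and
    Executor.map returns results in submission order, so output order
    matches input order.
    """
    def process_packet(packet: List[T]) -> List[U]:
        return [per_record(r) for r in packet]

    results: List[U] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for out_packet in pool.map(process_packet, record_packets(records, packet_size)):
            results.extend(out_packet)
    return results


rows = [{"id": i, "qty": i} for i in range(10)]
doubled = run_tool_on_packets(rows, lambda r: {**r, "qty_x2": r["qty"] * 2},
                              packet_size=3, max_workers=2)
print([len(p) for p in record_packets(rows, 3)])  # [3, 3, 3, 1]
print(doubled[-1])  # {'id': 9, 'qty': 9, 'qty_x2': 18}
```

Keeping the tool function unaware of threads is the design point: a tool author writes per-record logic once, and the engine decides how many packets run at a time.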

Join me in part 2 for a high-level overview of what makes AMP tick under the hood.

Editor's note: Hear the story straight from Adam in the latest episode of the Alter Everything podcast...


Former account of @AdamR. Find me at https://community.alteryx.com/t5/user/viewprofilepage/user-id/120 and https://www.linkedin.com/in/adriley/
