Data Science

- Extracting Additional Information from the Predict...


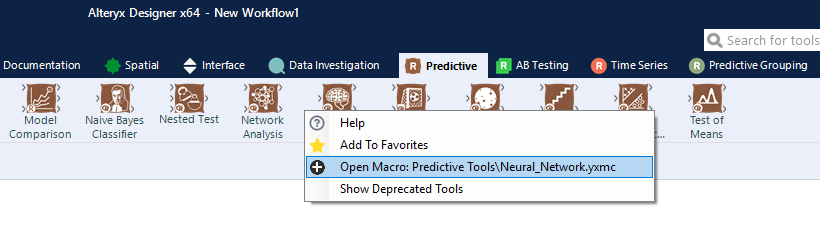

The Alteryx Predictive tools are a suite of tools that can be added to Alteryx Designer to perform data investigation, time series analysis, A/B testing, and prescriptive analysis, as well as to train, test, and score a variety of machine learning models.

On the Alteryx Community, I occasionally see questions on how to extract additional information from outputs of the predictive tools, such as numbers in the Report output or an additional metric for the model that is not included in the report.

Extracting additional information about a model trained with a predictive tool is possible because of the way the predictive tools are built. The predictive tools are Alteryx macros, which means that at least part of the functionality of the tool is built with Alteryx tools.

The predictive tools typically contain an R tool with R code that executes the core functionality of the tool, such as training a model with provided input data.

The predictive tools return a serialized model object from the “O” (object) output anchor.

In this context, you can think of serialization as packing the trained model object (an R object) into a shipping box. Serialization is what allows something created in the R programming language to be pulled out of R, through the Alteryx Engine, and onto the canvas in a data stream.

The model object is the bundle of components that make up the trained model. What a model object includes depends on what kind of model it is (e.g., the components that define a logistic regression model are different from the components that define a decision tree), but it typically includes the structure of the trained model (e.g., predictor variables, the branches and nodes of a decision tree, or the number of hidden layers in a neural network) and the weights, coefficients, or split thresholds that were learned from the training data.
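To make the shipping-box idea concrete, here is a minimal sketch in plain R, outside of Designer. It uses base R's serialize() and unserialize() as stand-ins; the exact encoding the predictive tools use is not described in this post, so treat that part as an assumption. The model is a small logistic regression on the built-in mtcars data set, chosen only for illustration.

```r
# Train a small logistic regression on a built-in data set (illustrative only)
model <- glm(am ~ wt + hp, data = mtcars, family = binomial)

# The model object is a named list of components
print(class(model))
print(head(names(model)))

# "Pack the box": turn the R object into a single text string.
# Assumption: base R serialize() stands in for whatever the predictive tools do.
packed <- rawToChar(serialize(model, connection = NULL, ascii = TRUE))

# "Unpack the box": rebuild the R object from the string
restored <- unserialize(charToRaw(packed))

# The restored object carries the same learned coefficients
print(all.equal(coef(model), coef(restored)))
```

The point of the sketch is only that a trained model can travel as text and come back as a usable R object; the names packed and restored are made up for this example.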

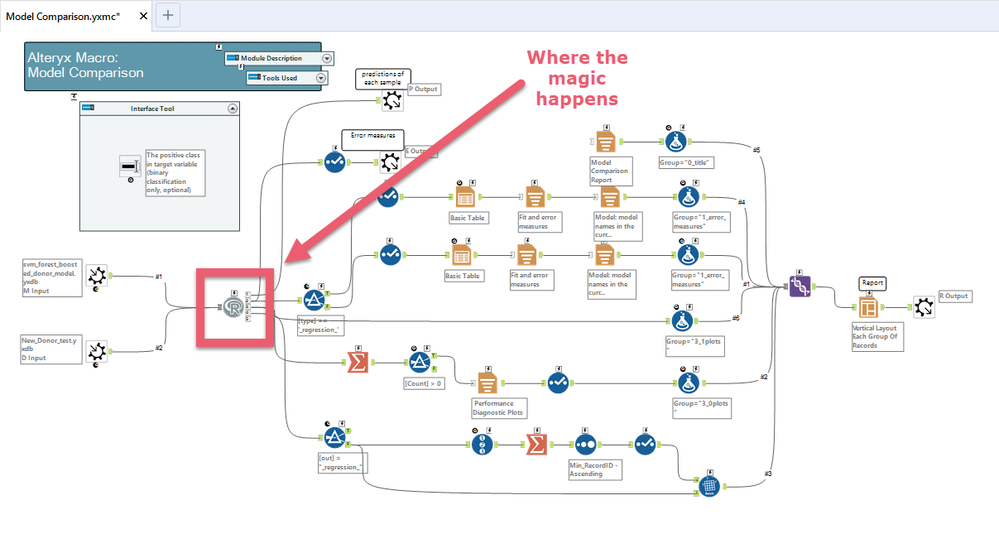

Because this packaged model object is exported into a data stream in Designer, it is possible to interact with the model object after it is trained by opening the serialization package in an R tool. This is exactly how the Score, Model Comparison, Cross-Validation, and Stepwise tools work.

These tools (among others) accept a model object (from the "O" output anchor) as an input, unserialize it in an R tool with a couple of lines of R code, and then use code in the R tool to work with the model object and create an intermediary output. For example, the Score tool loads the model object and new data into an R tool, uses the unserialized model object to score the new data, and then returns the model's estimate for each record as an output.
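As a rough illustration of what a scoring step does with an unserialized model, the sketch below runs in plain R. It is not the Score tool's actual code: base R serialize()/unserialize() stand in for the Alteryx helpers, the one-row data frame with an Object column imitates the incoming data stream, and the new records are hypothetical values.

```r
# Stand-in for a model arriving on the "O" anchor: a linear model stored as text.
# Assumption: base R serialize()/unserialize() replace the Alteryx helpers here.
trained <- lm(mpg ~ wt, data = mtcars)
incoming <- data.frame(
  Object = rawToChar(serialize(trained, connection = NULL, ascii = TRUE)),
  stringsAsFactors = FALSE
)

# Unserialize the model, as a scoring step would
model <- unserialize(charToRaw(incoming$Object[1]))

# New records to score (hypothetical values)
new_data <- data.frame(wt = c(2.5, 3.0, 3.5))

# Attach the model's estimate to each record
scored <- cbind(new_data, Score = predict(model, newdata = new_data))
print(scored)
```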

It's as simple as that! With a little bit of R-and-Alteryx know-how, an R tool, and Google as a trusty sidekick, you should be able to extract any additional information you might need from a model.

The basic process for performing this kind of task is as follows:

- Connect the O output of your predictive tool to an R tool.

- In the R tool, write code to read in and unserialize the model object.

- Apply code that extracts the information you're looking for to the model object (this might be the Google part).

- Write code that writes the extracted information back out to Alteryx.

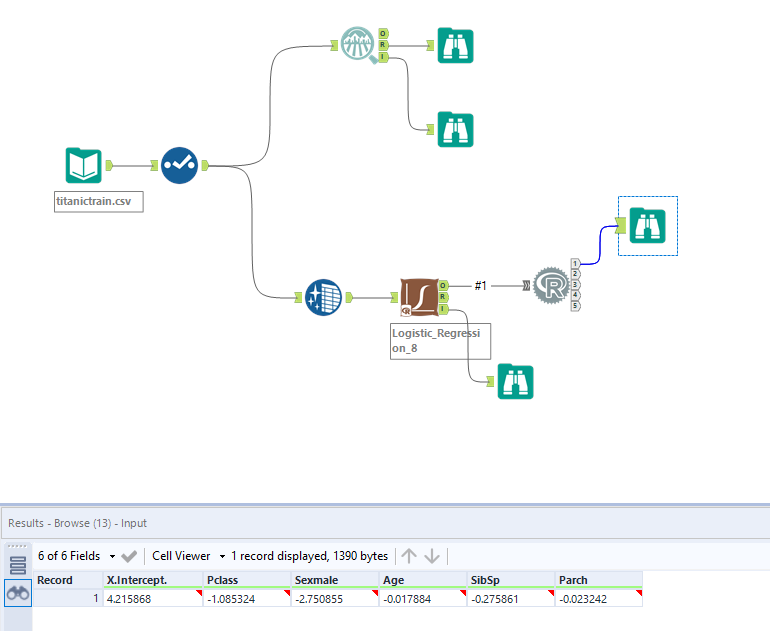

As an example, if you'd like to extract the coefficients of a logistic regression model in your workflow (and for some reason you don't feel like using the Model Coefficients tool, which does exactly that for all the generalized linear model tools), first you would hook up the O anchor of your Logistic Regression tool to an R tool and paste in the generic code that reads in and unserializes a model object created by a predictive tool.

# Read in serialized model object

modelObj <- read.Alteryx("#1", mode="data.frame")

# Unserialize model

model <- unserializeObject(as.character(modelObj$Object[1]))

The next thing you need to know is what kind of model object is being created. Beyond “logistic regression” or “linear regression,” this means knowing which R package was used to generate the model. Sometimes the same command or code might work across different model types (hooray for polymorphism!), but it is better to know exactly what kind of model you’re working with to ensure you use the right code to extract information. Additionally, there are add-on packages that you might be able to leverage that only work with models generated from certain packages.
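If you already have an unserialized model in hand, R itself can tell you a good deal about what it is. The sketch below uses two illustrative models built with base R rather than models from a predictive tool, so the classes and calls you see in Designer may differ.

```r
# Two illustrative models built with base R (stats package)
linear_model <- lm(mpg ~ wt, data = mtcars)
logistic_model <- glm(am ~ wt + hp, data = mtcars, family = binomial)

# class() reports the object type, which points to the function that built it
print(class(linear_model))    # "lm"
print(class(logistic_model))  # "glm" "lm"

# The stored call shows exactly how the model was fit
print(logistic_model$call)

# coef() is a generic function, so the same call works on both model types
print(coef(linear_model))
print(coef(logistic_model))

# inherits() is a safe way to branch on the model type in your own code
is_glm <- inherits(logistic_model, "glm")
```

The coef() calls are an instance of the polymorphism mentioned above: one function name, different model classes.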

In the before-times, discovering which package a tool leverages to generate a model might have required some detective work. Now, for your viewing pleasure and use, I’ve created this handy spreadsheet. You can learn more about the packages installed with the R tool here.

Once you know what type of model object you’re working with, if you don’t know the R code you need off the top of your head, you can turn to the Google machine.

For example, if I am trying to find the code to help me extract coefficients from a logistic regression model, I might search something like:

"R glm logistic regression extract coefficients"

I include R to specify the programming language I am working in, glm names the function I am working with, logistic regression is the model, and extract coefficients is what I'm trying to do.

The first link that shows up for me when I perform this search is a StackOverflow thread. StackOverflow is a lifesaving question and answer forum for developers. It is one of my favorite websites on the internet (after the Alteryx Community, of course). If you find a StackOverflow link, you're probably on the right track to finding the code you need.

https://stackoverflow.com/questions/4780152/extract-coefficients-from-glm-in-r

This particular thread contains a few different code snippets that will work for our use case. For simplicity, let's try:

# extract coefficients

data <- model$coefficients

Note: To work with some model objects, you might need to load the library the model was built with. For example, the importance() function, which extracts variable importance from a random forest model (the Forest Model tool), requires that the randomForest library be loaded. Because this example does not use a function from a particular package (we access a component of the model object with the dollar sign operator), we do not need to load a package.

As the last step, we will need to write the data back out to a data stream in our workflow with the function write.Alteryx().

# write out coefficients to data stream

write.Alteryx(data, 1)

Note: In order to write things out of the R tool successfully, the data needs to be in a data frame format. You can check the structure of the data returned by a function or command without writing it out by calling print() or str() on your data object and checking the results window. You can read more about R data frames in the blog post Code Friendly Data Structures: Data Frames in R.
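Here is a small plain-R sketch of that check, plus one way to reshape coefficient output into a data frame. The model is an illustrative glm fit on mtcars, and the names coef_matrix and coef_table are made up for this example; the resulting data frame is the kind of object you would then hand to write.Alteryx() inside an R tool.

```r
model <- glm(am ~ wt + hp, data = mtcars, family = binomial)

# model$coefficients is a named numeric vector, not a data frame
coefs <- model$coefficients
str(coefs)
print(is.data.frame(coefs))  # FALSE

# summary() exposes a matrix with estimates, standard errors, and p-values
coef_matrix <- summary(model)$coefficients

# Convert to a data frame, moving the row names into a regular column
coef_table <- data.frame(
  Variable = rownames(coef_matrix),
  coef_matrix,
  row.names = NULL,
  check.names = FALSE
)
str(coef_table)
```

Moving the variable names into their own column keeps them from being lost if row names are dropped on the way out of R.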

And now we have our model's coefficients in an Alteryx data stream.

This is a simple (and depending on what you do with the coefficients, possibly unnecessary/ill-advised) example, but it works as a template to open up a lot of possibilities. In addition to using functions that can extract additional model metrics and information, there are many R packages that have been designed to enhance model interpretability by creating additional plots or generating additional metrics from a model object.

One example is the NeuralNetTools package, which makes additional plots for neural networks generated with the R package nnet, including a general diagram of the structure of the trained neural network. You can read about this process in the KB article How To: Create a Plot of an Alteryx Neural Network Model.

Another example is leveraging the R package randomForestExplainer to create additional explanatory plots for a random forest model.

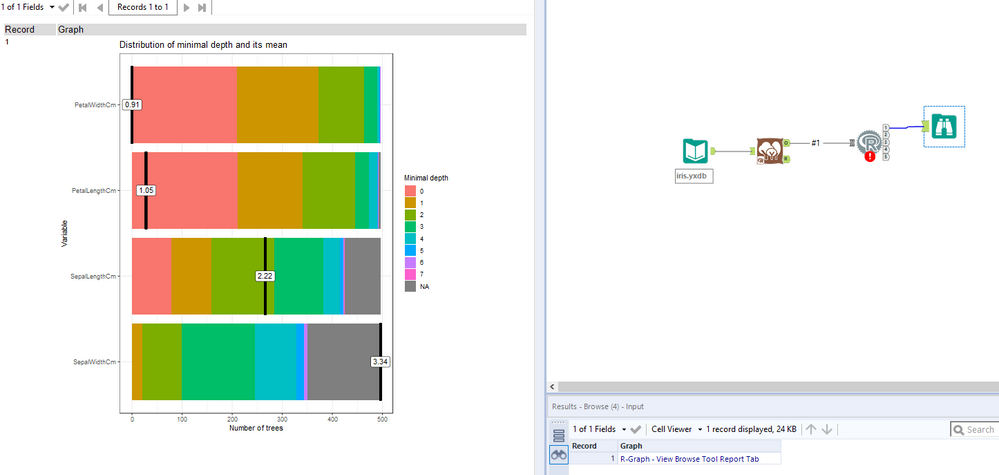

In this example, we create a plot of the Distribution of Minimal Depths of predictor variables in the trees that make up the forest, which can be used to understand variable importance, and how the predictor variables contribute to the forest.

The code to accomplish this follows the same general structure as the first example, with a few twists. The first is that the randomForestExplainer package doesn't ship with Alteryx by default, so we need to install it. Here, I have a function that installs the package only if it is not already installed, and then loads it.

# conditionally install visualization package (if it is not present in library)
# package documentation: https://cran.rstudio.com/web/packages/randomForestExplainer/vignettes/randomForestExplainer.html
cond.install <- function(package.name) {
  options(repos = "http://cran.rstudio.com") # set repo
  # check for package in library; if the package is missing, install it
  if (!(package.name %in% rownames(installed.packages()))) {
    install.packages(package.name, lib = .libPaths()[2])
  }
  # load the package whether it was just installed or was already present
  library(package.name, character.only = TRUE)
}

# conditionally install package
cond.install('randomForestExplainer')

Note: The function is currently set to install the package to the second R library associated with the R tool. On my machine, the first library is the admin library and the second is the user library. You can check the libraries on your machine by running the function .libPaths() from an R tool and checking the Results Window.
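Before installing anything, it can help to see which library folders R is using and which packages are actually missing. The following is a minimal sketch in plain R; missing_packages() is a hypothetical helper written for this example, not part of Alteryx or of any package.

```r
# List the library folders this R session can see, in search order
print(.libPaths())

# Hypothetical helper: return the subset of packages that are not installed
missing_packages <- function(package.names) {
  installed <- vapply(
    package.names,
    requireNamespace,
    logical(1),
    quietly = TRUE
  )
  package.names[!installed]
}

# "stats" ships with R; the second name is made up and should not exist
print(missing_packages(c("stats", "notARealPackage123")))
```

Nothing is installed by this check, so it is safe to run first and decide on the target library afterward.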

Next, we read in and unserialize the model:

# Read in serialized model object

modelObj <- read.Alteryx("#1", mode="data.frame")

# Unserialize model

model <- unserializeObject(as.character(modelObj$Object[1]))

Finally, we open a plot in the first output anchor of the tool and use the function plot_min_depth_distribution() on the unserialized model to create the plot.

#create a slot for an output graph in anchor 1

AlteryxGraph(1, width=576, height=576)

# Plot the distribution of minimal depth

plot_min_depth_distribution(model)

There are a lot of different ways to leverage the model objects created by Alteryx - I'd be really excited to learn how you've used this trick. Please post any examples you have of extracting information or expanding on the outputs of the Predictive tools in the comments!

Here are some other examples from across the community on leveraging custom R code to get more information from a model object:

Extract ARIMA and ETS Coefficients

Fitted Values Chart- How to Get Values?

Variable Importance Plot Boosted Model Values

Extract and Use Coefficients from Regression Tool Further Down the Flow

How to Understand and Visualize Variable Influences in a Random Forest

A geographer by training and a data geek at heart, Sydney joined the Alteryx team as a Customer Support Engineer in 2017. She strongly believes that data and knowledge are most valuable when they can be clearly communicated and understood. She currently manages a team of data scientists that bring new innovations to the Alteryx Platform.
