Alteryx Designer Desktop Discussions


Is there a way to do a delete statement in In-DB?


Hi All,

Is there a way to run a delete statement with the In-DB tools in Alteryx? I know the Write Data In-DB tool has a "Delete Data and Append" creation mode, but there is no way to specify a WHERE condition or its values there. With the regular (non-In-DB) tools, I could set a Pre or Post SQL statement by placing the tool in a macro, but when I try the same with the In-DB tools, there is nowhere to specify SQL statements. Could someone help with this?

Regards,

Jeeva.
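To make the gap concrete: "Delete Data and Append" empties the whole table, whereas what is being asked for is a delete restricted by a WHERE clause, followed by an append. A minimal sketch of that targeted pattern, using Python's built-in sqlite3 as a stand-in database; the table `main_table` and its columns are hypothetical, not anything from Alteryx.

```python
import sqlite3


def load_months(conn, rows, months_to_replace):
    """Delete only the given months from main_table, then append the new rows."""
    months = list(months_to_replace)
    with conn:  # one transaction: the delete and the insert commit together
        if months:
            placeholders = ",".join("?" * len(months))
            conn.execute(f"DELETE FROM main_table WHERE month IN ({placeholders})", months)
        conn.executemany("INSERT INTO main_table (month, amount) VALUES (?, ?)", rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE main_table (month TEXT, amount INTEGER)")
conn.executemany("INSERT INTO main_table VALUES (?, ?)",
                 [("2016-01", 10), ("2016-02", 20), ("2016-03", 30)])

# Replace March only; January and February are left untouched.
load_months(conn, [("2016-03", 35)], ["2016-03"])
print(conn.execute("SELECT month, amount FROM main_table ORDER BY month").fetchall())
# [('2016-01', 10), ('2016-02', 20), ('2016-03', 35)]
```

Running the delete and insert in one transaction means a failure halfway through leaves the table as it was.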


Labels: In Database, Macros

---

Hi Jeeva,

So, there is no easy out-of-the-box way to just 'delete records In-DB' that I know of; feel free to post it in the Ideas section. I've looked at the issue a couple of different ways, but with the information spread across 10 comments, I'm not sure I understand the original use case. One thing to remember if you want to simulate SQL code: you can put a Dynamic Output In-DB tool on your In-DB tools to see the query that is being sent to the database. This may help with troubleshooting if you want to try some methods.

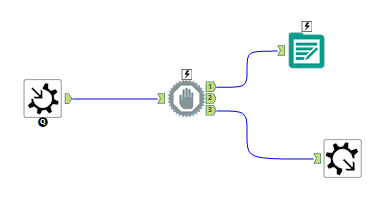

You could use a Block Until Done tool in the macro, so that nothing exits the macro until the Output tool has finished. Of course, if this is an In-DB macro, that all changes...

However, the other option is to use a Formula tool to change one of the fields to a Delete flag, then use that flag in an Output tool Post SQL delete statement, similar to the response (#7) from @MarqueeCrew. I think I may have your order the wrong way around, though, as you want to delete the records and then write data In-DB...?

Kane
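The flag-then-Post-SQL idea can be sketched as follows, again with sqlite3 standing in for the real database. The `delete_flag` column, the `POST_SQL` text and the function name are hypothetical; the `UPDATE` only simulates whatever the Formula and Output tools write. The point is the ordering: the flagged rows are written first, and the Post SQL delete runs only afterwards.

```python
import sqlite3

# Hypothetical Post SQL statement configured on the Output tool.
POST_SQL = "DELETE FROM main_table WHERE delete_flag = 'D'"


def write_then_post_sql(conn, months_to_remove):
    with conn:
        # Stand-in for the Formula + Output tool step: set the flag on matching rows.
        conn.executemany(
            "UPDATE main_table SET delete_flag = 'D' WHERE month = ?",
            [(m,) for m in months_to_remove],
        )
        # Post SQL runs only after the write above has finished.
        conn.execute(POST_SQL)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE main_table (month TEXT, amount INTEGER, delete_flag TEXT)")
conn.executemany("INSERT INTO main_table (month, amount) VALUES (?, ?)",
                 [("2016-01", 10), ("2016-02", 20), ("2016-03", 30)])
write_then_post_sql(conn, ["2016-02"])
print(conn.execute("SELECT month FROM main_table ORDER BY month").fetchall())
# [('2016-01',), ('2016-03',)]
```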

---

OK, thanks all for your explanations. Let me explain the actual situation I am trying to handle.

I have a table that holds data for the past 12 months. Going forward, every month I receive a rolling 12 months of data: for example, first JAN-DEC, the next month FEB-JAN, then MAR-FEB, and so on. The most important thing here is that I don't have any key in this data. So the first time, I load the whole data set into my main table. The next month, I load the newly received data into a stage table, then use In-DB tools to compare the main and stage tables and find all the months in the overlapping period that have changes. Then I load only the data for those changed months from the stage table into the main table, and delete the existing data for those months from the main table. The problem I face with In-DB is that after I find the months to delete and reload, I cannot delete those months with the Write Data In-DB tool: it just deletes the whole table, with no option to specify a WHERE condition.

Challenges: I don't want to delete all the data from the main table every time, I don't want to create a new table every time, and I don't want to stream all the data out of In-DB. (These constraints exist mainly because the amount of data is huge.)

And when I try to use a flag, I am not sure how to update the flag in the table based on the months I found. Also, I don't know how to delete based on a flag In-DB, and if I do it outside In-DB, how does the Output tool with Post SQL know whether the data load has completed?

Let me know if you need any clarification.
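The keyless comparison described above can be expressed as two set differences on whole rows. Below is a minimal sketch with sqlite3 standing in for the database; the table names and the three-column schema are hypothetical. Main is restricted to the months present in the stage table so that the month rolling out of the window is kept as history (an assumption about the intended behaviour). Because `EXCEPT` works on distinct rows, a change that only alters how many times an identical row appears would not be detected.

```python
import sqlite3

# Months whose rows differ between stage and main, in either direction.
CHANGED_MONTHS_SQL = """
SELECT month FROM (SELECT * FROM stage_table EXCEPT SELECT * FROM main_table)
UNION
SELECT month FROM (
    SELECT * FROM main_table WHERE month IN (SELECT month FROM stage_table)
    EXCEPT
    SELECT * FROM stage_table
)
"""


def reload_changed_months(conn):
    with conn:
        changed = sorted(row[0] for row in conn.execute(CHANGED_MONTHS_SQL))
        if changed:
            ph = ",".join("?" * len(changed))
            # Delete only the changed months, then reload them from stage.
            conn.execute(f"DELETE FROM main_table WHERE month IN ({ph})", changed)
            conn.execute(
                f"INSERT INTO main_table SELECT * FROM stage_table WHERE month IN ({ph})",
                changed,
            )
    return changed


conn = sqlite3.connect(":memory:")
for name in ("main_table", "stage_table"):
    conn.execute(f"CREATE TABLE {name} (month TEXT, product TEXT, qty INTEGER)")
conn.executemany("INSERT INTO main_table VALUES (?, ?, ?)",
                 [("2016-01", "A", 1), ("2016-02", "B", 2), ("2016-03", "C", 3)])
conn.executemany("INSERT INTO stage_table VALUES (?, ?, ?)",
                 [("2016-02", "B", 2), ("2016-03", "C", 30), ("2016-04", "D", 4)])
print(reload_changed_months(conn))  # ['2016-03', '2016-04']
```

February is unchanged and is not touched, March changed and is reloaded, and April is new.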

---

In answer to:

| And when I try to use any flag, I am not sure how I can update the flag in table based on the months I found out.

The Filter/Formula combo in my last post should do this.

| Also, I dont know how I can delete based on flag in In-DB

I don't think that you can.

So I would say your method goes something like this:

- Stream your new data into a temp table

- Use In-DB tools to compare the new data to the old data

- Flag data to be removed because it is being replaced

- Merge the new data into the table

- Flag old data to be removed because it is too old

- Stream 1 record out of the DB and into a Block Until Done tool (like the screenshot above)

- The first output of the Block Until Done can go to anything (a Browse, for example)

- The second output of the Block Until Done goes to your Output tool with the Post SQL

Kane
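The sequence above can be sketched in a few lines, with sqlite3 standing in for the database. Everything named here (`main_table`, its `flag` column, `temp_new`, `oldest_month_to_keep`) is hypothetical. For brevity, every existing row in a month that appears in the new data is treated as replaced; a real comparison would narrow this to changed months. The second transaction plays the role that Block Until Done plays in the workflow: the delete only runs once the load has completed.

```python
import sqlite3


def refresh_with_flags(conn, new_rows, oldest_month_to_keep):
    with conn:
        # Stream the new data into a temp table.
        conn.execute("DROP TABLE IF EXISTS temp.temp_new")
        conn.execute("CREATE TEMP TABLE temp_new (month TEXT, product TEXT, qty INTEGER)")
        conn.executemany("INSERT INTO temp_new VALUES (?, ?, ?)", new_rows)
        # Compare and flag rows that are being replaced.
        conn.execute("UPDATE main_table SET flag = 'D' "
                     "WHERE month IN (SELECT month FROM temp_new)")
        # Merge the new data into the table (left unflagged).
        conn.execute("INSERT INTO main_table (month, product, qty) "
                     "SELECT month, product, qty FROM temp_new")
        # Flag data that is too old.
        conn.execute("UPDATE main_table SET flag = 'D' WHERE month < ?",
                     (oldest_month_to_keep,))
    # Runs only after the load above has committed, like the Post SQL
    # on the Output tool fed by the second Block Until Done output.
    with conn:
        conn.execute("DELETE FROM main_table WHERE flag = 'D'")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE main_table (month TEXT, product TEXT, qty INTEGER, flag TEXT)")
conn.executemany("INSERT INTO main_table (month, product, qty) VALUES (?, ?, ?)",
                 [("2016-01", "A", 1), ("2016-02", "B", 2), ("2016-03", "C", 3)])
refresh_with_flags(conn, [("2016-02", "B", 2), ("2016-03", "C", 30), ("2016-04", "D", 4)],
                   oldest_month_to_keep="2016-02")
print(conn.execute("SELECT month, product, qty FROM main_table ORDER BY month").fetchall())
```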

---

Jeeva

Since you don't have key columns, finding which existing rows in the main table have changed is a little tricky.

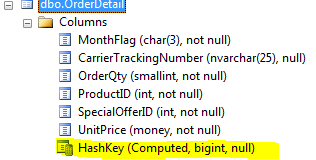

I suggest one change to your table: add a hash key using HASHBYTES (here I used the SHA2_256 algorithm).

I created a sample table with a HashKey computed column like this:

```sql
[HashKey] AS CONVERT(BIGINT, HASHBYTES('SHA2_256',
      [MonthFlag]
    + '|' + [CarrierTrackingNumber]
    + '|' + CONVERT(VARCHAR(255), ISNULL([OrderQty], 0))
    + '|' + CONVERT(VARCHAR(255), ISNULL([ProductID], 0))
    + '|' + CONVERT(VARCHAR(255), ISNULL([SpecialOfferID], 0))
    + '|' + CONVERT(VARCHAR(255), ISNULL([UnitPrice], 0))
)) PERSISTED
```
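The idea behind the computed column is to derive a surrogate key from all of the row's values. The sketch below mirrors that expression in Python to show the steps: NULL numeric columns become 0, the values are joined with `|`, hashed with SHA-256, and truncated to a 64-bit integer. It is illustrative only and does not reproduce SQL Server's exact values. Two assumptions are labelled in the code: that `CONVERT(BIGINT, ...)` keeps the rightmost 8 bytes of the hash, and that SQL Server formats numbers the way Python's `str` does (it may not, for example for money columns).

```python
import hashlib
from typing import Optional


def hash_key(month_flag, tracking_number, order_qty, product_id,
             special_offer_id, unit_price) -> Optional[int]:
    # With '+' concatenation, a NULL string makes the whole expression NULL
    # (SQL Server default CONCAT_NULL_YIELDS_NULL), so the key is NULL too.
    if month_flag is None or tracking_number is None:
        return None
    # ISNULL(x, 0) then CONVERT to VARCHAR; str() formatting is an assumption.
    numeric = ["0" if v is None else str(v)
               for v in (order_qty, product_id, special_offer_id, unit_price)]
    text = "|".join([month_flag, tracking_number, *numeric])
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    # Assumption: CONVERT(BIGINT, <32-byte hash>) keeps the rightmost 8 bytes,
    # read as a signed big-endian integer.
    return int.from_bytes(digest[-8:], "big", signed=True)


print(hash_key("2016-01", "4911-403C-98", 1, 776, 1, 2024.994))
```

Truncating to 64 bits makes a collision between two different rows unlikely but not impossible, which is worth keeping in mind on millions of records.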

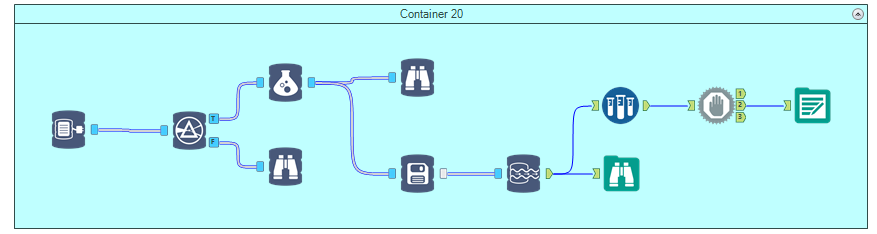

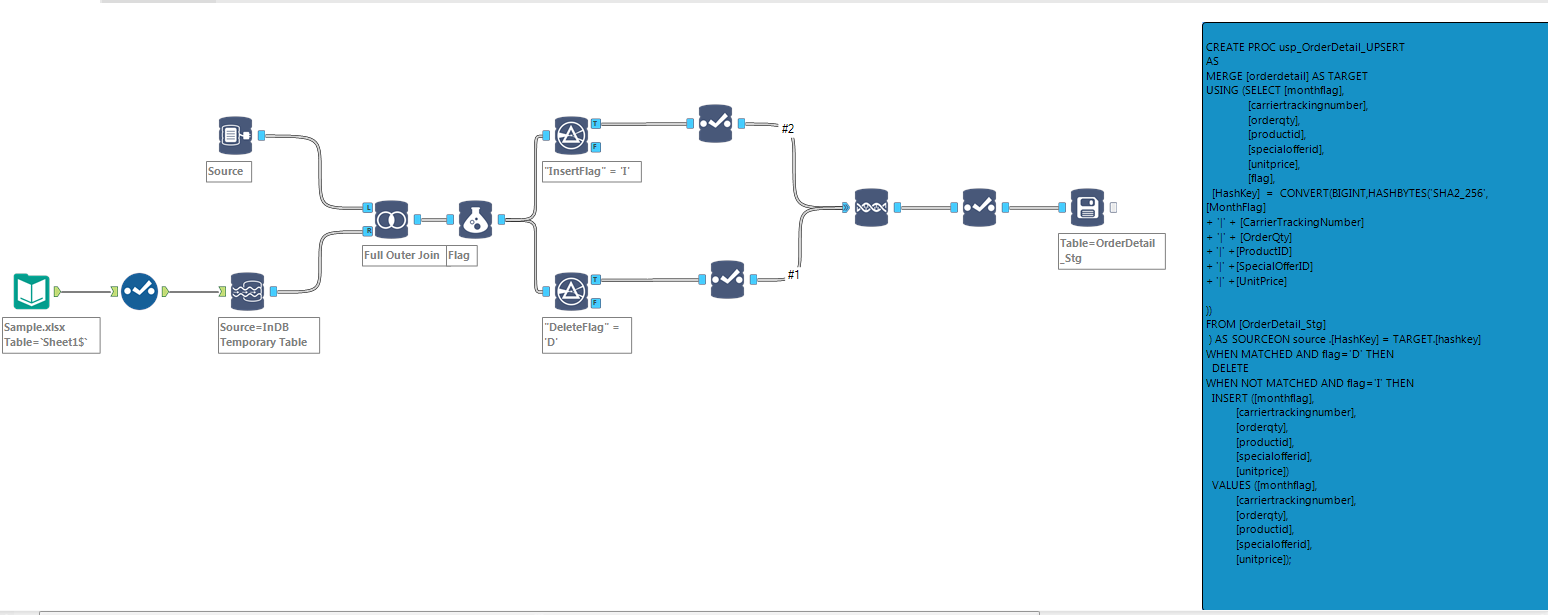

Then I connect In-DB to the main table, bring in the source file with the rolling data, and join the two on all columns (make sure you use a full outer join).

This gives you a comparison of the source and main table data.

Now flag each row as new or existing: rows that do not exist in the main table are flagged "I", and existing rows that need to be deleted are flagged "D".
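A full outer join on all columns amounts to asking which rows appear on only one side. Below is a minimal sketch of that flagging step with rows as plain tuples; the function name and the `(month, product, qty)` row shape are hypothetical. Restricting the main table to the months in the new file is an assumption (the post does not say how older history is handled), and using sets means exact duplicate rows collapse into one.

```python
def flag_changes(main_rows, source_rows):
    """Emulate a full outer join on all columns and flag the unmatched sides."""
    source = set(source_rows)
    window = {row[0] for row in source}  # months covered by the new file
    main = {row for row in main_rows if row[0] in window}
    flagged = [(row, "I") for row in source - main]   # only in source: insert
    flagged += [(row, "D") for row in main - source]  # only in main: delete
    return sorted(flagged)


main_rows = [("2016-01", "A", 1), ("2016-02", "B", 2), ("2016-03", "C", 3)]
source_rows = [("2016-02", "B", 2), ("2016-03", "C", 30), ("2016-04", "D", 4)]
for row, flag in flag_changes(main_rows, source_rows):
    print(flag, row)
```

Rows that match on both sides (February here) get no flag and never reach the staging table.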

Finally, load them into your staging table with Write Data In-DB. I selected Delete Data and Append, since this is a staging table.

One issue: a computed column cannot be used in the staging table when writing In-DB, so I derived the HashKey in the final SQL script instead.

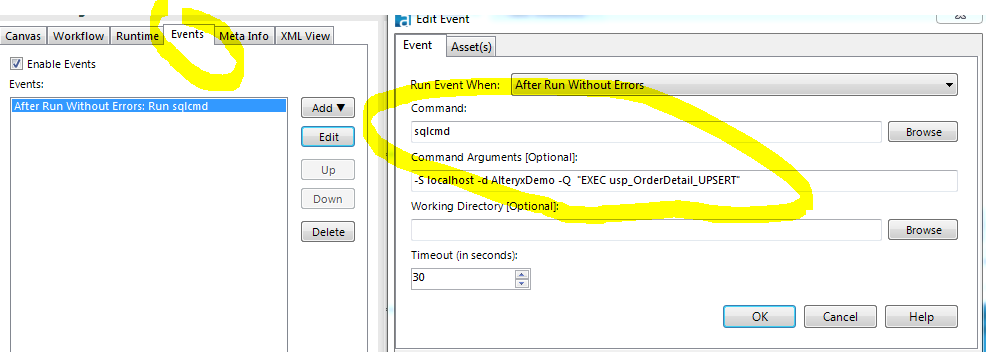

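Finally, I created a stored procedure that deletes ONLY the rows that changed and inserts all new rows (an UPSERT), and executed it with SQLCMD.

The procedure does both the delete and the insert with a single MERGE statement. The hash expression in the USING clause must match the computed column on the main table exactly, otherwise the ON clause never matches: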

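```sql
CREATE PROC usp_OrderDetail_UPSERT
AS
MERGE [OrderDetail] AS TARGET
USING (
    SELECT [MonthFlag],
           [CarrierTrackingNumber],
           [OrderQty],
           [ProductID],
           [SpecialOfferID],
           [UnitPrice],
           [Flag],
           -- Same expression as the HashKey computed column on [OrderDetail];
           -- numeric columns are converted to VARCHAR before concatenation.
           [HashKey] = CONVERT(BIGINT, HASHBYTES('SHA2_256',
                 [MonthFlag]
               + '|' + [CarrierTrackingNumber]
               + '|' + CONVERT(VARCHAR(255), ISNULL([OrderQty], 0))
               + '|' + CONVERT(VARCHAR(255), ISNULL([ProductID], 0))
               + '|' + CONVERT(VARCHAR(255), ISNULL([SpecialOfferID], 0))
               + '|' + CONVERT(VARCHAR(255), ISNULL([UnitPrice], 0))
           ))
    FROM [OrderDetail_Stg]
) AS SOURCE
ON SOURCE.[HashKey] = TARGET.[HashKey]
-- Existing rows flagged 'D' in staging are removed from the main table.
WHEN MATCHED AND SOURCE.[Flag] = 'D' THEN
    DELETE
-- New rows flagged 'I' are inserted.
WHEN NOT MATCHED AND SOURCE.[Flag] = 'I' THEN
    INSERT ([MonthFlag], [CarrierTrackingNumber], [OrderQty],
            [ProductID], [SpecialOfferID], [UnitPrice])
    VALUES (SOURCE.[MonthFlag], SOURCE.[CarrierTrackingNumber], SOURCE.[OrderQty],
            SOURCE.[ProductID], SOURCE.[SpecialOfferID], SOURCE.[UnitPrice]);
```

Here are the steps of the actual workflow and how the method works: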

- Compare the target and source with a full outer join and flag the NEW and OLD rows

- Filter ONLY the rows flagged D and I into the staging table (for Delete and Insert)

- Use MERGE to delete ONLY the rows that changed in the target, then load all new rows. This way you don't need to delete all the data from the main table

- Delete is not offered directly here, I think because the In-DB tools are designed for data retrieval performance

- So call the stored procedure from an Alteryx Event after a successful run
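SQLite has no MERGE with a DELETE branch, so the sketch below reproduces the two MERGE clauses as a DELETE followed by an INSERT inside one transaction, with sqlite3 as a stand-in. Table and column names are hypothetical and cut down to three columns, and the hash keys are precomputed integers because SQLite has no SHA-256 function. One difference from MERGE: MERGE matches against the target as it was before any change, whereas here the INSERT's `NOT EXISTS` sees the table after the delete. The two only diverge if an 'I' row shares a hash with a 'D' row, which the flagging step should not produce.

```python
import sqlite3


def upsert_from_staging(conn):
    with conn:
        # WHEN MATCHED AND flag = 'D' THEN DELETE
        conn.execute(
            "DELETE FROM order_detail WHERE hash_key IN "
            "(SELECT hash_key FROM order_detail_stg WHERE flag = 'D')"
        )
        # WHEN NOT MATCHED AND flag = 'I' THEN INSERT
        conn.execute(
            "INSERT INTO order_detail (hash_key, month_flag, order_qty) "
            "SELECT s.hash_key, s.month_flag, s.order_qty FROM order_detail_stg AS s "
            "WHERE s.flag = 'I' AND NOT EXISTS "
            "(SELECT 1 FROM order_detail AS t WHERE t.hash_key = s.hash_key)"
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE order_detail (hash_key INTEGER, month_flag TEXT, order_qty INTEGER)")
conn.execute("CREATE TABLE order_detail_stg "
             "(hash_key INTEGER, month_flag TEXT, order_qty INTEGER, flag TEXT)")
conn.executemany("INSERT INTO order_detail VALUES (?, ?, ?)",
                 [(1, "2016-02", 2), (2, "2016-03", 3)])
conn.executemany("INSERT INTO order_detail_stg VALUES (?, ?, ?, ?)",
                 [(2, "2016-03", 3, "D"), (3, "2016-03", 30, "I"), (4, "2016-04", 4, "I")])
upsert_from_staging(conn)
print(conn.execute("SELECT hash_key FROM order_detail ORDER BY hash_key").fetchall())
# [(1,), (3,), (4,)]
```

The unchanged row (hash 1) is never touched, which is what keeps this approach cheap on a large main table.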

How your challenges are addressed:

- I don't want to delete the whole data every time from main table: this method does not delete all the data, only the data that has changed

- Don't want to create a new table every time: it reuses the existing staging table, deleting and appending the required data on each run

- Also I don't want to stream the whole data out of In-DB: this does not stream the data out. Everything is done In-DB except the stored procedure, which is executed from an Event. I'm confident MERGE handles large volumes of data well

Finally, most database folks are looking for a SQL tool to run SQL code in Alteryx, so please vote for Chris's idea:

http://community.alteryx.com/t5/Alteryx-Product-Ideas/Have-an-SQL-Tool/idi-p/5392

I also raised an idea for executing an Alteryx workflow with pieces of logic in sequence, so that we can load the data into staging, do some data cleaning using SQL, and then load it into the target. It fits your scenario as well, so please vote for that too if you find it useful.

Hope this helps, and let us know if you still need anything.

Happy Data Blending :)

---

Thanks, everyone, for your time. It's really useful to see different solutions to the same problem. I think I got what I need: I'm going to try what both Kane and Pichaipillai suggested and see which one is faster, as I have to do this for millions of records. Happy day, and thanks for the help.

---

Thanks! This is a simple workaround.

| However the other option is to use a formula tool to change one of the fields to a Delete flag, then use that flag in an output tool Post sql delete statement, similar to the response (#7) from @MarqueeCrew...

| Kane
