Alteryx Designer Desktop Discussions


Guidance in forecast comparison and combination


Edit on original post in blue

Hi,

I am looking for some guidance in comparing forecast errors as part of the Predictive Analytics tools in Alteryx.

Background

I have set up a forecast of the ordered units that will be cancelled compared to the initial orderbook.

For that I have set up:

- ETS and several ARIMA models to forecast cancellations as a percentage of the initial orderbook

- ETS and several ARIMA models to forecast cancellations in units

The idea is to take the most meaningful forecast model

Results

I modified the results for readability in the overview below, which is the output from 2 TS Compare tools. For the forecast in percentage, the ETS model scores best on all error measures. For the forecast in units this is the ARIMA covariate 2 model. I am also looking to optimize my forecast by combining the best 2 models, which should reduce bias and variance. For example, here is a paper that discusses how combining forecasts improves the accuracy: http://repository.upenn.edu/cgi/viewcontent.cgi?article=1005&context=marketing_papers

My questions:

- Which is the most appropriate measure to compare all forecasts in percentages with all forecasts in units? MPE and MAPE?

- Would there be any objection to combining 2 of the 9 forecast models (equal/proportional/regression weighted) after they have all been transformed into either percentages or units?

[Results overview: two side-by-side tables, "Forecast on percentage" and "Forecast in units", each listing "Actual and Forecast Values" followed by "Accuracy Measures". The table values are not available here.]

Thanks for your help!

Regards,

Bart


Accuracy measures

I have been in contact with Alteryx and they confirmed my understanding on the error measures:

- No metric is better than the others,

- ME and RMSE are commonly used in most situations,

- MPE and MAPE support scale independent comparison within certain limits (for example, to compare the forecast in percentage with the forecast in units),

- MASE can be used if MAPE cannot be used due to meaningful zero values

Additionally, Chapter 2, Section 5 of Hyndman and Athanasopoulos's online book Forecasting: Principles and Practice provides a good discussion of the measures used to assess forecast model accuracy (http://otexts.com/fpp/).
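For anyone who wants to check these measures by hand, here is a minimal Python sketch of the usual textbook definitions. It assumes the error is defined as actual minus forecast (the convention in Hyndman and Athanasopoulos). The function names `accuracy_measures` and `mase` are my own and not part of any Alteryx tool, and the numbers in the usage note are made up for illustration.

```python
from math import sqrt

def accuracy_measures(actual, forecast):
    """Return ME, RMSE, MAE, MPE and MAPE for paired actual/forecast values.

    Assumes error = actual - forecast.
    """
    errors = [a - f for a, f in zip(actual, forecast)]
    n = len(errors)
    me = sum(errors) / n
    rmse = sqrt(sum(e * e for e in errors) / n)
    mae = sum(abs(e) for e in errors) / n
    # Percentage errors divide by the actual value, so they are
    # undefined whenever an actual value is zero.
    pct = [100.0 * e / a for e, a in zip(errors, actual)]
    mpe = sum(pct) / n
    mape = sum(abs(p) for p in pct) / n
    return {"ME": me, "RMSE": rmse, "MAE": mae, "MPE": mpe, "MAPE": mape}

def mase(actual, forecast, training):
    """MAE of the forecast, scaled by the in-sample MAE of a one-step
    naive forecast on the (non-seasonal) training series."""
    naive_mae = sum(
        abs(training[i] - training[i - 1]) for i in range(1, len(training))
    ) / (len(training) - 1)
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    return mae / naive_mae
```

With illustrative actuals `[100, 200]` and forecasts `[90, 220]`, the errors are 10 and -20, so ME is -5 while MPE is 0 and MAPE is 10. This shows how the signed measures can cancel out while the absolute ones do not.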

Explaining accuracy to audience

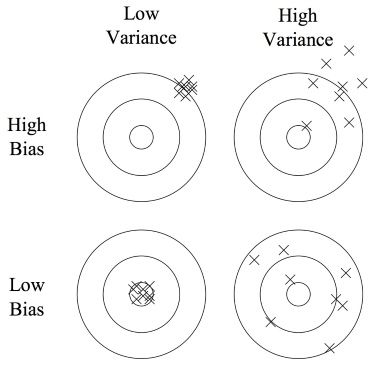

Based on several e-learnings I went through, I will use the terms 'bias' and 'variance' to explain the accuracy of the forecast models to my audience, with bias indicating the average distance of the predictions from the actual values and variance indicating the spread of the predictions. I think this will create a better understanding, as they have no background in statistics.

- Bias = ME

- Variance = MSE - (bias * bias) = (RMSE * RMSE) - (ME * ME)
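As a quick sanity check on the two formulas above, here is a small Python sketch. It assumes the "variance" meant here is the (population) variance of the forecast errors, and it uses made-up error values. The function name `bias_and_variance` is my own.

```python
from math import sqrt
from statistics import pvariance

def bias_and_variance(me, rmse):
    """Derive bias and error variance from the ME and RMSE reported
    for a model: variance = MSE - bias^2."""
    bias = me
    variance = rmse ** 2 - me ** 2
    return bias, variance

# Illustrative forecast errors (actual - forecast), not real results.
errors = [10.0, -20.0]
me = sum(errors) / len(errors)                          # mean error
rmse = sqrt(sum(e * e for e in errors) / len(errors))   # root mean squared error

bias, variance = bias_and_variance(me, rmse)
# The result should match the population variance of the errors,
# up to floating-point rounding.
check = pvariance(errors)
```

For these two errors the bias is -5 and the variance is 250 - 25 = 225, which agrees with the population variance computed directly from the errors.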

Forecast model outcomes

Alteryx gave me feedback to have a closer look at the ETS model:

"One thing I noticed in your results is that you have a single value for all observations in the ETS forecast. Typically this means that there's not enough "signal" in your data, so the tool is returning the average value. Perhaps check the decomposition plots for your ETS model - see if there's excessive noise causing that static result."

When asking for direction, they replied:

"As far as reducing noise goes, have you used the Data Investigation tools yet? From there, you can get a better understanding of your data and the metadata. And try different Model, Seasonal and Trend Types – additive, multiplicative, etc, as well as your Information criteria."

Something I did notice was that my data was not sorted on date, but on another field. After sorting on date, the forecasting model became (more) meaningful and no longer produced a flat line. I did not realize that the forecasting tools do not sort automatically based on the date field provided.
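In Designer itself this is just a Sort tool placed before the time series tools. To illustrate why the order matters, here is a minimal Python sketch with made-up records. The field names `order_date`, `region` and `cancelled_units` are hypothetical and not taken from my workflow.

```python
from datetime import date

# Made-up records that arrive sorted on another field (region), not on date.
records = [
    {"region": "A", "order_date": date(2016, 3, 1), "cancelled_units": 30},
    {"region": "B", "order_date": date(2016, 1, 1), "cancelled_units": 10},
    {"region": "C", "order_date": date(2016, 2, 1), "cancelled_units": 20},
]

# A time series model treats the rows as consecutive periods, so the
# rows have to be in chronological order before the series is built.
ordered = sorted(records, key=lambda r: r["order_date"])
series = [r["cancelled_units"] for r in ordered]
```

Unsorted, the model would see 30, 10, 20 as consecutive periods. After sorting it sees the actual sequence 10, 20, 30.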

Combining forecasts

The link in my post above provides a good direction on combining forecasts.
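To make the idea concrete, here is a minimal Python sketch of two simple weighting schemes: equal weights, and weights proportional to the inverse of each model's error measure (for example RMSE or MAPE). Reading "proportional" as inverse-error weighting is my own assumption, the function names are hypothetical, and the forecast values are made up. Both forecasts must be on the same scale (all in percentages or all in units) before they are combined.

```python
def inverse_error_weights(error_measures):
    """Weights proportional to 1/error, normalised to sum to 1.
    Assumes every error measure is strictly positive."""
    inverse = [1.0 / e for e in error_measures]
    total = sum(inverse)
    return [i / total for i in inverse]

def combine_forecasts(forecasts, weights=None):
    """Weighted average of several forecasts, period by period.
    With no weights given, every model gets an equal weight."""
    if weights is None:
        weights = [1.0 / len(forecasts)] * len(forecasts)
    horizon = len(forecasts[0])
    return [
        sum(w * f[t] for w, f in zip(weights, forecasts))
        for t in range(horizon)
    ]

# Illustrative forecasts from two models over two periods.
model_a = [10.0, 20.0]
model_b = [20.0, 40.0]

equal = combine_forecasts([model_a, model_b])
# Model A has the lower (made-up) error, so it receives the larger weight.
weights = inverse_error_weights([1.0, 3.0])
weighted = combine_forecasts([model_a, model_b], weights)
```

With equal weights the combined forecast is the plain average of the two models. With errors of 1 and 3, the inverse-error weights come out at roughly 0.75 and 0.25, which pulls the combined forecast towards model A.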
