Alteryx Designer Desktop Discussions


sum last n days quantity using window function


My sample data looks like:

| article | Qty | Date |
|---|---|---|
| A | 3 | 2019-10-11 |
| A | 5 | 2019-10-08 |
| A | 1 | 2019-10-05 |
| A | 2 | 2019-09-15 |
| A | 1 | 2019-09-09 |
| A | 1 | 2019-09-01 |
| B | 3 | 2019-10-11 |
| B | 2 | 2019-10-08 |
| B | 3 | 2019-10-05 |
| B | 1 | 2019-09-15 |
| B | 4 | 2019-09-09 |
| C | 1 | 2019-10-11 |
| C | 2 | 2019-10-08 |
| C | 1 | 2019-10-05 |
| C | 1 | 2019-09-15 |
| C | 0 | 2019-09-09 |
| C | 4 | 2019-09-01 |
| C | 1 | 2019-08-28 |

and I want the following output:

| Article | Date | Qty | Sum_Qty_28_days |
|---|---|---|---|
| A | 2019-09-01 | 1 | 1 |
| A | 2019-09-09 | 1 | 2 |
| A | 2019-09-15 | 2 | 4 |
| A | 2019-10-05 | 1 | 4 |
| A | 2019-10-08 | 5 | 8 |
| A | 2019-10-11 | 3 | 11 |
| B | 2019-09-09 | 4 | 4 |
| B | 2019-09-15 | 1 | 5 |
| B | 2019-10-05 | 3 | 8 |
| B | 2019-10-08 | 2 | 6 |
| B | 2019-10-11 | 3 | 9 |
| C | 2019-08-28 | 1 | 1 |
| C | 2019-09-01 | 4 | 5 |
| C | 2019-09-09 | 0 | 5 |
| C | 2019-09-15 | 1 | 6 |
| C | 2019-10-05 | 1 | 2 |
| C | 2019-10-08 | 2 | 4 |
| C | 2019-10-11 | 1 | 5 |

The logic is: the column "Sum_Qty_28_days" should sum the "Qty" values of the same article whose dates fall within the last 28 days. For example, "Sum_Qty_28_days" for article = 'A' and date = '2019-10-08' is 8, because it sums the "Qty" values for the dates within the previous 28 days for article 'A': 2019-09-15, 2019-10-05 and 2019-10-08 (2 + 1 + 5).

Is this possible using a SQL window function? I tried it myself with the following code:

SUM("Qty") OVER (PARTITION BY "article", date_trunc('month',"Date") ORDER BY "Date")

But it is far from what I need: it sums the Qty for dates falling in the same calendar month, whereas I need the sum of Qty over the last 28 days.

Note: I already have a solution using the standard (non In-Database) tools, but it takes a huge amount of execution time on the whole dataset. I am asking for a solution that uses only In-Database tools or a window function.

Thanks.
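
A note on the window-function route asked about above: in engines that accept a `RANGE` frame with a numeric offset, a rolling 28-day sum can be written without `date_trunc`. The sketch below is only an illustration under stated assumptions. It uses SQLite (3.28 or later) through Python's `sqlite3` module as a stand-in database, a hypothetical table `sales` with a column `sale_date`, and it treats "last 28 days" as the current date plus the 27 days before it (the sample data has no row exactly 28 days back, so that boundary is a guess). Nothing in this thread confirms whether the EXASOL driver accepts the same frame syntax.

```python
import sqlite3

# Sample data from the question: (article, qty, date).
rows = [
    ("A", 3, "2019-10-11"), ("A", 5, "2019-10-08"), ("A", 1, "2019-10-05"),
    ("A", 2, "2019-09-15"), ("A", 1, "2019-09-09"), ("A", 1, "2019-09-01"),
    ("B", 3, "2019-10-11"), ("B", 2, "2019-10-08"), ("B", 3, "2019-10-05"),
    ("B", 1, "2019-09-15"), ("B", 4, "2019-09-09"),
    ("C", 1, "2019-10-11"), ("C", 2, "2019-10-08"), ("C", 1, "2019-10-05"),
    ("C", 1, "2019-09-15"), ("C", 0, "2019-09-09"), ("C", 4, "2019-09-01"),
    ("C", 1, "2019-08-28"),
]

conn = sqlite3.connect(":memory:")
# "sales" and "sale_date" are hypothetical names chosen for this sketch.
conn.execute("CREATE TABLE sales (article TEXT, qty INTEGER, sale_date TEXT)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)

# julianday() turns the date into a day number, so the RANGE frame
# "27 PRECEDING" means "up to 27 days before the current row's date".
WINDOW_SQL = """
SELECT article, sale_date, qty,
       SUM(qty) OVER (
           PARTITION BY article
           ORDER BY julianday(sale_date)
           RANGE BETWEEN 27 PRECEDING AND CURRENT ROW
       ) AS sum_qty_28_days
FROM sales
ORDER BY article, sale_date
"""

window_result = conn.execute(WINDOW_SQL).fetchall()
for row in window_result:
    print(row)
```

The key difference from the attempt above is that the partition is by article only, and the 28-day limit lives in the frame rather than in a month bucket.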


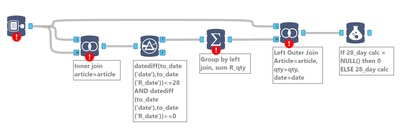

Hi @PKN, I don't have access to a database at the moment, but I've made a workflow schema which should do what you want.

Hope that helps,

Ollie


Hi @OllieClarke ,

Thanks for the solution. Unfortunately, the Filter In-DB tool gives the following error when I run the workflow:

"Error: Filter In-DB (3): Error SQLPrepare: [EXASOL][EXASolution driver]identifier Tool14_e791.article is ambiguous. (Session: 1652459517215047596)"

Regards,

PKN


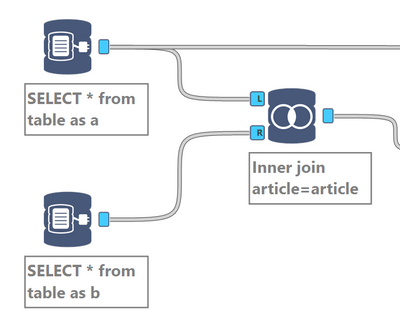

@PKN hmmm, what if you have 2 connections (both connecting to the same table) and alias them?


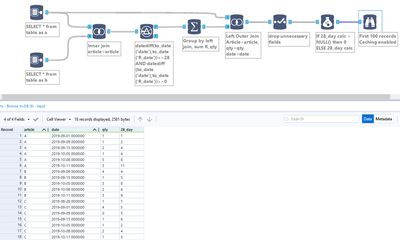

@PKN I found a database. Looks like this approach works.
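
For readers without the screenshots, the approach discussed in this thread (two aliased connections to the same table, joined on article, then filtered on the date gap and summarized) probably corresponds to SQL along the lines below. This is a rough sketch, not the actual query generated by the workflow: the table name `sales`, the column `sale_date`, the aliases `cur` and `prev`, and the use of SQLite through Python's `sqlite3` module are all assumptions made so the example can run anywhere. It also assumes one row per article and date, and treats the window as the current date plus the 27 days before it.

```python
import sqlite3

# Sample data from the question: (article, qty, date).
rows = [
    ("A", 3, "2019-10-11"), ("A", 5, "2019-10-08"), ("A", 1, "2019-10-05"),
    ("A", 2, "2019-09-15"), ("A", 1, "2019-09-09"), ("A", 1, "2019-09-01"),
    ("B", 3, "2019-10-11"), ("B", 2, "2019-10-08"), ("B", 3, "2019-10-05"),
    ("B", 1, "2019-09-15"), ("B", 4, "2019-09-09"),
    ("C", 1, "2019-10-11"), ("C", 2, "2019-10-08"), ("C", 1, "2019-10-05"),
    ("C", 1, "2019-09-15"), ("C", 0, "2019-09-09"), ("C", 4, "2019-09-01"),
    ("C", 1, "2019-08-28"),
]

conn = sqlite3.connect(":memory:")
# "sales" and "sale_date" are hypothetical names chosen for this sketch.
conn.execute("CREATE TABLE sales (article TEXT, qty INTEGER, sale_date TEXT)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)

# Join the table to itself on article (two aliases avoid the
# "identifier is ambiguous" problem), keep only pairs where the
# earlier row is 0 to 27 days before the current row, then sum.
SELF_JOIN_SQL = """
SELECT cur.article, cur.sale_date, cur.qty,
       SUM(prev.qty) AS sum_qty_28_days
FROM sales AS cur
JOIN sales AS prev
  ON prev.article = cur.article
WHERE julianday(cur.sale_date) - julianday(prev.sale_date) BETWEEN 0 AND 27
GROUP BY cur.article, cur.sale_date, cur.qty
ORDER BY cur.article, cur.sale_date
"""

self_join_result = conn.execute(SELF_JOIN_SQL).fetchall()
for row in self_join_result:
    print(row)
```

On the sample data this reproduces the Sum_Qty_28_days column from the question, for example 8 for article A on 2019-10-08.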


Yes, this works. Thanks a lot @OllieClarke

Just one question. I have millions of records in the original table, and when I do the inner join on just article, the join produces on the order of a billion records. Where are these records processed and stored during and after the join: on the Alteryx server, in the memory of the database I am reading from and writing to, or somewhere else? Right now the workflow runs fine. I am just worried that, if the number of records grows in future, memory issues could cause errors during workflow execution.

Thanks


Glad to hear it @PKN. If you're only using in-db tools, then everything will happen in your database. The in-db tools are really a way to construct SQL queries using the familiar Alteryx interface. Unless you have a browse tool or an output of some kind, they won't bring any data into your memory (I think I'm right in saying that they won't even execute the created query without a browse or output). There's more info on the in-db tools here. In terms of scaling, you should be safe from memory limitations unless you're streaming the data out into memory. The limitations, if they do exist, would be on the database side.

Hope that helps,

Ollie


Thanks @OllieClarke. Thanks a lot for all the help.
