Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.


XML Parse - performance strategies


Hi -

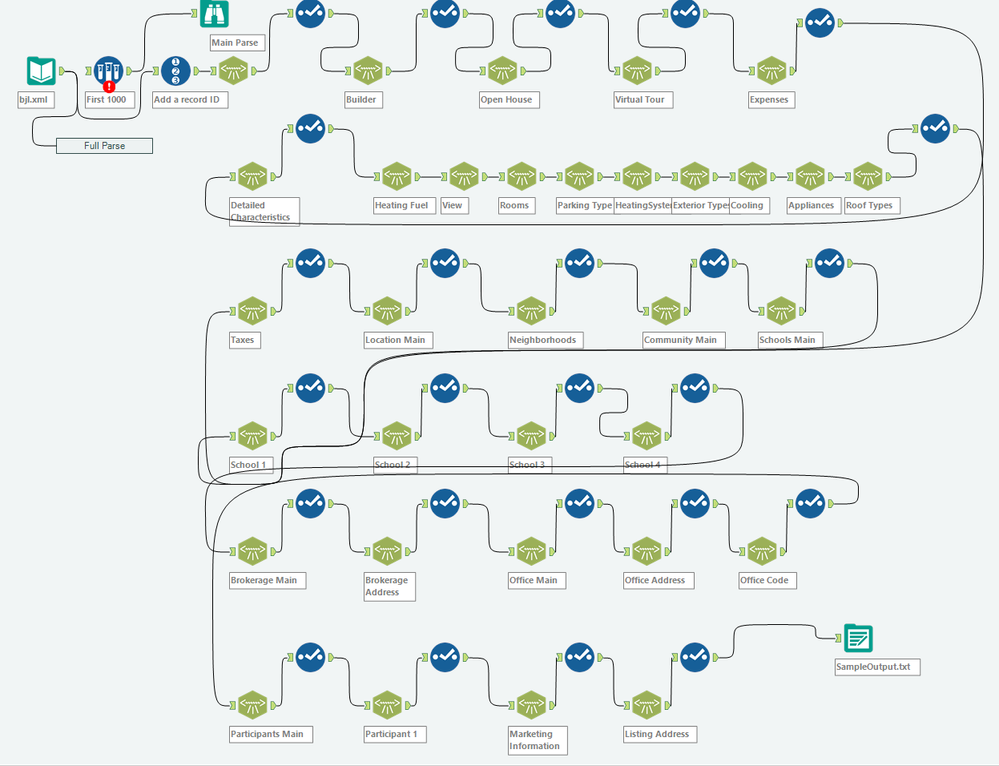

I have a large (1.7 million records, 14 GB) XML file. It has a lot of nested node structures. I've set up a parsing routine that does most of the work, but it takes ~3 hours to run on a pretty decent machine. I'll be running this daily, and I'm looking for strategies to improve performance. I was thinking of trying some or all of the following, but wanted to see if anyone has some good advice first:

1. split the file and run several smaller but identical jobs

2. since I've added a record ID, after the main parse split the resulting major OuterXML sections into their own jobs

3. somehow inspect the modified_time element within the XML and then (on day 2+) parse only the records that have been modified

Before I do this, I wonder if I'm missing a much more basic / fundamental approach change.

Also for #3, any tips on how to inspect and use an element like that would be appreciated.

Thanks!

Pete




As an update: while the original method I was using, the parsing you started, and a couple of other approaches all work, I think that it's simply easier to handle complex XML (lots of nested nodes, lots of non-required elements, lots of records, big file size) in other tools and then use the command line tool. I haven't set up anything final yet, but that's what I'm looking at.
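For readers weighing the "other tools" route, here is a minimal sketch of a streaming parse in Python. It is not what was actually set up in this thread. It assumes, hypothetically, that each record is a `<record>` element sitting directly under the root and that only the simple (leaf) fields are wanted on the first pass; the tag names in the sample are placeholders.

```python
import io
import xml.etree.ElementTree as ET


def iter_records(source, record_tag="record"):
    """Yield one flat dict per record element without loading the whole file."""
    context = ET.iterparse(source, events=("start", "end"))
    _, root = next(context)  # the first event is the start of the root element
    for event, elem in context:
        if event == "end" and elem.tag == record_tag:
            # Keep only leaf children; nested sections need their own handling.
            yield {child.tag: child.text for child in elem if len(child) == 0}
            root.clear()  # release records that have already been processed


# Tiny in-memory stand-in for the real file (hypothetical structure).
sample = io.BytesIO(
    b"<records>"
    b"<record><id>1</id><modified_time>2024-01-01T00:00:00</modified_time>"
    b"<photos><photos1>a.jpg</photos1></photos></record>"
    b"<record><id>2</id><modified_time>2024-01-02T00:00:00</modified_time></record>"
    b"</records>"
)
rows = list(iter_records(sample))
```

Because each record is discarded once it has been yielded, memory use should stay roughly flat regardless of file size. The rows could then be written to a delimited file for Designer to read.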


Just throwing this out there, but have you tried the Parse XML app up on the Gallery? One of our Engineers built it out (as both a standalone Analytic app, and a downloadable macro) and he's actually been looking for some complex XML files to break it :)

We have used it internally as a good way to start doing some data discovery on workflows and understanding the overall file XML structure, so I would be curious to see how others rate its performance.

Senior Solutions Architect

Alteryx, Inc.


Hi Sophia,

Thank you for responding!

I tried the app and it doesn't seem to work on the file. Do you think Alex would be willing to try to run it and provide some insight? If so, how would I get a sample XML to him?

Thanks!

Pete


@PeterGoldey I'll PM you about the app.

I know the file itself is huge (and I'm sure the parsing makes it larger) but were you able to get the macro version of Alex's app working at all?


sure about tree analytics (new topic for me) and whether that tool would be useful to my project.


As an FYI, I haven't found anything easier / quicker. The XML is actually more complex than indicated in the original post. A couple strategies that I am using are:

Transposing child nodes: Some of the parent nodes (photos, for one) contain n child nodes (photos1, photos2, etc.), each of which contains the info for a single item, in this case an image. By transposing the parent node's parse results and extracting the number (1, 2, 3, etc.) from the Name field (the child XML node name), I now have a record for each image for each original parent node record. I then filter out NULL values to reduce the record count and XML parse this vertical table in a single step.

Without the transpose step, there are as many as 140 child nodes each of which would need its own XML Parse tool.
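The same transpose idea can be sketched outside Alteryx. The snippet below is only an illustration in Python, not the workflow itself: `transpose_children`, the `record_id` value, and the `<url>` element are hypothetical names, and only the photos / photos1 / photos2 naming comes from the post above.

```python
import re
import xml.etree.ElementTree as ET


def transpose_children(record_id, parent):
    """Turn numbered children (<photos1>, <photos2>, ...) into one row each."""
    rows = []
    for child in parent:
        match = re.fullmatch(r"(.*?)(\d+)", child.tag)
        if match is None:
            continue  # not a numbered child node
        has_content = len(child) > 0 or (child.text or "").strip()
        if not has_content:
            continue  # same idea as filtering out the NULL values
        rows.append({
            "record_id": record_id,
            "position": int(match.group(2)),  # the 1, 2, 3... from the node name
            "xml": ET.tostring(child, encoding="unicode").strip(),
        })
    return rows


parent = ET.fromstring(
    "<photos>"
    "<photos1><url>a.jpg</url></photos1>"
    "<photos2/>"
    "<photos3><url>c.jpg</url></photos3>"
    "</photos>"
)
photo_rows = transpose_children(101, parent)
```

Each surviving row keeps the child's XML in a single column, so one parsing step can handle every child regardless of how many numbered nodes a parent has.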

Inspecting the main node and filtering

Other than the first full run, each successive run will only need to:

a) identify and parse new records

b) identify and parse change records

c) identify "missing" records so I can delete them from the master file

a) new and change records: filter for modification time stamp in the main node > [last max date]

b) delete records: On a file this size, it's much faster to first do an "inventory" parse to pull out the unique keys and modification time stamps for all the records. I can then easily identify which of the step a) records are adds vs. changes, and also which IDs no longer exist in the file so I can delete them from the master.
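As a rough illustration of the add / change / delete logic described above, here is a small Python sketch. It assumes the inventory parse has already produced a mapping of record ID to modification time stamp; `classify`, `master_ids`, and `last_max` are hypothetical names, and the IDs and dates are made up.

```python
from datetime import datetime


def classify(inventory, master_ids, last_max):
    """Split today's inventory into adds, changes, and deletes.

    inventory  -- {record ID: modification time stamp} from the inventory parse
    master_ids -- set of record IDs currently in the master file
    last_max   -- latest modification time stamp seen in the previous run
    """
    # Step a): anything modified since the last run is either new or changed.
    touched = {key for key, stamp in inventory.items() if stamp > last_max}
    adds = sorted(touched - master_ids)
    changes = sorted(touched & master_ids)
    # Step b): IDs in the master that no longer appear in the file.
    deletes = sorted(master_ids - set(inventory))
    return adds, changes, deletes


inventory = {
    "A": datetime.fromisoformat("2024-01-05T00:00:00"),
    "B": datetime.fromisoformat("2024-01-01T00:00:00"),
    "D": datetime.fromisoformat("2024-01-06T00:00:00"),
}
master_ids = {"A", "B", "C"}
last_max = datetime.fromisoformat("2024-01-03T00:00:00")
adds, changes, deletes = classify(inventory, master_ids, last_max)
```

Only the IDs in `adds` and `changes` would then need the full, expensive parse, which is where the daily time saving would come from.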
