Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Sampling tool


Hello!

I'm looking for a little guidance on the best way to set up a workflow that selects a "weighted sample": the largest proportion of samples from a high-risk bucket, a moderate proportion from a medium-risk bucket, and the smallest proportion from a low-risk bucket.

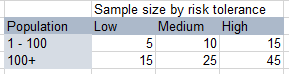

The sample size will vary based on (a) population size and (b) the required confidence level. The blue table shows what my sample size might look like under different population and confidence scenarios.

Each transaction will be assigned to a "risk bucket" (green table), and that bucket should drive the sample weighting. The weights would be as follows:

- 75% of the sample from high-risk transactions

- 20% of the sample from medium-risk transactions

- 5% of the sample from low-risk transactions
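Whatever tool ends up doing the picking, each total sample size first has to be split into per-bucket counts using these weights. Below is a minimal Python sketch (not Alteryx) of that arithmetic, assuming whole-percent weights and largest-remainder rounding so the counts always add back up to the total. The function name `allocate` and the sample size of 25 are hypothetical, not values from the blue table.

```python
# Weights from the post, in whole percent (must total 100).
WEIGHTS_PCT = {"High": 75, "Medium": 20, "Low": 5}


def allocate(sample_size: int, weights_pct: dict[str, int]) -> dict[str, int]:
    """Split a total sample size into per-bucket counts (hypothetical helper)."""
    if sum(weights_pct.values()) != 100:
        raise ValueError("weights must total 100%")
    # Integer arithmetic avoids floating-point rounding surprises.
    scaled = {k: sample_size * p for k, p in weights_pct.items()}
    counts = {k: v // 100 for k, v in scaled.items()}
    leftover = sample_size - sum(counts.values())
    # Hand leftover units to the buckets with the largest remainders;
    # ties keep the dict order (High before Medium before Low).
    order = sorted(scaled, key=lambda k: scaled[k] % 100, reverse=True)
    for k in order[:leftover]:
        counts[k] += 1
    return counts


print(allocate(25, WEIGHTS_PCT))  # {'High': 19, 'Medium': 5, 'Low': 1}
```

Largest-remainder rounding is just one reasonable choice; always rounding the high-risk bucket up would be another.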

Any guidance on how to set this up? The Oversample tool seems to have a different purpose, and I don't see where you designate the sample size. Would I need to distill all of the above into a complex web of IF statements?

Thanks a lot!!

Labels: Tips and Tricks


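The easiest way to do this is with two Filter tools. The first Filter checks whether a record is in the high-risk bucket; its True output feeds a Random % Sample tool set to 75%. Its False output feeds the second Filter, which checks whether a record is in the medium-risk bucket; that Filter's True output feeds a Random % Sample tool set to 20%, and its False output feeds a Random % Sample tool set to 5%. The three Random % Sample tools then go into a Union tool to recombine the records.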
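The Filter, Random % Sample and Union layout can be mimicked in a few lines of Python to see what it produces. This is a sketch, not the Alteryx tools themselves: `random.Random.sample` stands in for Random % Sample, rounding down is an assumption (the real tool's rounding may differ), and the `risk` field name is hypothetical. Note that each percentage applies to the size of its own bucket, so 75% here means 75% of the high-risk records, not 75% of a fixed sample size.

```python
import random


def percent_sample(rows: list, pct: int, rng: random.Random) -> list:
    """Randomly keep pct% of rows, rounded down (stand-in for Random % Sample)."""
    return rng.sample(rows, len(rows) * pct // 100)


def filter_sample_union(records: list[dict], rng: random.Random) -> list[dict]:
    # Filter 1: high risk (True) vs. everything else (False).
    high = [r for r in records if r["risk"] == "High"]
    rest = [r for r in records if r["risk"] != "High"]
    # Filter 2 on the False branch: medium risk (True) vs. the rest (False).
    medium = [r for r in rest if r["risk"] == "Medium"]
    low = [r for r in rest if r["risk"] != "Medium"]
    # Union the three sampled streams back together.
    return (percent_sample(high, 75, rng)
            + percent_sample(medium, 20, rng)
            + percent_sample(low, 5, rng))


records = ([{"id": i, "risk": "High"} for i in range(40)]
           + [{"id": i, "risk": "Medium"} for i in range(40, 90)]
           + [{"id": i, "risk": "Low"} for i in range(90, 190)])
sample = filter_sample_union(records, random.Random(42))
print(len(sample))  # 30 + 10 + 5 = 45
```

With a different mix of 40/50/100 records the three counts change as well, which is why this layout on its own does not hit a target sample size.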

This is the most straightforward way. Personally, I'd use a batch macro with two control parameters, but that's not as straightforward to set up.


Thanks for this, interesting. One issue I can see: if there aren't enough transactions in the high-risk bucket to satisfy the 75% target, I would need to pass the remaining sample allotment to the next lower bucket.
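The spill-over rule described here can be written as a small loop over the buckets from highest to lowest risk. A Python sketch, assuming unused quota only ever flows downward (High to Medium to Low) and that the per-bucket targets and available record counts are already known; all names and numbers are hypothetical:

```python
def allocate_with_spillover(targets: dict[str, int], available: dict[str, int],
                            order=("High", "Medium", "Low")):
    """Cap each bucket at what it has; unmet quota moves to the next lower bucket."""
    taken = {}
    carry = 0
    for bucket in order:
        want = targets[bucket] + carry
        taken[bucket] = min(want, available[bucket])
        carry = want - taken[bucket]
    # carry > 0 means even the lowest bucket could not cover the shortfall.
    return taken, carry


targets = {"High": 19, "Medium": 5, "Low": 1}
available = {"High": 10, "Medium": 50, "Low": 500}
print(allocate_with_spillover(targets, available))
# ({'High': 10, 'Medium': 14, 'Low': 1}, 0)
```

If the Low bucket also runs short, the remaining shortfall is returned as `carry` rather than pushed back up to a higher bucket.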

But I guess the tricky part is the varying sample size. Can you pass a target sample size into the Sample tool? Or could it be done with the batch macro you mentioned?


Hi @rshack2005

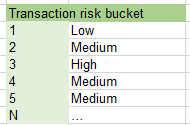

The Sample tool is good if you have fixed cutoffs, but for a complex situation like yours you need to roll your own sampler. Here's a generic method for randomly selecting X rows from a list:

1. Add a random number to each row.

2. Sort by the random number, which randomizes the list.

3. Select the first X rows.
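The three steps above amount to ordering the rows by a random key. A Python sketch of the same idea outside Alteryx (the function name and `seed` parameter are illustrative assumptions). In plain Python, `random.sample(rows, x)` gives an equivalent uniform pick in one call, as long as `x` does not exceed the number of rows.

```python
import random


def random_first_x(rows: list, x: int, seed=None) -> list:
    """Pick x rows at random: tag, sort, take the top x."""
    rng = random.Random(seed)
    # Step 1: add a random number to each row.
    tagged = [(rng.random(), row) for row in rows]
    # Step 2: sort by the random number (key= avoids comparing the rows themselves).
    tagged.sort(key=lambda pair: pair[0])
    # Step 3: keep the first x rows (all of them if x >= len(rows)).
    return [row for _, row in tagged[:x]]


picked = random_first_x(list(range(1, 101)), 10, seed=7)
print(len(picked))  # 10
```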

Your case is a bit more complex, since X varies by both population size and risk level.

The salmon-coloured "Create sample data..." container does what it says on the label; replace it with your own data.

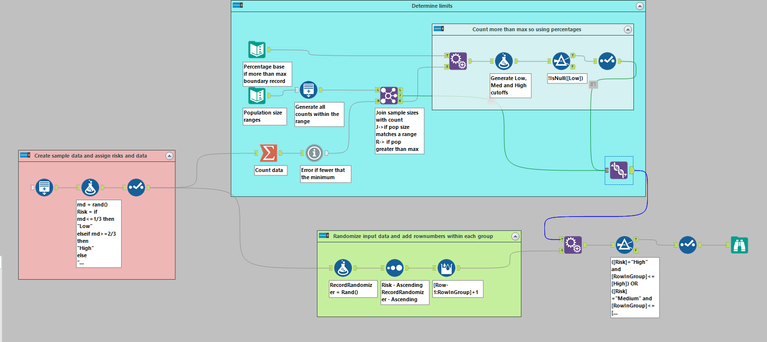

The blue "Determine limits" container is where you calculate the sample size for each risk level based on the population size.

The two Text Input tools hold (1) your population ranges and sample amounts (the bottom one) and (2) the percentage-based sample amounts to use if you have more data than the maximum of the ranges. The range input is expanded to give one record for each value in the range. Your input data is counted, and the Message tool throws an error if you have fewer records than the lower bound of the smallest range. The count is joined to the expanded range data. There are only two possible outcomes for this join:

1. The count matches a single range record, which comes out of the J output, and the R output is empty. In this case, just pass the sample sizes out of the container.

2. There is no match, in which case the R output contains the count and the J output is empty. The R output branch uses the percentages to calculate sample sizes based on the population count.

The final Union tool passes on the record from whichever of outcomes 1 or 2 occurred.
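The "Determine limits" logic boils down to a range lookup with a percentage fallback. Below is a Python sketch of the same decision, not the workflow itself: a direct range check replaces the expand-and-join step (same result for contiguous, non-overlapping ranges), and every number in it, including the ranges, per-range sample sizes, fallback rates and the round-up rule, is a hypothetical placeholder rather than a value from the posted tables.

```python
from dataclasses import dataclass


@dataclass
class SizeRange:
    low: int                 # smallest population in this range (inclusive)
    high: int                # largest population in this range (inclusive)
    sizes: dict[str, int]    # sample size per risk level


# Hypothetical values for illustration; use the figures from your own table.
RANGES = [
    SizeRange(50, 249, {"High": 15, "Medium": 4, "Low": 1}),
    SizeRange(250, 999, {"High": 30, "Medium": 8, "Low": 2}),
]
# Hypothetical fallback rates in basis points (300 = 3%) for populations above every range.
OVERFLOW_BP = {"High": 300, "Medium": 80, "Low": 20}


def sample_limits(population: int) -> dict[str, int]:
    smallest_low = min(r.low for r in RANGES)
    if population < smallest_low:
        # Equivalent of the Message tool's error.
        raise ValueError(f"population {population} is below {smallest_low}")
    for r in RANGES:
        if r.low <= population <= r.high:
            # Outcome 1: a single range matches (Join's J output).
            return dict(r.sizes)
    # Outcome 2: no match (Join's R output), so apply the rates, rounding up.
    return {k: -(-(population * bp) // 10_000) for k, bp in OVERFLOW_BP.items()}


print(sample_limits(120))   # {'High': 15, 'Medium': 4, 'Low': 1}
print(sample_limits(2000))  # {'High': 60, 'Medium': 16, 'Low': 4}
```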

The green container applies the first two steps of the randomizing algorithm above. The single record from the "Determine limits" container is appended to the randomized data, and then the Filter tool can apply Step 3 with this expression:

([Risk]="High" and [RowInGroup]<=[High]) OR

([Risk]="Medium" and [RowInGroup]<=[Medium]) OR

([Risk]="Low" and [RowInGroup]<=[Low])

This picks the first X records from the randomized sample data for each of the High, Medium, and Low groups.
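Taken together, the random sort and this Filter amount to a stratified "first X per group" pick. A Python sketch, assuming `RowInGroup` is a 1-based running count within each Risk value after the random sort (the post doesn't show which tool produces it) and that the per-risk limits come from something like the "Determine limits" step; the function name, field names and numbers are hypothetical:

```python
import random
from collections import defaultdict


def stratified_first_x(records: list[dict], limits: dict[str, int], seed=None) -> list[dict]:
    rng = random.Random(seed)
    # Steps 1-2: order the records by a random key.
    shuffled = sorted(records, key=lambda _: rng.random())
    row_in_group = defaultdict(int)  # running 1-based count per risk level
    picked = []
    for rec in shuffled:
        risk = rec["risk"]
        row_in_group[risk] += 1
        # Step 3, the Filter test: RowInGroup <= the limit for this record's risk.
        if row_in_group[risk] <= limits.get(risk, 0):
            picked.append(rec)
    return picked


risks = ["High"] * 12 + ["Medium"] * 30 + ["Low"] * 200
records = [{"id": i, "risk": r} for i, r in enumerate(risks)]
picked = stratified_first_x(records, {"High": 19, "Medium": 5, "Low": 1}, seed=3)
```

Here `picked` holds all 12 High records, 5 Medium and 1 Low. When a bucket has fewer records than its limit it simply returns all of them; the shortfall is not passed on to the next bucket.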

To test the various ranges, as well as the over- and under-range conditions, change the Generate Rows tool's Condition expression in the "Create sample data..." container from RowCount <=250 to a different value.

Dan
