Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Input Tool Truncating Fields to <256 Characters for Database Connection


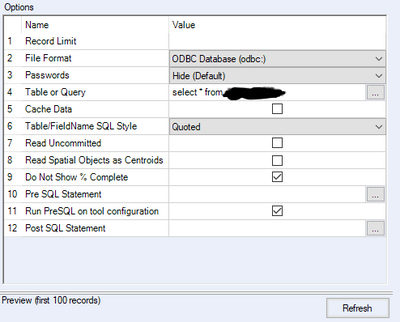

I am trying to use the INPUT tool to connect to a SQL database, but it has been truncating text fields to fewer than 256 characters. I have tried:

1.) Using a SELECT tool to change the data type to 'WString' and increase the size to 10,000. This did not work.

2.) Looking in the INPUT tool for an option to increase the character length/limit when reading in the data, but I do not see one.

3.) I am doing this with the regular INPUT tool, but I am also wondering whether anyone knows if this can be accomplished with the 'In-Database' INPUT tool as well.

Thank you.


Hi @vujennyfer

I have worked with large text fields in SQL without problems, but I always read them with the OLE DB driver.

How long is your text field?

Where are you verifying the length of the loaded field?


Hi @randreag , thanks for the reply.

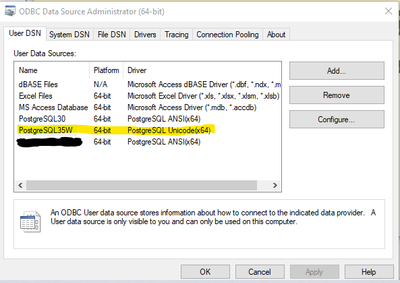

1.) I'm using the PostgreSQL ODBC Unicode x64 driver. Do you think this is the issue? I tried the ANSI x64 driver in the past and ran into the error "Input Data (54) Error SQLExecute: ERROR: character with byte sequence 0xe2 0x80 0x8b in encoding "UTF8" has no equivalent in encoding "WIN1252";¶Error while executing the query".

2.) The field is not very long. It is a description field with a maximum length of 1,996 characters.

3.) To verify the field length, I look at the field in SQL and see the full description, but when I use the INPUT and BROWSE tools in Alteryx, the field appears truncated in the BROWSE view.
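The ANSI-driver error can be reproduced outside Alteryx. The bytes 0xe2 0x80 0x8b are the UTF-8 encoding of U+200B (ZERO WIDTH SPACE), an invisible character that the Windows-1252 code page has no slot for, so converting the description text from UTF8 to WIN1252 fails. A minimal sketch using only Python's built-in codecs (it does not touch PostgreSQL or the ODBC driver; the helper names are illustrative):

```python
# Bytes quoted in the ANSI-driver error message.
ERROR_BYTES = b"\xe2\x80\x8b"


def decode_error_bytes(raw: bytes) -> str:
    """Decode the bytes from the error message as UTF-8 (the server encoding)."""
    return raw.decode("utf-8")


def fits_win1252(text: str) -> bool:
    """Return True if every character in text exists in Windows-1252 (Python codec 'cp1252')."""
    try:
        text.encode("cp1252")
    except UnicodeEncodeError:
        return False
    return True


char = decode_error_bytes(ERROR_BYTES)
print(f"U+{ord(char):04X}")   # U+200B (ZERO WIDTH SPACE)
print(fits_win1252(char))      # False: no WIN1252 equivalent
print(fits_win1252("café"))    # True: é is part of Windows-1252
```

This suggests the Unicode driver is the better fit for this data, since it avoids the conversion to WIN1252 altogether.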


In the Browse tool, you may see a red arrow with a note that the value was truncated for display purposes only, but if you export the data or double-click into the cell, you should see the full text. What matters is whether the workflow raises any warnings indicating that data is being truncated.
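One way to separate display truncation from real truncation is to export the field (for example to a .csv file) and check the value lengths. If the longest exported value is well below the 1,996 characters seen in SQL, and many values stop at exactly the same length, that points to a cap in the driver rather than in the Browse view. A minimal Python sketch; the column name `description` and the suspect lengths are assumptions taken from this thread:

```python
import csv
import io
from collections import Counter
from typing import Iterable, TextIO

# Lengths at which text is reported to stop in this thread
# (254 in the Databricks defect article, 255 in the Databricks post).
# These are assumptions to adjust, not documented Alteryx limits.
SUSPECT_CAPS = (254, 255)


def length_report(values: Iterable[str]) -> dict:
    """Summarise value lengths and count values that end exactly at a suspected cap."""
    lengths = [len(value) for value in values]
    counts = Counter(lengths)
    return {
        "rows": len(lengths),
        "max_length": max(lengths, default=0),
        "at_suspect_cap": sum(counts[cap] for cap in SUSPECT_CAPS),
    }


def report_from_csv(handle: TextIO, column: str) -> dict:
    """Run length_report over one column of a CSV file exported from the workflow."""
    return length_report(row[column] for row in csv.DictReader(handle))


# In-memory stand-in for an exported file; for a real export use
# open(path, newline="", encoding="utf-8") instead.
sample = io.StringIO("id,description\n1," + "x" * 255 + "\n2,short text\n")
print(report_from_csv(sample, "description"))
# {'rows': 2, 'max_length': 255, 'at_suspect_cap': 1}
```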


I am having the same issue, that's why I ended up here.

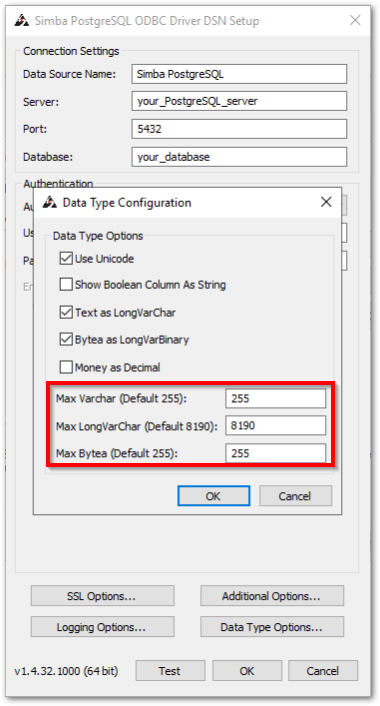

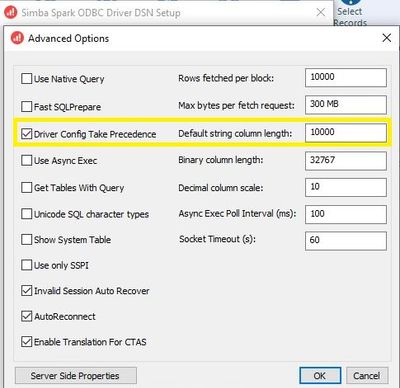

I think this is due to the configuration of the PostgreSQL ODBC driver.

However, changing these settings did not work for me 😥

But perhaps it can help you further.

Br (ツ)

Thomas
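The psqlODBC driver exposes its text-size limits as DSN options (Max Varchar, Max LongVarChar and Text as LongVarChar in its setup dialog), which are presumably the settings meant here. The same options can also be passed in a DSN-less connection string. A minimal sketch that only builds such a string; the keyword names `MaxVarcharSize`, `MaxLongVarcharSize` and `TextAsLongVarchar` follow psqlODBC's configuration options and should be checked against the installed driver version, while the driver name, server, database and size values are placeholders:

```python
# Sketch: build a DSN-less psqlODBC connection string with larger text limits.
# Assumptions: keyword names follow psqlODBC's configuration options; the driver
# name, server, database and the 10000 sizes are placeholders to adapt.


def psqlodbc_connection_string(
    server: str,
    database: str,
    *,
    driver: str = "PostgreSQL Unicode(x64)",
    max_varchar: int = 10000,
    max_long_varchar: int = 10000,
    text_as_long_varchar: bool = True,
) -> str:
    """Return an ODBC connection string; credentials are intentionally left out."""
    options = {
        "Driver": "{" + driver + "}",
        "Server": server,
        "Database": database,
        "MaxVarcharSize": str(max_varchar),
        "MaxLongVarcharSize": str(max_long_varchar),
        "TextAsLongVarchar": "1" if text_as_long_varchar else "0",
    }
    return ";".join(f"{key}={value}" for key, value in options.items()) + ";"


print(psqlodbc_connection_string("db.example.com", "analytics"))
```

The resulting string can be tried from any ODBC client to see whether the reported column size changes before wiring it into a workflow.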


Hi All,

I have the same issue - my columns coming from Azure Databricks are being truncated to 255 characters. Changing this setting from 255 to 10000 did not resolve the issue.

The original values in Databricks are over 2,000 characters long, but Alteryx defaults to 255. When I read from a .csv file, I can read values longer than 255 characters; only the ODBC connection truncates them.

This article, Defect GCSE-687: Data Returned by Databricks Query on Long Text Fields Truncates to 254 Characters (..., suggests driver 2.6.23. I have a newer driver version, 2.6.29.1049 (64-bit), but the issue persists.

Any ideas?

Thanks
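For the Databricks case, the Simba Spark ODBC driver used for Databricks has a Default String Column Length option (key `DefaultStringColumnLength`) whose default of 255 characters matches the cut-off described above. The post does not name the setting that was changed, so this may or may not be the same one. A minimal Python sketch that adds or overrides the key in a connection string; the DSN name is a placeholder, and whether a connection-string value takes precedence over the DSN dialog should be confirmed in the driver's installation guide:

```python
# Sketch: set DefaultStringColumnLength in an ODBC connection string.
# Assumptions: the DSN name is a placeholder, and the naive split on ";"
# does not handle braced values that themselves contain ";".


def with_string_column_length(conn_str: str, length: int = 10000) -> str:
    """Return conn_str with DefaultStringColumnLength set to length (added or replaced)."""
    if length <= 0:
        raise ValueError("length must be positive")
    parts = [part for part in conn_str.split(";") if part.strip()]
    # Drop any existing DefaultStringColumnLength entry (keys compared case-insensitively).
    kept = [
        part for part in parts
        if part.split("=", 1)[0].strip().lower() != "defaultstringcolumnlength"
    ]
    kept.append(f"DefaultStringColumnLength={length}")
    return ";".join(kept) + ";"


print(with_string_column_length("DSN=Databricks;"))
# DSN=Databricks;DefaultStringColumnLength=10000;
```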
