Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Error in Model Comparison

Hello:

I'm trying to use the Model Comparison tool to compare a decision tree and a logistic regression model, but I get the errors below. Each model runs fine on its own, but the Model Comparison tool does not. Has anyone else encountered this, and do you have suggestions for correcting it?

Model Comparison (12) Tool #3: Error in FileYXDBStreaming::Read - Unexpected number of bytes to read. Invalid argument

Model Comparison (12) Tool #3: Error in if (names(models_df)[1] != "Name" || names(models_df)[2] != "Object") { :

Model Comparison (12) Tool #3: Execution halted

Model Comparison (12) Tool #3: The R.exe exit code (1) indicated an error.

Hi @jbh1128d1

The Model Comparison tool on the Predictive District of the Gallery is not compatible with the Linear Regression, Logistic Regression, and Decision Tree tool versions released with Alteryx 11.0 (these tools have been largely unchanged in the versions following that release). To work around this issue, you can change the tool version of your Decision Tree and Logistic Regression tools to version 1.0:

- Right-click on your Logistic Regression or Decision Tree tool.

- Point to Choose Tool Version and select version 1.0.

Please let me know if this doesn't resolve your issue!

Hi @denizbeser,

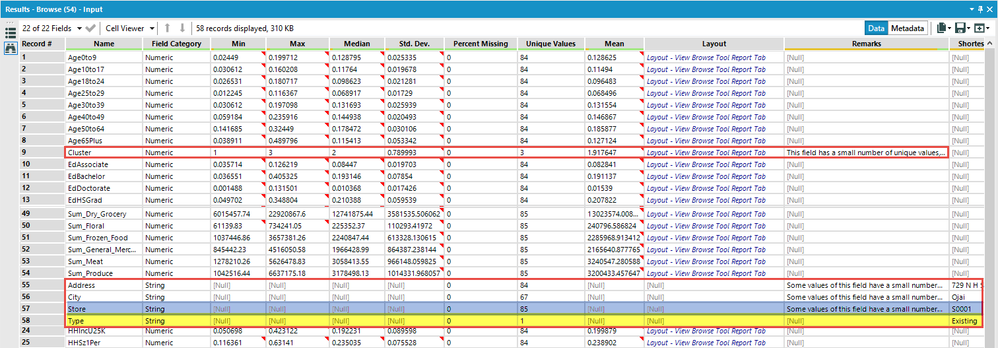

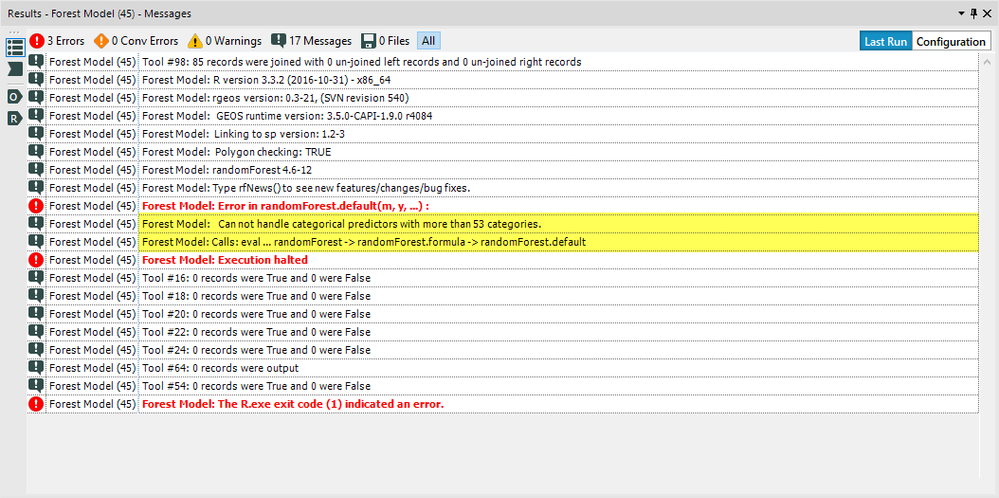

The first thing I noticed in your workflow is that your Target Variable, Cluster, has a numeric data type (Int16), although it seems to represent a categorical variable. Because the models you've selected treat a numeric target as continuous, they will build Regression Trees/Forests. Changing the data type of your Cluster variable to string will cause the models to treat the variable as categorical.
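To illustrate why the data type of the target matters, here is a minimal Python sketch. It is not how the Alteryx predictive tools are implemented; the function names `infer_task` and `as_categorical_target` and the sample Cluster values are hypothetical, and the rule "numeric target means regression, string target means classification" is a simplification assumed for illustration.

```python
def infer_task(target_values):
    """Guess the model type from the target's data type.

    Assumed rule of thumb (for illustration only): an all-numeric target
    is treated as continuous, anything else as categorical.
    """
    is_numeric = all(
        isinstance(v, (int, float)) and not isinstance(v, bool)
        for v in target_values
    )
    return "regression" if is_numeric else "classification"


def as_categorical_target(target_values):
    """Cast numeric labels to strings so they read as categories."""
    return [str(v) for v in target_values]


# Hypothetical Cluster labels stored as integers (like an Int16 field).
cluster = [1, 2, 3, 1, 2]

task_before = infer_task(cluster)
task_after = infer_task(as_categorical_target(cluster))

print(task_before)
print(task_after)
```

In Designer itself, the equivalent change is simply switching the field's data type to a string before the model tools.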

Additionally, a few of your predictor variables are causing issues. Your Type variable has only one unique value, and your Store variable is a unique identifier, which has no predictive value and can cause errors.

Also, your City predictor variable has 64 unique values out of 84 total records, the highest frequency being 5 for L.A. This is also most likely not a very valuable predictor variable, and can cause problems with the models you've selected. You can actually see this written out in the error messages if you turn on all macro messages (Workflow - Configuration > Runtime > Show All Macro Messages). This option passes on warnings and errors from R into the Results Window.

I was able to determine a lot of this using just the Field Summary Tool. When first working with a new data set, it is best practice to spend time doing data investigation. There is a series on the Community called Pre-Predictive: Using the Data Investigation Tools that you might find helpful. The first part of this series can be found here.
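The predictor checks described above (a single unique value, a unique identifier, a categorical field with very many distinct values) can be sketched in plain Python. This is only a rough illustration, not the Field Summary Tool's logic; the function names, the sample columns, and the 0.5 cardinality threshold are all assumptions, and the check is meant for categorical predictors rather than continuous numeric ones.

```python
from collections import Counter


def profile_column(values):
    """Return record count, unique count, and the most frequent value."""
    if not values:
        raise ValueError("column has no records")
    counts = Counter(values)
    top_value, top_freq = counts.most_common(1)[0]
    return {
        "records": len(values),
        "unique": len(counts),
        "top_value": top_value,
        "top_freq": top_freq,
    }


def flag_predictor(values, high_cardinality_ratio=0.5):
    """Flag a categorical predictor that is unlikely to help a model.

    The 0.5 threshold is an arbitrary assumption for this sketch.
    """
    stats = profile_column(values)
    if stats["unique"] == 1:
        return "constant"
    if stats["unique"] == stats["records"]:
        return "unique identifier"
    if stats["unique"] / stats["records"] > high_cardinality_ratio:
        return "high cardinality"
    return "ok"


# Hypothetical sample data, loosely modeled on the fields discussed above.
columns = {
    "Type": ["A", "A", "A", "A", "A", "A"],
    "Store": [101, 102, 103, 104, 105, 106],
    "City": ["L.A.", "L.A.", "Austin", "Boston", "Denver", "Miami"],
    "Region": ["West", "West", "East", "East", "West", "East"],
}

flags = {name: flag_predictor(values) for name, values in columns.items()}
for name, flag in flags.items():
    print(f"{name}: {flag}")
```

With the figures from the workflow above, City (64 unique values out of 84 records) would also land in the "high cardinality" bucket under this assumed threshold.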

Correcting these issues with your Model configurations should allow your entire workflow to run successfully. I think the errors you are seeing in the Model Comparison tools are because model objects are not being passed down from the Model Tools.

There is another article on Community I think you might find helpful, called Troubleshooting the Predictive Tools. This article reviews helpful tips and tricks like turning on macro messages and searching R errors.

Does this all make sense? Do you have any questions? Please let me know!

Thanks!
