Alteryx Designer Desktop Discussions


Create Row if Value Doesn't Exist


I have a table that breaks out data based on classes. In some cases, a class does not exist. In this case, I want to create a class for it by duplicating the next, closest class.

| City | Class | Rent |
| --- | --- | --- |
| New York | A | 30 |
| New York | B | 20 |
| New York | C | 10 |
| Los Angeles | A | 20 |
| Los Angeles | B | 15 |
| Los Angeles | C | 10 |
| Seattle | B | 10 |
| Seattle | C | 5 |
| Austin | A | 15 |
| Austin | C | 5 |

In the example above, Seattle does not have a Class A row and Austin does not have a Class B row. I want to duplicate the next highest class row for that city. For Seattle, I want to duplicate the Class B row and name it Class A. For Austin, I want to duplicate the Class A row and name it Class B. My end result would look like the table below.

| City | Class | Rent |
| --- | --- | --- |
| New York | A | 30 |
| New York | B | 20 |
| New York | C | 10 |
| Los Angeles | A | 20 |
| Los Angeles | B | 15 |
| Los Angeles | C | 10 |
| Seattle | A | 10 |
| Seattle | B | 10 |
| Seattle | C | 5 |
| Austin | A | 15 |
| Austin | B | 15 |
| Austin | C | 5 |

My end result would be each city having a Class A, B and C row, with any missing row duplicated from the highest available class.


@taran42

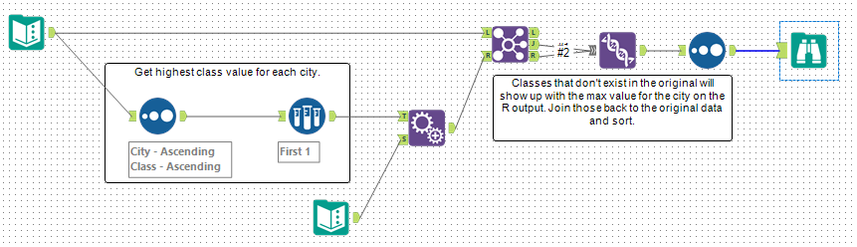

A slightly different approach.


Hi @taran42,

While I think the posts from both @gabrielvilella and @TonyA would solve the problem, I'd like to offer an additional approach, because I think both share the same two potential flaws:

- Append Fields

- Second Text Input

Append Fields creates all possible combinations, which could have a huge impact on large datasets once we build out every combination. The second Text Input is, in my opinion, duplicated information, so we'd always need to maintain two sources, which could get nasty at some point. If this works fine with your real dataset, go ahead and use it; both should satisfy you.
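To make the Append Fields concern concrete, here is a rough pandas analogue of that pattern. It is only a sketch: the `classes` frame stands in for the second Text Input and is an assumption, not something taken from either workflow.

```python
import pandas as pd

# Sample data from the question.
rents = pd.DataFrame({
    "City": ["New York"] * 3 + ["Los Angeles"] * 3 + ["Seattle"] * 2 + ["Austin"] * 2,
    "Class": ["A", "B", "C", "A", "B", "C", "B", "C", "A", "C"],
    "Rent": [30, 20, 10, 20, 15, 10, 10, 5, 15, 5],
})

# Hypothetical second input listing every class (the duplicated information).
classes = pd.DataFrame({"Class": ["A", "B", "C"]})

# Cartesian product of cities and classes, similar to what Append Fields builds.
cities = rents[["City"]].drop_duplicates()
grid = cities.merge(classes, how="cross")

# Left join back to the data; combinations without a match get a missing Rent.
full = grid.merge(rents, on=["City", "Class"], how="left")

print(len(grid))                  # number of city/class combinations
print(full[full["Rent"].isna()])  # the rows that still need a value
```

The grid grows as (number of cities) x (number of classes), which is where the cost on large datasets comes from.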

If those approaches don't work for you, here is what you might try:

I would call it neither clean nor perfect, and it has a flaw of its own (more on that later).

What happens?

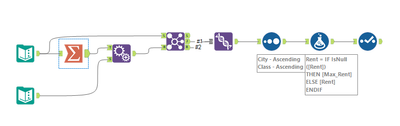

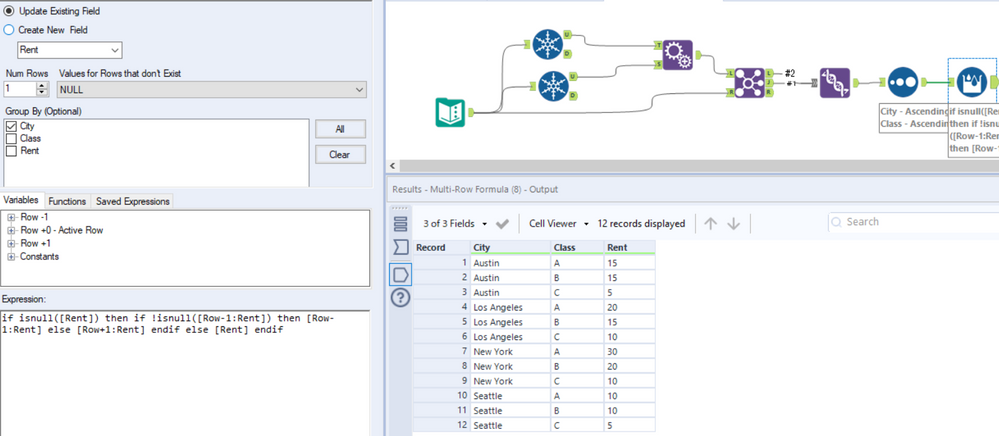

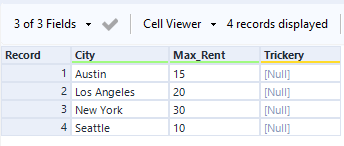

The upper stream uses a typical design pattern: it transposes and cross-tabs the data back and forth. Why, you might ask? Because that already gets the data into the right shape. Take a look at it:

All missing rows have been created. They are not filled, but they are already there. At that point we could use a Multi-Row Formula and simply look one or two rows up or down, and it's done. But what if we have hundreds of classes? That's why we have the second stream.
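For anyone who wants to see the reshaping idea outside of Designer, the following is a minimal pandas sketch of the same back-and-forth, followed by a neighbour fill that plays the role of the Multi-Row step. The column names come from the question; using `pivot`/`melt` and `ffill`/`bfill` is my own substitution for the Alteryx tools, not the attached workflow.

```python
import pandas as pd

# Sample data from the question.
rents = pd.DataFrame({
    "City": ["New York"] * 3 + ["Los Angeles"] * 3 + ["Seattle"] * 2 + ["Austin"] * 2,
    "Class": ["A", "B", "C", "A", "B", "C", "B", "C", "A", "C"],
    "Rent": [30, 20, 10, 20, 15, 10, 10, 5, 15, 5],
})

# Cross-tab: one row per city, one column per class. Gaps become NaN.
wide = rents.pivot(index="City", columns="Class", values="Rent")

# Transpose back to long form. melt keeps the NaN cells, so the
# missing city/class rows now exist, just without a value.
long = wide.reset_index().melt(id_vars="City", var_name="Class", value_name="Rent")
long = long.sort_values(["City", "Class"]).reset_index(drop=True)
missing_before = int(long["Rent"].isna().sum())

# Neighbour fill within each city: take the row above (the higher class)
# when there is one, otherwise the row below.
by_city = long.groupby("City")["Rent"]
long["Rent"] = by_city.ffill().fillna(by_city.bfill())

print(missing_before)
print(long)
```

Unlike a fixed one-or-two-row lookup, the forward/backward fill here does not depend on how many classes there are, but it does rely on the rows being sorted by class within each city.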

Lower Part

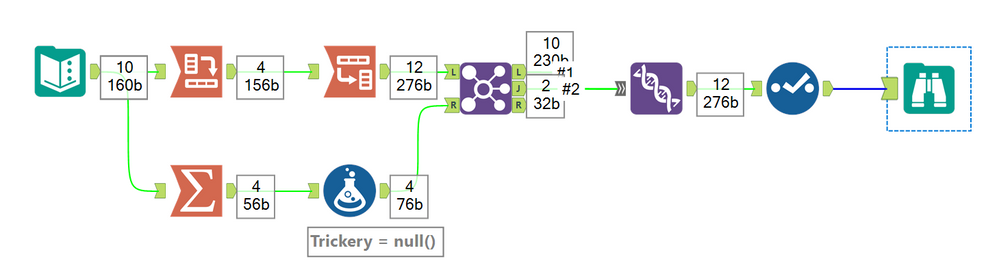

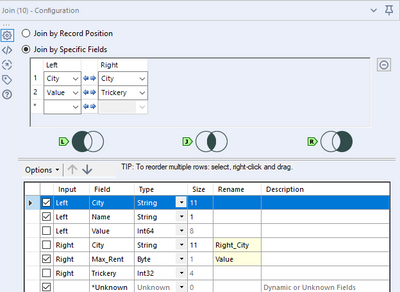

The lower part calculates the maximum rent PER city and adds a column called 'Trickery' that simply has a null value inside it.
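As a rough illustration of the lower stream, here is the same aggregation sketched in pandas. The names `Max_Rent` and `Trickery` follow the post; everything else is an assumption made for the example.

```python
import pandas as pd

# Sample data from the question.
rents = pd.DataFrame({
    "City": ["New York"] * 3 + ["Los Angeles"] * 3 + ["Seattle"] * 2 + ["Austin"] * 2,
    "Class": ["A", "B", "C", "A", "B", "C", "B", "C", "A", "C"],
    "Rent": [30, 20, 10, 20, 15, 10, 10, 5, 15, 5],
})

# Maximum rent per city, like a Summarize grouped by City.
max_rent = (
    rents.groupby("City", as_index=False)["Rent"]
    .max()
    .rename(columns={"Rent": "Max_Rent"})
)

# Helper column that holds nothing but a null value.
max_rent["Trickery"] = float("nan")

print(max_rent)
```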

Bringing it together

Because of the null value, we can do a very smart join. In the original dataset we always have City, Class and Rent. If the rent is empty, we'd like to fill it. Joining the left side's City and empty rent field to the right side's City and Trickery field connects only the rows with missing data to their city's maximum rent.

We can also uncheck 'Value' from the left (it is empty) and rename 'Max_Rent' from the right to 'Value', then union the corrected rows with the rows that were fine by default.
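The join-and-union step could be sketched in pandas roughly as follows. Note the swap: instead of joining on a null key, this version filters the empty rows explicitly and joins them on City only, which should reach the same rows. The frame names (`reshaped`, `ok`, `missing`, `filled`) are made up for the example.

```python
import pandas as pd

# Sample data from the question.
rents = pd.DataFrame({
    "City": ["New York"] * 3 + ["Los Angeles"] * 3 + ["Seattle"] * 2 + ["Austin"] * 2,
    "Class": ["A", "B", "C", "A", "B", "C", "B", "C", "A", "C"],
    "Rent": [30, 20, 10, 20, 15, 10, 10, 5, 15, 5],
})

# Upper stream: every city/class row exists, missing ones have no Rent.
reshaped = (
    rents.pivot(index="City", columns="Class", values="Rent")
    .reset_index()
    .melt(id_vars="City", var_name="Class", value_name="Rent")
)

# Lower stream: maximum rent per city.
max_rent = (
    rents.groupby("City", as_index=False)["Rent"]
    .max()
    .rename(columns={"Rent": "Max_Rent"})
)

# Rows that were fine by default, and rows that need a value.
ok = reshaped[reshaped["Rent"].notna()]
missing = reshaped[reshaped["Rent"].isna()].drop(columns="Rent")

# Connect only the missing rows to their city's maximum, then rename.
filled = missing.merge(max_rent, on="City", how="inner").rename(columns={"Max_Rent": "Rent"})

# Union both parts and sort for readability.
result = (
    pd.concat([ok, filled], ignore_index=True)
    .sort_values(["City", "Class"])
    .reset_index(drop=True)
)
print(result)
```

On the sample data this matches the requested output, since the highest available class in each city also carries that city's maximum rent; if that did not hold, the two rules would give different values.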

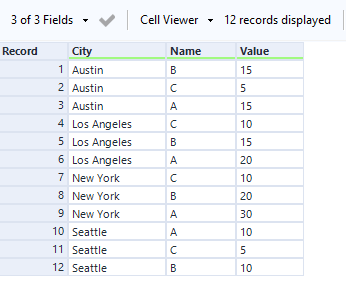

Afterwards the data looks like this:

And that's where we can see the flaw this solution brings with it: we lost the original column names for Class / Rent during the process and weren't able to get them back. We could, but I don't want to add more complexity to a solution that is already a bit of trickery itself. Therefore I added a simple Select tool to fix the names. If you care about the order, you should also sort it; I didn't do that.

I'd probably go with one of the prior approaches if possible because mine might be harder to understand, but I thought it might be worth sharing.

I have attached the workflow so you can play around with it and see the exact configuration.

Best

Alex


Thanks for the feedback, @grossal. Much cleaner and faster. I wouldn't consider your solution trickery at all. Joining on a null isn't usual practice but it does work. If someone really has an issue with it, it would be simple enough to assign a value to the "trickery" column and use a formula tool to rename the nulls after the transpose. As for the column renames, if that's really an issue, it's easy enough to add them back from the original data set with a Dynamic Rename.


@grossal @Qiu @TonyA @gabrielvilella Thank you for the plethora of solutions!

All of these solutions got me where I needed to go. In the end I went with Grossal's answer since, like he mentioned, it did not have any extra data inputs. Also thanks a bunch for the detailed explanation of the process.


@taran42

Thank you for your feedback and comment.

Would you also mark the solutions from me, @gabrielvilella and @TonyA as accepted so others may refer to them in the future? 😁


@Qiu I forgot you can select multiple solutions as correct. Done!


@taran42

Thank you very much for your kindness! 😁
