Weekly Challenges

Solve the challenge, share your solution and summit the ranks of our Community!

Challenge #94: Have we reached Peak Pumpkin?

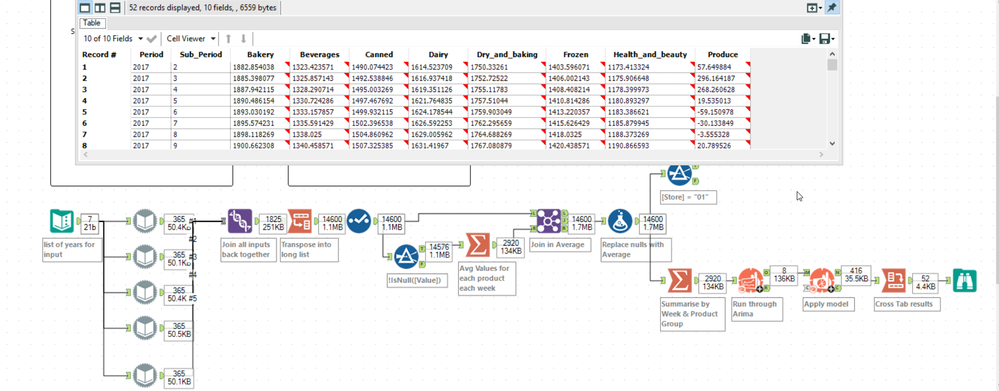

A solution to last week's Challenge has been posted here. I loved seeing the variety of solutions our Challengers came up with! Admittedly, my solution probably has more tools than it should, but it gets the job done...with only one Join tool! In the spirit of friendly competition, especially with the self-imposed challenge of "how many Join tools does it take to calculate pumpkin production?", I'd like to give a shout-out to our "winner". Last week, our Challengers' solutions contained an average of 4 Join tools. Our "winner" for the fewest Join tools used: @vishalgupta, with 0 Join tools! You read that right...ZERO! There are a few Find/Replace tools in there, though...but I'll take it! Take the time to check out everyone's solutions; the variety is awesome, and I learned a few new tricks myself!

This week's Challenge gives you the chance to show off your skills with the Predictive tools to answer one of the most pressing questions of our time: Have we reached Peak Pumpkin? Is the demand for EVERYTHING PUMPKIN losing steam? Has Maple Pecan become a force to be reckoned with?

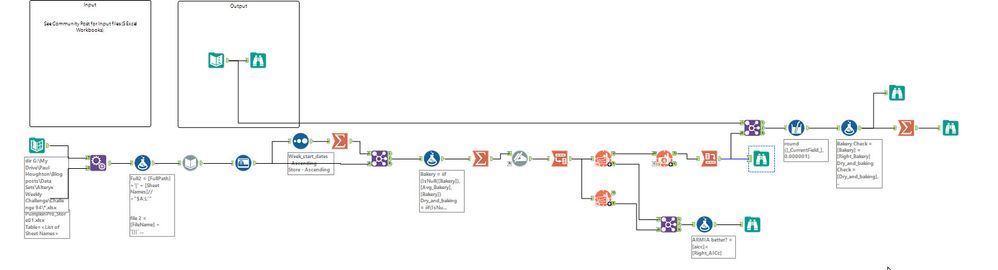

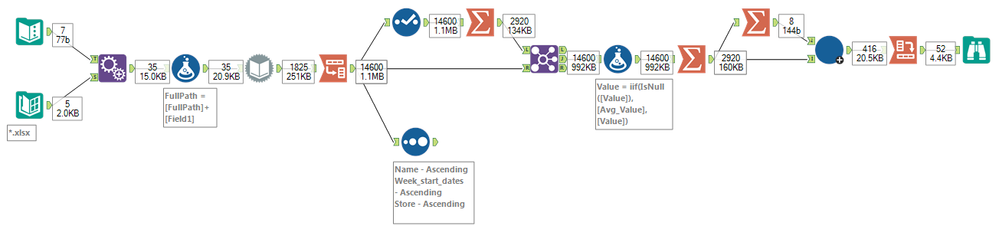

For this week's Challenge, we have five input files, one for each branch of a store, with data on pumpkin product sales from 2010 to 2016. Combine the data into a single data stream and forecast the total expected sales for each product category for 2017.
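If you want to prototype the combine-and-total step outside Alteryx, here is a minimal Python sketch. It assumes each store's file has already been read into a list of dicts with hypothetical `week`, `product` and `sales` keys (the real files' column layout may differ, and you may need to transpose first). It unions the stores into one stream tagged with a store id, then sums sales across stores for each week and product.

```python
from collections import defaultdict


def combine_stores(stores):
    """Union per-store records into one stream, tagging each record with its store id.

    `stores` maps a store id to a list of dicts with hypothetical
    "week", "product" and "sales" keys.
    """
    combined = []
    for store_id, records in stores.items():
        for rec in records:
            combined.append({**rec, "store": store_id})
    return combined


def total_by_week_and_product(records):
    """Sum sales across all stores for each (week, product) pair.

    Assumes Null sales were already filled; a None value raises TypeError
    rather than being silently counted as zero.
    """
    totals = defaultdict(float)
    for rec in records:
        totals[(rec["week"], rec["product"])] += rec["sales"]
    return dict(totals)
```

Run the Null fill before totalling, so each store contributes a value for every week.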

Notes:

- Any Null values should be filled with the average value of that product for that week, across all stores.

- You will need to install the Predictive tools to use the Time Series tools (if you don't have them installed already).

- Choose the time series model whose corrected Akaike Information Criterion (AICC) is consistently lower. The data from Store 1 is the best and most complete dataset for choosing a model, since it contains no Null values.

- The Predictive District on the Gallery has some tools and samples that you may find helpful for this exercise!
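The Null-fill rule in the notes above can be sketched in Python as follows. This assumes the combined records use hypothetical `store`, `week`, `product` and `sales` keys, and it treats "the average value of that product for that week, across all stores" as the mean of the non-null values only (nulls are excluded from both the sum and the count).

```python
from collections import defaultdict


def fill_nulls_with_week_average(records):
    """Replace None sales with the mean non-null sales for the same (week, product)
    across all stores. Returns new dicts; the input records are not modified."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    # First pass: accumulate non-null sales per (week, product).
    for rec in records:
        if rec["sales"] is not None:
            key = (rec["week"], rec["product"])
            sums[key] += rec["sales"]
            counts[key] += 1
    # Second pass: fill each gap with its group's average.
    filled = []
    for rec in records:
        if rec["sales"] is None:
            key = (rec["week"], rec["product"])
            if counts[key] == 0:
                raise ValueError(f"no non-null values to average for {key}")
            rec = {**rec, "sales": sums[key] / counts[key]}
        filled.append(rec)
    return filled
```

For example, if Stores 01 and 03 sold 10 and 20 lattes in week 1 and Store 02 is Null, Store 02 is filled with 15.0.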

Notes for users on version 2018.2 and later:

Changes to the version of R used in the Predictive tools have caused ARIMA calculations to yield different results than those in the original post. Please reference the following instructions and start/solution files:

- Any Null values should be filled with the average value of that product for that week, across all stores.

- You will need to install the Predictive tools to use the Time Series tools (if you don't have them installed already).

- Perform this challenge using an ETS model to forecast values for the next year. Leave all settings, aside from those needed to configure the model, to "Auto".

- Expect your output to contain negative values.

- Refer to the start file "challenge_94_2018_2_start_file.yxmd" and solution file "challenge_94_2018_2_solution.yxmd" that I posted to my reply to another Community user on page 3 of this Challenge's post.
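To see why an ETS forecast can go negative, here is a minimal Python sketch of one ETS form, Holt's linear (additive) trend method, ETS(A,A,N). It is only an illustration: the ETS tool in "Auto" mode selects the model form and estimates the smoothing parameters and initial states itself, whereas here `alpha`, `beta` and the simple initialization are fixed, hypothetical choices.

```python
def holt_linear_forecast(series, alpha, beta, horizon):
    """Point forecasts from Holt's linear (additive) trend method, i.e. ETS(A,A,N).

    alpha and beta are fixed smoothing parameters supplied by the caller; nothing is
    fitted here. The initial level is the first observation and the initial trend is
    the first difference, a simple heuristic rather than an estimate.
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")
    if not (0 < alpha <= 1 and 0 < beta <= 1):
        raise ValueError("alpha and beta must be in (0, 1]")
    level = series[0]
    trend = series[1] - series[0]
    for y in series[1:]:
        prev_level = level
        # Level: blend the new observation with the one-step-ahead prediction.
        level = alpha * y + (1 - alpha) * (level + trend)
        # Trend: blend the latest change in level with the previous trend.
        trend = beta * (level - prev_level) + (1 - beta) * trend
    # Forecasts extend the final trend linearly, so a falling trend can cross zero.
    return [level + h * trend for h in range(1, horizon + 1)]
```

With a steadily falling series, `holt_linear_forecast([100, 80, 60, 40, 20], 0.5, 0.5, 3)` returns `[0.0, -20.0, -40.0]`: the additive trend keeps extrapolating below zero even though sales cannot actually be negative.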

@LordNeilLord It's only lunch and I've already reached Peak Monday! The Start File has been updated. Thank you for setting me straight!

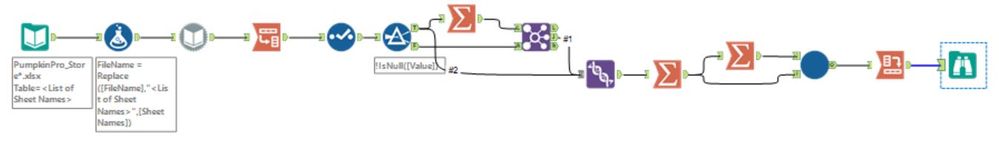

I used the Directory tool and two Dynamic Input tools to bring all the data together. I transposed it and sent just Store 01 through the ARIMA and ETS tools, which showed that ARIMA had the lower AICC. Then I filtered the data for nulls and calculated the averages to fill the gaps. I summarized the data at the week and product level before running it through the time series tools (my quick batch process vs. the factory tools).
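The model comparison described above comes down to picking the lower AICC. As a minimal sketch, assuming the tools report the standard definition, AICc = AIC + 2k(k+1)/(n - k - 1) with AIC = 2k - 2 ln L, where k is the number of estimated parameters, n the number of observations and L the maximized likelihood, the calculation and choice look like this in Python. The candidate names and values you pass in would come from the tools' reports; nothing here fits a model.

```python
def aicc(log_likelihood, k, n):
    """Corrected Akaike Information Criterion for a model with k estimated
    parameters fitted to n observations, given its maximized log-likelihood."""
    if n - k - 1 <= 0:
        raise ValueError("AICc is undefined when n <= k + 1")
    aic = 2 * k - 2 * log_likelihood
    # Small-sample correction; it shrinks toward zero as n grows relative to k.
    return aic + (2 * k * (k + 1)) / (n - k - 1)


def pick_lowest_aicc(candidates):
    """`candidates` maps a model name to its AICc; return the name with the lowest value."""
    return min(candidates, key=candidates.get)
```

The correction term penalizes extra parameters more heavily on short series, which is why AICc rather than plain AIC is the usual choice for comparing forecasting models.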

I spent ages trying to get the answer using just the standard time series tools, but I couldn't get the right result... so I switched to the Predictive District tools and STILL couldn't get the correct result. After much swearing, I finally realised that I had only imported 4 of the 5 Excel files! Lesson learnt: make sure you import all of the data first!

Doesn't look like the solution has been packaged correctly. Lots of broken macros.

@paul_houghton, it's packaged now! That should be better!
