
Tool Mastery | Score


on 07-10-2018 08:28 AM - edited on 03-08-2019 12:08 PM by Community_Admin

This article is part of the Tool Mastery Series, a compilation of Knowledge Base contributions to introduce diverse working examples for Designer Tools. Here we’ll delve into uses of the Score Tool on our way to mastering the Alteryx Designer:

As most of us can agree, predictive models can be extremely useful. They can help companies allocate a limited marketing budget to the most profitable group of customers, help non-profit organizations find the donors most willing to support their cause, or even estimate the probability that a student will be admitted to a given school. A well-designed predictive model can help us make smart and cost-effective business decisions. But how can we trust that the model we create is actually working? How do we know whether it will work with another data set? Once we feel confident in our model, how can we apply it to a new data set? These questions can all be answered with the Score Tool in Alteryx.

The Score Tool applies the model to a given data set, and creates an assessment column, known as a score, which estimates the target variable of the model. Not all score results are the same, and it’s important to know that before we drag the Score Tool onto the canvas.

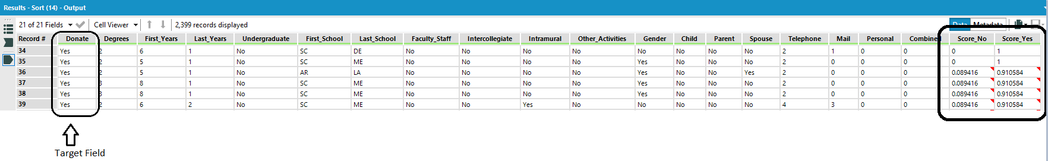

For example, a Logistic Regression model uses a binary categorical target, such as Yes/No or 1/0, and generates a predicted probability for each target value as the score result. For instance, customer ‘A&Z’ has the score results Yes (0.9998) and No (0.0002). The score results are generated based on the predictor variables that are passed in. In this case, the results tell us that customer ‘A&Z’ has a 99.98% chance of saying ‘Yes’ to Donate.
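To make the idea of a probability score concrete, here is a minimal sketch of how a fitted logistic regression turns predictor values into a Yes/No pair. The coefficients, the `past_gifts` predictor, and the `Score_Yes`/`Score_No` column names are assumptions for illustration only; this is not the R code inside the Score Tool.

```python
import math

def score_binary(intercept, coefs, row, prefix="Score"):
    """Return one probability column per target value for a single record.

    intercept, coefs and the column naming are hypothetical; they stand in
    for whatever a fitted logistic regression model would supply.
    """
    # Linear predictor: intercept plus the weighted sum of the predictors.
    linear = intercept + sum(coefs[name] * row[name] for name in coefs)
    # The logistic function maps the linear predictor to a probability.
    p_yes = 1.0 / (1.0 + math.exp(-linear))
    return {f"{prefix}_Yes": p_yes, f"{prefix}_No": 1.0 - p_yes}

# Hypothetical model with a single predictor.
scores = score_binary(-1.0, {"past_gifts": 0.8}, {"past_gifts": 3})
print(scores)
```

The two values always sum to 1, which is why a Yes score of 0.9998 implies a No score of 0.0002.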

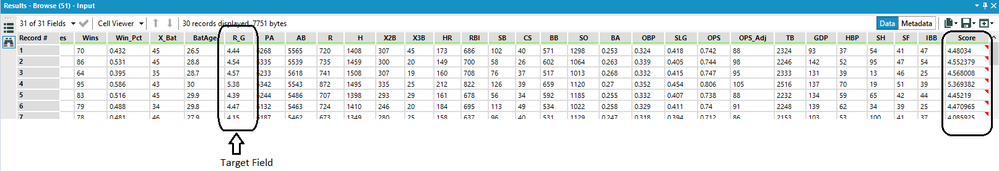

On the other hand, a Linear Regression model uses a continuous target, one that takes a range of values such as Age, so the score result is a predicted continuous value. For instance, based on all the predictor variables passed into the Linear Regression model, baseball player ‘Alfronzo Z’ is expected to average 4.48034 runs per game in the upcoming season. Having this insight can give users a competitive advantage during the fantasy baseball draft.

Now, looking at the configuration of the Score Tool, we have:

Model Type:

As of Alteryx version 2018.1, the Score Tool can evaluate models from two locations: a Local Model or a Promote Model.

- Local Model: The model is pulled into the workflow from a local machine or is accessed within a database.

- Promote Model: The model is stored in the Promote model management system.

As the Promote Model option will only apply to users who have purchased Promote, this article focuses solely on the Local Model.

Configuration for local model:

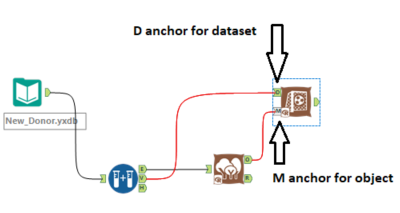

- Connect inputs: The Score Tool requires 2 inputs.

- The model object produced by an R-based predictive tool. The newly created model (O anchor) needs to connect to the M input anchor of the Score Tool.

- A data stream that contains the predictor fields selected in the model configuration. The data stream needs to connect to the D input anchor of the Score Tool. This can be a standard Alteryx data stream or an XDF metadata stream.

Configuration:

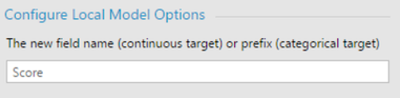

- The new field name (continuous target) or prefix (categorical target): The new field name or prefix MUST start with a letter and may contain only letters, numbers, and the special characters period (“.”) and underscore (“_”). You can name these new columns anything you want as long as the name satisfies this format requirement.

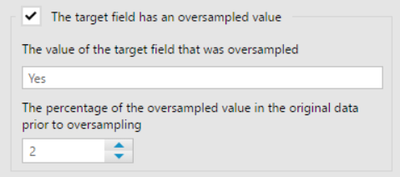
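The naming rule above can be checked with a short regular expression. This is a sketch of the rule as stated in this article; the function name is hypothetical and the Score Tool's own validation may differ in detail.

```python
import re

# Must start with a letter; after that, letters, digits, "." and "_" only.
_VALID_NAME = re.compile(r"[A-Za-z][A-Za-z0-9._]*")

def is_valid_score_name(name):
    """Return True if name satisfies the field name/prefix rule described above."""
    return _VALID_NAME.fullmatch(name) is not None

for candidate in ["Score", "Score_2018.v1", "1Score", "Score Yes"]:
    print(candidate, is_valid_score_name(candidate))
```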

- The target field has an oversampled value: Check this checkbox if a value of the target field was oversampled before the model was built. According to Wikipedia, oversampling is a technique used to adjust the class distribution of a data set to correct for bias in the original data set. Checking the box tells the tool that it is dealing with an oversampled value so that it can account for the resulting selection bias.

- The value of the target field that was oversampled: The target value that was oversampled. If you oversampled the value "Yes" in your target field before the data was passed into the predictive tool, you will need to enter "Yes" here.

- The percentage of the oversampled value in the original data prior to oversampling: The share of records in the original data, before oversampling, whose target field holds the oversampled value. For instance, if the target field in your original data is 80% "No" and 20% "Yes", and "Yes" is the value you are oversampling, you will need to enter "20" here (the value is specified as a percentage).

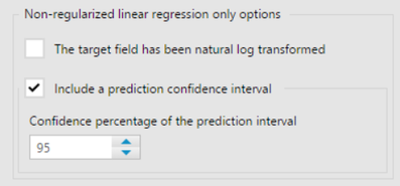
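To see why the original percentage matters, the sketch below applies a standard prior-probability correction to a score from a model trained on oversampled data. This article does not document the exact adjustment the Score Tool performs, so treat the formula, the function name, and the `sampled_fraction` parameter as assumptions for illustration.

```python
def adjust_for_oversampling(p_model, original_pct, sampled_fraction):
    """Rescale a predicted probability back to the original class balance.

    p_model          -- probability of the oversampled value from the model
    original_pct     -- percentage of that value in the original data (e.g. 20)
    sampled_fraction -- its share in the oversampled training data (e.g. 0.5);
                        this parameter is hypothetical, not a Score Tool option
    """
    original = original_pct / 100.0
    # Weight each class by how much its share changed during oversampling.
    weight_pos = original / sampled_fraction
    weight_neg = (1.0 - original) / (1.0 - sampled_fraction)
    numerator = p_model * weight_pos
    return numerator / (numerator + (1.0 - p_model) * weight_neg)

# A 50/50 training set built from data that was originally 20% "Yes".
print(adjust_for_oversampling(0.5, 20, 0.5))
```

Under these assumptions, a score of 0.5 from the balanced model maps back to the original 20% base rate, and a model trained without oversampling is left unchanged.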

- Non-regularized linear regression only options:

- The target field has been natural log transformed – Select to transform the predicted values back to the original scale, using a smearing estimator to account for the bias introduced by the back-transformation. Data sets are sometimes log transformed to make their distribution less skewed, which makes them easier to read and analyze. The Score Tool needs to know whether the target went through such a transformation to return the most accurate results.

- Include a prediction confidence interval – Select to specify the value used to calculate confidence intervals

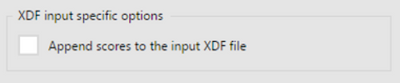
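As a rough illustration of the back-transformation, the sketch below uses Duan's smearing estimator: the exponentiated prediction is multiplied by the average of the exponentiated residuals from the log-scale model. The function names and sample numbers are hypothetical, and the Score Tool's internal R code may implement this differently.

```python
import math

def smearing_factor(log_residuals):
    """Average of exp(residual) over the log-scale training residuals."""
    return sum(math.exp(r) for r in log_residuals) / len(log_residuals)

def back_transform(pred_log, log_residuals):
    """Return a prediction on the original scale, corrected for retransformation bias."""
    return math.exp(pred_log) * smearing_factor(log_residuals)

# Hypothetical log-scale prediction and training residuals.
residuals = [-0.1, 0.1]
print(back_transform(2.0, residuals))
```

Simply exponentiating the prediction tends to understate the mean on the original scale, which is why the correction factor is at least 1 when the residuals average to zero.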

- XDF input specific options:

- Append scores to the input XDF file: Users have the option to append scores to the input XDF file instead of placing them into an Alteryx data stream

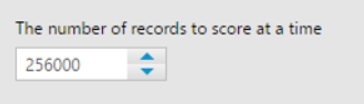

- The number of records to score at a time: Specify the number of records in a group. Input data will be processed one group at a time to avoid the in-memory processing limitation of R
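Scoring in groups can be pictured with the following sketch, which splits the input into fixed-size groups and scores each one in turn so that only one group needs to be held in memory for scoring at a time. The helper names and the toy scoring function are hypothetical.

```python
def chunks(records, size):
    """Yield consecutive groups of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]

def score_in_groups(records, score_fn, size):
    """Apply score_fn to one group at a time and collect the results in order."""
    results = []
    for group in chunks(records, size):
        results.extend(score_fn(group))
    return results

# Toy scoring function standing in for a real model.
double_all = lambda group: [2 * value for value in group]
print(score_in_groups([1, 2, 3, 4, 5], double_all, size=2))
```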

The Score Tool allows users to apply an Alteryx Predictive model to a data set. This can be used for model assessment by scoring a data set with known target variable values, or to put the model into use to predict the target variable where it is unknown. The Score Tool is very useful, and I hope with this article you now feel ready to put it into use.

***The Score Tool is a macro. To see the architecture behind the tool, right-click the Score Tool and select ‘Open Macro’. There you can see the R code the Score Tool uses.***

By now, you should have expert-level proficiency with the Score Tool! If you can think of a use case we left out, feel free to use the comments section below! Consider yourself a Tool Master already? Let us know at community@alteryx.com if you’d like your creative tool uses to be featured in the Tool Mastery Series.

Stay tuned with our latest posts every #ToolTuesday by following @alteryx on Twitter! If you want to master all the Designer tools, consider subscribing for email notifications.


This is wonderful... I have semi-related question. I have used a linear regression to model Cities and Sales Generated... and that part seemed fine. But after connecting a new data set (additional cities) and scoring based on the model I created... the output from the score tool changed the field names adding an X in front. I've not seen this before and don't know if this is important? Were those fields ignored in the model? What does the prepended X mean?


@DemandEngineer I know you posted this question eons ago, so I'm answering it mostly for posterity in case anyone else has it in the future. Basically, R prepends an "X" to field names that don't follow traditional R formatting (i.e. alphanumeric only, starting with a letter). If your column names started with numbers, they will have an "X" in front of them after they emerge from the R tool inside the scoring macro.

Hope that helps! If this is frustrating to anyone - you can use a dynamic rename tool with a formula to replace the x's in the name after your last R tool.
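For anyone who wants to see the renaming logic spelled out, here is a small sketch of the same idea outside of Alteryx. It strips a leading "X" only when a digit follows it, so ordinary names that happen to start with "X" are left alone. The function name is hypothetical, and it does not undo the periods that R substitutes for other invalid characters.

```python
import re

def strip_r_prefix(name):
    """Remove the "X" that R prepends to names starting with a digit."""
    # The lookahead keeps names such as "Xavier" intact.
    return re.sub(r"^X(?=\d)", "", name)

for field in ["X2018_Sales", "Xavier", "City"]:
    print(field, "->", strip_r_prefix(field))
```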


Hi, I am building a decision tree model and want the output to be the probability of the row falling into each of the binary categories. How do I configure the tool?


Great article @EddieW !

Reference to the example used, how do we input the "percentage of the oversampled value in the original data prior to oversampling" 20 or 0.2 ?


hi @ahsanaali it should be 20 (i.e. specified in %).

There can be a bit of confusion here because in the Lift chart, the % should be specified as a number from 0 to 1.

Cheers,

Dawn.
