Engine Works

Under the hood of Alteryx: tips, tricks and how-tos.- Community

- :

- Community

- :

- Learn

- :

- Blogs

- :

- Engine Works

- :

- The Cache Dataset Macro

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Notify Moderator

Editor's note: Caching is now built into Designer - just right click on a tool. Check out the announcement blog.

You can download the newest version of the Cache Dataset macro (V2) from the Alteryx Analytics Gallery and read about it here.

(You can also get the original version of the Cache Dataset here.)

One of the great things about Alteryx is how once you get the hang of it, you’re able to manipulate data almost as fast as you can think of the next step in your process. You end up spending less time fiddling with the mechanics of blending and analyzing your data, and more on the actual business problem at hand. You get into a rhythmic flow, that state of mind where you lose track of the outside world while splashing accents of regex and highlights of formula onto your canvas, and become one with the data to create a masterpiece of work.

But when building out a workflow that deals with large amounts of data and/or runs processor-heavy analytics, you might find it hard to get into that rhythmic flow that you’re used to when you have to wait several minutes between runs to see the results of adding a tool or a group of tools to the canvas.

One solution to this throttling of your flow is to sample all of the data. There are a couple ways to do this. One is to place sample tools strategically throughout your workflow. A more robust and cleaner way to accomplish the same thing is to create a user constant to limit the number of records on input tools:

- Click on any whitespace on the canvas, then in the configuration window, go to the “Workflow” tab

- Add a new user-defined constant called “sample” and set it to a relatively low number such as 1000

- In the configuration panel of any input tools, set the “Record Limit” option to “%User.sample%” (without quotes)

- Develop your workflow with the number of input records limited, and when you’re ready to run it with the full set of data, simply set the user-defined constant “sample” to be blank so that the data is not capped

The problem with this approach is that in many cases, you’re not going to see the behavior you need to build out your workflow appropriately.

But what if you could “save your spot” and run only the new tools you’ve added to the canvas, without re-running everything else over and over again? Well, I'm going to show you how you can do just that -- with the help of the Cache Dataset macro!

Here’s a quick demo of how it works.

Suppose you have a workflow that has three input data sources that each have over a hundred millions rows. You cleanse them and do a bit of processing, and already this is starting to take longer than you’d like to wait before adding the next tool and playing with the configuration.

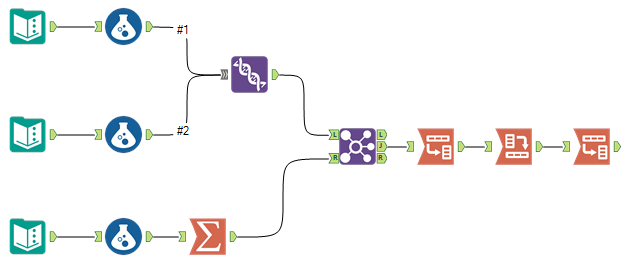

At this point, you decide to stop messing around and cut to the chase with the Cache Dataset macro. To do this, you first place the tools that you’re done configuring into a Tool Container. You then add a Cache Dataset macro to the last tool in the stream:

If you're not familiar with the Tool Container tool, you can find it in the Documentation tool category. To use it, simply place it onto the canvas and then drag a selection of tools into it.

Next, you need to configure the Cache Dataset macro, which thankfully is very simple. You’ll want to give it a unique and descriptive name (if you have multiple Cache Dataset macros on your canvas, be sure to give them each different names!), and you'll want to leave the Run Mode set to "Write".

Now when you run the workflow, the datastream being fed into the Cache Dataset macro (in this case, the output of the second transpose tool) will be saved as a temporary file. (More specifically, it will be saved as an Alteryx data file in the same folder as the Cache Dataset macro itself.)

Setup complete! Let's speed up our workflow!

Now that we've got the workflow setup to use the Cache Dataset macro, let's put it to use. After you've run the workflow once with the Cache Dataset macro in "Write" mode, you can switch it into "Read" mode so that it will reference the temporary file instead of the input stream. When you do this, you'll see an error indicator over the Cache Dataset macro telling you that it's in "read mode". This is not an actual error, but is meant to be a convenient visual indicator so that when you start to build out a large workflow with many Cache Dataset macros, you can tell instantly which ones are set to "Read" mode. This is important because it means that the input datastream is being ignored, and if you make changes to an upstream tool, then those changes won't flow through the Cache Dataset macro while it is in "Read" mode since it is reading from the saved temporary dataset instead.

Now when you run the workflow again, instead of taking a few minutes, it will finish running almost instantaneously! You can now continue building out your workflow from the Cache Dataset macro. When you've got your workflow setup to your liking, you can right click on the Cache Dataset macros and "Delete and Connect Around" to get rid of them without disrupting the flow of data, or you can simply place the macro into "Bypass" mode, which will let the data flow through it untouched as if it weren't even there.

Back into the flow

Once you start using the Cache Dataset macro, you'll get the hang of it quickly, and find yourself getting back into that sweet groove that you're accustomed to, even when crafting some heavy resource-intensive processing in your workflow.

Hopefully you find the Cache Dataset macro to be a useful addition to your toolkit that allows you to get more out Alteryx. If you have any questions, comments, or feedback, please share it in the comment section below, I'd really appreciate it and am happy to help out with any issues you might run into.

One quick note before parting: As mentioned, this macro writes out temporary datasets to the folder containing the Cache Dataset macro. You may want to occasionally delete the datasets in that folder if they are taking up much space. At some point, I plan to update the macro to include logic for cleaning up these temporary datasets, but that will be for another time. When I do, I'll be sure to link to the update here. In the meantime, that is left to the user.

Afterward

To wrap this up, I wanted to show an example of what a workflow might look like that has been developed one section at a time with the Cache Dataset macro. In the workflow screenshot below, I had three large datasets that I needed to transform and normalize before developing a model with the Alteryx predictive toolset. It was actually this very problem that was the inspiration for the Cache Dataset macro. On the left side is the final workflow with all of the Tool Containers enabled and Cache Datasets in "Write" mode (circled in red to make them more apparent), and on the right is the same workflow with the containers disabled and the caching macros set to "Read" mode.

Shoutout to fellow Content Engineer Neil Ryan, who also developed a caching macro with the same functionality independently, before I did. I just don't feel right taking full credit for this solution to a common problem we've all run into, when Neil beat me to the punch. Despite creating basically the same thing, for whatever reason his macro didn't get as much visibility within our group, and as a result I'm the one writing this blog post. It's a veritable Leibniz-Newton situation. So if this is well received, I'm happy to share the glory with Neil. But if you don't like it, please direct your anger and disappointment toward Neil, since when you think about it, it was his idea first.

Updates:

- 10/12/2015: A minor update to the macro has been uploaded so that it can be present in workflows uploaded to the Gallery in 10.0. When a macro has an input tool and output tool that both point to the same file, it interprets this as a problem and will indicate that the validation has failed. This happens in this macro, since we are both reading and writing from the temporary cached file, even though it never happens at the same time. We get around this by pointing the input/output tools to different placeholder filenames, and since the Action tools update the path using the name specified by the user in the configuration, we are good to go. Keep in mind however, that if you are uploading a workflow to the Gallery that uses this macro, you will be ok if it is set to "write" or "bypass" mode, but will run into problems if you have it set to "read" mode!

- 10/14/2015: Added link to download the macro from the Gallery at the top of the page.

- 04/11/2016: Added link to the Cache Dataset V2 macro at the top of the page.

Lead Backend Engineer

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.