Alteryx Data Science Design Patterns: Predictive ...


In our first post we listed the components in a predictive model and reviewed the first four. Let’s continue the discussion by reviewing the fifth component: functional form.

Data-Generating Process

A predictive model aims to predict the behavior of some real-world process. Data scientists term this process the data-generating process because it generates a model’s input data.

- A generating process can be physical. For example, the process that gradually wears out a part on an industrial machine might generate vibration, temperature, and sound metrics that predict when the part will fail.

- A generating process can be biological. For example, one might measure neural changes in experimental lab mice subjected to exercise and diet regimens, to see how physical activity and caloric restriction affect neural health.

- Finally, a generating process can be social. For example, a business’ customers might decide periodically whether to continue to purchase services from the business, or instead to change service providers (churn).

Notice that some of the variables in the examples above are natural effects of the generating process. Such variables are endogenous. The variables that humans manipulate are termed decision, treatment, or independent variables, depending on context. (Here’s a longer list of variable types, if you want to learn more.)

The three examples above are simplistic. In practice, most generating processes you’re likely to model will be mixtures of these three types. (In fact, whenever decision variables are present, some degree of social influence is at work in your generating process.) Knowing that, and knowing about variable types, will help you think critically about which variables might predict a particular generating process’ behavior. For example, if you’re modeling a medicine’s efficacy, you might need to include among your input variables biological measures of how well the body absorbs the medicine, and also behavioral measures of how well experimental subjects comply with their doctors’ prescriptions.

Predictability

Our most basic assumption about a generating process is that its behavior is to some extent predictable, at least when we have chosen a good set of transformations and model features. Let’s pause for a moment to consider what this assumption means. First, note that while the assumption does not always hold, it is surprisingly hard to find a good example of a completely unpredictable (random) generating process. A coin toss is a very common example of a supposedly random process. But empirical studies of physical coin tossing reveal a small degree of predictability. Likewise, people often say that a stock’s price is completely unpredictable because it is (approximately) a random walk, meaning the price is equally likely to increase or decrease by any given amount, each time the price changes. But as a practical matter stock prices fall into a limited range, and within that range the distribution of a stock’s future price, given its present price, is roughly bell shaped—not flat. A stock that is currently priced at $100 is far more likely to move to $101 than to $1,001 the next time the stock’s price changes. In this sense $101 is a better prediction of the stock’s price than $1,001, given its current price. The price is not completely random.

Perhaps the best example we can construct of a random process is a good random-number generator. Some random-number generators sample a measurement from a physical (often sub-atomic) process. Others are software algorithms that generate sequences of numbers having the same statistical distribution that sequences of true random numbers would have, even though the algorithm tells us which number comes next, given which number came last. Such algorithms are pseudo-random. They are important to think about, because while the distribution of their outputs satisfies many statistical tests of randomness, the algorithms themselves are completely predictable, because their generating process (a computer program running on a computer) is deterministic. Once we know the inputs, we can infer the outputs from the algorithm with certainty. In contrast, for some kinds of physical processes, this sort of knowledge is arguably not possible even in principle.
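To make the point about pseudo-random algorithms concrete, here is a minimal sketch using Python's standard `random` module. The seed value 42 and the number of draws are arbitrary choices made for illustration.

```python
import random

# Two separate generators started from the same seed (42 is an arbitrary choice).
first = random.Random(42)
second = random.Random(42)

draws_first = [first.random() for _ in range(5)]
draws_second = [second.random() for _ in range(5)]

# The draws look random, yet the second sequence repeats the first exactly,
# because the generating process (the algorithm plus its seed) is deterministic.
sequences_match = draws_first == draws_second
print(draws_first)
print(sequences_match)  # prints True
```

Anyone who knows the algorithm and the seed can predict every draw, even though the draws themselves pass statistical tests of randomness.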

Functional Form

In workaday data science, we assume that our generating process is not truly random. Rather, there is a deterministic relationship between some set of relevant input variables and the outcome (dependent) variable we want to predict. In math we term such a relationship a function, because it maps every set of input-variable values to a single outcome-variable value.

There are three ways to specify a function:

- Most often we specify a functional form as some mathematical expression of a set of input arguments (model features): $f(x_1, x_2) = x_1^2 + 2x_1x_2 + \log(x_2)$, for example.

- We can also specify a function as a procedure that outputs a single value for any given combination of input values.

- Or we can specify a function as a table listing the output value corresponding to each possible set of input values.

Each of these is important in data science, as we’ll see below.
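As a rough illustration, the three ways of specifying a function might look like this in Python. The sketch assumes the example expression is read as x1 squared, plus 2 times x1 times x2, plus log(x2). The function names and the small input grid are hypothetical.

```python
import math

def f_expression(x1, x2):
    """The function written as a single mathematical expression."""
    return x1 ** 2 + 2 * x1 * x2 + math.log(x2)

def f_procedure(x1, x2):
    """The same function written as a step-by-step procedure."""
    result = 0.0
    for term in (x1 * x1, 2 * x1 * x2, math.log(x2)):
        result += term
    return result

# A table can only list a finite set of inputs; this grid is an arbitrary choice.
f_table = {(x1, x2): f_expression(x1, x2)
           for x1 in (0, 1, 2)
           for x2 in (1, 2, 3)}

print(f_expression(1, 2), f_procedure(1, 2), f_table[(1, 2)])
```

All three return the same value for the input (1, 2), but only the table is limited to inputs someone has already listed.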

We can idealize the process of building a predictive model as discovering two things:

- which input variables determine the outcome variable

- the deterministic relationship’s functional form.

The first four parts of the predictive-model pattern concern the first of these; the remainder, the second.

In practice we don’t really expect to discover a generating process’ true functional form. Rather, we strive merely to approximate (estimate) it. Thus the famous aphorism, “All models are wrong, but some models are useful.” Many of the design patterns we will study in this blog series capture important methods for constructing useful though approximate models.

Examples

Let’s consider a few examples of functional forms and useful approximations to them. These examples illustrate that a model can approximate a generating process’ functional form, rather than specifying it perfectly or even explicitly, for any of several reasons.

Example 2.1: Approximate Feature Set

Part of specifying a function is specifying its arguments (inputs). If a model only uses some of the inputs required by the generating process’ true functional form, the model can only approximate that form. Variable-selection algorithms and variable-importance metrics help us identify useful inputs. To illustrate, recall our notional electronic medical record (EMR) dataset from the previous post, and the random-forest variable-importance plots for predicting percent body fat (PBF) from the other variables in the EMR. Let’s reproduce that plot here:

Figure 1: Variable Importance for Predicting PBF

Both plots agree that age and body-mass index (BMI) are important predictors (model features). The plots disagree about the importance of gender and weight. If we favor the node-purity measure of variable importance (the one currently displayed by Alteryx’s random-forest tool), we might choose {BMI, weight, age} as our feature set. Suppose now we choose ordinary least-squares (OLS) linear regression as our induction algorithm. The Alteryx dataflow would be as in Figures 2 and 3:

Figure 2: OLS Linear Model

Figure 3: OLS Linear Regression Configuration

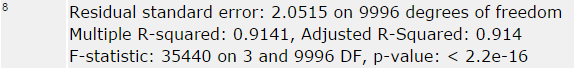

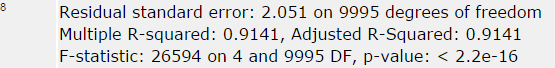

The resulting model-fitness metrics are in row eight of the model output in Figure 4:

Figure 4: Linear-Model Fitness Metrics for Top Three Node-Purity Variables

Let’s change the model to instead use {age, gender, BMI} as our feature set, the top three variables recommended by the mean square error (MSE) variable-importance plot in Figure 1. Figure 5 presents the same model-fitness metrics:

Figure 5: Linear-Model Fitness Metrics for Top Three MSE Variables

Neither model fits the data perfectly; both “explain” about 91% of the variation in PBF, the outcome variable. (That is, R squared is about 0.91. See Wikipedia’s article on R squared to learn more about this measure of model fitness.) But the second feature set explains the data slightly better. If we use the union of the two feature sets above, something curious happens:

Figure 6: Linear-Model Fitness Metrics for Union of Feature Sets

R squared stops improving, even though we added a fourth variable. This result suggests that the fourth variable carries no information not already carried by the top three MSE variables. These three could be the best feature set we could choose from the raw input variables, in the sense that this set seems to be the smallest set that achieves the best possible R squared. (We’ll learn more sophisticated patterns for choosing feature sets in subsequent posts.) To improve R squared further, we would need to take one of three tacks:

- Find some other input variable carrying information about PBF not already carried by the current set of input variables.

- Measure the current set of input variables more precisely.

- Use a model that assumes the generating process has a different functional form.

It’s easy to imagine that behavioral variables measuring level of physical activity and quality of diet could carry additional information about PBF. Let’s focus instead on the examples below, which illustrate the other two approaches.
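The plateau in R squared is easy to reproduce on simulated data. The sketch below does not use the EMR dataset. It invents four features (`x1` to `x4`), where `x4` is nearly a combination of `x1` and `x2`, and fits OLS with a small hand-written solver so that it needs only Python's standard library. All names, coefficients, and noise levels are assumptions made for illustration.

```python
import random

def solve(matrix, rhs):
    """Solve a square linear system by Gauss-Jordan elimination with pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def r_squared(columns, y):
    """Fit OLS with an intercept on the feature columns and return R squared."""
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)  # normal equations: (X'X) beta = X'y
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((t - f) ** 2 for t, f in zip(y, fitted))
    ss_tot = sum((t - mean_y) ** 2 for t in y)
    return 1.0 - ss_res / ss_tot

rng = random.Random(0)
n = 1000
x1 = [rng.gauss(0, 1) for _ in range(n)]
x2 = [rng.gauss(0, 1) for _ in range(n)]
x3 = [rng.gauss(0, 1) for _ in range(n)]
# x4 mostly repeats x1 and x2, so it carries no new information about y.
x4 = [a + b + rng.gauss(0, 0.5) for a, b in zip(x1, x2)]
y = [2 * a - b + 0.5 * c + rng.gauss(0, 0.5) for a, b, c in zip(x1, x2, x3)]

r2_three = r_squared([x1, x2, x3], y)
r2_four = r_squared([x1, x2, x3, x4], y)
gain = r2_four - r2_three
print(round(r2_three, 4), round(r2_four, 4))
```

In-sample R squared can never fall when a feature is added, so the telling sign is that the gain from the fourth feature is negligible.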

Example 2.2: Approximate Feature Measurement

A second reason a model does not perfectly capture a generating process’ functional form is that, even if the model uses all of the right features, the features’ values may not be measured perfectly. Instead the measurements can (and almost always do) include some statistical error. For example, let’s suppose that the EMR data contain no measurement error in the age and gender variables. (Patients report these data correctly, and medical personnel record them correctly.) The BMI variable is already noisy; the simulated EMR data were constructed that way. Let’s make BMI noisier, to illustrate the effect of having a measurement that only approximately measures the true value. We can add uniformly distributed jitter to the BMI values using R’s jitter() function:

ex_2.2_data$bmi <- jitter(x = ex_2.2_data$bmi, amount = 2.0)

Figure 7: Add Uniform Jitter to a Variable in R

If we re-run the linear model, R squared drops to about 0.89. In this fashion noisy feature measurements at least partially obscure a generating process’ signal (functional form) from a model.
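The same effect can be sketched outside Alteryx with simulated data. The Python example below does not use the EMR dataset. It assumes a hypothetical linear generating process for PBF and mimics `jitter(x, amount = 2.0)` by adding uniform noise on [-2, 2] to each BMI value. The coefficients, sample size, and seed are invented for illustration.

```python
import random

def r_squared_simple(x, y):
    """R squared of a one-feature OLS fit, which equals the squared correlation."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)

rng = random.Random(1)
bmi = [rng.uniform(18, 40) for _ in range(2000)]
# Hypothetical generating process: PBF is linear in the true BMI, plus noise.
pbf = [5 + 0.9 * v + rng.gauss(0, 2) for v in bmi]

# Noisier measurement of BMI: add uniform jitter on [-2, 2] to each value.
bmi_jittered = [v + rng.uniform(-2.0, 2.0) for v in bmi]

r2_clean = r_squared_simple(bmi, pbf)
r2_jittered = r_squared_simple(bmi_jittered, pbf)
print(round(r2_clean, 3), round(r2_jittered, 3))
```

The generating process is identical in both fits. Only the quality of the feature measurement differs, and that alone lowers R squared.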

Missing and invalid feature values are the most extreme case of noisy measurement. In this case the (null) measurement has completely obscured the feature’s true value.

Example 2.3: Approximate Functional Form

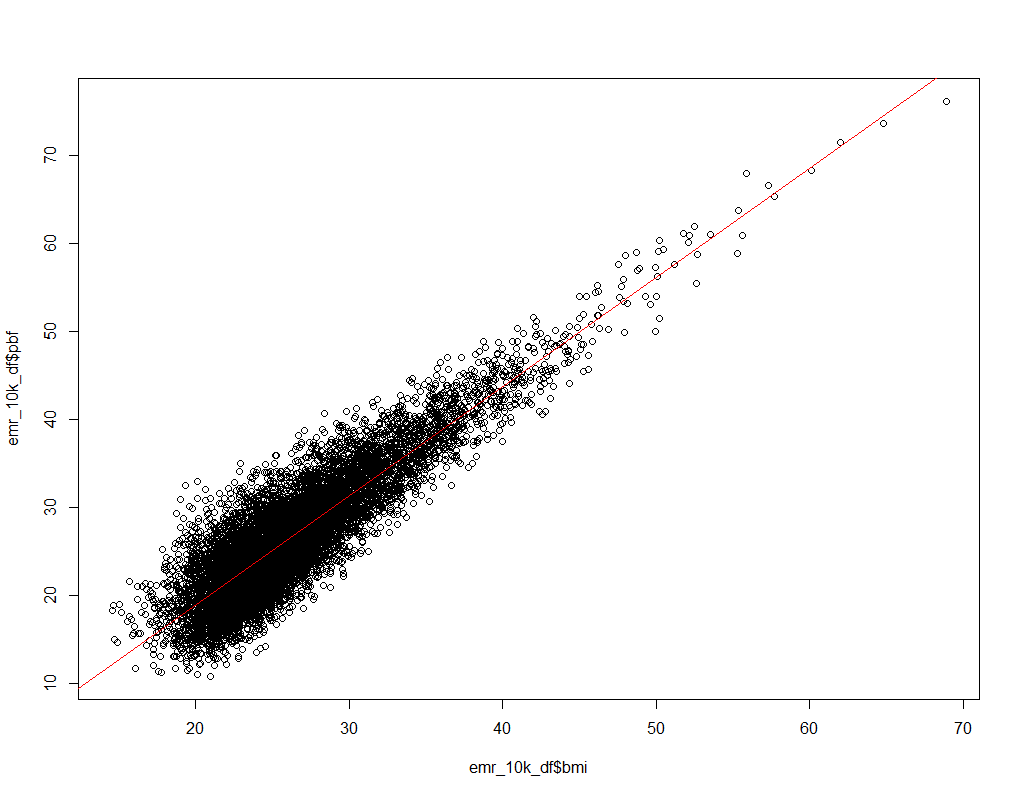

Data scientists often spend a great deal of time exploring input variables visually. One reason for such exploratory analysis is to gain insight about the geometric shape of the generating process’ functional form. The examples above assume that the functional form is linear (or at least that a linear function is a good approximation to the true functional form). Let’s explore the relationship between BMI and PBF to test this assumption. Figure 8 contains the scatter plot and the regression line between the two variables:

Figure 8: Scatterplot and Regression Line for PBF ~ BMI

We would like the scatter plot to center on the regression line. But the scatter plot’s teardrop shape is not quite symmetrical, and not quite centered on the regression line. This suggests that the generating process’ functional form is not perfectly linear. If we regress PBF on several functions of BMI, including $\mathrm{BMI}^2$ and log(BMI), we discover that PBF actually correlates very slightly better with log(BMI) than with BMI, suggesting that the relationship is not perfectly linear:

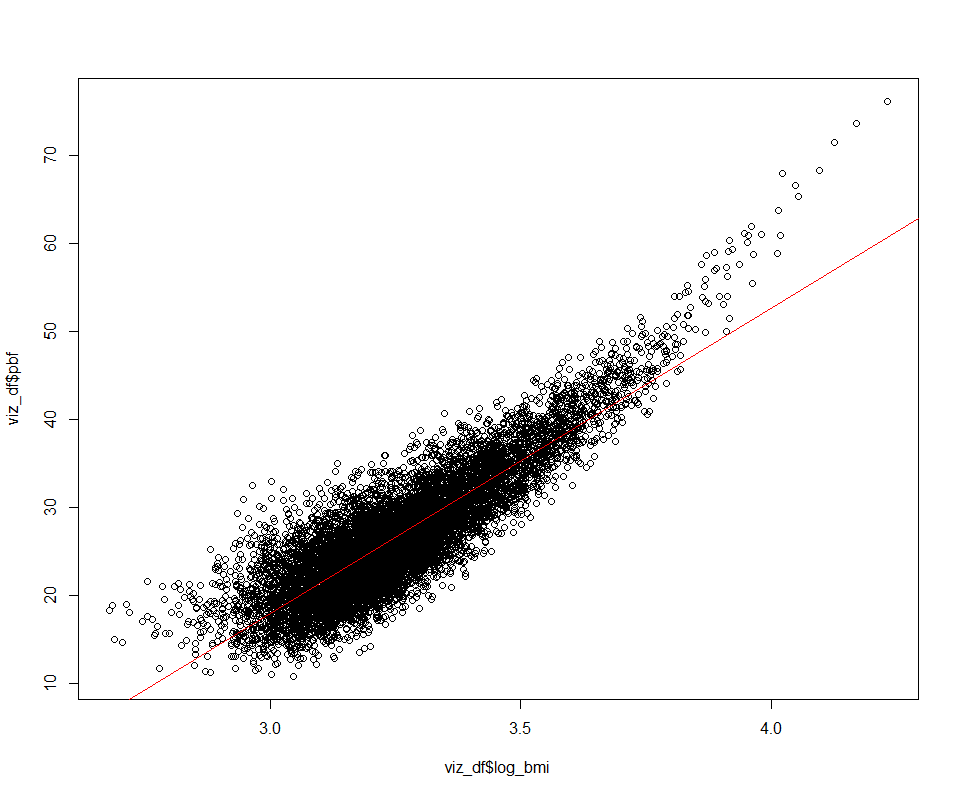

Figure 9: Scatterplot and Regression Line for PBF ~ log(BMI)

Now the relationship has the form $\mathrm{PBF} = \beta_0 + \beta_1 \log(\mathrm{BMI})$, and the regression line divides the data a little better. Interestingly, however, replacing BMI with log(BMI) still does not improve the overall model. (We’ll explore why in a future post.)

The true functional form relating PBF and BMI is neither linear nor logarithmic. (I know because I constructed the function serving as the generating process. Such artificial generating processes are termed simulations.) The important point is not that our model is wrong about the function’s shape, but that a linear model remains a pretty good approximation to the (very slightly nonlinear) functional form.
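Comparing candidate transformations of a feature is simple to sketch in code. The Python example below is a simulation of my own, not the one behind the EMR data. For the sake of a clear result it assumes, unlike the post's simulation, that the hypothetical generating process is logarithmic in BMI, and then checks which of BMI, BMI squared, and log(BMI) correlates best with PBF. All coefficients are invented.

```python
import math
import random

def squared_correlation(x, y):
    """Squared Pearson correlation, the R squared of a one-feature OLS fit."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)

rng = random.Random(2)
bmi = [rng.uniform(18, 40) for _ in range(2000)]
# Assumed generating process (hypothetical): logarithmic in BMI, plus noise.
pbf = [-40 + 20 * math.log(v) + rng.gauss(0, 1.5) for v in bmi]

candidates = {
    "BMI": bmi,
    "BMI^2": [v * v for v in bmi],
    "log(BMI)": [math.log(v) for v in bmi],
}
scores = {name: squared_correlation(values, pbf)
          for name, values in candidates.items()}
best = max(scores, key=scores.get)

for name, score in scores.items():
    print(name, round(score, 4))
print("best:", best)
```

Over a BMI range this narrow the three scores sit close together, which is why a linear model can remain a good approximation even when the true form is curved.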

Example 2.4: Implicit Functional Form

So far we have considered approximating functional forms that you can write using symbols you probably learned in high-school math. Models using these functional forms are parametric. In the previous example, the constants $\beta_0$ and $\beta_1$ are the model’s (intercept and slope) parameters. Sometimes the true functional form has a very complicated shape, one that would be difficult or practically impossible to approximate with a parametric model. The alternative is a non-parametric model. These models still produce functions, because they still predict a single value for each possible set of model-feature values. But they don’t tell us the function’s shape, its mathematical form. That form is merely implicit in the fitted induction algorithm. Many such models are termed machine-learning models, emphasizing that only the machine “learns” the functional form, while the model is a “black box” to its human users. Random forests, support-vector machines, and neural networks are all examples of machine-learning models.

It’s important to realize that using a machine-learning model does not free us from the mathematical considerations of classical parametric models. The theoreticians who discover machine-learning algorithms try to produce the same sorts of guarantees about these algorithms that parametric methods enjoy. Often the math is all the more challenging, because the theoreticians can’t reason directly about a closed-form function’s behavior. These guarantees (when they exist) still concern whether and how quickly the model converges on a single answer as you feed it more and more input data. And it is remarkable that some machine-learning models are far more flexible about the functional forms they can approximate than classical models. In particular, a neural network can be constructed that approximates any given continuous function as closely as we like, at least over a bounded range of inputs. This flexibility is one reason machine-learning models hold such great appeal.

The Alteryx data flow for a random-forest model just replaces the linear-regression tool in Figure 2 with the forest-model tool:

Figure 10: Random-Forest Model

This model only explains 82.64% of the variation in PBF, despite its flexibility. This suggests that the linear shape of our OLS linear regression model is closer to the generating function’s true shape than that of the (non-linear) function induced by a collection of decision trees. All of which brings us to a good model-development rule of thumb. First, fit the best parametric model you can. Only try machine-learning models when no parametric model gives you good-enough results. Parametric models aren’t somehow obsolete just because machine-learning models came along. On the contrary: their explicit functional form makes them more transparent, and often closely approximates the generating process’ true functional form. Use them when you can.

We have used the phrase ‘model fitness’ without explaining it. Let’s fix that. Model fitness means two things:

- The model’s predictions agree with the data we used to develop (train, fit) the model.

- The model’s predictions agree with data not used to train the model. (The model generalizes.)

A model underfits its training data when it fails at the first thing. Usually this occurs because the model’s functional form is less complex than that of the generating process. A model overfits when it fails at the second thing. Usually this occurs because the model’s functional form is too complex: the fitting process has fitted it to measurement noise in the training data, as well as to the generating process’ true functional form.

While people don’t set out deliberately to underfit or overfit a model, several common practices lead to underfitting and overfitting. We’ll review some of these antipatterns in future posts.
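Both failure modes show up in a small simulation. The Python sketch below assumes a hypothetical generating process that is linear plus noise. It compares three models: a constant (too simple), a straight line, and a lookup table that memorizes the training data and answers with the nearest training example (too complex). The model names, sample sizes, and coefficients are invented for illustration.

```python
import random

rng = random.Random(3)

def sample(n):
    """Draw n observations from the assumed process: y = 2x + noise."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2.0 * x + rng.gauss(0, 1.0) for x in xs]
    return xs, ys

train_x, train_y = sample(300)
test_x, test_y = sample(300)

def mse(predict, xs, ys):
    """Mean squared error of a prediction function on a dataset."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

mean_x = sum(train_x) / len(train_x)
mean_y = sum(train_y) / len(train_y)
slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y))
         / sum((x - mean_x) ** 2 for x in train_x))
intercept = mean_y - slope * mean_x

def constant_model(x):
    return mean_y  # ignores x entirely: less complex than the process

def linear_model(x):
    return intercept + slope * x  # matches the assumed functional form

def lookup_model(x):
    # Memorizes the training table and returns the nearest row's outcome,
    # noise included: more complex than the process.
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]

results = {}
for name, model in [("constant", constant_model),
                    ("linear", linear_model),
                    ("lookup", lookup_model)]:
    results[name] = (mse(model, train_x, train_y), mse(model, test_x, test_y))
    print(name, [round(v, 3) for v in results[name]])
```

The constant model fails the first sense of model fitness, with a large error even on its own training data. The lookup table reproduces the training data exactly but fails the second sense, predicting new data worse than the straight line does.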

If we had all of the data that the generating process generated, and we used all of it to train the model, we could always reduce both senses of model fitness to the first. In particular, we could—technically speaking—specify the generating process’ functional form perfectly, by specifying the exact output value for each set of inputs. Such a specification would not be very interesting, because it would not perform a key function of an analytic model, namely summarizing the data. It would just be a table containing all of the data.

We have thus arrived at a very basic data-science design pattern (DSDP) that the above examples illustrate. The pattern is hundreds of years old, and has been expressed in many ways. Centuries ago it appeared as Occam’s razor. Classical statisticians call it the bias-variance tradeoff. We’ll call it the underfitting/overfitting tradeoff: use the fewest input variables you can, and the simplest model form you can, to maximize model fitness. We’ll see this pattern at work when we examine the last three components of a predictive model.
