Data Science

Machine learning & data science for beginners and experts alike.- Community

- :

- Community

- :

- Learn

- :

- Blogs

- :

- Data Science

- :

- Corroboration is Key: Reproducing Goldacre Researc...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Notify Moderator

Dr. Ben Goldacre is a best-selling author, physician, academic, journalist, and this year's Inspire Europe closing keynote speaker. In addition to authoring Bad Science and Bad Pharma, Goldacre has given multiple Ted Talks and actively participates in research at his current posting as a Senior Clinical Research Fellow at the Centre for Evidence-Based Medicine.

Earlier this year, Goldacre served as the lead author of a research paper published in the BMJ exploring reporting compliance to the EU Clinical Trials Register (EUCTR). The paper, titled Compliance with requirement to report results on the EU Clinical Trials Register: cohort study and w..., examines compliance with the European Commission (EC) guideline 2012/c302/03.

The 2012 EC guideline 2012/c 302/03 mandates that all Clinical Trials registered on the EUCTR since 2004 post their results to the European Medicines Agency (EMA) within 12 months of the trial’s completion. Trial reports are then posted publicly on the EUCTR within 15 working days of receipt by the EMA. The final date for compliance with this regulation was December 21st, 2016.

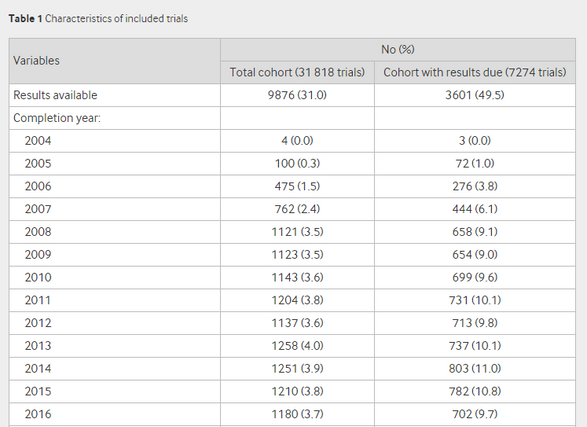

The intent of this legislation is to enable medical professionals and patients to make informed choices about which treatments work best by ensuring all trial results are reported in a common repository. What Goldacre et al. found in their research is that of the 7274 trials listed as completed on the EUCTR, only 3673 (49.5%, confidence interval 48.4% - 50.6%) had reported results, indicating poor compliance with the EC’s requirement to post all trial results within 12 months of completion.

One of the most exciting parts of this paper is the release of a website that gets updated monthly with compliance rates, which can be found at http://eu.trialstracker.net/. The intent of this resource is to improve reporting rates by providing clear and accessible information to trial sponsors.

In the spirit of transparency and reproducibility, in addition to the website and making their paper open access, Goldacre et al. have published their data and analytical code, which can be found here.

The bulk of Goldacre et al’s analysis was performed in Stata, a statistical software used most commonly in economics, sociology, political science, biomedicine, and epidemiology. In the same spirit of reproducibility and democratization of analytics, we have taken Goldacre et al.'s data and replicated some of their analysis in an Alteryx (a more user-friendly software) workflow, and as a Python-based Jupyter Notebook (an open-source software).

Alteryx

After reading in the data to Alteryx, we can start reproducing the analysis performed by Goldacre et al. by following their Stata code. First, we use a Select tool to modify the data types from numeric to string for fields where numbers represent discrete categories. Next, we use a Multi-Field Formula tool to convert the values of fields with boolean data types (e.g., "results" or "multiple sponsors") from 1's and 0's to more descriptive values ("yes" or "no", respectively). Then, we use a Formula tool to convert categorical variables values to with more descriptive strings (for fields with more than two categories), as well as to generate three new columns (features) that are used later in the analysis. The first new column created with the Filter Tool is a categorical country count, the second new column contains the trials' completion year, and the third new column flags if a sponsor name is "bad" (no sponsor name provided or sponser name is unclear). We can then use a Filter tool with the new completion year column to drop the rows with invalid end years (2000, 2018, and 2041).

In their Stata code, after preprocessing their data, Goldacre et. al perform some initial data investigation. These processes are replicated and expanded on in the attached workflow in the Tool Containers labeled in Data Investigation. In Alteryx, we start data investigation using the data profiling feature in the Browse tool.

Replicating the Stata code, also we investigate the number of active trials per each sponsor and explore dividing the sponsors into quartiles and deciles based on this column. For this investigation, we use a Unique tool to make sure each sponsor, and their number of active trials, is only counted once. We explore quantiles and deciles using a couple different methods, including Tile tools and Summarize tools, as well as generate a histogram with the Histogram tool to visualize the distribution of the number of active trials each sponsor has.

From this investigation, we see that the number of active trials feature is very skewed. Of the 4546 Sponsors included in the data set, only 420 have more than 11 active trials.

Also following the Stata code, we investigate date inconsistencies and missing end dates in the original data set. We use a Formula tool to calculate the difference (in days) between maximum and minimum end dates and Summarize tools to calculate summary statistics. We use Filter tools to identify the number of trials with no completion date, given they are not in Phase 1 (which are not required to report results unless it is pediatric), and are marked as all complete.

We find that for trials where there is a difference between the minimum reported end date and the maximum reported end date (n = 2010); the average difference between the two dates is 154.8 days. We also find that excluding trials in Phase 1 (that are not pediatric), there are 3270 trials marked as all completed that are missing a maximum end date.

Data investigation is a critical step in any research process, as it allows you to identify patterns that may be worth investigating, as well as assess the overall quality of the data set you are working with.

After preprocessing and data investigation, the next step in data preparation is to filter the provided data down to the rows that verifiably have results due. We can do this easily in Alteryx with a series of Filter tools. The first Filter tool selects for trials where the trial is completed in all participating countries. The second Filter tool drops rows where the completion date is missing. The third Filter tool drops rows where the completion date is within the past 12 months of the date (12/19/2016). Finally, the fourth Filter tool drops rows that were terminated in phase 1, unless it is a pediatric trial (as specified by the regulation).

In their paper, Goldacre et. al include a really nice Sankey diagram that visualizes how the data is filtered down to the study cohort. Mostly for fun, I decided to recreate it using the R tool in Alteryx (but using Alteryx colors, obviously).

There is a Data Science Blog post on the Alteryx Community called Custom Interactive Visualizations Part 1 that describes the R Code used to do this if you are interested in doing it yourself (the code is also included in the attached workflow in the Tool Container labeled Figure 1 | Sankey Diagram).

Reproducing the headline result of the paper is easily accomplished with a couple of Summarize tools- used to group and count the trials with and without results, and create a total count of all records, an Append Fields tool to join each the total count to the group counts, and a Formula tool to calculate the percentage of trails with or without results, as well as a confidence interval for a population proportion.

In the Browse tool, we can confirm that our results match what is reported in the paper. Hooray for parity!

Using Alteryx, we are able to reproduce most of the data and the tables included in the original paper.

For the metrics reported in Table 3, we created a Logistic Regression model and examined the Report (R) output to examine the significance of certain variables in estimating whether a trial will be reported. Because the configuration for this test is not identical to the Logistic Regression Goldacre et al. performed to generate the metrics in Table 3, we do not see identical results. However, we do see similar patterns to what has been reported in the Goldacre et. al paper. We see high significance (indicated by low P-values) in the number of clinical trials a sponsor has active (split into quartiles), the sponsor status being commercial, if all trials in the given clinical trial are terminated, and a higher number of countries involved in a clinical trial.

Python

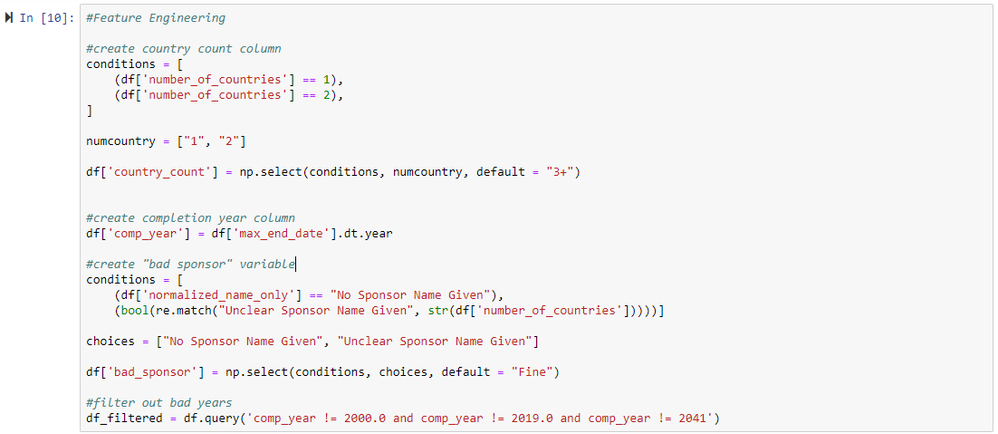

For people interested in exploring this data analysis in an open-source platform, we started the analysis in a Jupyter Notebook. Using Pandas, we load in the Excel file (downloaded from the Github site) and perform the same feature engineering and data filtering.

First, we read in the data and load necessary packages

In the next cell, we create the column for a categorical country count (how many countries are involved in a trial; 1, 2, or 3+), a column for completion year, and a column indicating if a sponsor name is bad.

Once the preprocessing is completed, we can filter the data down to the Study Cohort dataset.

Finally, we can check that our initial numbers match the results reported in the research paper.

This notebook is available for your experimentation and further development on Google Colab here. Don't forget to download the raw data from the Github repository before you start!

Reproducibility, the open sharing of data, and expanding on the research of others are all at the heart of the scientific process, and we live in an exciting time where it is more possible than ever. With tools like Alteryx and Python, we have easy and open-source ways to process data and derive new knowledge.

A geographer by training and a data geek at heart, Sydney joined the Alteryx team as a Customer Support Engineer in 2017. She strongly believes that data and knowledge are most valuable when they can be clearly communicated and understood. She currently manages a team of data scientists that bring new innovations to the Alteryx Platform.

A geographer by training and a data geek at heart, Sydney joined the Alteryx team as a Customer Support Engineer in 2017. She strongly believes that data and knowledge are most valuable when they can be clearly communicated and understood. She currently manages a team of data scientists that bring new innovations to the Alteryx Platform.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.