Data Science

Machine learning & data science for beginners and experts alike.- Community

- :

- Community

- :

- Learn

- :

- Blogs

- :

- Data Science

- :

- Alteryx Data Science Design Patterns: Cross Valid...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Notify Moderator

Alteryx Data Science Design Patterns: Cross Validation

Now that we’ve reviewed what it takes to put together a simple model, let’s spend a couple of posts on two more widely used sets of patterns for making models out of models. In this post we’ll focus on cross validation. We won’t look at patterns specifying how to use cross validation for specific modeling problems. (That comes later.) Rather, we’ll describe a common set of techniques used in such patterns.

Cross validation (CV) is a collection of techniques based on repeatedly partitioning a sample dataset, computing some model-fitness statistic on each partition, and combining these statistics into an overall result. We distinguish CV techniques along two dimensions: how we structure the partitions, and how we use them. Most descriptions of CV address these concerns together. Let’s instead approach them separately. CV is a complex subject, so at the end of this post we’ve provided several widely cited references for further reading.

Partitioning Techniques

In what follows we use the term partition to mean a way of dividing a dataset into subsets, so each data point is in exactly one subset. (The subsets are mutually exclusive and collectively exhaustive.)

Single Two-Way Randomized Split

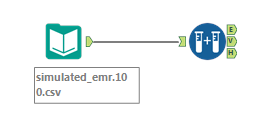

The most basic (and decades old) sample-partitioning technique is simply to split a sample dataset into two parts: the training (or estimation) set and the validation set. The appropriate proportion of data in each set varies with context. CV techniques generally assume that the sample points are independent and identically distributed (iid). Under this assumption one randomly assigns each data point to the training set according to a Bernoulli trial having probability of success equal to the fraction of sample data you want to assign to the training set. (The remaining data points go in the validation set.) A common heuristic is to set the fraction to 70%. In Alteryx we use the Create Samples tool to create a single two-way randomized split:

Single Three-Way Randomized Split

A modification of the single two-way randomized split is to create a single randomized partition of the sample dataset into three parts: training and validation sets (as before), plus a testing (or holdout) set. One heuristic for the proportions of these sets is 50% for the training set, and 25% for each of the other two. You can also use the Create Samples tool to create a single three-way randomized split.

K Fold

K-fold splitting is the most common splitting technique. One randomly partitions the sample dataset into K folds (subsets), where the probability of assignment to each fold is 1/K, for some counting number K. The result is K partitions of the data. In the ith partition, fold i is treated as the validation set, and the union of the other folds is the training set. Common values of K are 5 and 10. Figure 2 below illustrates the third partition of five-fold splitting, in which the training set includes folds 1-2 and 4-5, and fold 3 is the validation set.

|

fold 1 |

fold 2 |

fold 3 |

fold 4 |

fold 5 |

Figure 2: Third Partition of Five-Fold Splitting

Leave P Out

An important variant of k-fold splitting is leave-p-out splitting. We choose a counting number p (less than the sample size n) to be the number of data points in each fold, still assigning points to folds randomly. Then the number of folds (and partitions) is the binomial coefficient of choosing p out of n. This number grows quickly with n and p, making (exhaustive) leave-p-out splitting computationally prohibitive for many problems. One can instead choose a proper subset of the set of possible folds in such cases; see below.

A historically important special case of k-fold splitting is leave-one-out splitting, in which p is one, so that there are n partitions, each having a validation set containing a single data point. Leave-one-out CV has some undesirable properties that motivated the search for alternative CV methods. As a result, nowadays leave-one-out CV is sometimes considered an antipattern.

Balanced Incomplete

Balanced incomplete splitting is leave-p-out splitting, but only selecting a balanced subset of the n-choose-p possible partitions. In balanced subsetting, every sample data point appears in the same number of partitions, and every pair of data points also appears in the same number of partitions.

Monte Carlo

Monte Carlo splitting is leave-p-out splitting, but choosing the validation set randomly, with or without replacement, for each partition. The with-replacement case violates the usual practice of partitioning the data, but is still considered cross validation. The without-replacement case amounts to repeating the single two-way randomized split technique, which is the splitting technique used in repeated learning testing.

CV Use Cases

CV’s fundamental purpose is estimating a model’s out-of-sample fitness. This estimate is often used to tune or select a model. We discuss these uses below. There are CV procedures for model combination as well. We won’t review these in this post, but may survey them in our next post (on methods for combining models).

Fitness Estimation

The most naïve way to estimate how well a model fits its data population is to fit the model to an entire sample, and then test the fitted model on the same sample. The resulting model-fitness estimate is termed the re-substitution estimate. It almost always overestimates out-of-sample fitness (that is, it underestimates out-of-sample error). This antipattern is called re-substitution.

Simple Two-Way Validation

The most basic improvement on re-substitution is simple two-way validation:

- Generate a single two-way randomized split.

- Fit the model to the training set. (Fitting the model must include all aspects of fitting, including variable selection.)

- Estimate the model’s out-of-sample fitness on the validation set.

The resulting estimate is termed the validation estimate of model fitness.

Simple Three-Way Validation

When we have enough data, and we’re choosing among several possible models, an alternative is simple three-way validation:

- Generate a single three-way randomized split.

- Fit the model on the training set.

- Estimate each candidate model’s fitness on the validation set.

- Measure the selected model’s out-of-sample fitness on the test set.

Simple Cross Validation

Simple cross validation repeats simple two-way validation over a set of partitions:

- Fit the model to the entire sample dataset. (This is the model whose out-of-sample fitness the CV procedure estimates. Let’s call this the overall model.)

- Generate a set of partitions of the sample dataset.

- Perform simple two-way validation on each partition. That is, (re)fit the model to each partition’s training set, and estimate the (re)fitted model’s fitness on the partition’s validation set. (Normally, the only purpose of the fitting within this step is to produce the step’s fitness estimate.)

- Combine the partitions’ fitness estimates into an overall estimate of out-of-sample fitness for the overall model. (Most often we average the partitions’ fitness estimates to obtain the overall fitness estimate.)

The resulting CV estimate of the overall model’s fitness is usually a substantial improvement over the re-substitution and validation estimates. The trick is to choose partitioning and estimate-combination methods appropriate to the model type.

Alteryx provides a K-fold CV tool on the Gallery. Alteryx 11 is targeted to include K-fold CV as an option built into some of the predictive tools.

Second-Order Parameter Tuning

The Multi-Level Tuning Problem

CV is often used to tune models containing second-order parameters (sometimes termed free parameters or hyper-parameters). Such models are termed multi-level or hierarchical models. One can view the distinction between first-order and second-order parameters in several ways.

- An elementary, classical model (such as ordinary least-squares linear regression) contains only first-order parameters. We modernize the first-order model by adding to it second-order features such as regularization or distributions on the first-order parameters. The parameters required by these second-order features are second-order parameters.

- At least some second-order parameters specify or influence model structure. Examples include the depth of a tree, the number of layers in a neural network, the number of clusters in a clustering model, and regularization parameters (which often influence variable selection).

- We learn the values of a model’s first-order parameters directly, by fitting the model to a sample dataset. In contrast, we learn the value of a model’s second-order parameters indirectly, by repeatedly fitting the first-order model using different second-order parameter values, and choosing the second-order values that produce the best overall fit. In other words, we wrap the first-order optimization process for fitting the first-order model within a separate second-order optimization procedure for fitting the second-order parameters. (Such procedures are termed wrapper procedures.)

There is no mathematical requirement that we use separate procedures to solve the first-order and second-order fitting problems. We could try to choose all (first- and second-order) parameter values using a single monolithic optimization algorithm. Rather, we distinguish first- and second-order parameters for analytical convenience, because the first- and second-order models’ formal structures have well-known, efficient fitting algorithms.

Basic Multi-Level CV Tuning

In multi-level tuning the CV estimate of the first-order model’s fitness is frequently used as the wrapper optimization algorithm’s objective function. The basic multi-level CV tuning algorithm is,

- Choose a starting set of second-order parameter values.

- Use simple CV to fit the first-order model using the current second-order parameter values, and to compute the fitted model’s CV fitness estimate.

- If the fitness estimate at step 2 is optimal (so far), record the second-order parameter values and the fitness estimate.

- If the second-order optimization algorithm’s stopping condition is satisfied, go to step 7.

- Update the current second-order parameters’ values using the second-order optimization algorithm’s search strategy.

- Go to step 2.

- Fit the first-order model to the entire dataset, using the optimal second-order parameter values chosen in steps 1-6.

It is somewhat counterintuitive that the above procedure fits the second-order parameters (in steps 1-6) before fitting the first-order parameters (at step 7). Note too that the procedure’s overall CV estimate is the CV estimate computed at step 2 and recorded at step 3, for the optimal second-order parameter set.

Grid search is a very common second-order optimization algorithm. It discretizes the space of second-order parameters and iterates over the grid, computing the first-order CV estimate at each point in the grid. It stops only once the entire grid of possible second-order parameter sets has been tried.

Basic multi-level CV tuning is widely used, but the CV estimate it produces can be optimistic (wildly optimistic, sometimes!), because it it lacks a way of measuring model fitness that is independent of the fitting procedure. We can improve on the procedure’s overall fitness estimate by providing an independent assessment of model fitness.

Nested CV

Nested CV is one way to provide an independent assessment of overall fitness of a multi-level model tuned with basic multi-level CV tuning. Essentially one uses two CV procedures. The inner CV performs basic multi-level CV tuning to fit both first- and second-order parameters. The outer CV estimates the fitted model’s out-of-sample fitness, again using basic multi-level CV tuning. Here’s pseudocode:

Generate a set of partitions {Pi, 1 ≤ i ≤ Kouter) of the sample dataset for the second-order (outer) CV. (Let Ti be the training set of the ith partition, and Vi be the validation set.)

for 1 ≤ i ≤ Kouter loop

Perform basic multi-level CV tuning on Ti, yielding fitted model Mi.

Estimate Mi’s fitness Fi on Vi.

end outer loop

Fit the overall model M to the entire sample dataset, again using basic multi-level CV tuning.

Combine the estimates Fi to yield the overall estimate F of M’s out-of-sample fitness.

Note that the partitioning strategies for the two CVs can be different. For example, Varma and Simon (2006) use leave-one-out CV on 40 data points in the outer CV loop, but 10-fold CV for the basic multi-level CV tuning.

Model Selection

Second-order parameter tuning is sometimes termed model selection, because each iteration of the outer loop in the two-loop fitting process estimates model fitness for a given set of structural decisions defining a specific first-order model. We can extend this notion of model selection to choosing across different classes of models, still using CV to estimate model fitness. An additional second-order parameter can determine the model class. The only constraint is that all model types must produce the same model-fitness metric. For example, to select a classification model, we could compute the misclassification rate regardless of model type. Here again CV’s essential function is to estimate out-of-sample fitness.

References

Here are some useful resources for studying CV more deeply (in no particular order):

S. Arlot and A. Celisse, “Cross-Validation Procedures for Model Selection”

Ping Zhang, “Model Selection via Multifold Cross Validation”

Trevor Hastie et/al, The Elements of Statistical Learning, 2nd Ed.

Ron Kohavi, “A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection”

Jun Shao, “Linear Model Selection by Cross-Validation”

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.