Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


I've seen this question before and have run into it myself. I'd like to see a new tool that would allow a developer (of a workflow) to choose a path of logic based upon criteria known only during the execution of a module.

If LEFT INPUT Count of records < 10,000 THEN Path1 (e.g. use a calgary join)

ELSE Path 2 (e.g. use a standard join)

endif

Thanks,

Mark
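The branching rule in the post above can be sketched in Python. This is only an illustration of choosing a path from a record count known at run time; `calgary_join` and `standard_join` are hypothetical stand-ins, not Alteryx tools or APIs, and the 10,000 threshold is the one from the post.

```python
from typing import Sequence


def calgary_join(left: Sequence[dict], right: Sequence[dict]) -> tuple:
    # Hypothetical stand-in for "Path 1"; only reports which path ran.
    return ("calgary", len(left))


def standard_join(left: Sequence[dict], right: Sequence[dict]) -> tuple:
    # Hypothetical stand-in for "Path 2".
    return ("standard", len(left))


def run_join(left: Sequence[dict], right: Sequence[dict], threshold: int = 10_000) -> tuple:
    # The record count is only known once the data has arrived,
    # so the path is chosen at run time rather than at design time.
    path = calgary_join if len(left) < threshold else standard_join
    return path(left, right)
```

Calling `run_join` with a 5-record left input takes the first path; a left input of exactly 10,000 records takes the second, because the comparison is a strict `<`.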

Labels: API SDK, Category Developer, Engine, Runtime

Please add official support for newer versions of Microsoft SQL Server and the related drivers.

According to the data sources article for Microsoft SQL Server (https://help.alteryx.com/current/DataSources/SQLServer.htm), and validation via a support ticket, only the following products have been tested and validated with Alteryx Designer/Server:

Microsoft SQL Server

Validated On: 2008, 2012, 2014, and 2016.

- No R versions are mentioned (2008 R2, for instance)

- SQL Server 2017, which was released in October of 2017, is notably missing from the list.

- SQL Server 2019, while fairly new (~6 months old), is also missing

This is one of the most popular data sources, and the lack of support for newer versions (especially a 2+ year old product like SQL Server 2017) is hard to fathom.

ODBC Driver for SQL Server/SQL Server Native Client

Validated on ODBC Driver: 11, 13, 13.1

Validated on SQL Server Native Client: 10, 11

- ODBC Driver 17+ is not mentioned, even though it was released in February of 2018. https://docs.microsoft.com/en-us/sql/connect/odbc/windows/release-notes-odbc-sql-server-windows?view...

- SQL Server Native Client is deprecated. It is being replaced by Microsoft OLE DB Driver for SQL Server. However, there is not a mention of Microsoft OLE DB Driver for SQL Server. The latest version of this is 18.3.0. https://docs.microsoft.com/en-us/sql/connect/oledb/release-notes-for-oledb-driver-for-sql-server?vie...

When developing and/or troubleshooting workflows, I frequently disable the outputs using the checkbox in the Runtime configuration settings to speed up the workflow and prevent sending emails and/or overwriting data in the output sources... however, 9/10 times I forget to turn off this checkbox when I save my workflow back up to the Gallery. This results in countless emails from users to the tune of "I ran the workflow successfully, but there was no output?" 🙂

Would love love love to see some sort of warning notification (similar to the ones that are already shown for data sources etc.) when saving to the Gallery if the "Disable All Tools that Write Output" option is selected in the Runtime settings.

Thank you!!

NJ

Limit conversion warning allows for a minimum of 1 message. Can we set the minimum to 0 to completely ignore the message?

Perhaps we can allow warning messages a similar function as ERROR messages and allow the designer to Ignore, Warn or Cancel?

ConvError: Imputation (441): Tool #104: No demand: 0.200000000000031 had more precision than a double. Some precision was lost.

ConvError: Summarize (456): Data: 0.360000000004675 had more precision than a double. Some precision was lost.

End: Designer x64: Finished running FP Model - Marquee Crew v3.yxmd in 32.3 seconds with 16 field conversion errors and 4 warnings

Thanks,

Mark

Labels: Engine, Feature Request, Runtime

In some of our larger workflows it's sometimes tedious to run the whole workflow in order to see some data after adding something near the beginning. Running it and stopping it as soon as the tool gets a green border is sometimes an option.

It would be convenient to have an option in the context menu to run a workflow only up to a specific tool.

In effect, only this specific tool has an output visible for inspection and only the streams necessary for this tool have been run - everything else is ignored and I'm fine to not see data for the other tools.

This would make developing small parts of a larger workflow much faster and more convenient.

Regards

Christopher

PS: Yes, I can put everything else in a container and deactivate it. But a straightforward way without turning containers on and off would be preferable in my opinion. (I think KNIME has something similar.)
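"Only the streams necessary for this tool" amounts to finding every upstream ancestor of the chosen tool in the workflow graph. A minimal sketch, assuming the workflow can be described as a list of `(source, destination)` connections; the tool names and the `upstream_tools` helper are made up for illustration and say nothing about how the engine actually schedules tools.

```python
from collections import deque


def upstream_tools(edges, target):
    """Return the target tool plus every tool it depends on, directly or indirectly."""
    parents = {}
    for src, dst in edges:
        parents.setdefault(dst, set()).add(src)

    needed = {target}
    queue = deque([target])
    while queue:
        tool = queue.popleft()
        for parent in parents.get(tool, ()):
            if parent not in needed:  # visit each tool once
                needed.add(parent)
                queue.append(parent)
    return needed


# Hypothetical workflow: two inputs feeding a join, with a side branch.
EDGES = [
    ("Input A", "Filter"),
    ("Filter", "Join"),
    ("Input B", "Join"),
    ("Join", "Output"),
    ("Filter", "Browse"),
]
```

With these connections, `upstream_tools(EDGES, "Filter")` contains only `Input A` and `Filter`, so `Input B`, `Join`, `Output`, and `Browse` would not need to run.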

Labels: Engine, Runtime

We are working on building out training content in a story mode and would like to have short snippets playing in a loop, embedded in the workflow, for people to see. Currently you can add a .gif to a comment background; it shows as a still image on the workflow itself but plays as a gif in the configuration display. The interesting part is that while the workflow is running the .gif plays, and then it pauses once the workflow has completed!

Hey @apolly

You and the team have been doing a lot of innovative changes to the results window for data.

Could I ask for an uplift to the results window for Workflow Messages?

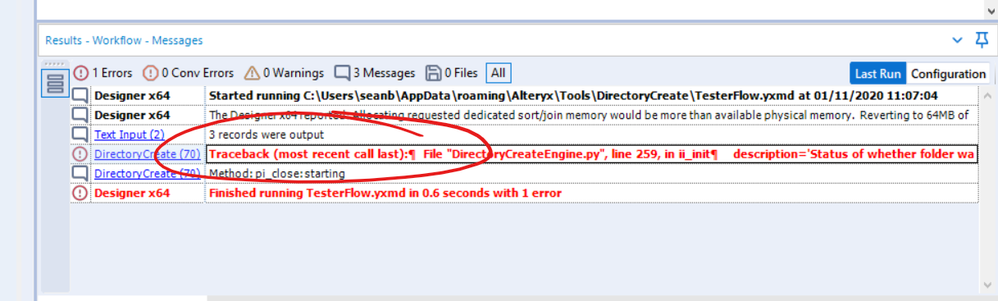

Summary: Error messages in the workflow results window cannot be fully viewed - have to be copied into Notepad and then reformatted before you can read.

Request: Allow user to double-click to see full readable version of a workflow result message

Detail:

If you have an error message in a workflow result - it gives you a message that is often longer than the window allows and there is no cell-viewer option

As a result, there is really no way to get to the important part of the error message to understand what's going on, other than to use Notepad

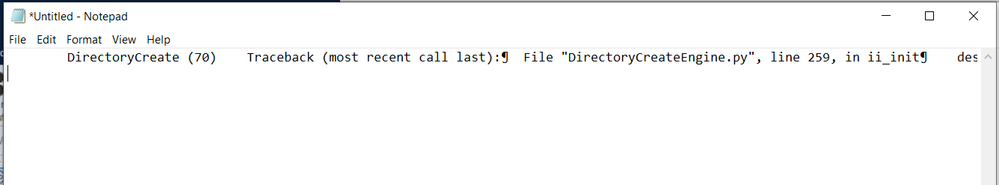

Step 1: Copy into Notepad

(you can see the end of line characters being misunderstood)

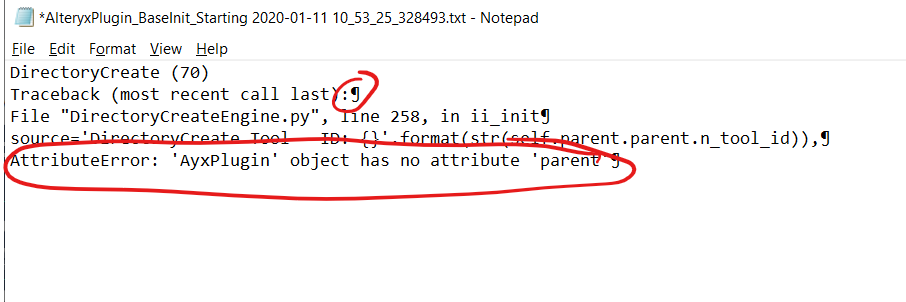

Step 2: Manually clean this up by breaking on the line breaks

And now you can see the important part of the result message..

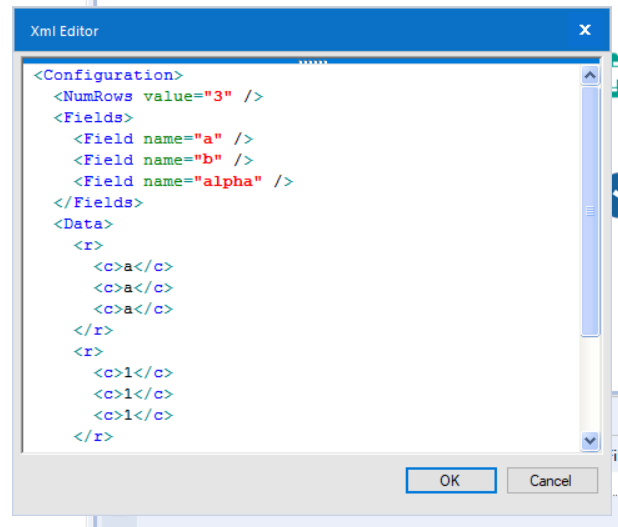

Could we instead add the ability to double-click on a result message in the results window and bring up a modal window that formats the error message for you (similar to the modal window used for XML editing of a tool)? That would eliminate this entire wasteful effort of trying to read an error message and having to use Notepad.
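As a stopgap, the manual clean-up in Step 2 (breaking on the line breaks) can be scripted. A rough sketch, assuming the copied message mixes `\r\n`, lone `\n`, and lone `\r` line endings; `split_message_lines` is a hypothetical helper name, not part of Designer.

```python
import re


def split_message_lines(message: str) -> list[str]:
    # Treat \r\n, a lone \n, and a lone \r all as line breaks,
    # then drop lines that are empty or whitespace-only.
    return [line for line in re.split(r"\r\n|\n|\r", message) if line.strip()]
```

Joining the result with `"\n".join(...)` gives a version of the message that reads one statement per line.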

Labels: Engine, General, Runtime, User Experience Design

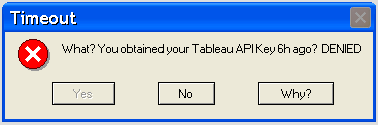

The current version of the Publish to Tableau macro retrieves an API key at the start of the workflow run. Oftentimes the workflow may take several hours to run before it's ready to write to Tableau, by which time the API key may have expired. (I think the default Tableau Server setting times out after 2 hours.) It's one of those soul-crushing "I should've forked the output!" moments.

Sample Log Error -

- Tool #46: TableauServer.UploadChunks (238): Iteration #1: Tool #19: Tool #4: Tableau Server API Request (Upload file) Error Code 401002: Unauthorized Access -- Invalid authentication credentials were provided.

- Tool #46: Tool #252: Tool #4: Tableau Server API Request (Publish file) Error Code 401002: Unauthorized Access -- Invalid authentication credentials were provided.

The idea would be to change when the macro obtains the API key: instead of obtaining it when the workflow is initiated, obtain it just before the workflow is ready to write to Tableau, avoiding these timeouts.

(If you're having this issue in the meantime you can have your Tableau server admin up the timeout)
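The change requested above is essentially lazy credential acquisition: sign in only when the first upload is about to happen, and sign in again if the credential is older than the server timeout. A minimal sketch, where `sign_in` is a hypothetical callable supplied by the caller (it is not the macro's real interface, and no Tableau API is called here):

```python
import time


class LazyToken:
    """Fetch a credential on first use and refresh it once it is too old."""

    def __init__(self, sign_in, max_age_seconds):
        self._sign_in = sign_in          # hypothetical sign-in function
        self._max_age = max_age_seconds  # e.g. the server's session timeout
        self._token = None
        self._issued_at = 0.0

    def get(self, now=None):
        # `now` can be injected for testing; defaults to a monotonic clock.
        now = time.monotonic() if now is None else now
        if self._token is None or now - self._issued_at >= self._max_age:
            self._token = self._sign_in()
            self._issued_at = now
        return self._token
```

Nothing is requested when the object is created, so hours of upstream processing do not count against the credential's lifetime; the first `get()` call, made just before writing, performs the sign-in.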

Preface: I have only used the in-DB tools with Teradata so I am unsure if this applies to other supported databases.

When building a fairly sophisticated workflow using in-DB tools, sometimes the workflow may fail due to the underlying queries running up against CPU / memory limits. This is most common when doing several joins back to back, as Alteryx sends this as one big query with various nested subqueries. When working with datasets in the hundreds of millions and billions of records, this can be extremely taxing for the DB to run as one huge query. (It is possible to get around this by using an in-DB write to a temporary table as an intermediate step in the workflow.)

When a routine does hit an in-DB resource limit and the DB kills the query, it causes Alteryx to immediately fail the workflow run. Any "temporary" tables Alteryx creates are in reality perm tables that Alteryx usually just drops at the end of a successful run. If the run does not end successfully due to hitting a resource limit, these "temporary" (perm) tables are not dropped. I only noticed this after building out a workflow and running up against a few resource limits; I then started getting database out-of-space errors. Upon looking into it, I found all the previously created "temporary" tables were still there and taking up many TBs of space.

My proposed solution is for Alteryx's in-DB tools to drop any "temporary" tables it has created when a run ends - regardless of if the entire module finished successfully.

Thanks,

Ryan
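The behaviour proposed above (drop the "temporary" tables whether or not the run succeeds) is the classic try/finally clean-up pattern. A small sketch using Python's built-in `sqlite3` as a stand-in database; the `scratch_tables` helper and table names are invented for illustration, and this does not reflect how the in-DB tools or Teradata actually manage tables.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def scratch_tables(conn):
    """Track tables created during a run and drop them when the run ends."""
    created = []

    def create(name, select_sql):
        # Materialize an intermediate result as a regular (permanent) table.
        conn.execute(f'CREATE TABLE "{name}" AS {select_sql}')
        created.append(name)

    try:
        yield create
    finally:
        # Runs on success and on failure alike.
        for name in reversed(created):
            conn.execute(f'DROP TABLE IF EXISTS "{name}"')
```

Used as `with scratch_tables(conn) as create: ...`, any exception raised inside the block (for example, a query killed for exceeding a resource limit) still triggers the `DROP TABLE` statements before the error propagates.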

It would be cool to have annotations that dynamically update. E.g. a record count would be displayed in the annotation and update after a run if changes occurred.

Labels: Engine, Runtime

With the release of 2018.3, cache has become an ad hoc task. With complex workflows and multiple inputs, we need a method to cache and save the cache selection by tool. Once the workflow runs after opening, the cache would be saved at the latest tool downstream.

This way we don't have to create ad hoc cache steps and run the workflow 2X before realizing the time-saving features of cache.

This would work similar to the cache feature in 11.0 but with enhanced functionality...the best of the old cache with the new cache intent.

Embed the cache option into tools.

Thanks!

I understand that Server and Designer + Scheduler versions have the option to "cancel workflows running longer than X".

I'd like to see that functionality in the desktop edition as well.

When using the R Tool for simple tasks (like renaming files, for example) in an iterative macro, there's a delay on every iteration as the R Tool starts up R.

The following are repeated on every iteration (with delays):

Can we look at an option to forward scan an Alteryx job to look for R Tools, then load R into process once to eliminate these delays on every iteration?

Labels: Engine, Runtime

It would be helpful to have the Read Uncommitted listed as a global runtime setting.

Most of the workflows I design need this set, so rather than risk forgetting to click this option on one of my inputs it would be beneficial as a global setting.

For example: the user would be able to set specific inputs according to their need and the check box on the global runtime setting would remain unchecked.

However, if the user checked the box on the global runtime setting for Read Uncommitted, then the workflow would automatically use an uncommitted read on all of the inputs.

When the user unchecks the global runtime setting for Read Uncommitted, then only the inputs that were set up with this option will remain set up with the read uncommitted.
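The three cases described above reduce to a logical OR between the global setting and each input's own setting. A tiny sketch of that rule; the function and input names are hypothetical and only illustrate the requested behaviour.

```python
def resolve_read_uncommitted(global_flag: bool, per_input: dict[str, bool]) -> dict[str, bool]:
    # When the global flag is on, every input reads uncommitted;
    # when it is off, each input keeps whatever it was configured with.
    return {name: global_flag or flag for name, flag in per_input.items()}
```

For example, with inputs `{"orders": True, "customers": False}`, the global flag turned off leaves the settings unchanged, while turning it on yields `True` for both inputs.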

The new Cache tool does not function if the 'Disable All Tools that Write Output' option is selected in the workflow runtime properties. There is no indication of why the cache is not working and this may be confusing because many users won't associate the 'cache' as a normal output. The interface should be changed to make this more clear or the cache function configured to ignore this workflow runtime option.

Labels: Engine, Runtime

Currently if a user has multiple connections in a workflow that connect to a password-protected source, and that password changes, the user will be locked out of their account by login attempts as Alteryx attempts to validate the connection.

Today I had to manually edit the XML of another user's workflow in order to remove references to their server, so they could correct their password without locking the account for a third time today.

While I understand that aliases are a good workaround to this problem, the issue still has potential to occur.

Having an option to load a workflow in a "SECURE" or "SAFE" mode, where it would not validate a query until runtime or until the metadata is refreshed manually, would help to significantly reduce lockouts, which would improve the usability of the tool.

Hi all,

I was wondering if any of you have achieved a "transaction rollback" type of feature in Alteryx.

The use case is as follows:

If a workflow that writes data into multiple outputs (which could be relational tables or files) fails halfway through writing to one of the outputs, is there an option to roll back the partially loaded data and reset the process to its original state (i.e., before the execution of the workflow)? Or does this need to be done programmatically?

There is a workflow level property - "Cancel Running Workflow on Error". This stops the execution but doesn't perform rollback.

Thanks,

Sandeep.
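For outputs that all live in one relational database, the programmatic route usually means wrapping every write in a single transaction. A minimal sketch with Python's built-in `sqlite3`; the `write_all_or_nothing` helper is hypothetical, and file outputs or writes spread across several databases would need a different mechanism (such as staging and swap), which this does not cover.

```python
import sqlite3


def write_all_or_nothing(conn, writes):
    """Apply every (sql, rows) pair in one transaction; undo all on any failure."""
    try:
        # Using the connection as a context manager commits on success
        # and rolls back if an exception escapes the block.
        with conn:
            for sql, rows in writes:
                conn.executemany(sql, rows)
    except sqlite3.Error:
        return False
    return True
```

If the second write fails partway through (for instance on a duplicate key), the rows already inserted by the first write are rolled back as well, leaving both tables as they were before the call.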

It's often challenging to estimate the run time of various workflows, AND a run time of over 3 hours can often be indicative of errors in the workflow. Could we have an estimated runtime calculator? This would also help with timing when pushing against deadlines.

Fingers crossed and thanks!

Labels: Engine, Runtime

Hi All,

This is a fairly straightforward request. I'd like to be able to pass through interface tool values to the workflow events the same way I would pass it through to a tool in the workflow (%Question.<tool name>%). One use-case for this is that we are calling a workflow and passing in an ID, and if this workflow fails, I'd like to trigger an event that will call back to the application and say this specific workflow for this ID failed.

The temporary solution is to have the workflow write to a temp file and have the event reference that temp file, but this is clunky and risky if there are parallel runs occurring.

Best,

devKev

Countless times I've been asked by management how long a process will take to run, and I really can't say beyond an educated guess (using input file size and complexity of workflow). Yet, when downloading files off the internet or moving files around in a network, Microsoft will give an estimated time of completion (e.g. 10 minutes remaining till files are downloaded). It would be so great if Alteryx would show something similar with regard to how long a workflow will take to finish running. Not sure if you can create an algorithm based on the number of tools, import file size, network connection etc. to give an ETA on when a workflow may finish running, but it would be super helpful for me when working on high-priority projects so I can communicate with the business side.

Thanks!
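The file-download style of estimate mentioned above is typically a linear extrapolation from progress so far. A rough sketch, assuming the total record count is known up front and throughput stays roughly constant (both are simplifying assumptions that a real workflow with joins and multiple streams may not satisfy); the function name is hypothetical.

```python
def estimate_remaining_seconds(elapsed_seconds, records_done, records_total):
    """Linear ETA: assume the remaining records take as long per record as those done."""
    if records_done <= 0 or elapsed_seconds <= 0:
        return None  # no progress yet, so nothing to extrapolate from
    remaining = max(records_total - records_done, 0)
    return elapsed_seconds * remaining / records_done
```

For example, if 250,000 of 1,000,000 records were processed in the first 60 seconds, the estimate for the remaining 750,000 is about 180 seconds.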

Labels: Engine, Runtime
