Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
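The two data-preparation requests above can be sketched in base R. This is only an illustration of the techniques, not how the Image Recognition Tool works internally: `balanced_split()` and `gray_to_rgb()` are hypothetical helpers, and images are assumed to be already loaded as numeric matrices with their labels in a character vector.

```r
# Hypothetical helper: downsample every label to the size of the smallest class,
# then split each label's images into training and validation indices.
balanced_split <- function(labels, train_frac = 0.8, seed = 42) {
  set.seed(seed)
  n_per_label <- min(table(labels))               # size of the smallest class
  n_train <- floor(train_frac * n_per_label)      # same training count for every label
  train <- integer(0)
  valid <- integer(0)
  for (idx in split(seq_along(labels), labels)) { # row indices grouped by label
    picked <- idx[sample.int(length(idx), n_per_label)]
    is_train <- seq_len(n_per_label) <= n_train
    train <- c(train, picked[is_train])
    valid <- c(valid, picked[!is_train])
  }
  list(train = sort(train), valid = sort(valid))
}

# Hypothetical helper: turn a single-channel (grayscale) image such as an MNIST digit
# into a 3-channel RGB array by copying the gray values into the R, G and B channels.
gray_to_rgb <- function(img) {
  stopifnot(length(dim(img)) == 2)
  array(img, dim = c(dim(img), 3))  # the gray values are recycled once per channel
}

labels <- c(rep("cat", 10), rep("dog", 4), rep("bird", 6))
s <- balanced_split(labels, train_frac = 0.75)
table(labels[s$train])  # 3 images per label
```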

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi all!

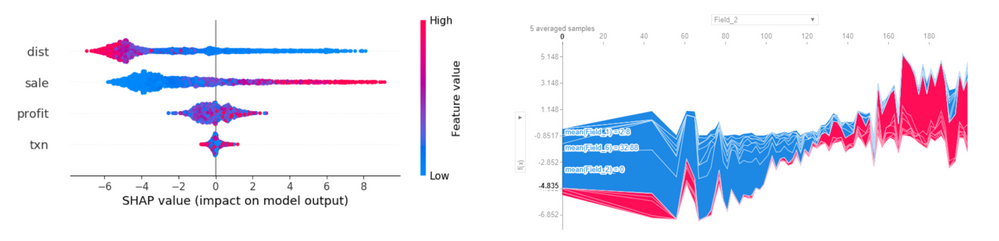

Based on the title, here's some background information: Shapley values (SHAP).

Currently, one way to do this is to use the Python tool to write the script and install the package. However, this requires running Alteryx as an administrator in order to load, test, and run the script successfully. The problem is that many companies do not grant their Alteryx teams administrator privileges, because running Alteryx as administrator requires entering admin credentials every time it is reopened.

I am aware that there is a macro covering SHAP, but I recently tested it and it did not work as intended. It also only supports non-categorical (numeric) determinants, so categorical variables must first be converted into numeric or binary categories.

It would be nice to have a built-in Alteryx ML tool that performs this analysis and produces a heat-map-like graph showcasing the values, like the example image in this post.
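For linear models, Shapley values can be computed exactly in base R without installing any extra package. The sketch below is a minimal illustration that assumes independent features (it is not what the SHAP macro or any Alteryx tool does) and uses the built-in `mtcars` data as a stand-in. It also sums the dummy-encoded columns of a categorical predictor back into one value per original variable, so categorical fields need no manual conversion.

```r
# Minimal sketch: exact SHAP values for a linear model, assuming independent features.
# For f(x) = b0 + sum_j b_j * x_j, feature j's SHAP value is b_j * (x_j - mean(x_j)).
cars <- mtcars
cars$cyl <- factor(cars$cyl)                   # a categorical predictor

fit <- lm(mpg ~ wt + hp + cyl, data = cars)

X <- model.matrix(fit)                         # dummy-encoded design matrix, incl. intercept
centered <- sweep(X, 2, colMeans(X))           # x_j - mean(x_j) for every column
contrib <- sweep(centered, 2, coef(fit), `*`)  # per-row, per-column contributions

# Sum the dummy columns of each factor back into one value per original variable,
# using the "assign" attribute that maps design-matrix columns to model terms.
assign_idx <- attr(X, "assign")
term_labels <- attr(terms(fit), "term.labels")
shap <- sapply(seq_along(term_labels), function(k)
  rowSums(contrib[, assign_idx == k, drop = FALSE]))
colnames(shap) <- term_labels

base_value <- mean(fitted(fit))                # expected model output
head(round(shap, 2))                           # one row per car, one column per variable
```

Each row's SHAP values plus `base_value` add up to that row's prediction; plotting `shap` as a rows-by-variables grid (for example with `image()`) would give a heat map like the one requested.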

This would add more value to the ML suite and help convince companies to adopt it.

Otherwise, teams will just use Python and be done with it, leaving Alteryx as merely the clean-up ETL tool. That leaves much to be desired and can leave some teams hanging.

I hope for some consideration on this - thank you.

Credit to @pgdelafuente in his post Export Variables from Assisted Modelling Feature I... - Alteryx Community

This came up in a call with a large client: basically, there's no easy way to output the feature importance plot or the accuracy metrics of the selected model (e.g. root mean squared error, correlation, max error). I would assume this is an easy addition to the Assisted Modeling tools, and perhaps useful for some of the Predictive tools too!
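As a rough illustration of what such an export could contain, the base-R sketch below computes the metrics named above for any fitted regression model. `model_metrics()` is a hypothetical helper, not an Alteryx function, and `mtcars` is just a built-in stand-in dataset.

```r
# Hypothetical helper: regression accuracy metrics for any set of predictions.
model_metrics <- function(actual, predicted) {
  err <- actual - predicted
  c(rmse        = sqrt(mean(err^2)),
    mae         = mean(abs(err)),
    max_error   = max(abs(err)),
    correlation = cor(actual, predicted))
}

fit <- lm(mpg ~ wt + hp, data = mtcars)
metrics <- model_metrics(mtcars$mpg, fitted(fit))
print(round(metrics, 3))

# A one-row data frame like this could then be written out, e.g. with write.csv()
metrics_df <- as.data.frame(as.list(metrics))
```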

- Category Machine Learning
- Machine Learning

Alteryx hosting CRAN

Installing R packages in Alteryx has been a tricky issue, with many posts over the years, and it fundamentally boils down to how the install.packages() function is used; I've made a detailed post on the subject. There is a way Alteryx can help remedy the compatibility challenge between its Predictive Tools updates and the ever-changing landscape of open-source development: Alteryx can host its own CRAN!

The current version of Alteryx runs R 4.1.3, which is considered an 'old release', and there are over 18,000 packages on CRAN for this version of R. By the time you read this post, a package author has likely submitted a newer version of one of these packages to the R Foundation's CRAN, and there is a good chance that the new version isn't compatible with the Alteryx tools that use R. What if you need that package for a macro you've downloaded? How do you get the old, compatible version? This is where an Alteryx-hosted CRAN comes into its own.

Alteryx can host its own CRAN, one that individual package authors cannot update over time, so its packages remain unchanged and compatible with the released version of Predictive Tools. All we need to do as Alteryx users is point install.packages() at the Alteryx CRAN to get new packages, like so:

```r
install.packages(pkg_name, repos = "https://cran.alteryx.com")  # pkg_name: character vector of package names
```

There is an R package for creating a CRAN-like directory, so Alteryx can get R to do the legwork. Here is one way to do it with the miniCRAN package:

```r
library(miniCRAN)  # makeRepo()
library(tools)     # write_PACKAGES()

path2CRAN <- "/local/path/to/CRAN"
dir.create(path2CRAN, recursive = TRUE, showWarnings = FALSE)  # create the target folder if it doesn't exist yet

ver <- paste(R.version$major, strsplit(R.version$minor, "\\.")[[1]][1], sep = ".") # ver = "4.1" on R 4.1.x

repo <- "https://cran.r-project.org" # R Foundation's CRAN

m <- available.packages(repos = repo) # a matrix of all packages and their metadata from repo

pkgs4CRAN <- m[, "Package"] # character vector of all packages from repo

makeRepo(pkgs = pkgs4CRAN, path = path2CRAN, type = c("win.binary", "source"), repos = repo) # makes the local repo

write_PACKAGES(paste(path2CRAN, "bin/windows/contrib", ver, sep = "/"), type = "win.binary") # creates the PACKAGES files for package binaries

write_PACKAGES(paste(path2CRAN, "src/contrib", sep = "/"), type = "source") # creates the PACKAGES files for package sources
```

This creates a directory structure that replicates the R Foundation's CRAN, but only for the version of R that Alteryx uses (4.1/).

Alteryx can create the CRAN, host it somewhere meaningful (like https://cran.alteryx.com), update Predictive Tools to use the packages downloaded with the script above, and then release the new version of Predictive Tools and announce the CRAN. Users like you and me then just need to tell the R Tool (for example) to install from the Alteryx repo rather than any other, which may introduce package dependency conflicts.
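A minimal sketch of what "telling R to use the Alteryx repo" could look like for a whole session, using base R's `options(repos = ...)` and `contrib.url()`. The URL is the hypothetical one proposed in this post, not a live repository, and the `ALTERYX` name is an arbitrary label.

```r
# Hypothetical URL proposed in this post; it is not a real, live repository.
alteryx_cran <- c(ALTERYX = "https://cran.alteryx.com")

# Make it the only repository for this session, so install.packages() cannot
# pick up a newer, possibly incompatible version from another mirror.
old <- options(repos = alteryx_cran)

# Folders R would request from that repository for the running R version;
# on R 4.1.x the binary path ends in "/bin/windows/contrib/4.1".
src_url <- contrib.url(getOption("repos"), type = "source")
bin_url <- contrib.url(getOption("repos"), type = "win.binary")
print(c(src_url, bin_url))

# install.packages(pkg_name)  # would now resolve against alteryx_cran only
options(old)  # restore the previous repository setting
```

Because the binary path embeds the R major.minor version, a 4.2/ directory added later would be picked up automatically by an R 4.2.x installation pointing at the same URL.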

This is future-proof, too. Let's say Alteryx decides to release a new version of Designer and Predictive Tools based on R 4.2.2. What would they do? Download R 4.2.2, run the script above (which creates a new 4.2/ directory), update Predictive Tools to work with R 4.2.2 and the packages in their CRAN, host the 4.2/ directory on their CRAN, and then release the new version of Designer and Predictive Tools.

Simple!


I'm really liking the new assisted modelling capabilities released in 2020.2, but the tool should not error if the data contains spatial, blob, date, time, or datetime fields.

This essentially forces the user to add extra steps: a Select tool before the assisted modelling tool and then a Join after the models. I think the tool should be able to read these field types (especially dates), pass them through, and simply exclude them from the modelling.

An even better enhancement would be for assisted modelling to transform dates into features usable for modelling (season, month, day of week, etc.).
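A minimal base-R sketch of that transformation; `date_features()` is a hypothetical helper, and mapping months to Northern-Hemisphere meteorological seasons is an assumption.

```r
# Hypothetical helper: derive model-ready features from a Date column.
date_features <- function(d) {
  d <- as.Date(d)
  month <- as.integer(format(d, "%m"))
  # Meteorological seasons for the Northern Hemisphere (an assumption; adjust per region)
  season <- c("Winter", "Winter", "Spring", "Spring", "Spring", "Summer",
              "Summer", "Summer", "Autumn", "Autumn", "Autumn", "Winter")[month]
  data.frame(
    year        = as.integer(format(d, "%Y")),
    month       = month,
    day_of_week = as.integer(format(d, "%u")),  # ISO 8601: 1 = Monday ... 7 = Sunday
    season      = season
  )
}

date_features(c("2024-01-01", "2024-07-04"))
```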

- Category Predictive
- Desktop Experience
- Machine Learning
- Tool Improvement

Model evaluation (including feature importance) is only available in assisted modelling within the machine learning tools.

It would be great if there were a tool to do this in expert mode, so that you could see some standard performance metrics for your model(s) and view the feature importance.
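One model-agnostic way such a tool could report feature importance for any expert-mode model is permutation importance: shuffle one feature at a time and measure how much the error grows. This base-R sketch is an assumption about a possible approach, not how assisted modelling computes importance; `permutation_importance()` is a hypothetical helper, and it should ideally be run on held-out validation data rather than the training data used here for brevity.

```r
# Hypothetical helper: model-agnostic permutation feature importance.
# Importance of a feature = average increase in RMSE after randomly shuffling it.
permutation_importance <- function(fit, data, response, n_rep = 10, seed = 1) {
  set.seed(seed)
  rmse <- function(d) sqrt(mean((d[[response]] - predict(fit, newdata = d))^2))
  baseline <- rmse(data)
  features <- setdiff(names(data), response)
  sapply(features, function(f) {
    mean(replicate(n_rep, {
      shuffled <- data
      shuffled[[f]] <- sample(shuffled[[f]])  # break the link between feature and target
      rmse(shuffled) - baseline
    }))
  })
}

cars <- mtcars[, c("mpg", "wt", "hp", "qsec")]
fit <- lm(mpg ~ ., data = cars)
sort(permutation_importance(fit, cars, "mpg"), decreasing = TRUE)
```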

- Machine Learning
- Tool Improvement

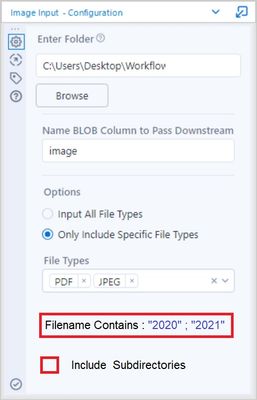

For the Image Input Tool please add:

1) A wildcard input for filename.

2) A checkbox to include sub-folders.
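Both options map closely onto what base R's `list.files()` already offers. The sketch below shows the intended behavior; `list_images()` is a hypothetical name, and `utils::glob2rx()` converts a wildcard such as `*.png` into a regular expression.

```r
# Hypothetical helper mirroring the two requested options.
list_images <- function(folder, wildcard = "*.png", include_subfolders = FALSE) {
  list.files(folder,
             pattern     = utils::glob2rx(wildcard),  # e.g. "*.png" becomes "^.*\\.png$"
             recursive   = include_subfolders,        # the "include sub-folders" checkbox
             full.names  = TRUE,
             ignore.case = TRUE)
}

# list_images("C:/images", wildcard = "scan_*.jpg", include_subfolders = TRUE)
```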

- Enhancement
- Machine Learning

Assisted modeling is a great idea, but right now it's a bit inflexible.

IMHO its greatest strength is the semi-automated transform tool, which would be extremely helpful on its own.

What would be great is the possibility of using these features as standalone tools with minimal configuration, without having to go through the assisted modeling wizard, so that they could serve as an automated system for quickly choosing variables.

This way we could have a near-perfect rapid prototyping tool for machine learning tasks, leaving more freedom in modeling and helping less-skilled analysts easily find which variables they should focus on.

What do you think?

- Machine Learning

Hello,

As shown in the Alteryx Inspire demo, Assisted Modeling is going to work through a wizard and generate several tools as a result.

The data evaluation functions and feature engineering assistance, however, would be extremely useful tools on their own. Is there any chance we can use them as separate tools in the upcoming version?

Thanks in advance!

- Category Predictive
- Desktop Experience
- Feature Request
- Machine Learning

Currently there are forecasting tools under Time Series (predicting the future). Could a backcasting function/tool be added to predict historic data points?
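One common way to backcast is to reverse the series, fit an ordinary forecasting model, forecast "ahead", and flip the result back. The base-R sketch below assumes an AR(1) model is adequate (the order is chosen only for illustration) and uses the built-in `LakeHuron` series (annual, 1875 to 1972); `backcast()` is a hypothetical helper.

```r
# Hypothetical helper: backcast h values before the start of a series.
backcast <- function(y, h, order = c(1, 0, 0)) {
  rev_fit  <- arima(rev(y), order = order)        # fit on the time-reversed series
  rev_pred <- predict(rev_fit, n.ahead = h)$pred  # forecast further into the "reversed future"
  rev(as.numeric(rev_pred))                       # flip back so the oldest estimate comes first
}

y  <- as.numeric(LakeHuron)  # built-in annual series, 1875 to 1972
bc <- backcast(y, h = 5)     # estimates for 1870 to 1874
round(bc, 2)
```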

- Category Time Series
- Desktop Experience
- Enhancement
- Machine Learning

Can we have a tool that optimizes another tool's configuration based on an output target? For example, optimizing the fuzzy matching setup to find the configuration that yields the best matching score for a given data set.
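A simple form of this is a grid search: try each candidate setting, score the output against a small hand-labelled sample, and keep the best one. The base-R sketch below tunes a single similarity threshold using `utils::adist()` (Levenshtein distance); the name pairs and the threshold grid are made-up illustration values, and nothing here describes the internals of the Fuzzy Match tool.

```r
# Made-up labelled sample: which name pairs are true matches?
pairs <- data.frame(
  a        = c("Alteryx Inc", "Jon Smith", "ACME Corp", "Mary Jones", "Data Co"),
  b        = c("Alteryx, Inc.", "John Smith", "Acme Corp.", "Mark Jonas", "Delta Co"),
  is_match = c(TRUE, TRUE, TRUE, FALSE, FALSE)
)

# Normalized similarity in [0, 1]: 1 - edit distance / length of the longer string
similarity <- function(x, y) {
  d <- mapply(function(u, v) adist(tolower(u), tolower(v))[1, 1], x, y)
  1 - d / pmax(nchar(x), nchar(y))
}

# F1 score of the rule "match if similarity >= threshold"
f1_at <- function(threshold, sim, truth) {
  pred <- sim >= threshold
  tp <- sum(pred & truth)
  fp <- sum(pred & !truth)
  fn <- sum(!pred & truth)
  if (tp == 0) return(0)
  2 * tp / (2 * tp + fp + fn)
}

sim    <- similarity(pairs$a, pairs$b)
grid   <- seq(0.50, 0.95, by = 0.05)
scores <- sapply(grid, f1_at, sim = sim, truth = pairs$is_match)
best   <- grid[which.max(scores)]  # the best-scoring threshold for this sample
```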

- Machine Learning
- New Request

Hello All,

During my trial of assisted modelling, I've enjoyed how well guided the process is; however, I've come across one area for improvement that would help people (including myself) overcome hurdles when getting started.

When I ran my first model, I got an error stating that certain fields had classes in the validation dataset that were not present in the training dataset.

After some investigation (and help from the Alteryx Community!), I discovered that this was caused by a setting in the One Hot Encoding tool.

Basically, the default setting for every field is to error when it meets values not present in the training dataset, but there is an option to ignore these values instead.
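The difference between the two settings can be sketched in base R. `one_hot()` is a hypothetical helper that only illustrates the behavior described above; it is not the One Hot Encoding tool's code, and treating an unseen value as an all-zero row is an assumption about what "ignore" means.

```r
# Hypothetical helper: one-hot encode values using the levels seen in training.
# on_unseen = "error" stops on new categories; "ignore" encodes them as all zeros.
one_hot <- function(values, train_levels, on_unseen = c("error", "ignore")) {
  on_unseen <- match.arg(on_unseen)
  unseen <- setdiff(unique(values), train_levels)
  if (length(unseen) > 0 && on_unseen == "error") {
    stop("Values not present in the training data: ", paste(unseen, collapse = ", "))
  }
  m <- outer(values, train_levels, "==") * 1L  # rows = records, columns = training levels
  colnames(m) <- train_levels
  m
}

train_levels <- c("North", "South")
one_hot(c("North", "East", "South"), train_levels, on_unseen = "ignore")
# The same call with on_unseen = "error" stops, because "East" was not seen in training.
```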

My suggestion:

Add an additional step to Assisted Modelling that lets the user choose Ignore or Error as they see fit.

If this were to be implemented then it would remove the only barrier I could find in Assisted Modelling.

Hope this is useful; I'm happy to provide further context/details if needed.

- Feature Request
- Machine Learning
- Tool Improvement

It would be great if it were possible to output the most influential features behind the score for each individual entity/row when using the Predictive and Machine Learning tools.

This would be similar to how it works in DataRobot. Details here and here.

This would enable some simple interpretation of how a model came to an individual prediction and the most important features in that particular row/case.

- Category Predictive
- Desktop Experience
- Machine Learning
- New Tool
