Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each label),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow more people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Dynamic Input should either:

(a) have the option of merging files with different field schemas

(b) Return a list of rejected filepaths

One of the problems I have with using Alteryx is the frequent need to input a bunch of files, but a few have an extra/missing field. The extra/missing field is often unimportant to me, but it means that the dynamic input doesn't work.
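To make options (a) and (b) concrete, here is a minimal Python sketch of the requested behaviour, outside of Alteryx. It is only an illustration: the function name `union_by_name` and the `required_fields` parameter are hypothetical, and the inputs are in-memory CSV streams rather than real files.

```python
import csv
import io


def union_by_name(sources, required_fields=()):
    """Merge CSV sources whose field sets differ (hypothetical helper).

    sources: mapping of a label (e.g. a file path) to an open text stream.
    Returns (rows, fields, rejected): rows padded with None for missing
    fields, the ordered union of field names, and the rejected labels.
    """
    rows, fields, rejected = [], [], []
    for label, stream in sources.items():
        reader = csv.DictReader(stream)
        names = reader.fieldnames or []
        if any(f not in names for f in required_fields):
            rejected.append(label)  # option (b): report it, don't hide it
            continue
        for name in names:
            if name not in fields:
                fields.append(name)
        rows.extend(dict(r) for r in reader)
    # Option (a): pad every row so that all rows share one schema.
    padded = [{f: r.get(f) for f in fields} for r in rows]
    return padded, fields, rejected


sources = {
    "a.csv": io.StringIO("id,name\n1,Ann\n"),
    "b.csv": io.StringIO("id,name,extra\n2,Bob,x\n"),  # extra field
    "c.csv": io.StringIO("name\nCid\n"),               # missing "id"
}
rows, fields, rejected = union_by_name(sources, required_fields=("id",))
print(fields, rejected)
```

The extra field in `b.csv` is kept and padded with `None` for the other file, while `c.csv` ends up in the rejected list because it lacks the one field marked as required.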

- API SDK

- Category Developer

- Enhancement

A client just asked me if there was an easy way to convert regular Containers to Control Containers. Unfortunately, we have to delete the old container and re-add the tools to the new Control Container.

What if we could just right click on the regular Container and say "Convert to Control Container"? Or even vice versa?!

- Category Developer

- Category Documentation

- Desktop Experience

- Enhancement

Hello,

As of today, only a few packages are bundled with the Alteryx Python tool. However:

1/ Python is becoming more and more popular. We will use this tool intensively in the coming years.

2/ Python relies on existing packages. This is the strength of the language.

3/ In Alteryx, adding a package is not that easy: you need admin rights, and if you want your colleagues to open your workflow, they have to install the package themselves. In corporate environments, this means losing time, up to several days on a project.

Personally, I would like Polars, DuckDB, etc., which are much faster than pandas.

- API SDK

- Category Developer

- Enhancement

Hello all,

I really appreciate the ability to test tools in the Laboratory category.

However, these nice tools should leave the Laboratory and become supported after a few months/quarters. Right now, without Alteryx support, we cannot use them in production workflows.

Examples:

Visual Layout Tool, introduced in 2017

https://community.alteryx.com/t5/Alteryx-Designer-Knowledge-Base/Tool-Mastery-Visual-Layout/ta-p/835...

Make Columns Tool, also introduced in 2017

https://community.alteryx.com/t5/Alteryx-Designer-Knowledge-Base/Make-Columns-Tool/ta-p/67108

Transpose In-DB, introduced in 10.6 in 2016

https://help.alteryx.com/10.6/LockInTranspose.htm

etc.

Best regards,

Simon

- API SDK

- Category Developer

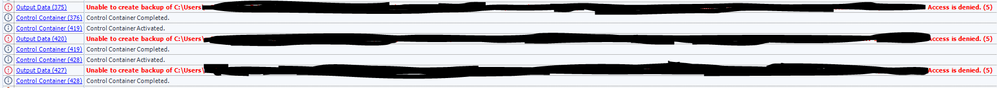

Allow users to add a delay on the connection between Control Containers. I frequently have to rerun workflows that use Control Containers because the workflow has not registered that a file was properly closed after one Output tool wrote it and before the next one runs. The network drives haven't caught up and show the file as still open while the workflow has already moved on to the next Control Container. Users should have an option in the Configuration screen to add a delay before the signal is sent for the next container to run.

In the past, I was able to use a CReW tool (Wait a Second) in conjunction with the Block Until Done tool to add the delay manually. But I have since converted all of my workflows to Control Containers. Since then, I encounter the following errors in half of the runs.

- API SDK

- Category Developer

Hi

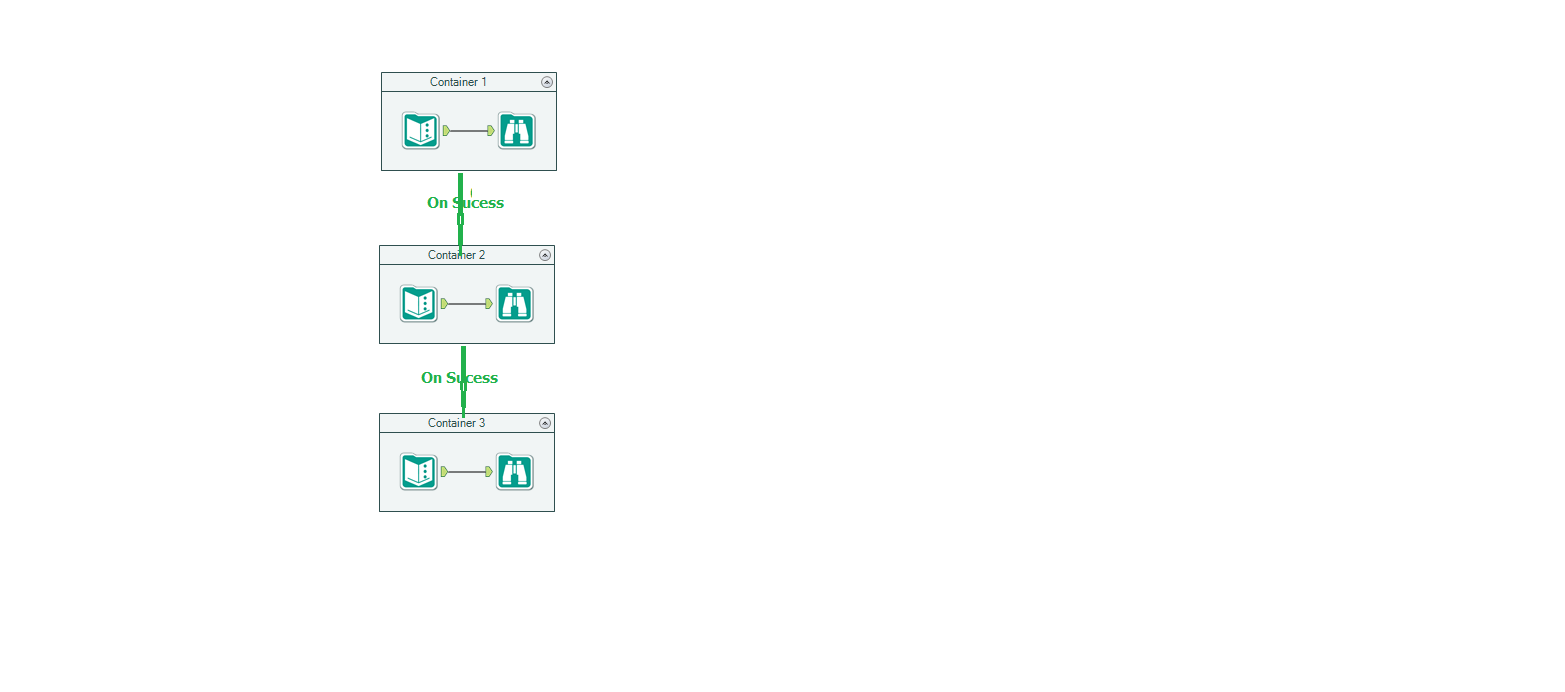

I want to control the order of execution of objects in an Alteryx workflow, but right now we ONLY have Block Until Done, which is not the right choice in many cases.

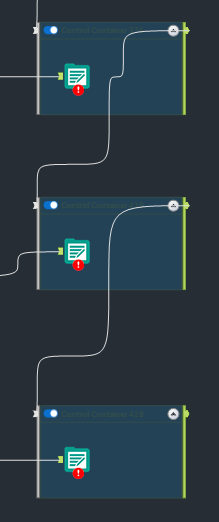

Could we have a container (say, a Sequence Container), put a piece of logic in each container, and control execution by connecting the containers?

Hopefully, this way we can control the execution order.

It might look something like the image below.

- API SDK

- Category Developer

Currently, the only way to implement IF / FOR / WHILE logic is either in the Formula tool or via an iterative/batch macro.

Instead, it would be hugely useful and a lot more intuitive if there were the ability to build the FOR / WHILE logic embedded in a container (similar to the LabVIEW interface https://www.ni.com/en-sg/support/documentation/supplemental/08/labview-for-loops-and-while-loops-exp...).

Advantages include:

- Increased readability. (not having to go into a macro!)

- Increased agility. (more power/ features can be added or modified on the go for something that is more than a Formula tool but not too much interface like a Macro App)

- More intuitive

Dawn.

- API SDK

- Category Developer

I've seen this question before and have run into it myself. I'd like to see a new tool that would allow a developer (of a workflow) to choose a path of logic based upon criteria known only during the execution of a module.

IF the record count of the LEFT INPUT < 10,000 THEN Path 1 (e.g. use a Calgary join)

ELSE Path 2 (e.g. use a standard join)

ENDIF
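As an illustration only, the same run-time routing can be sketched in Python. The function `route_by_count` and the two branch callables are hypothetical stand-ins for the two paths. Nothing here is an existing Alteryx tool.

```python
def route_by_count(records, small_path, large_path, threshold=10_000):
    """Pick a branch at run time based on the number of incoming records."""
    branch = small_path if len(records) < threshold else large_path
    return branch(records)


def calgary_join(records):   # hypothetical stand-in for Path 1
    return f"Path 1 handled {len(records)} records"


def standard_join(records):  # hypothetical stand-in for Path 2
    return f"Path 2 handled {len(records)} records"


print(route_by_count(range(500), calgary_join, standard_join))
print(route_by_count(range(10_000), calgary_join, standard_join))
```

The key point is that the decision uses a value (the record count) that only exists once the data is flowing, which is exactly what a design-time configuration cannot know.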

Thanks,

Mark

Hello all,

As of today, you can use the Dynamic Select tool with two options:

- by field type (you can dynamically select all fields, all date fields, etc.)

- by formula

I suggest two easy improvements:

- from a field list: you connect a list of fields to a second input, using a "Field name" field

- from a flow: you connect a data stream to a second input, and the common fields are selected
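A small Python sketch of the two suggested modes, treating a record as a dictionary. Both function names are hypothetical and only illustrate the selection logic, not how the tool would be implemented.

```python
def select_from_list(record, field_names):
    """Mode 1: keep the fields named in a field list, in list order.

    Names that do not exist in the record are ignored.
    """
    return {name: record[name] for name in field_names if name in record}


def select_common(record, reference_fields):
    """Mode 2: keep the fields that also exist in a second (reference) flow."""
    reference = set(reference_fields)
    return {name: value for name, value in record.items() if name in reference}


record = {"id": 1, "name": "Ann", "city": "Lille", "amount": 12.5}
print(select_from_list(record, ["amount", "id", "unknown"]))
print(select_common(record, ["city", "id", "country"]))
```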

Best regards,

Simon

- API SDK

- Category Developer

Here's a sample of what you get if no records are read into a python tool:

Error: CReW SHA256 (4): Tool #1: Traceback (most recent call last):

File "D:\Engine_10804_3513901e8d4d4ab48a13c314a18fd1f9_\2f1b1eb4701e445775092128efe77f76\workbook.py", line 7, in <module>

df = Alteryx.read('#1')

File "C:\Program Files\Alteryx\bin\Miniconda3\envs\DesignerBaseTools_venv\lib\site-packages\ayx\export.py", line 35, in read

return _CachedData_(debug=debug).read(incoming_connection_name, **kwargs)

File "C:\Program Files\Alteryx\bin\Miniconda3\envs\DesignerBaseTools_venv\lib\site-packages\ayx\CachedData.py", line 306, in read

data = db.getData()

File "C:\Program Files\Alteryx\bin\Miniconda3\envs\DesignerBaseTools_venv\lib\site-packages\ayx\Datafiles.py", line 500, in getData

data = self.connection.read_nparrays()

RuntimeError: DataWrap2WrigleyDb::GoRecord: Attempt to seek past the end of the file

I've fixed this in my macro by forcing a DUMMY record into the Python tool (and deleting it on the back end). It would be much nicer to have error handling that prevents the issue. Even a configuration option for what to do when there is no input would simplify things.

This error condition potentially affects every Python tool created.
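Until something like that exists, one possible workaround is to guard the read call inside the Python tool. The sketch below is an assumption-laden illustration: `read_or_default` is a hypothetical helper, and it relies only on the error message shown in the traceback above. The reader is passed in as a callable so the example runs without Alteryx.

```python
EMPTY_INPUT_HINT = "seek past the end of the file"  # text from the traceback above


def read_or_default(read, connection, default=None):
    """Call read(connection); return `default` when the input has no records."""
    try:
        return read(connection)
    except RuntimeError as err:
        if EMPTY_INPUT_HINT in str(err):
            return default
        raise  # any other failure is still a real error


def fake_empty_read(connection):
    # Stand-in that mimics the failure shown in the traceback.
    raise RuntimeError(
        "DataWrap2WrigleyDb::GoRecord: Attempt to seek past the end of the file"
    )


print(read_or_default(fake_empty_read, "#1", default=[]))
```

Inside a Python tool, the call would presumably look like `read_or_default(Alteryx.read, "#1")`, using the same `Alteryx.read` call that appears in the traceback.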

Cheers,

Mark

- API SDK

- Category Developer

Hello All,

As of today, Alteryx can use the proxy settings set in Windows Network and Internet Settings: "Server pulls the proxy settings displayed in Engine > Proxy from the Windows internet settings for the user logged into the machine. If there are no proxy settings for the user logged into the machine, Engine > Proxy isn't available within the System Settings menu." You can then override the credentials (but not the address) in System Settings and also in User Settings.

The issue: in many organizations, there are several proxies that you can use for different use cases. And by default, access to APIs may be blocked by these proxies. The user, who is not an admin, cannot change their Windows settings... and even if IT does it, the change affects the whole system, including other software, which can lead to security failures.

What I suggest:

- the ability to change credentials AND address

- multi-level settings for both credentials and address:

  - default: Windows Settings

  - System Settings

  - User Settings

  - Workflow Settings

  - Download tool settings
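The multi-level idea can be sketched as a simple precedence lookup in Python, assuming that the most specific level wins and that address and credentials are resolved independently. The level names, dictionary keys, and `resolve_proxy` function are all hypothetical.

```python
# Most specific level first; "windows" is the default fallback.
LEVELS = ["download_tool", "workflow", "user", "system", "windows"]


def resolve_proxy(settings):
    """Return {key: (value, level)} for the address and the credentials.

    Each key is taken from the most specific level that defines it.
    """
    resolved = {}
    for key in ("address", "credentials"):
        for level in LEVELS:
            value = settings.get(level, {}).get(key)
            if value is not None:
                resolved[key] = (value, level)
                break
    return resolved


settings = {
    "windows": {"address": "http://proxy-a:8080", "credentials": "windows-user"},
    "workflow": {"address": "http://proxy-b:3128"},  # override for one workflow
}
print(resolve_proxy(settings))
```

Here the workflow overrides only the address, so the credentials still fall back to the Windows settings.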

Best Regards,

Simon

- API SDK

- Category Developer

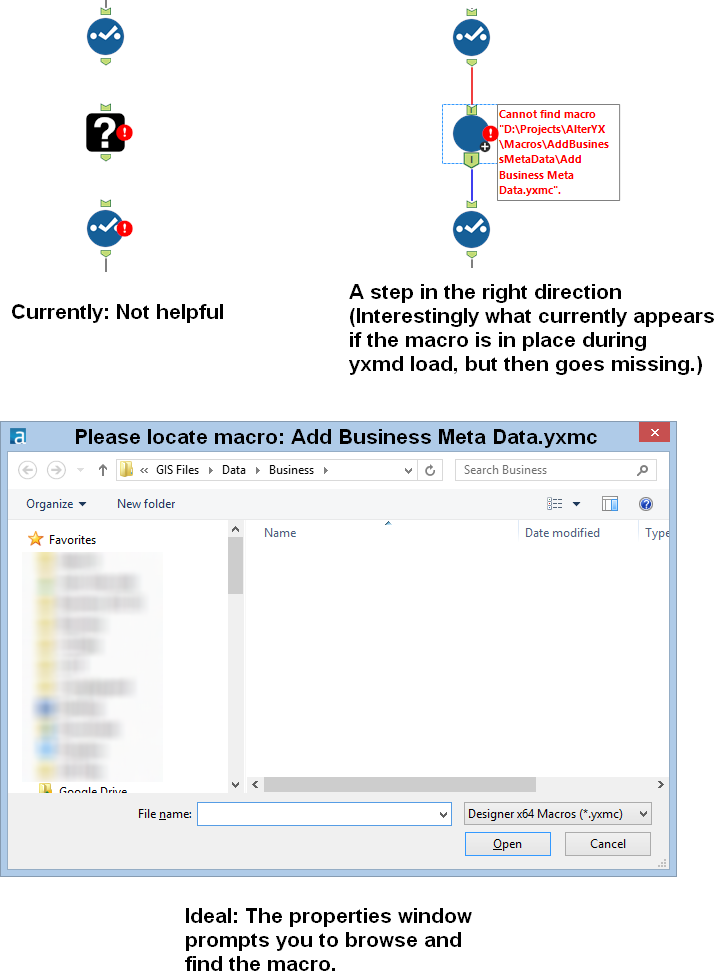

Idea: Prompt the user to find a missing macro instead of the current UX of a question mark icon.

Issue: When a macro referenced in a workflow is missing, then there is no way to a) know what the name of the macro was (assuming you were lazy like me and didn't document with a comment) and b) find the macro so you can get back to business.

When this happens to me now, I have to go to the XML view, search for macros, and cycle through them until I find the one that's missing. Then I have to either copy the macro back into that location or manually edit the workflow XML. Not cool, man.

Solution: When a macro is missing, the image below at the right should be shown. In the properties window, a file browse tool should allow the user to find the macro.

- API SDK

- Category Developer

- Category Macros

- Desktop Experience

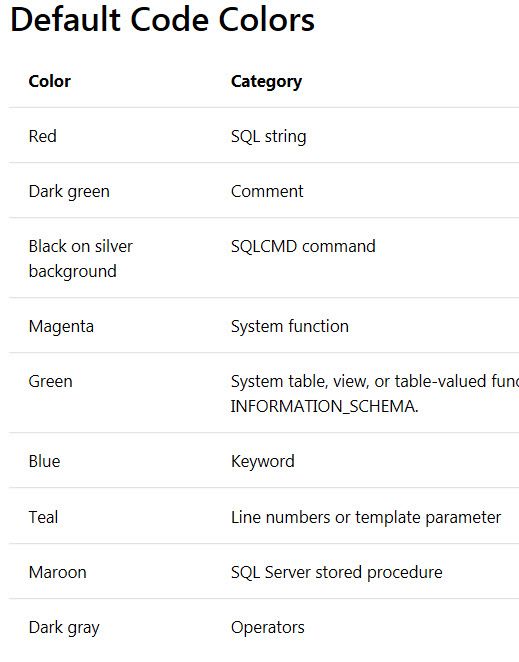

We need color coding in the SQL Editor window for Input tools. We always have to pull our code out of there and copy it into a Teradata window so that it is easier to read and troubleshoot. This would save us some time and hassle and would improve the Alteryx user experience. (I think you've used a couple of my ideas already. This one is a good one too.)

- API SDK

- Category Developer

I would love to be able to see the actual curl statement that is executed as part of the download tool. Maybe something like a debug switch can be added which would produce 1 extra output field which is the curl statement itself? This would greatly enhance the ability to debug when things aren't working as expected from the download tool.
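To show what such a debug field could contain, here is a rough Python sketch that builds an equivalent curl command line from request parameters. It does not reflect what the Download tool runs internally. `to_curl` is a hypothetical helper, and the quoting (via `shlex.quote`) targets POSIX shells.

```python
import shlex


def to_curl(method, url, headers=None, body=None):
    """Build a curl command line equivalent to the described request."""
    parts = ["curl", "-X", method.upper()]
    for name, value in (headers or {}).items():
        parts += ["-H", f"{name}: {value}"]
    if body is not None:
        parts += ["--data", body]
    parts.append(url)
    # Quote each argument so the line can be pasted into a POSIX shell.
    return " ".join(shlex.quote(part) for part in parts)


print(to_curl("get", "https://example.com/api?x=1", {"Accept": "application/json"}))
```

Having the command as an extra output field would make it possible to replay the exact request outside the workflow when debugging.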

- API SDK

- Category Developer

Who needs a 1073741823-sized string anyways? No one, or close enough to no one. But if you are creating some fancy new properties in the Formula tool and just cranking along, and then you see that your **bleep** data stream is 9G for nine rows of data, you find yourself wondering what the hell is going on. And then you walk your way down the workflow for a while, finding spots where the default 1073741823 value got set and changing them to non-insane string sizes, and then your data flow is more like 64kb and your workflow runs in 3 seconds instead of 30 seconds.

Please set the default string size for Formula tools to a non-insane value that won't need to be changed in 99.99999% of use cases. Thank you.

- API SDK

- Category Developer

If a tool fails, there should be a way to customise the error message. Currently, one way to do this is to log all messages to a file, read that file with another workflow, and then customise the messages (Alteryx workflow error handling - Alteryx Community). However, there should be a more convenient solution. We should be able to:

- Find/replace parts of a message.

- Specify which tools' messages to modify.

- Change the message type.

- Change the order of the messages in the results window, to prioritise the critical ones.

- Pick which messages cannot be hidden by "xxx more errors not displayed".

This would especially help for macros, as sometimes we have a specific tool failing within a macro and producing a non-user friendly message.
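A rough Python sketch of the first four points (find/replace, per-tool targeting, changing the type, and reordering by severity). The `Message` and `Rule` classes and the `customise` function are hypothetical illustrations, not Alteryx objects.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Message:
    tool_id: int
    level: str  # "error", "warning" or "info"
    text: str


@dataclass(frozen=True)
class Rule:  # hypothetical rewrite rule
    tool_id: int                      # which tool's messages to modify
    find: str                         # text to look for
    replace_with: str                 # replacement text
    new_level: Optional[str] = None   # optionally change the message type


PRIORITY = {"error": 0, "warning": 1, "info": 2}


def customise(messages, rules):
    """Apply the rules, then list the most critical messages first."""
    result = []
    for msg in messages:
        for rule in rules:
            if rule.tool_id == msg.tool_id and rule.find in msg.text:
                msg = replace(
                    msg,
                    text=msg.text.replace(rule.find, rule.replace_with),
                    level=rule.new_level or msg.level,
                )
        result.append(msg)
    return sorted(result, key=lambda m: PRIORITY[m.level])  # stable sort


messages = [
    Message(7, "warning", "Field 'x' not found"),
    Message(12, "error", "Parse Error at char(0)"),
]
rules = [
    Rule(12, "Parse Error at char(0)", "The date column is empty", "warning"),
    Rule(7, "not found", "is missing from the input file", "error"),
]
customised = customise(messages, rules)
for m in customised:
    print(m.level, m.tool_id, m.text)
```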

- API SDK

- Category Developer

This has probably been mentioned before, but in case it hasn't....

The dynamic input tool is useful for bringing in multiple files / tabs, but quickly stops being fit for purpose if schemas / fields differ even slightly. The common solution is to then use a dynamic input tool inside a batch macro and set this macro to 'Auto Configure by Name', so that it waits for all files to be run and then can output knowing what it has received.

It's a pain to create these batch macros for relatively straightforward and regular processes - would it be possible to have this 'Auto Configure by Name' as an option directly in the dynamic input tool, relieving the need for a batch macro?

Thanks,

Andy

- API SDK

- Category Developer

- Category Macros

- Desktop Experience

Alteryx hosting CRAN

Installing R packages in Alteryx has been a tricky issue with many posts over the years and it fundamentally boils down to the way the install.packages() function is used; I've made a detailed post on the subject. There is a way that Alteryx can help remedy the compatibility challenge between their updates of Predictive Tools and the ever-changing landscape that is open-source development. That way is for Alteryx to host their own CRAN!

The current version of Alteryx runs R 4.1.3, which is considered an 'old release', and there are over 18,000 packages on CRAN for this version of R. By the time you read this post, there is likely a newer version of one of these packages that the package author has submitted to the R Foundation's CRAN. There is also a good chance that package isn't compatible with any Alteryx tool that uses R. What if you need that package for a macro you've downloaded? How do you get the old version, the one that is compatible? This is where Alteryx hosting CRAN comes into full fruition.

Alteryx can host their own CRAN, one that is not updated by one of many package authors throughout its history, and the packages will remain unchanged and compatible with the version of Predictive Tools that is released. All we need to do as Alteryx users is direct install.packages() to the Alteryx CRAN to get our new packages, like so,

install.packages(pkg_name, repos = "https://cran.alteryx.com")

There is an R package to create a CRAN directory, so Alteryx can get R to do the legwork for them. Here is a way of using the miniCRAN package,

library(miniCRAN)

library(tools)

path2CRAN <- "/local/path/to/CRAN"

ver <- paste(R.version$major, strsplit(R.version$minor, "\\.")[[1]][1], sep = ".") # ver = 4.1

repo <- "https://cran.r-project.org" # R Foundation's CRAN

m <- available.packages(repos = repo) # a matrix of all packages and their meta data from repo

pkgs4CRAN <- m[,"Package"] # character vector of all packages from repo

makeRepo(pkgs = pkgs4CRAN, path = path2CRAN, type = c("win.binary", "source"), repos = repo) # makes the local repo

write_PACKAGES(paste(path2CRAN, "bin/windows/contrib", ver, sep = "/"), type = "win.binary") # creates the PACKAGES file for package binaries

write_PACKAGES(paste(path2CRAN, "src/contrib", sep = "/"), type = "source") # creates the PACKAGES files for package sources

It will create a directory structure that replicates R Foundation's CRAN, but just for the version that Alteryx uses, 4.1/.

Alteryx can create the CRAN, host it somewhere meaningful (like https://cran.alteryx.com), update Predictive Tools to use the packages downloaded with the script above and then release the new version of Predictive Tools and announce the CRAN. Users like me and you just need to tell the R Tool (for example) to install from the Alteryx repo rather than any others, which may have package dependency conflicts.

This is future-proof too. Let's say Alteryx decide to release a new version of Designer and Predictive Tools based on R 4.2.2. What do they do? Download R 4.2.2, run the above script (it'll create a new directory called 4.2/), update Predictive Tools to work with R 4.2.2 and the packages in their CRAN, host the 4.2/ directory on their CRAN and then release the new version of Designer and Predictive Tools.

Simple!

- API SDK

- Category Developer

- Category Machine Learning

- Category Predictive

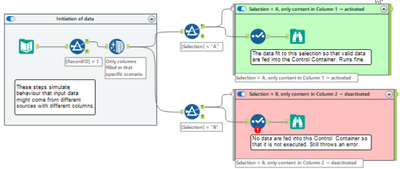

Sometimes, Control Containers produce error messages even if they are deactivated by feeding an empty table into their input connection.

(Note that this is a made-up example of something which can happen if input tables come from different sources and have different columns, so that they need separate treatment.)

According to the product team, this is expected behaviour since a selection does not allow zero columns selected. This might be true (which I doubt a bit), but it is at least counter-intuitive. If this behaviour cannot be avoided entirely, I have a proposal which would improve the user experience without changing the entire workflow validation logic.

(The support engineer understands the point and has raised a defect.)

Instead of writing messages from inside Control Containers directly to the log output (on screen, in the log file) and marking the workflow as erroneous, I propose to introduce a message (message, warning, error) stack for tools inside Control Containers:

- When the configuration validation is executed:

  - Messages (messages, warnings, errors) produced outside of Control Containers are output to the screen log and to the log files (as today).

  - Messages (messages, warnings, errors) produced inside of Control Containers are not yet output but stored in a message stack.

- At the moment when it is decided whether a Control Container is activated or deactivated:

  - If the Control Container is activated: Write the previously stored message stack for this Control Container to the screen and to the log output, and increase error and warning counts accordingly.

  - If the Control Container is deactivated: Delete the message stack for this Control Container (w/o reporting anything to the log and w/o increasing error and warning counts).

This would result in a different sequence of messages than today (because everything inside activated Control Containers would be reported later than today). Since there’s no logical order of messages anyways, this would not matter. And it would avoid the apparently illogical case that deactivated Control Containers produce errors.
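The proposal can be sketched in a few lines of Python. This is only an illustration of the buffering logic under the assumptions stated in the proposal. The `MessageLog` class and its method names are hypothetical and say nothing about how the Alteryx engine is built.

```python
class MessageLog:
    """Defers messages from Control Containers until their state is known."""

    def __init__(self):
        self.output = []     # messages actually reported
        self.errors = 0
        self.warnings = 0
        self._pending = {}   # container id -> buffered (level, text) pairs

    def report(self, level, text, container=None):
        if container is None:
            self._emit(level, text)  # outside containers: as today
        else:
            self._pending.setdefault(container, []).append((level, text))

    def resolve(self, container, activated):
        """Flush the stack if the container runs, otherwise discard it."""
        for level, text in self._pending.pop(container, []):
            if activated:
                self._emit(level, text)

    def _emit(self, level, text):
        self.output.append((level, text))
        if level == "error":
            self.errors += 1
        elif level == "warning":
            self.warnings += 1


log = MessageLog()
log.report("error", "outside any container")
log.report("error", "no columns selected", container="A")
log.report("warning", "type mismatch", container="B")
log.resolve("A", activated=False)  # deactivated: its error is dropped
log.resolve("B", activated=True)   # activated: its warning is reported
print(log.output, log.errors, log.warnings)
```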

- Category Developer

- Enhancement

This has probably been mentioned before, but in case it hasn't....

Right now, if the Dynamic Input tool skips a file (which it often does!), this just appears as a warning and processing continues. Whilst it is still useful to continue processing, could the tool offer an option such as 'error if files are skipped'?

Right now, it is easy to miss that this is happening, and in production / on Server you may want the process to be stopped.

Thanks,

Andy

- API SDK

- Category Developer

- Category Input Output

- Data Connectors
