Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so as to have an equivalent number of images for each label),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello,

Often, when you have a dataset, you want to know whether there are groups of fields that work together. Knowing this can help you normalize (i.e. de-join) your data model for dataviz, address performance issues, or simplify your analysis.

Example

| order_id | item_id | label | model_id | length | color | amount |
|---|---|---|---|---|---|---|
| 1 | 1 | A | 10 | 15 | Blue | 101 |
| 2 | 1 | A | 10 | 15 | Blue | 101 |
| 3 | 2 | B | 10 | 15 | Blue | 101 |
| 4 | 2 | B | 10 | 15 | Blue | 101 |
| 5 | 2 | B | 10 | 15 | Blue | 101 |
| 6 | 3 | C | 20 | 25 | Red | 101 |
| 7 | 3 | C | 20 | 25 | Red | 101 |
| 8 | 3 | C | 20 | 25 | Red | 101 |
| 9 | 4 | D | 20 | 25 | Red | 101 |
| 10 | 4 | D | 20 | 25 | Red | 101 |
| 11 | 4 | D | 20 | 25 | Red | 101 |

Here, we could split the table into three:

-order

| order_id | item_id | model_id | amount |
|---|---|---|---|
| 1 | 1 | 10 | 101,2 |
| 2 | 1 | 10 | 103 |
| 3 | 2 | 10 | 104,8 |
| 4 | 2 | 10 | 106,6 |
| 5 | 2 | 10 | 108,4 |
| 6 | 3 | 20 | 110,2 |
| 7 | 3 | 20 | 112 |
| 8 | 3 | 20 | 113,8 |
| 9 | 4 | 20 | 115,6 |
| 10 | 4 | 20 | 117,4 |
| 11 | 4 | 20 | 119,2 |

-model

| model_id | length | color |
|---|---|---|
| 10 | 15 | Blue |
| 20 | 25 | Red |

-item

| item_id | label |
|---|---|
| 1 | A |
| 2 | B |
| 3 | C |
| 4 | D |

The tool would take:

-a dataframe as input

-configuration: ability to select fields

-output: a table summarizing the groups

| field group | field | remaining fields |
|---|---|---|
| 1 | item_id | False |
| 1 | label | False |
| 2 | model_id | False |
| 2 | color | False |
| 3 | order_id | True |
| 3 | link to group 1 | True |
| 3 | link to group 2 | True |
| 3 | amount | True |

Very important: the non-selected fields (here, amount) appear in the result, but all in the "remaining" group.

Algo steps:

1/ pre-groups: compute the count distinct of each field. Goal: optimize the algorithm by avoiding a test of every pair.

Fields whose count distinct equals the number of rows are automatically excluded and sent to the remaining group.

Fields that have the same count distinct are placed in the same pre-group.

2/ for each pre-group, for each pair of fields,

take the distinct values of the pair

like here

| item_id | label |
|---|---|
| 1 | A |
| 2 | B |
| 3 | C |
| 4 | D |

If, in this table, the count distinct of each field is equal to the number of rows, it's a "pair-group".

Here, for the model, you will have

-model_id,length

-model_id,color

-length,color

3/ Since a field can only belong to one group, model_id, length and color form the first (or second) group, and item_id and label form the other.

If a field does not belong to any group, it goes to the "remaining" group at the end.

In the remaining group, you can add a link to each of the other groups, since you don't know which field is the key.

| field group | field | remaining fields |
|---|---|---|
| 1 | item_id | False |
| 1 | label | False |
| 2 | model_id | False |
| 2 | length | False |
| 2 | color | False |
| 3 | order_id | True |
| 3 | link to group 1 | True |
| 3 | link to group 2 | True |
| 3 | amount | True |
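The three steps above can be sketched in plain Python. This is only an illustration under stated assumptions, not the requested tool: the function name `find_field_groups`, the list-of-dicts input and the `rows` sample (rebuilt from the example table) are all hypothetical, and a pair is treated as one-to-one when its number of distinct value pairs equals the distinct count shared by both fields.

```python
from itertools import combinations

def find_field_groups(rows, selected):
    """Return (groups, remaining) for a list of dict rows (hypothetical helper)."""
    n_rows = len(rows)
    fields = list(rows[0].keys()) if rows else []
    distinct = {f: len({r[f] for r in rows}) for f in selected}

    # Step 1: pre-groups keyed by distinct count; row-unique fields are skipped.
    pre_groups = {}
    for f in selected:
        if distinct[f] < n_rows:
            pre_groups.setdefault(distinct[f], []).append(f)

    groups = []
    for count, members in pre_groups.items():
        # Step 2: test each pair inside the pre-group for a one-to-one mapping.
        clusters = {f: {f} for f in members}
        for a, b in combinations(members, 2):
            if len({(r[a], r[b]) for r in rows}) == count:
                merged = clusters[a] | clusters[b]
                for f in merged:
                    clusters[f] = merged
        # Step 3: each field ends up in at most one group.
        seen = set()
        for f in members:
            if f not in seen and len(clusters[f]) > 1:
                groups.append([m for m in members if m in clusters[f]])
                seen |= clusters[f]

    grouped = {f for g in groups for f in g}
    remaining = [f for f in fields if f not in grouped]
    return groups, remaining

# Sample data rebuilt from the example table (amount is not selected).
rows = [
    {"order_id": i + 1, "item_id": item, "label": label,
     "model_id": model, "length": length, "color": color, "amount": 101}
    for i, (item, label, model, length, color) in enumerate(
        [(1, "A", 10, 15, "Blue")] * 2 + [(2, "B", 10, 15, "Blue")] * 3
        + [(3, "C", 20, 25, "Red")] * 3 + [(4, "D", 20, 25, "Red")] * 3
    )
]
selected = ["order_id", "item_id", "label", "model_id", "length", "color"]
groups, remaining = find_field_groups(rows, selected)
print(groups, remaining)
```

Non-selected fields such as amount fall into the remaining list, as described in the idea; the "link to group" rows of the output table are left out of this sketch.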

Best regards,

Simon

PS: I have in mind an evolution with links between non-remaining tables (here, for example, the model could optionally be linked to the item).

- Category Data Investigation
- Desktop Experience
- New Request

Hello,

This is a feature I haven't seen in any data preparation/ETL tool. The core feature is to detect the unique key in a dataframe. More often than not, you have to deal with a dataset without knowing what makes a row unique. This can lead to misinterpreting the data, cartesian products at join time, and other funny stuff.

How do I imagine it?

A specific tool in the Data Investigation category.

Input: one dataframe, with the ability to select fields or check all, and the ability to specify a maximum number of fields per combination (empty or 0 = no maximum).

Algo: it tests the count distinct of every combination of fields against the count of rows.

Result: one row per field combination that works. If there is no result: "no field combination is unique. check for duplicate or need for aggregation upstream".

Example:

| order_id | line_id | amount | customer | site |
|---|---|---|---|---|
| 1 | 1 | 100 | A | U_250 |
| 1 | 2 | 12 | A | U_250 |
| 1 | 3 | 45 | A | U_250 |
| 2 | 1 | 75 | A | U_250 |
| 2 | 2 | 12 | A | U_250 |
| 3 | 1 | 15 | B | U_250 |
| 4 | 1 | 45 | B | U_251 |

The user selects every field except amount (they know that amount would make no sense in a key).

The algo will test the following keys

-each separate field

-each combination of two fields

-each combination of three fields

-each combination of four fields

to see which ones match the number of rows (7)

And gives something like this

| choice | number of fields | field combination |
|---|---|---|
| very good | 2 | order_id,line_id |
| average | 3 | order_id,line_id, customer |
| average | 3 | order_id,line_id, site |
| bad | 4 | order_id,line_id, site, customer |
| … | … | … |
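As a rough illustration of the test described above, here is a brute-force sketch in plain Python. The function name `find_unique_keys`, the `max_fields` parameter and the list-of-dicts input are assumptions made for the example; the number of combinations grows quickly with the number of fields, which is why the idea proposes a maximum.

```python
from itertools import combinations

def find_unique_keys(rows, fields, max_fields=0):
    """Return every field combination whose distinct count equals the row count."""
    n_rows = len(rows)
    limit = max_fields or len(fields)  # 0 means "no maximum"
    keys = []
    for size in range(1, limit + 1):
        for combo in combinations(fields, size):
            distinct = {tuple(r[f] for f in combo) for r in rows}
            if len(distinct) == n_rows:
                keys.append(combo)
    return keys

# Sample data from the example table; amount is left out of the selection.
orders = [
    {"order_id": 1, "line_id": 1, "amount": 100, "customer": "A", "site": "U_250"},
    {"order_id": 1, "line_id": 2, "amount": 12, "customer": "A", "site": "U_250"},
    {"order_id": 1, "line_id": 3, "amount": 45, "customer": "A", "site": "U_250"},
    {"order_id": 2, "line_id": 1, "amount": 75, "customer": "A", "site": "U_250"},
    {"order_id": 2, "line_id": 2, "amount": 12, "customer": "A", "site": "U_250"},
    {"order_id": 3, "line_id": 1, "amount": 15, "customer": "B", "site": "U_250"},
    {"order_id": 4, "line_id": 1, "amount": 45, "customer": "B", "site": "U_251"},
]
for key in find_unique_keys(orders, ["order_id", "line_id", "customer", "site"]):
    print(len(key), ",".join(key))
```

The results come out ordered by number of fields, so the shortest key (the "very good" choice in the table) is listed first.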

Best regards,

Simon

- Category Data Investigation
- Desktop Experience
- New Request

Hello,

Unless you're lucky, your input dataset can have fields with the wrong types. That can lead to several issues, such as:

-performance (a string is waaaaaaaay slower than let's say a boolean)

-compliance with master data management

-functional understanding (e.g. if I have a field called "modified" typed as string, I don't know whether it contains the modification date, some information about the modification, etc., whereas if it is typed as date, I already know it's a date)

-ability to do some type-specific operations (you can't multiply a string or extract a week from a string)

Right now, the existing tools have focused on strings, but I think we can do better.

Here is a proposal:

input: a dataframe

configuration :

-selection of fields

or

-selection of field types

-ability to do it on a sample (optional)

Algo :

| Product | Input type | Proposed type | Condition | Conversion |
|---|---|---|---|---|
| Alteryx | Byte | bool | only 2 values. 0 and 1 | to be done |
| Alteryx | Int16 | bool | only 2 values. 0 and 1 | to be done |
| Alteryx | Int16 | Byte | min>=0, max<=255 | to be done |
| Alteryx | Int32 | bool | only 2 values. 0 and 1 | to be done |
| Alteryx | Int32 | Byte | min>=0, max<=255 | to be done |
| Alteryx | Int32 | Int16 | min>=-32,768, max<=32,767 | to be done |
| Alteryx | Int64 | bool | only 2 values. 0 and 1 | to be done |
| Alteryx | Int64 | Byte | min>=0, max<=255 | to be done |
| Alteryx | Int64 | Int16 | min>=-32,768, max<=32,767 | to be done |
| Alteryx | Int64 | Int32 | min>=-2,147,483,648, max<=2,147,483,647 | to be done |
| Alteryx | Fixed Decimal | bool | only 2 values. 0 and 1 | to be done |
| Alteryx | Fixed Decimal | Byte | No decimal part, min>=0, max<=255 | to be done |
| Alteryx | Fixed Decimal | Int16 | No decimal part, min>=-32,768, max<=32,767 | to be done |
| Alteryx | Fixed Decimal | Int32 | No decimal part, min>=-2,147,483,648, max<=2,147,483,647 | to be done |
| Alteryx | Fixed Decimal | Int64 | No decimal part, min>=-9,223,372,036,854,775,808, max<=9,223,372,036,854,775,807 | to be done |
| Alteryx | Float | bool | only 2 values. 0 and 1 or 0,-1 | to be done |
| Alteryx | Float | Byte | No decimal part, min>=0, max<=255 | to be done |
| Alteryx | Float | Int16 | No decimal part, min>=-32,768, max<=32,767 | to be done |
| Alteryx | Float | Int32 | No decimal part, min>=-2,147,483,648, max<=2,147,483,647 | to be done |
| Alteryx | Float | Int64 | No decimal part, min>=-9,223,372,036,854,775,808, max<=9,223,372,036,854,775,807 | to be done |
| Alteryx | Float | Fixed Decimal | to be done | to be done |
| Alteryx | Double | bool | only 2 values. 0 and 1 or 0,-1 | to be done |
| Alteryx | Double | Byte | No decimal part, min>=0, max<=255 | to be done |
| Alteryx | Double | Int16 | No decimal part, min>=-32,768, max<=32,767 | to be done |
| Alteryx | Double | Int32 | No decimal part, min>=-2,147,483,648, max<=2,147,483,647 | to be done |
| Alteryx | Double | Int64 | No decimal part, min>=-9,223,372,036,854,775,808, max<=9,223,372,036,854,775,807 | to be done |
| Alteryx | Double | Fixed Decimal | to be done | to be done |
| Alteryx | Double | Float | when no need for double precision | to be done |
| Alteryx | DateTime | Date | no hours, minutes, seconds | to be done |
| Alteryx | String | bool | only 2 values. 0 and 1 or 0,-1 or True/False or TRUE/FALSE or equivalent in some languages such as VRAI/FAUX, Vrai/Faux | to be done |
| Alteryx | String | Byte | No decimal part, min>=0, max<=255 | to be done |
| Alteryx | String | Int16 | No decimal part, min>=-32,768, max<=32,767 | to be done |
| Alteryx | String | Int32 | No decimal part, min>=-2,147,483,648, max<=2,147,483,647 | to be done |
| Alteryx | String | Int64 | No decimal part, min>=-9,223,372,036,854,775,808, max<=9,223,372,036,854,775,807 | to be done |
| Alteryx | String | Fixed Decimal | to be done | to be done |
| Alteryx | String | Float | when no need for double precision | to be done |
| Alteryx | String | Double | when need for double precision | to be done |
| Alteryx | String | Date | test on several date formats | to be done |
| Alteryx | String | Time | test on several time formats | to be done |
| Alteryx | String | DateTime | test on several datetime formats | to be done |
| Alteryx | WString | bool | only 2 values. 0 and 1 or 0,-1 or True/False or TRUE/FALSE or equivalent in some languages such as VRAI/FAUX, Vrai/Faux | to be done |
| Alteryx | WString | Byte | No decimal part, min>=0, max<=255 | to be done |
| Alteryx | WString | Int16 | No decimal part, min>=-32,768, max<=32,767 | to be done |
| Alteryx | WString | Int32 | No decimal part, min>=-2,147,483,648, max<=2,147,483,647 | to be done |
| Alteryx | WString | Int64 | No decimal part, min>=-9,223,372,036,854,775,808, max<=9,223,372,036,854,775,807 | to be done |
| Alteryx | WString | Fixed Decimal | to be done | to be done |
| Alteryx | WString | Float | when no need for double precision | to be done |
| Alteryx | WString | Double | when need for double precision | to be done |
| Alteryx | WString | String | Latin-1 characters only | to be done |
| Alteryx | WString | Date | test on several date formats | to be done |
| Alteryx | WString | Time | test on several time formats | to be done |
| Alteryx | WString | DateTime | test on several datetime formats | to be done |
| Alteryx | V_String | bool | only 2 values. 0 and 1 or 0,-1 or True/False or TRUE/FALSE or equivalent in some languages such as VRAI/FAUX, Vrai/Faux | to be done |
| Alteryx | V_String | Byte | No decimal part, min>=0, max<=255 | to be done |
| Alteryx | V_String | Int16 | No decimal part, min>=-32,768, max<=32,767 | to be done |
| Alteryx | V_String | Int32 | No decimal part, min>=-2,147,483,648, max<=2,147,483,647 | to be done |
| Alteryx | V_String | Int64 | No decimal part, min>=-9,223,372,036,854,775,808, max<=9,223,372,036,854,775,807 | to be done |
| Alteryx | V_String | Fixed Decimal | to be done | to be done |
| Alteryx | V_String | Float | when no need for double precision | to be done |
| Alteryx | V_String | Double | when need for double precision | to be done |
| Alteryx | V_String | String | Same length | to be done |
| Alteryx | V_String | Date | test on several date formats | to be done |
| Alteryx | V_String | Time | test on several time formats | to be done |
| Alteryx | V_String | DateTime | test on several datetime formats | to be done |
| Alteryx | V_WString | bool | only 2 values. 0 and 1 or 0,-1 or True/False or TRUE/FALSE or equivalent in some languages such as VRAI/FAUX, Vrai/Faux | to be done |
| Alteryx | V_WString | Byte | No decimal part, min>=0, max<=255 | to be done |
| Alteryx | V_WString | Int16 | No decimal part, min>=-32,768, max<=32,767 | to be done |
| Alteryx | V_WString | Int32 | No decimal part, min>=-2,147,483,648, max<=2,147,483,647 | to be done |
| Alteryx | V_WString | Int64 | No decimal part, min>=-9,223,372,036,854,775,808, max<=9,223,372,036,854,775,807 | to be done |
| Alteryx | V_WString | Fixed Decimal | to be done | to be done |
| Alteryx | V_WString | Float | when no need for double precision | to be done |
| Alteryx | V_WString | Double | when need for double precision | to be done |
| Alteryx | V_WString | String | Same length, Latin-1 characters only | to be done |
| Alteryx | V_WString | WString | Same length | to be done |
| Alteryx | V_WString | V_String | Latin-1 characters only | to be done |
| Alteryx | V_WString | Date | test on several date formats | to be done |
| Alteryx | V_WString | Time | test on several time formats | to be done |
| Alteryx | V_WString | DateTime | test on several datetime formats | to be done |

The output would be something like that

| Field | Input type | Proposition | Conversion |
|---|---|---|---|
| toto | float | int | formula (with example)/native tool/datetime conversion tool… |
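The numeric rows of the table could be checked along these lines. This is a minimal sketch, not Alteryx behaviour: `suggest_type` and `INT_RANGES` are hypothetical names, only the bool and integer ranges from the table are covered, any value with a decimal part is simply reported as "Double", and the date/time and string-length tests are left out.

```python
from decimal import Decimal, InvalidOperation

# Integer ranges taken from the table above, narrowest first.
INT_RANGES = [
    ("Byte", 0, 255),
    ("Int16", -32_768, 32_767),
    ("Int32", -2_147_483_648, 2_147_483_647),
    ("Int64", -9_223_372_036_854_775_808, 9_223_372_036_854_775_807),
]

def suggest_type(values):
    """Suggest the narrowest numeric type for the non-null values, or None."""
    present = [v for v in values if v is not None]
    if not present:
        return None
    try:
        numbers = [Decimal(str(v).strip()) for v in present]
    except InvalidOperation:
        return None  # not numeric: date/time tests are out of scope here
    if not all(n.is_finite() for n in numbers):
        return None
    if any(n != n.to_integral_value() for n in numbers):
        return "Double"  # assumption: no Float / Fixed Decimal distinction
    if set(numbers) <= {Decimal(0), Decimal(1)}:
        return "Bool"
    lowest, highest = min(numbers), max(numbers)
    for name, type_min, type_max in INT_RANGES:
        if type_min <= lowest and highest <= type_max:
            return name
    return None

print(suggest_type(["0", "1", "1"]))
print(suggest_type([12, 300, -5]))
```

Parsing through `Decimal` rather than `float` keeps the Int64 boundary values exact, which matters for the largest range in the table.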

Best regards,

Simon

- Category Data Investigation
- Desktop Experience
- New Request

Hi all!

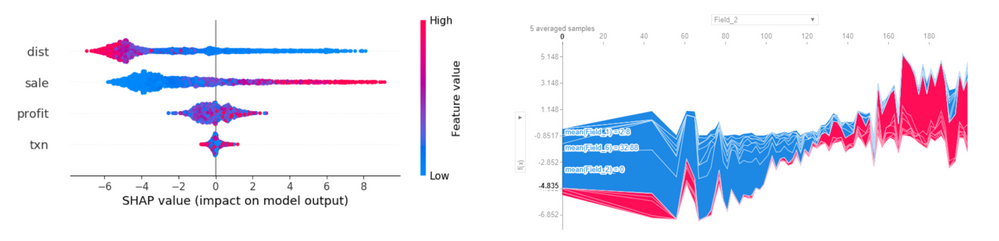

Based on the title, here's some background information: SHAPLEY Values

Currently, one way of doing so is to use the Python tool to write the script and install the package. However, this requires running Alteryx as an administrator in order to load, test, and run the script successfully. The problem is that a substantial number of companies do not grant their Alteryx teams such privileges, since admin credentials would then be required every time Alteryx is opened.

I am aware that there is a macro covering SHAP, but I recently tested it and it did not work as intended. It also accepts only non-categorical values as determinants, so categorical variables must first be converted into numeric or binary categories.

It would be nice to have a built-in Alteryx ML tool that does this analysis and produces a graph akin to a heat map that showcases the values like below:

Doing so would add more value to the ML suite and help convince companies to get it.

Otherwise, teams will just use Python and be done with it, leaving Alteryx only as the clean-up ETL tool. It leaves much to be desired, and can leave some teams hanging.

I hope for some consideration on this - thank you.

- Category Data Investigation
- Category Machine Learning
- Category Predictive
- Desktop Experience

Please add a data validator workflow.

The suggested features are as follows:

1. Add a validation name and set the field(s) of your data that you want to validate. (One workflow can have more than one validation name.)

2. For the selected validation name, add features that check/validate the following:

A. Verify data type

B. Contains Null

C. Max and Min string length

D. Allowed values only; anything else gives an error

E. Regex expected to match and regex not allowed to match.

3. It can have two (2) outputs: True (match) and False (fail/error).
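To make the request concrete, checks A to E and the two outputs could look roughly like this in plain Python. The function `validate` and all of its parameter names are made up for the illustration; an actual tool would expose them as configuration options.

```python
import re

def validate(rows, field, *, dtype=None, allow_null=True, min_len=None,
             max_len=None, allowed=None, must_match=None, must_not_match=None):
    """Split rows into (passed, failed) for one validation on one field."""
    passed, failed = [], []
    for row in rows:
        value = row.get(field)
        if value is None:
            ok = allow_null  # check B: nulls
        else:
            text = str(value)
            ok = all([
                dtype is None or isinstance(value, dtype),      # check A
                min_len is None or len(text) >= min_len,        # check C
                max_len is None or len(text) <= max_len,
                allowed is None or value in allowed,            # check D
                must_match is None
                or re.search(must_match, text) is not None,     # check E
                must_not_match is None
                or re.search(must_not_match, text) is None,
            ])
        (passed if ok else failed).append(row)
    return passed, failed

codes = [{"code": "AB12"}, {"code": None}, {"code": "toolong123"}, {"code": "ab12"}]
true_out, false_out = validate(
    codes, "code", dtype=str, allow_null=False,
    min_len=2, max_len=6, must_match=r"^[A-Z]{2}\d+$",
)
print(true_out)
print(false_out)
```

Several named validations on one workflow would then simply be several calls with different settings.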

- Category Data Investigation
- Desktop Experience

Many software & hardware companies take a very quantitative approach to driving their product innovation: they establish a standard baseline of how the product is used today, then compare it with how the new version solves the same problem and measure the improvement over time.

For example:

- Database vendors have been doing this for years using TPC benchmarks (http://www.tpc.org/), where a FIXED set of tasks is agreed as a benchmark and the vendors then iterate year over year to improve performance against it

- Graphics card companies or GPU companies have used benchmarks for years (e.g. TimeSpy; Cinebench etc).

How could this translate for Alteryx?

- Every year at Inspire - we hear the stats that say that 90-95% of the time taken is data preparation

- We also know that the reason for buying Alteryx is to reduce the time & skill level required to achieve these outcomes - again, as reinforced by the message that we're driving towards self-service analytics & citizen data analytics.

The dream:

Wouldn't it be great if Alteryx could say: "In the 2019.3 release - we have taken 10% off the benchmark of common tasks as measured by time taken to complete" - and show a 25% reduction year over year in the time to complete this battery of data preparation tasks?

One proposed method:

- Take an agreed benchmark set of tasks / data / problems / outcomes, based on a standard data set - these should include all of the common data preparation problems that people face like date normalization; joining; filtering; table sync (incremental sync as well as dump-and-load); etc.

- Measure the time it takes users to complete these data-prep/ data movement/ data cleanup tasks on the benchmark data & problem set using the latest innovations and tools

- This time then becomes the measure - if it takes an average user 20 mins to complete these data prep tasks today; and in the 2019.3 release it takes 18 mins, then we've taken 10% off the cost of the largest piece of the data analytics pipeline.

What would this give Alteryx?

This could be very simple to administer; and if done well it could give Alteryx:

- A clear and unambiguous marketing message that they are super-focussed on solving for the 90-95% of your time that is NOT being spent on analytics, but rather on data prep

- It would also provide focus to drive the platform in the direction of the biggest pain points - all the teams across the platform can then rally around a really deep focus on the user and accelerating their "time from raw data to analytics".

- A competitive differentiation - invite your competitors to take part too just like TPC.org or any of the other benchmarks

What this is / is NOT:

- This is not a run-time measure - i.e. this is not measuring transactions or rows per second

- This should be focussed on "Given this problem; and raw data - what is the time it takes you, and the number of clicks and mouse moves etc - to get to the point where you can take raw data, and get it prepped and clean enough to do the analysis".

- This should NOT be a test of "Once you've got clean data - how quickly can you do machine learning; or decision trees; or predictive analytics" - as we have said above, that is not the big problem - the big problem is the 90-95% of the time which is spent on data prep / transport / and cleanup.

There are loads of ways this could be administered; the starting point is to agree to drive this quantitatively on a fixed benchmark of tasks and data.

@LDuane ; @SteveA ; @jpoz ; @AshleyK ; @AJacobson ; @DerekK ; @Cimmel ; @TuvyL ; @KatieH ; @TomSt ; @AdamR_AYX ; @apolly

- Category Data Investigation
- Desktop Experience

Similar to the Select tool's Unknown Field checkbox, I figured it would be useful for the Data Cleansing tool to have this functionality as well. It would avoid the scenario where, after a cross-tab, you have new numeric fields, one of which contains a Null value, so you can't total the fields because the Null prevents the addition. If the Unknown Field box were checked in the Data Cleansing tool, this problem would be avoided.

- Category Data Investigation
- Desktop Experience

I would like to see more file types supported for dragging from a folder onto a workflow, specifically .txt and .dat files. This would greatly help my team and me analyze the new and unknown data files that we receive on a daily basis.

Thank you.

- Category Data Investigation
- Category Input Output
- Data Connectors
- Desktop Experience

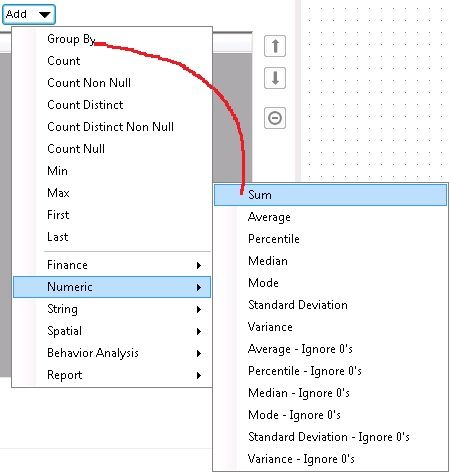

The sum function is probably the one I use most in the summarize tool. It is a silly thing, but it would be nice for "Sum" to be in the single-click list, rather than in the "Numeric" category...

- Category Data Investigation
- Category Interface
- Category Preparation
- Desktop Experience

This idea arose recently when working specifically with the Association Analysis tool, but I have a feeling that other predictive tools could benefit as well. I was trying to run an association analysis for a large number of variables, but when I was investigating the output using the new interactive tools, I was presented with something similar to this:

While the correlation plot draws your eye to high associations, the user is unable to read the field names, and the tooltip only provides the correlation value rather than the fields that go with the value. As such, I shifted my attention to the report output, which looked like this:

While I could now read everything, it made pulling out the insights much more difficult. Wanting the best of both worlds, I decided to extract the correlation table from the R output and drop it into Tableau for a filterable, interactive version of the correlation matrix. This turned out to be much easier said than done. Because the R output comes in report form, I tried to use the report extract macros mentioned in this thread to pull out the actual values. This was an issue due to the report formatting, so instead I cracked open the macro to extract the data directly from the R output. To make a long story shorter, this ended up being problematic due to report formats, batch macro pathing, and an unidentifiable bug.

In the end, it would be great if there were a “Data” output for reports from certain predictive tools that would benefit from further analysis. While the reports and interactive outputs are great for ingesting small model outputs, at times there is a need to extract the data itself for further analysis/visualization. This is one example, as are the model coefficients from regression analyses that I have used in the past. I know Dr. Dan created a model coefficients macro for the case of regression, but I have to imagine that there are other cases where the data is desired along with the report/interactive output.

- Category Data Investigation
- Category Predictive
- Desktop Experience

There is a need when visualizing in-Database workflows to be able to visualize sorted data. This sorting could be done 1 of 2 ways: In a browse tool, or as a stand-alone Sort tool. Either would address the need. Without such a tool being present, the only way to sort the data is to "Data Stream Out" and then visualize the data in Alteryx. However, this process violates the premise of the usefulness of the in-DB toolkit, which is to keep your data in-DB and process using the DB engine. Streaming out big data in order to add a sort is not efficient.

Granted, the in-DB processing doesn't care whether data is sorted or not. However, when attempting to find extreme values after an aggregation, or when trying to identify something as simple as whether null values are present in a field, then a sort becomes extremely useful, and a necessary tool for human consumption of data (regardless of the database's processing needs).

Thanks very much for hearing my idea!

- Category Data Investigation
- Category In Database
- Data Connectors
- Desktop Experience

Dear GUI Gurus,

A minor but time-saving GUI enhancement would be appreciated. When adding a tool to the canvas, the current behavior is to make visible the tool anchor that was last used on prior tools. As a result, when I look at the results window, I might be adding a "vanilla" configuration tool to the canvas and staring at a BLANK results window. When users are adding tools to the canvas, I suggest that the best practice is to VIEW the incoming data before configuring the tool.

I ALWAYS set the results to view the INCOMING DATA ANCHOR.

This minor change would be welcome to me.

Cheers,

Mark

- Category Data Investigation
- Desktop Experience

Right now, if a tool generates an error, there is nothing productive that you can do with the error rows: they are just sent to the error log and, depending on your settings, the entire canvas will fail.

Could we change this in the Designer to work more like SSIS, where almost every tool has an error output, so that you can send the good rows one way and the error rows the other way, and then continue processing? The error rows can be sent to an error table, workflow or data-quality service, and the good rows can be sent onwards. Because you have access to the error rows, you can also compute run stats of "successful rows vs. unsuccessful".
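The behaviour being asked for can be pictured with a small Python sketch. It illustrates the pattern only, and says nothing about how SSIS or Designer work internally; `run_with_error_output` and the sample transform are hypothetical.

```python
def run_with_error_output(rows, transform):
    """Apply transform to each row; route failing rows to a separate output."""
    good, errors = [], []
    for row in rows:
        try:
            good.append(transform(row))
        except Exception as exc:  # keep the row and the reason it failed
            errors.append({**row, "error": f"{type(exc).__name__}: {exc}"})
    return good, errors

def parse_qty(row):
    # Example step that fails on rows whose qty is not an integer.
    return {**row, "qty": int(row["qty"])}

good, errors = run_with_error_output(
    [{"qty": "3"}, {"qty": "x"}, {"qty": "5"}], parse_qty
)
print(f"{len(good)} successful rows vs. {len(errors)} unsuccessful")
```

Processing continues after a bad row, and the error rows stay available for a data-quality step or for run statistics.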

This would make a big difference in the velocity of developing a canvas or prepping data.

I can take some screenshots if that helps.

- Category Data Investigation
- Desktop Experience
- General

The Browse tool is a really powerful tool. We can see all the information about a dataset very quickly.

Unfortunately, we can only export that information (graphs, tables) manually as PNG files...

One major use of Alteryx in big companies is performing data quality reviews.

If we could export the Browse tool's information (graphs, tables) automatically to a PDF file or another format, we could save a lot of time on data quality tasks.

Today, the only solution is to use a DataViz tool or to set up a specific render in Alteryx (very time-consuming).

The main benefit would be the ability to share data quality insights with other business units.

Best Regards

- Category Data Investigation
- Desktop Experience

Unsupervised learning method to detect topics in a text document.

Helpful for users interested in text mining.

- Category Data Investigation
- Category Predictive
- Desktop Experience

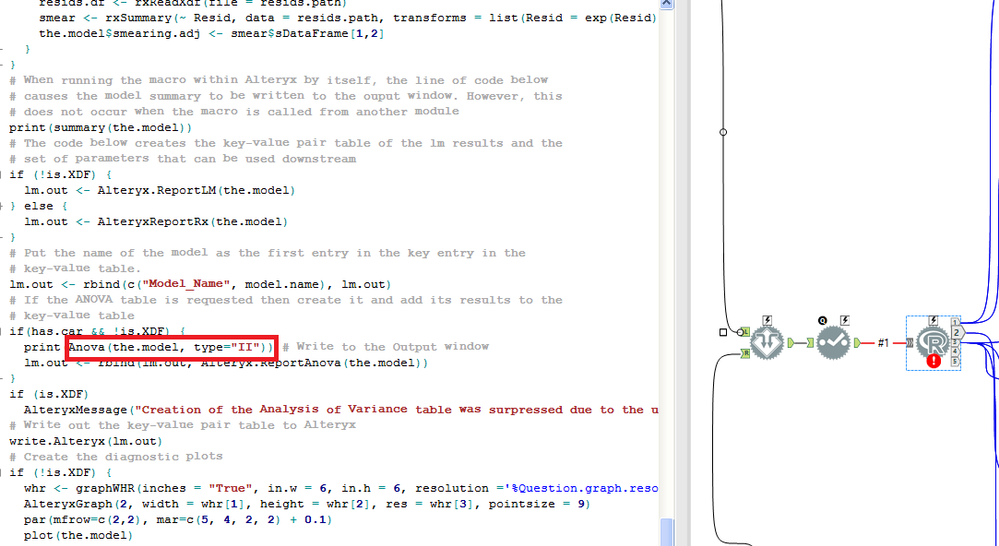

We don't have a separate ANOVA tool in Alteryx; can you think of any reason why?

What matters is not raw or row-blended data but the insights gathered:

The Linear Regression tool has a report for Type II ANOVA based on the model table we provide.

But neither Type II nor the other types are available as standalone statistics tools...

Here is a list of the different types of ANOVA that may be useful:

| ANOVA models | Definitions |
|---|---|
| t-tests | Comparison of means between two groups; if independent groups, then independent samples t-test. If not independent, then paired samples t-test. If comparing one group against a fixed value, then a one-sample t-test. |
| One-way ANOVA | Comparison of means of three or more independent groups. |
| One-way repeated measures ANOVA | Comparison of means of three or more within-subject variables. |
| Factorial ANOVA | Comparison of cell means for two or more between-subject IVs. |
| Mixed ANOVA (SPANOVA) | Comparison of cells means for one or more between-subjects IV and one or more within-subjects IV. |
| ANCOVA | Any ANOVA model with a covariate. |
| MANOVA | Any ANOVA model with multiple DVs. Provides omnibus F and separate Fs. |
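For reference, the simplest entry in the list, one-way ANOVA, comes down to the ratio of between-group to within-group mean squares. The sketch below computes that F statistic in plain Python; the function name `one_way_anova_f` is made up for the example, and the p-value is omitted because it needs the F distribution, which the standard library does not provide.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

f_stat, df_b, df_w = one_way_anova_f([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
print(f_stat, df_b, df_w)
```

The other models in the table (repeated measures, factorial, ANCOVA, MANOVA) need more machinery than this and are not covered here.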

Looking forward to the addition of ANOVA tools to the data investigation toolbox...

- Category Data Investigation
- Desktop Experience

We have the Browse tool, which connects to one output at a time. To be more efficient, I would like a Browse tool that connects to 2 or 3 outputs with a single icon, for example to True and False on the Filter tool, or to L, J and R on the Join tool.

- Category Data Investigation
- Desktop Experience

Python pandas dataframes and data types (numpy arrays, lists, dictionaries, etc.) are much more robust in general than their counterparts in R, and they play together much more easily as well. Moreover, only a handful of packages are needed to do everything a data scientist would need, including graphing: SciKit Learn, Pandas, Numpy, and Seaborn. After utilizing R, Python, and Alteryx, I'm still a big proponent of integrating with the Python language, much like Alteryx has integrated with R. At the very least, I propose adding the ability to write custom code, such as a Python tool.

- API SDK
- Category Data Investigation
- Category Developer
- Category Predictive

Hi all,

One of the most common data-investigation tasks we have to do is comparing 2 data-sets. This may mean making sure the columns are the same, checking that field names match, or even comparing row data. I think that this would be a tremendous addition to the core toolset. I've made a fairly good start on it, and am more than happy if you want to take this and extend or add to it (I give this freely with no claim on the work).

Very very happy to work with the team to build this out if it's useful
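For readers who want a feel for the comparison described above, here is a small Python sketch. It is not the workflow offered in this post, only an illustration with made-up names (`compare_datasets` and its result keys): it compares field names first, then row values on the fields both data sets share.

```python
from collections import Counter

def compare_datasets(left, right):
    """Compare field names, then rows on the shared fields (lists of dicts)."""
    left_fields = list(left[0]) if left else []
    right_fields = list(right[0]) if right else []
    shared = [f for f in left_fields if f in right_fields]
    # Counters keep duplicates, so a row present twice on one side is noticed.
    left_rows = Counter(tuple(r[f] for f in shared) for r in left)
    right_rows = Counter(tuple(r[f] for f in shared) for r in right)
    return {
        "only_in_left_fields": [f for f in left_fields if f not in right_fields],
        "only_in_right_fields": [f for f in right_fields if f not in left_fields],
        "only_in_left_rows": list((left_rows - right_rows).elements()),
        "only_in_right_rows": list((right_rows - left_rows).elements()),
    }

a = [{"id": 1, "name": "a", "x": 0}, {"id": 2, "name": "b", "x": 0}]
b = [{"id": 1, "name": "a"}, {"id": 3, "name": "c"}]
print(compare_datasets(a, b))
```

Field types and row order are ignored in this sketch; both would be natural additions.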

Cheers

Sean

- Category Data Investigation
- Desktop Experience

One of the tools that I use the most is the SELECT tool, because I normally get large data sets with fields that I won't be using for a specific analysis or with fields that need renaming. In the same way, sometimes Alteryx will assign a field a different type than the one I need (e.g. a date field as string). That's when the SELECT tool comes in handy.

However, when dealing with multiple sources, having many SELECT tools on your canvas can make the workflow look a little "crowded", not to mention adding extra tools that will need explaining later when presenting/sharing your canvas with others. That is why my suggestion is to give the CONNECTION tool "more power" by offering some of the functionality found in the SELECT tool.

For instance, if one of the most used features of the SELECT tool is to choose the fields that will move through the workflow, then maybe we can make that feature available in the CONNECTION tool. Similarly, if one of the most used features (by Alteryx users) is to rename fields or change the field type, then maybe we can make that available in the CONNECTION tool as well.

In the end, developers can benefit from faster workflow development, and end-users will benefit from cleaner workflows, which always helps to get the message across.

What do you guys think? Any of you feel the same? Leave your comments below.

- Category Connectors
- Category Data Investigation
- Category Input Output
- Data Connectors
