Weekly Challenges

Solve the challenge, share your solution and summit the ranks of our Community!

IDEAS WANTED

Want to get involved? We're always looking for ideas and content for Weekly Challenges.


Challenge #205: Taynalysis


Slight differences in my answer to the provided example

Check out my collaboration with fellow ACE Joshua Burkhow at AlterTricks.com


Some slight differences in expected vs. actual


Hi All ,

This is my very first weekly challenge response 🙂

I am excited to share the news that my paper "Workday Data Migration : How we saved over 2000 hours of manual effort" was chosen for the Excellence Award !!!!

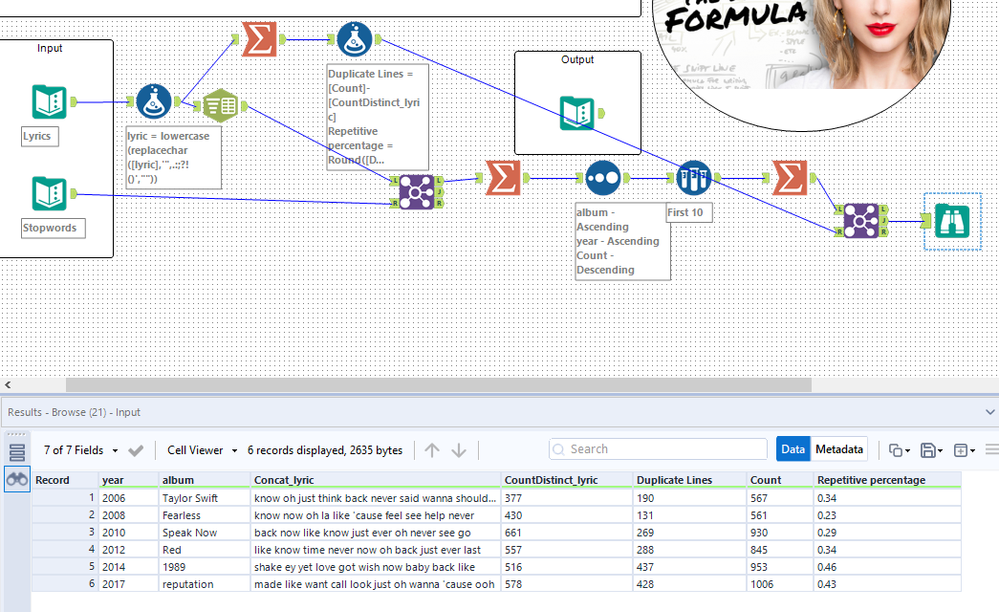

For this weekly challenge , I used the summarise function and the count function on the lyric field to return counts , count distinct of lines per album. Using the data I arrived at the duplicate records. The data matched for some records but was off by 1 number for a few. Attached is my workflow.
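The Count / Count Distinct approach above can be sketched in plain Python as follows. This is a minimal illustration under assumptions, not the attached workflow: the `duplicate_stats` helper, its `normalize` flag and the sample rows are all hypothetical. The optional normalization (trimming whitespace and lowercasing before the distinct count) only illustrates one possible, unconfirmed reason why counts can be off by one: lines that differ only in case or trailing spaces are treated as distinct.

```python
from collections import defaultdict


def duplicate_stats(rows, normalize=False):
    """Per-album total, distinct and duplicate line counts (hypothetical helper).

    rows: iterable of (album, lyric_line) pairs (assumed input shape).
    normalize: if True, trim and lowercase lines before the distinct count.
    """
    lines_by_album = defaultdict(list)
    for album, line in rows:
        key = line.strip().lower() if normalize else line
        lines_by_album[album].append(key)

    stats = {}
    for album, lines in lines_by_album.items():
        total = len(lines)        # like Summarize "Count"
        unique = len(set(lines))  # like Summarize "Count Distinct"
        dups = total - unique     # duplicate lines = total - distinct
        stats[album] = {
            "total": total,
            "unique": unique,
            "dups": dups,
            "percent": dups * 100 / total,
        }
    return stats


# Hypothetical sample rows; "Love story " differs from "love story" only in case/trailing space
rows = [
    ("A", "shake it off"),
    ("A", "shake it off"),
    ("A", "players gonna play"),
    ("A", "shake it off"),
    ("B", "love story"),
    ("B", "romeo"),
    ("B", "Love story "),
]

print(duplicate_stats(rows)["A"])
# {'total': 4, 'unique': 2, 'dups': 2, 'percent': 50.0}
print(duplicate_stats(rows)["B"]["dups"], duplicate_stats(rows, normalize=True)["B"]["dups"])
# 0 1
```

Album B shows how a single near-duplicate line shifts the duplicate count by exactly one depending on whether lines are normalized first.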

Regards

Sambit


#################################

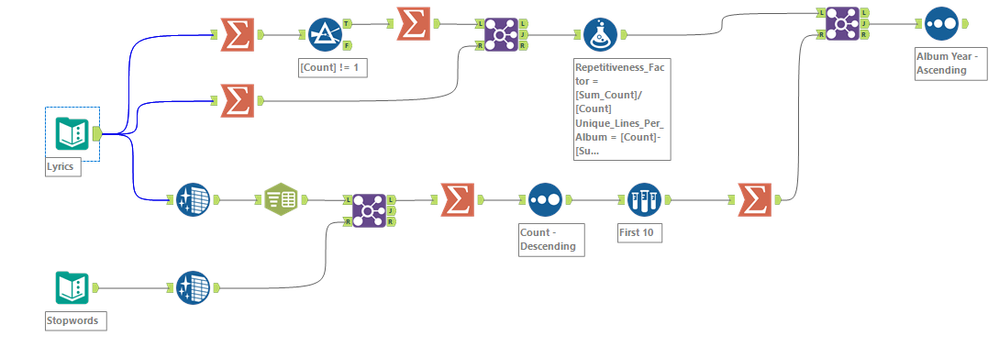

# List all non-standard packages to be imported by your

# script here (only missing packages will be installed)

from ayx import Package

#Package.installPackages(['pandas','numpy'])

#################################

from ayx import Alteryx

from collections import Counter

import re

dfExpected = Alteryx.read("#Output")

dfLyrics = Alteryx.read("#Lyrics")

dfStopwords = Alteryx.read("#Stopwords")

#################################

# Create a simple list of stopwords

stopwords = [w[0] for w in dfStopwords.values.tolist()]

#################################

dfTop10 = dfLyrics.groupby(['year','album'])['lyric'].apply(" ".join).reset_index()

def get_top_10_words(word_list):

word_list = re.sub(r"[^a-zA-Z0-9\s\']", r'', word_list)

list_ = word_list.split()

not_stop = [word for word in list_ if word.lower() not in stopwords]

counter = Counter(not_stop)

return " ".join([word for (word, count) in counter.most_common(10)])

dfTop10['lyric'] = dfTop10['lyric'].apply(get_top_10_words)

#################################

dfLines = dfLyrics.groupby(['year','album'])['lyric'].agg(['nunique','count']).reset_index()

dfLines['dups'] = dfLines['count'] - dfLines['nunique']

dfLines['percent'] = dfLines['dups'] * 100 / dfLines['count']

dfLines = dfLines[['year', 'album', 'nunique', 'dups', 'count', 'percent']]

#################################

dfOutput = dfTop10.merge(dfLines).rename(columns=

{"year": "Album Year",

"album": "Album Name",

"lyric": "Top_10_Lyrics",

"nunique": "Unique_Lines_Per_Album",

"dups": "Duplicate_Lines_Per_Album",

"count": "Total_Lines_Per_Album",

"percent": "Repetativeness_Percentage"

}

)

#################################

Alteryx.write(dfOutput, 1)I wanted to practice my data frames with this one, so I used the python tool. Like others have mentioned, my results are very close to the expected output counts.
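The post doesn't say where the small differences come from. Two behaviours of `get_top_10_words` that could plausibly contribute (an assumption, not verified against the expected output) are that words are counted case-sensitively, so "Love" and "love" are tallied separately, and that `Counter.most_common` orders words with equal counts by first appearance, so ties around rank 10 depend on the order of the lyric lines. The hypothetical `top_words` helper below is a minimal sketch that isolates both effects using the same cleaning regex.

```python
import re
from collections import Counter


def top_words(text, stopwords, n=10, lowercase=False):
    """Hypothetical helper: the n most common non-stopword words in text."""
    # Same cleaning as the workflow: keep letters, digits, whitespace and apostrophes
    text = re.sub(r"[^a-zA-Z0-9\s']", "", text)
    words = text.split()
    if lowercase:
        # Fold case so "Love" and "love" are counted as one word
        words = [w.lower() for w in words]
    stop = {s.lower() for s in stopwords}
    kept = [w for w in words if w.lower() not in stop]
    # most_common orders equal counts by first appearance in the input
    return Counter(kept).most_common(n)


print(top_words("Love love the story, Love story", ["the"], n=2))
# [('Love', 2), ('story', 2)]
print(top_words("Love love the story, Love story", ["the"], n=2, lowercase=True))
# [('love', 3), ('story', 2)]
print(top_words("b a b a c", [], n=1), top_words("a b a b c", [], n=1))
# [('b', 2)] [('a', 2)]
```

The last line shows that when two words tie, whichever appears first in the concatenated lyrics wins the slot, so a different line order in the source data could change which word lands at position 10.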

On a whim, I also tried training an RNN (not attached) to generate new T-Swift songs, but after 30 epochs it was overfitting, and reducing the number of epochs produced incoherent lyrics. At roughly 33k words, there wasn't enough data to satisfy the network. We'll have to wait for more Taylor albums! 🙂
