Challenge #159: April ENcyptanalytics

16 - Nebula

05-17-2019

12:25 PM

Spoiler

Why do small things that cause a lot of grief like this always happen to me? I built out my whole workflow and ran it, only to find that it failed when passing through the macro. So I redid basically everything, only to realize that the sole reason it was failing was that my own Text Input had a field called Field1 when the macro was expecting a field called data. *facepalm*

jamielaird

14 - Magnetar

06-23-2019

09:16 AM

Here's my solution

Spoiler

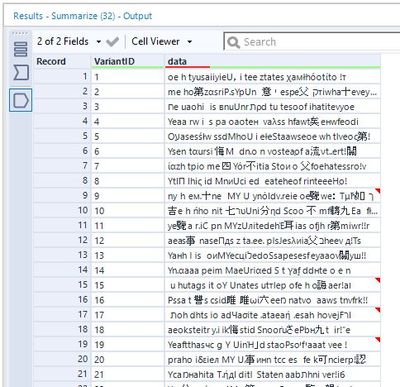

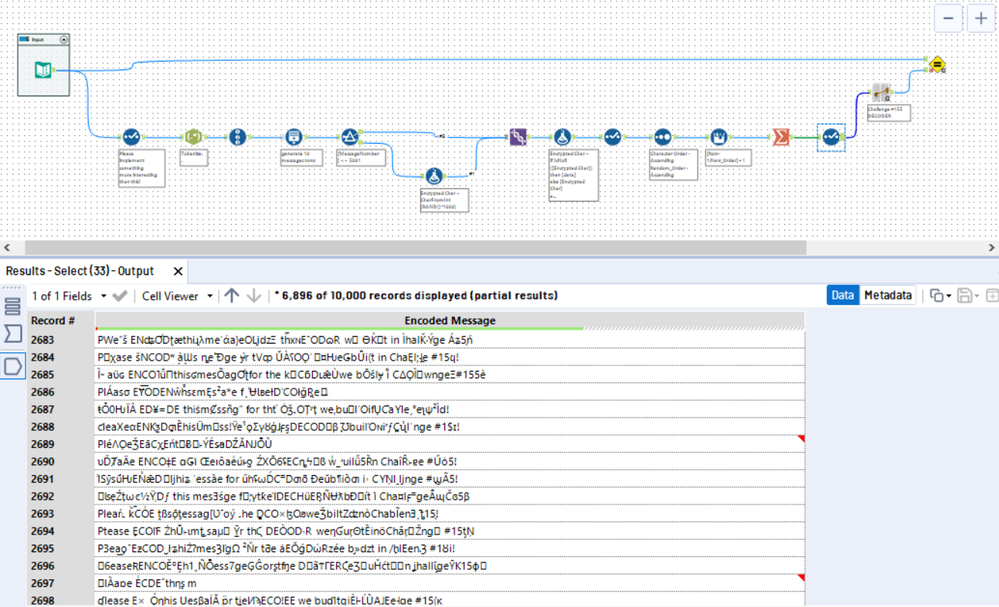

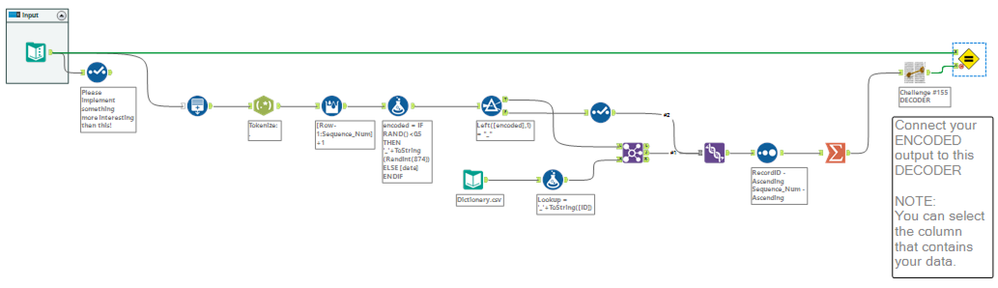

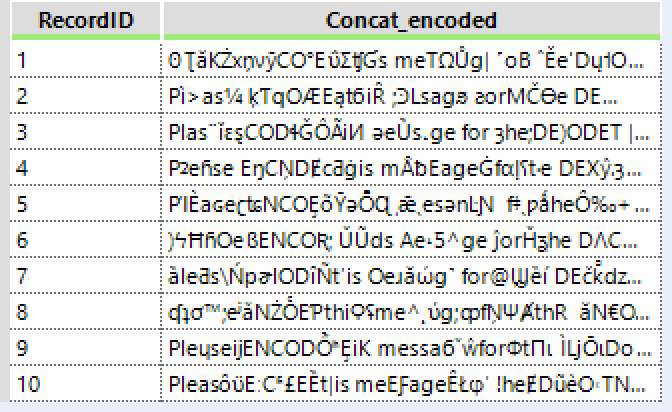

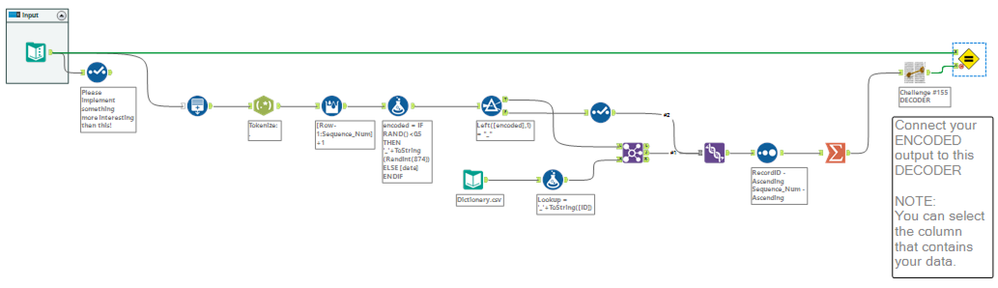

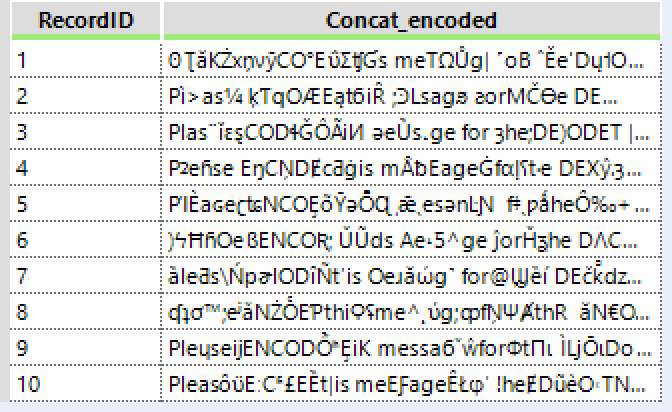

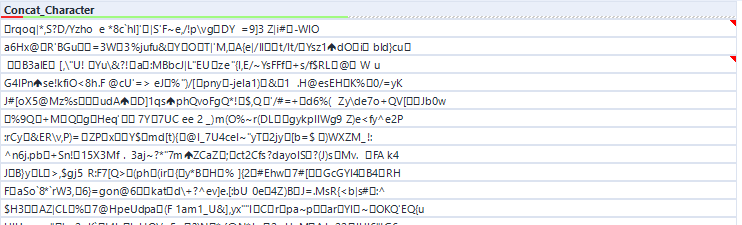

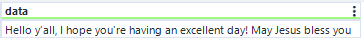

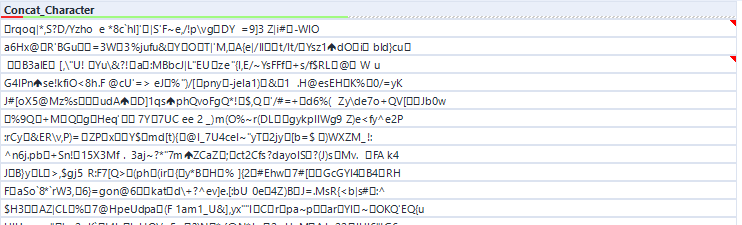

I created a dictionary of ~800 UTF-8 characters and performed a random character substitution for half of the letters in the string. With 10 differently encoded versions of the original text it can be cracked easily using the decoder.
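For anyone reading along without Designer, the idea above can be sketched in a few lines of Python. This is only an illustration under assumptions: `POOL` is a made-up stand-in for the ~800-character dictionary (the post does not list the real characters), and `decode` assumes the decoder works by taking the most common character at each position, which the post does not confirm.

```python
import random
from collections import Counter

# Hypothetical stand-in for the ~800-character dictionary: a contiguous
# block of non-ASCII code points, so substitutes never match ASCII text.
POOL = [chr(cp) for cp in range(0x0100, 0x0100 + 800)]


def encode_once(message, rng, fraction=0.5):
    """Replace a random `fraction` of positions with random pool characters."""
    chars = list(message)
    n_swap = int(len(chars) * fraction)
    for i in rng.sample(range(len(chars)), n_swap):
        chars[i] = rng.choice(POOL)
    return "".join(chars)


def decode(versions):
    """Assumed decoder: keep the most common character at each position."""
    return "".join(Counter(col).most_common(1)[0][0] for col in zip(*versions))


rng = random.Random(159)  # seeded only so the sketch is repeatable
message = "Weekly Challenge 159"
versions = [encode_once(message, rng) for _ in range(10)]
recovered = decode(versions)
```

With 10 versions the original character usually wins the vote at every position, but that is not guaranteed: a position that survives in only one version (or none) can come out wrong.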

LordNeilLord

15 - Aurora

07-14-2019

04:06 AM

13

RWvanLeeuwen

11 - Bolide

08-13-2019

08:02 AM

17 - Castor

09-01-2019

12:17 PM

Very different kind of challenge - thank you @TerryT

Spoiler

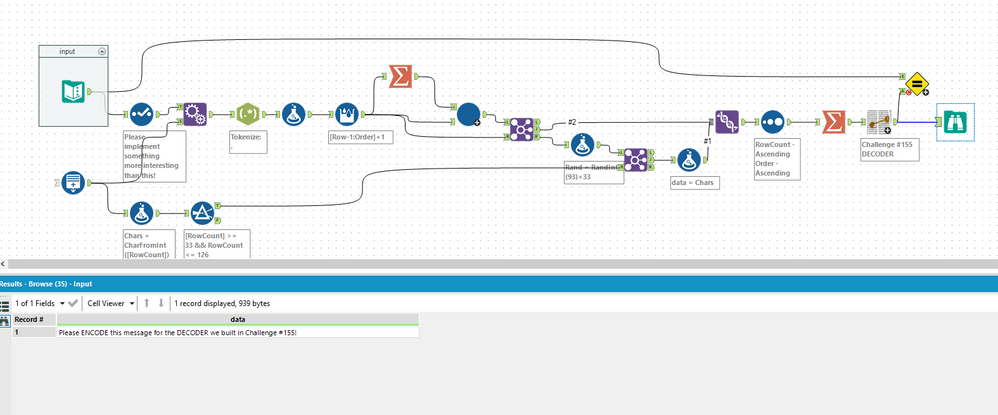

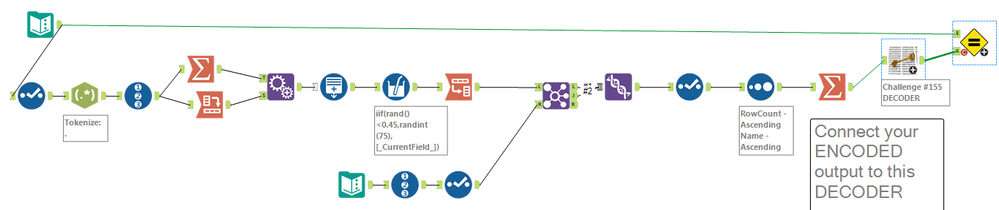

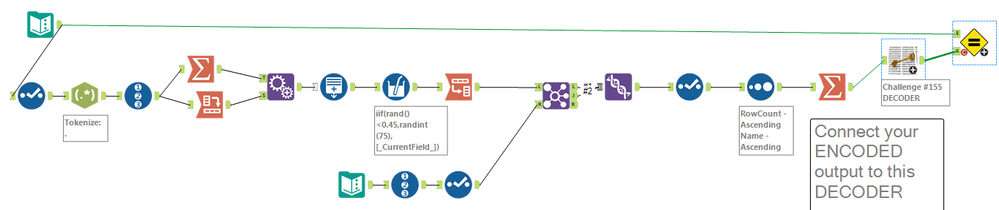

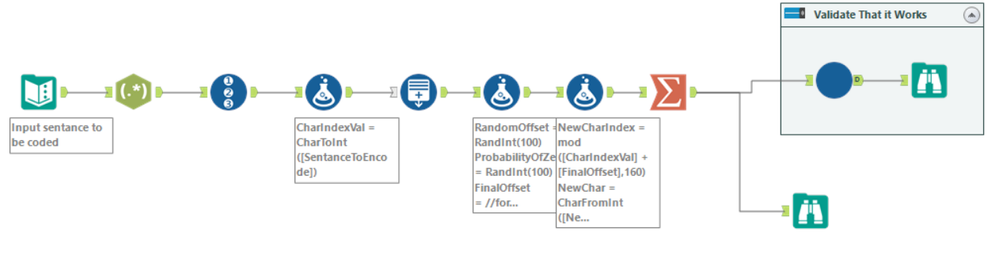

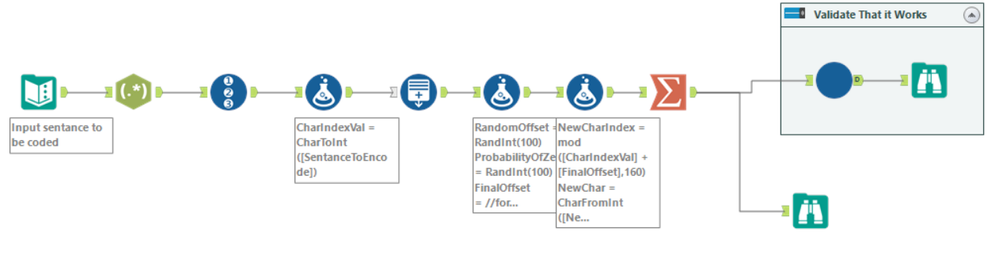

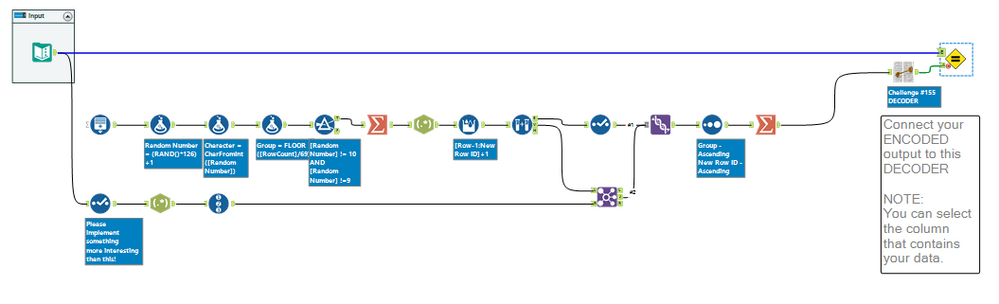

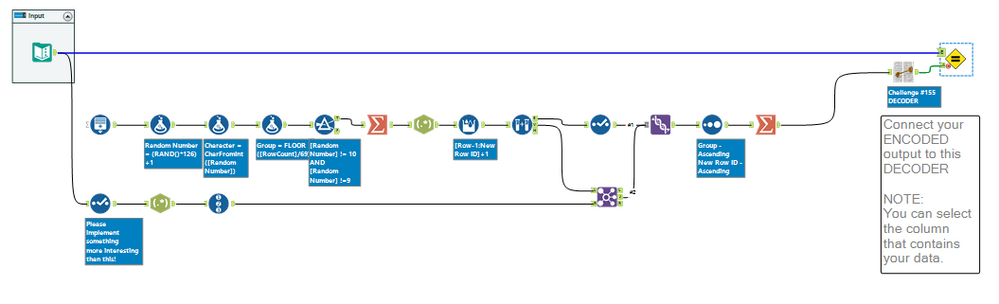

The solution was to split the text into characters, add a random offset to each character ID (but set the offset to 0 for 1 in 10 of them), then use a mod function to bring the result back into the 1-160 range.

Added the 155 challenge decoder as a macro to check this.
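The same steps can be tried outside Designer with a rough Python sketch. Everything named here is an assumption for illustration: `ALPHABET` is a made-up 160-character table (the real table from the challenge is not shown in this post), and drawing the non-zero offset from 1 to 159 is my own choice so that a shifted character always changes.

```python
import random

# Hypothetical 160-character table (IDs 1-160); the real table from the
# challenge is not shown in this post.
ALPHABET = [chr(cp) for cp in range(32, 127)] + [chr(cp) for cp in range(161, 226)]
CHAR_TO_ID = {ch: i for i, ch in enumerate(ALPHABET, start=1)}
SIZE = len(ALPHABET)  # 160


def wrap_id(char_id, offset, size=SIZE):
    """Add the offset and wrap the result back into the 1..size range."""
    return (char_id - 1 + offset) % size + 1


def encode_row(message, rng, keep_rate=0.1):
    """Shift every character by a random offset, except about 1 in 10."""
    out = []
    for ch in message:
        # Offset 0 keeps the original character; 1..159 always changes it.
        offset = 0 if rng.random() < keep_rate else rng.randrange(1, SIZE)
        out.append(ALPHABET[wrap_id(CHAR_TO_ID[ch], offset) - 1])
    return "".join(out)


rng = random.Random(159)  # seeded only so the sketch is repeatable
rows = [encode_row("April ENcyptanalytics", rng) for _ in range(5)]
```

The `- 1` and `+ 1` around the modulo are there because the IDs start at 1 rather than 0; a plain `% 160` would map ID 160 to 0 and fall outside the range.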

TimHuff

7 - Meteor

09-09-2019

10:06 AM

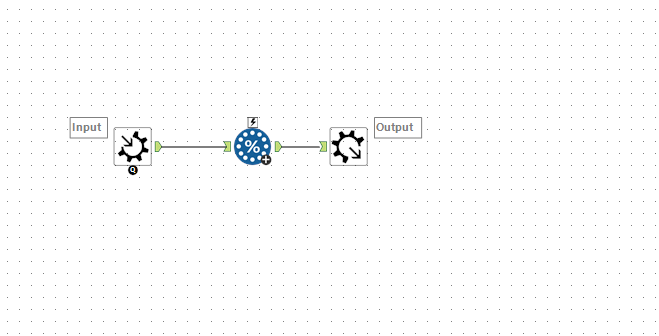

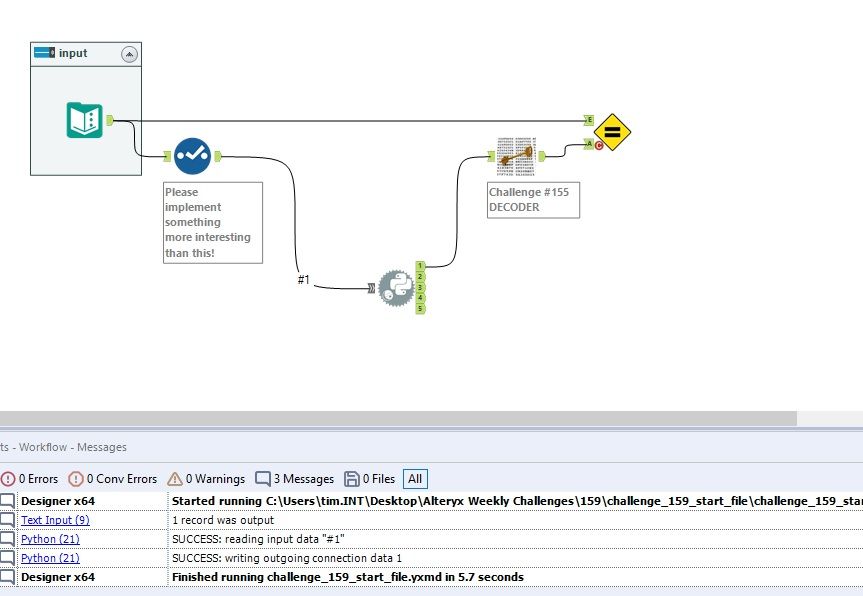

All the special sauce is in the Python tool.

TimothyManning

8 - Asteroid

09-17-2019

03:12 AM

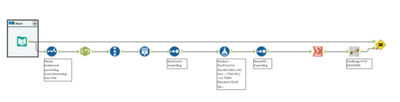

Spoiler

Cool Challenge!

TonyA

Alteryx Alumni (Retired)

09-28-2019

06:13 PM

This one was a lot of fun.

Spoiler

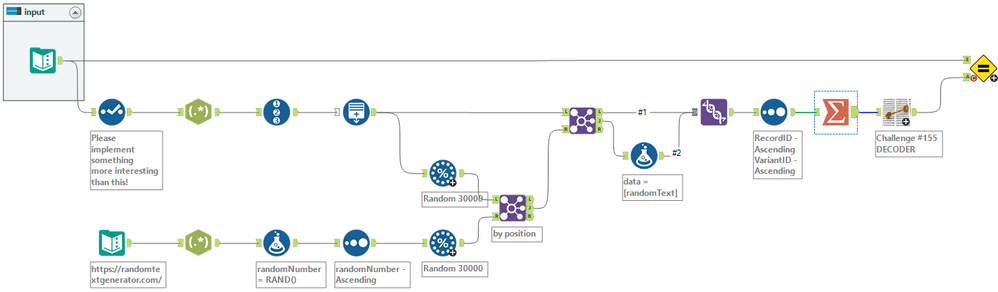

I assumed that the number of rows would be equal to the number of characters in the message. I played a bit with the sampling rate (the percentage of values held at their original value) just to see when the encoding would start to break. For this 70-character example, I started getting random errors at less than 25% sampling. I'm sure this would change based on the number of rows of encoding.
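A sampling-rate experiment like this can be approximated in Python. This is a sketch under stated assumptions, not the workflow itself: it works on character IDs in a 1-160 range, draws a uniform random shift for every value that is not held, and decodes by per-position majority vote. None of those details come from this post, so the rate at which errors appear need not match the 25% seen in Designer.

```python
import random
from collections import Counter

SIZE = 160  # assumed size of the character-ID range


def encode_rows(ids, n_rows, keep_rate, rng):
    """Each row holds an ID with probability keep_rate, else shifts it."""
    rows = []
    for _ in range(n_rows):
        row = []
        for char_id in ids:
            offset = 0 if rng.random() < keep_rate else rng.randrange(1, SIZE)
            row.append((char_id - 1 + offset) % SIZE + 1)
        rows.append(row)
    return rows


def count_errors(ids, rows):
    """Decode by per-position majority vote and count wrong positions."""
    decoded = [Counter(col).most_common(1)[0][0] for col in zip(*rows)]
    return sum(d != o for d, o in zip(decoded, ids))


rng = random.Random(0)  # seeded only so the sketch is repeatable
ids = [rng.randint(1, SIZE) for _ in range(70)]  # stand-in 70-character message
for keep_rate in (0.5, 0.25, 0.1, 0.05):
    rows = encode_rows(ids, n_rows=len(ids), keep_rate=keep_rate, rng=rng)
    print(keep_rate, count_errors(ids, rows))
```

Changing `n_rows` independently of the message length is an easy way to check how the breaking point moves with the number of encoded rows.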

Karam

8 - Asteroid

10-10-2019

09:44 AM

nivi_s

8 - Asteroid

10-12-2019

03:03 PM
