Weekly Challenges

Challenge #145: SANTALYTICS 2018 - Part 1

@mbarone @mattagone @Pepper YAY ROCHESTER ALTERYX USER GROUP! Thanks for providing a solution!

Great challenge!

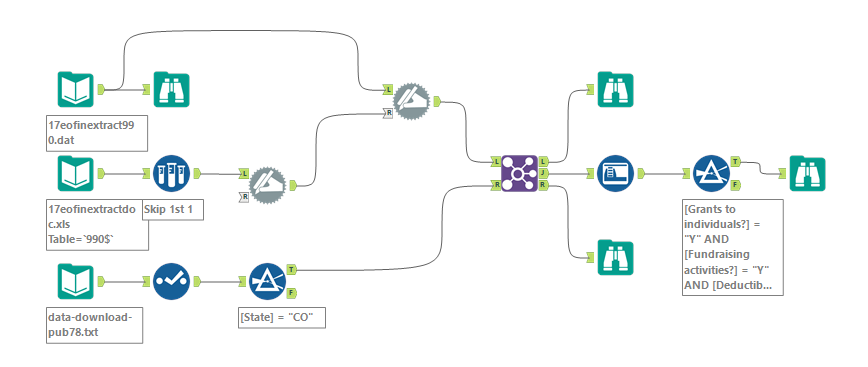

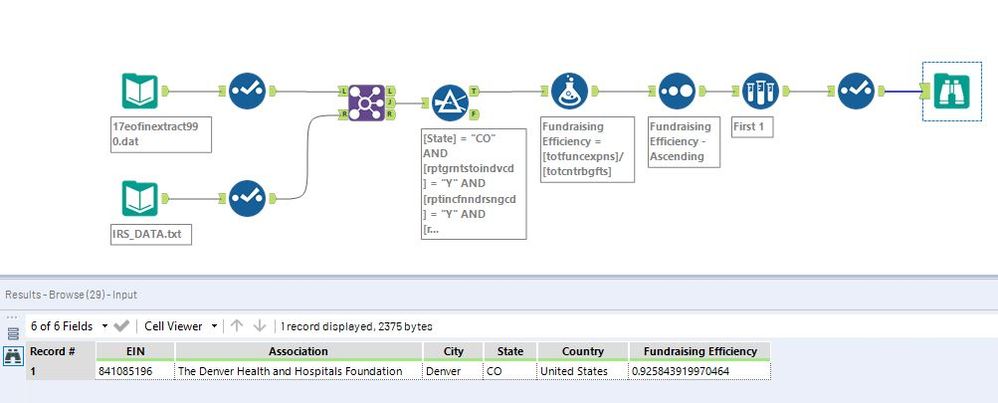

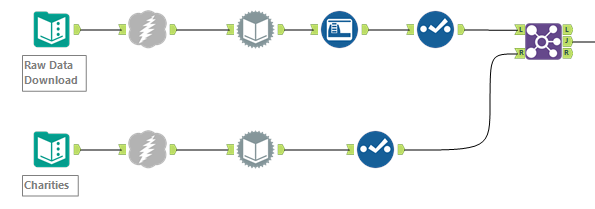

Here is my solution. Fun one! I have been looking for a charity in my home state to take advantage of tax credits, so this is also very timely.

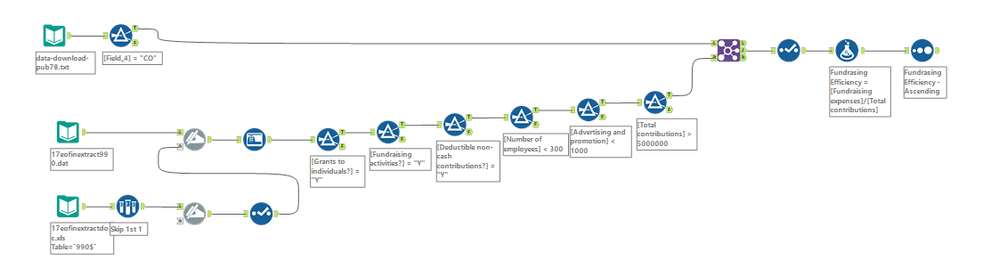

I definitely did more work than I needed to, but I started by pulling in all the data first and then figured out what I needed.

Way too many tools with dead ends

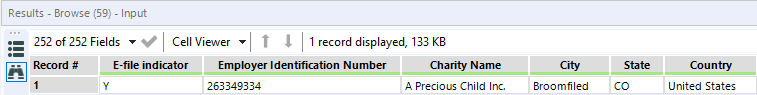

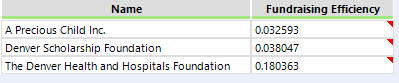

Came up with A Precious Child Inc.

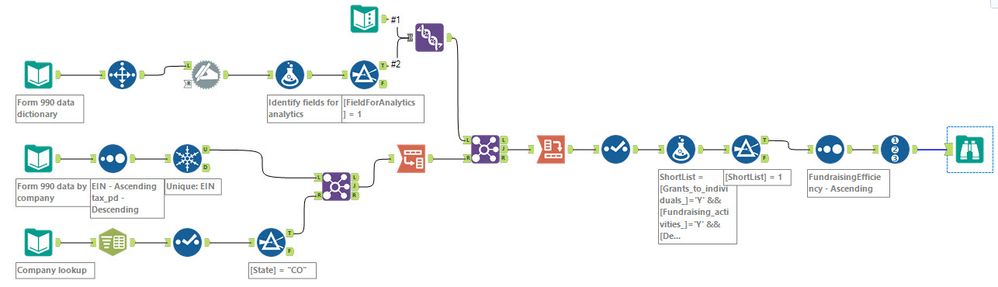

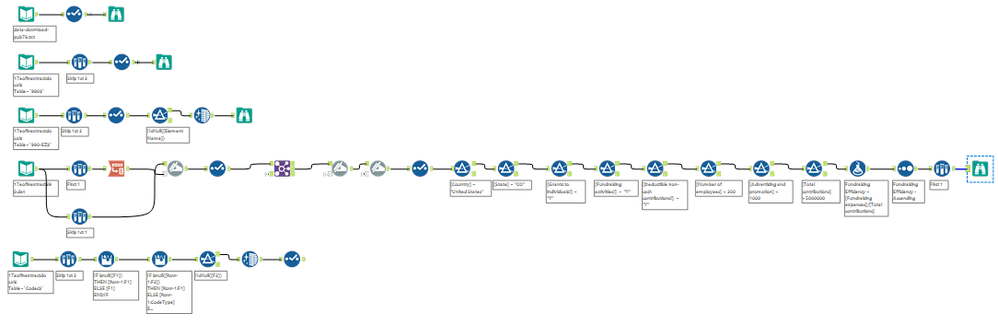

I did the same as most, but went for separate filters.

Two reasons:

- You can see from each annotation what that filter step is doing, so you don't have to look into the expression.

- It's more efficient with the data.

That's interesting @JoeS - I'd always assumed a single filter would be more efficient. Just out of interest, why do multiple filters make it more efficient?

Andy

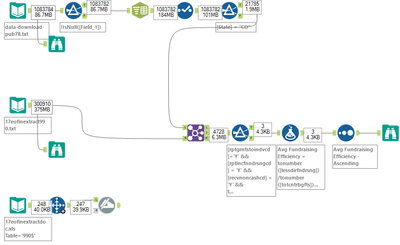

@andyuttley I believe it's the way that the expression is compiled in the Alteryx Engine.

If you have them all in one filter, each record is checked against each condition. So in this example all 300K records are checked against all 6 conditions in one filter.

If you have them in separate filters, records are removed from the process in a waterfall fashion.

It's most efficient to have your biggest remover (I made up that phrase; it means the condition that most records fail) as the first filter and then continue with that approach, so there are fewer records for each later condition to be checked against.
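To make the waterfall idea concrete, here is a minimal Python sketch that simply counts condition checks. It assumes, as described above, that a single combined filter tests every record against every condition; whether the Alteryx Engine really works that way is not confirmed here. The records and the three conditions are made-up placeholders, not the challenge data.

```python
from typing import Callable, List, Sequence, Tuple

Condition = Callable[[int], bool]


def single_filter(records: Sequence[int], conditions: Sequence[Condition]) -> Tuple[int, List[int]]:
    """One combined filter, assuming every condition is evaluated for every record."""
    # The inner list forces all conditions to run (no short-circuiting).
    kept = [r for r in records if all([cond(r) for cond in conditions])]
    return len(records) * len(conditions), kept


def waterfall(records: Sequence[int], conditions: Sequence[Condition]) -> Tuple[int, List[int]]:
    """Separate filters in sequence: each one only sees what survived the previous one."""
    checks = 0
    remaining = list(records)
    for cond in conditions:
        checks += len(remaining)  # one check per record still in the stream
        remaining = [r for r in remaining if cond(r)]
    return checks, remaining


# Hypothetical data: 1,000 integer "records" and three illustrative conditions.
records = range(1000)
is_even = lambda r: r % 2 == 0           # half of the records fail
is_multiple_of_10 = lambda r: r % 10 == 0  # 90% of the records fail: the "biggest remover"
is_small = lambda r: r < 500             # half of the records fail

single_checks, single_kept = single_filter(records, [is_even, is_multiple_of_10, is_small])
posted_checks, posted_kept = waterfall(records, [is_even, is_multiple_of_10, is_small])
best_checks, best_kept = waterfall(records, [is_multiple_of_10, is_small, is_even])

print(single_checks, posted_checks, best_checks)
```

All three variants keep the same records; only the number of checks differs, and putting the biggest remover first gives the lowest count in this toy example.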

It's not really going to make a difference on only 300k records.

I just did some testing: 22.5 seconds on average for one filter and 21 seconds for 6 filters (in the order shown in the post, which is not the most efficient).

That is quite considerable actually, as it's the Auto Field that takes most of the time in my workflow (performance profiling says 18.5 seconds).

Although, what is weird is that if you add up the individual times of the performance profiling, you get 145ms for 6 filters vs 117ms for 1 filter.

My guess here is that, as the timings are so small, the overhead that performance profiling adds to each tool slows it down, because the workflow consistently runs quicker with 6 filters when performance profiling is off.

I found this out on processes years back with hundreds of millions of records. So we are talking really small margins with 300k records.
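To put the timings quoted above in proportion, here is a rough back-of-the-envelope calculation in Python. It assumes the Auto Field tool costs the same 18.5 seconds in both runs, which is only an approximation because that figure came from a run with performance profiling switched on.

```python
# Figures quoted in the post (seconds).
one_filter_s = 22.5
six_filters_s = 21.0
auto_field_s = 18.5  # from performance profiling; assumed identical in both runs

# Saving measured against the whole workflow.
saving_s = one_filter_s - six_filters_s
saving_pct = saving_s / one_filter_s * 100

# Saving measured against everything except the Auto Field tool.
rest_one_s = one_filter_s - auto_field_s
rest_six_s = six_filters_s - auto_field_s
rest_saving_pct = (rest_one_s - rest_six_s) / rest_one_s * 100

print(f"Whole workflow: {saving_s:.1f} s saved ({saving_pct:.1f}%)")
print(f"Excluding Auto Field: {rest_one_s:.1f} s vs {rest_six_s:.1f} s ({rest_saving_pct:.1f}% less)")
```

Under that assumption the saving is small against the full run but much larger against the part of the workflow that the filters can actually influence.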

Wooooooooooooooooooooooooooooo!!! Santalytics!!

Anyway, mine was probably similar to the others.

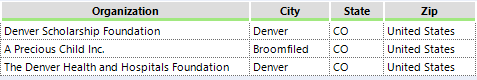

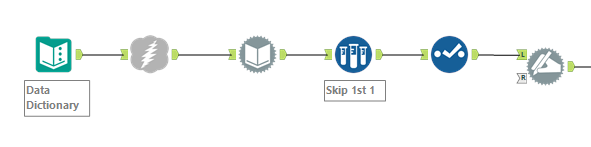

I then downloaded the Data Dictionary using the same method and dynamically renamed the columns from the first row of data:

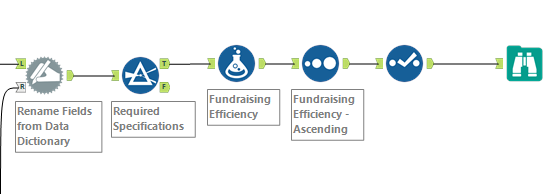
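For anyone reading along outside Alteryx, taking the column names from the first row of data can be sketched in plain Python roughly like this. The function name and the sample rows are hypothetical placeholders, not the actual Data Dictionary contents.

```python
from typing import Dict, List, Sequence, Tuple


def promote_first_row(rows: Sequence[Sequence[str]]) -> Tuple[List[str], List[Dict[str, str]]]:
    """Use the first row as column names and return the remaining rows as records."""
    if not rows:
        return [], []
    header, *data = rows
    names = [str(cell).strip() for cell in header]  # tidy stray whitespace in the names
    return names, [dict(zip(names, row)) for row in data]


# Hypothetical download: the real headers arrive as the first row of data.
raw_rows = [
    ["code", "description"],
    ["F1", "Example field one"],
    ["F2", "Example field two"],
]

names, records = promote_first_row(raw_rows)
print(names)
print(records[0])
```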

Then, I simply used a dynamic rename tool to take the names from the dictionary description, applied the appropriate filters and calculated the Efficiency, sorting accordingly:

And here are the results:

* "Patiently" awaits the next instalment
