
Challenge #131: Think Like a CSE... The R Error Message: cannot allocate vector of size...


22 - Nova

04-04-2020

10:06 AM


hanykowska

11 - Bolide

07-23-2020

02:39 AM


I have successfully avoided this challenge for over a year, but today is the day I end it.

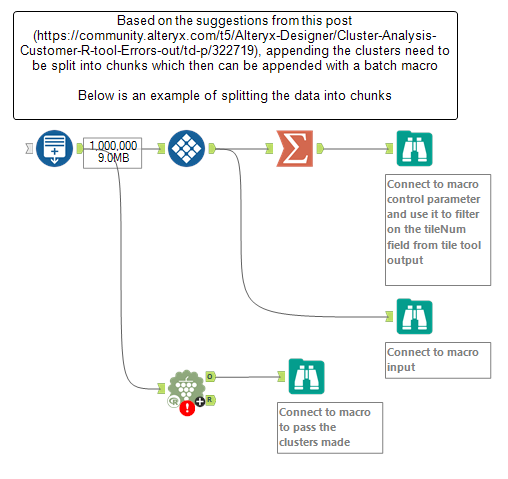

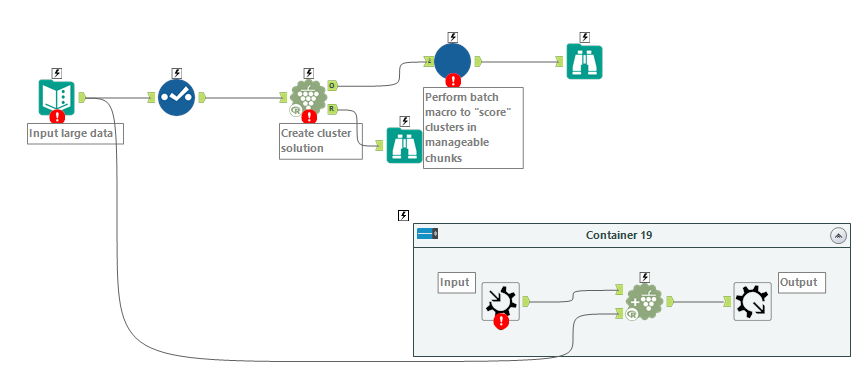

Spoiler

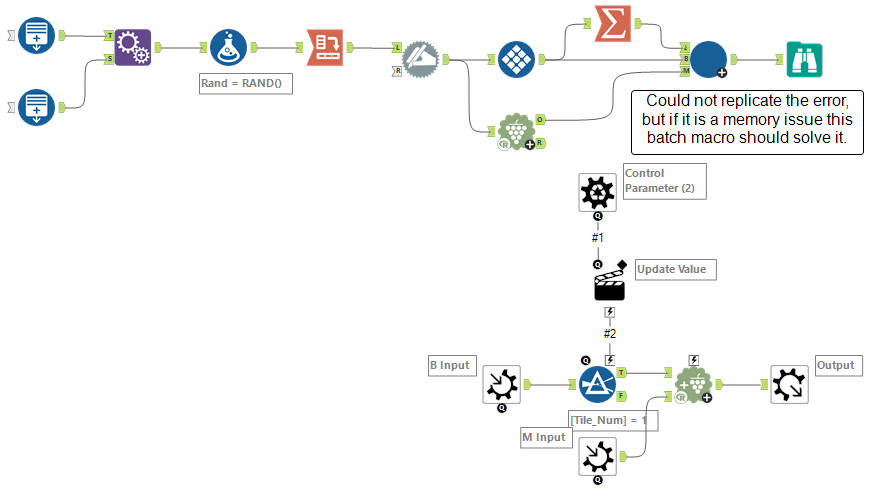

I tried to reproduce this error a number of times but sadly couldn't manage it. After several attempts I checked the comments to see whether anyone else had managed, and in the process I saw some of the responses... So I waited a while to try to forget what I saw, but couldn't. Today I decided to look through the Community for resources I could base my solution on, and I found one! It wasn't exactly the same issue, but the memory allocation problem was present (https://community.alteryx.com/t5/Alteryx-Designer/Cluster-Analysis-Customer-R-tool-Errors-out/td-p/3...), so I decided to suggest splitting the data into chunks for appending the clusters and mocked up a short example below (I didn't bother to make an actual batch macro, though...)

JennyMartin

9 - Comet

08-26-2020

05:32 AM


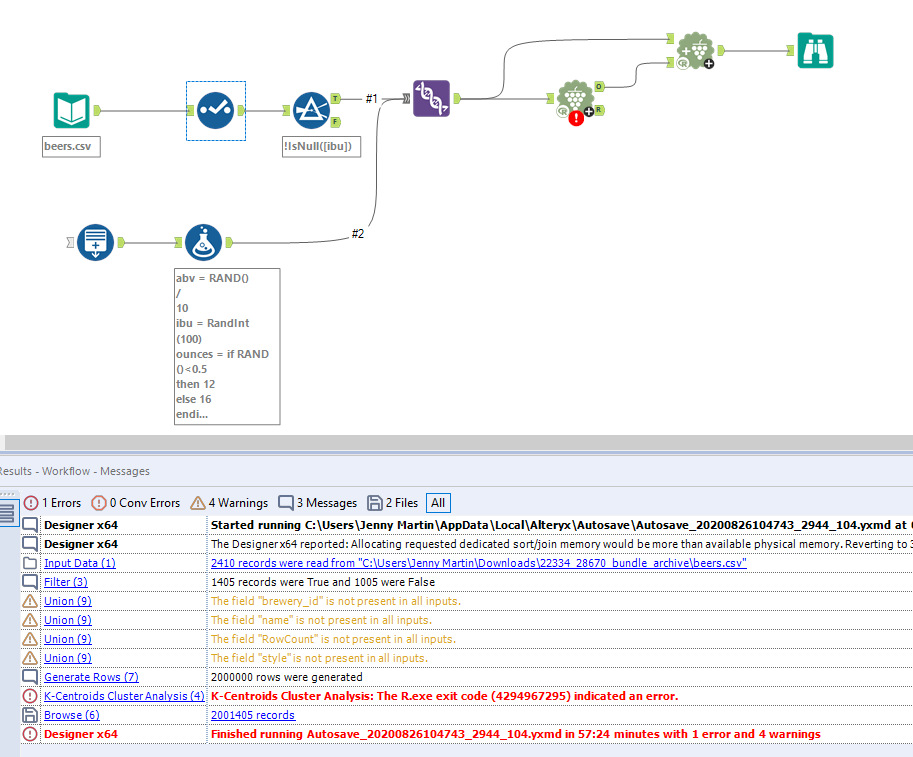

Spoiler

The memory issue would be solved by breaking the data into smaller chunks (as I found out by making the dataset incrementally larger).

Spent hours trying to recreate the exact error but figured this was close enough!

JethroChen

10 - Fireball

09-15-2020

06:40 AM


AngelosPachis

16 - Nebula

09-16-2020

11:20 AM


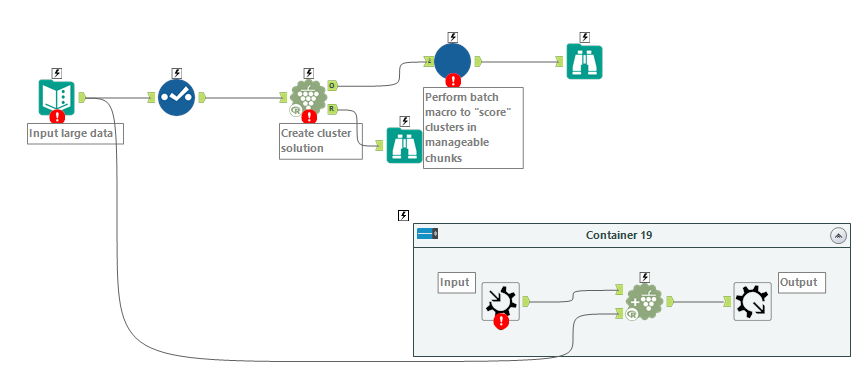

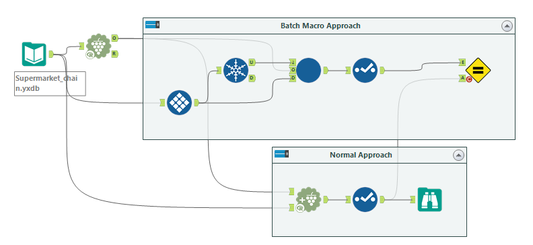

My solution for challenge #131.

Workflow

Spoiler

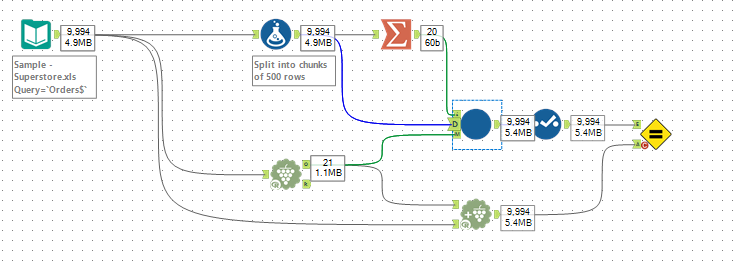

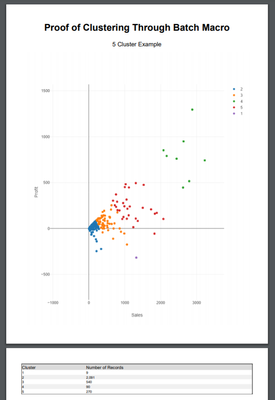

I didn't spend time creating a 7000 GB dataset, but I built a workflow as a proof of concept.

Using the Tableau Superstore dataset, I broke the data into chunks of 500 rows and fed them into a macro containing the Append Cluster tool. Then, to validate that my macro works as expected, I simply compared its output to that of an Append Cluster tool run on the full dataset.
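For readers outside Designer, the same proof of concept can be sketched in plain Python. This is only an illustration, assuming the append step assigns each record to its nearest cluster centre independently of every other record; the centroids, the data, and all function names here are made up for the example and are not taken from the workflow above.

```python
from math import dist


def nearest_cluster(point, centroids):
    """Return the index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: dist(point, centroids[i]))


def append_clusters(rows, centroids):
    """Assign every row to its nearest centroid in a single pass."""
    return [nearest_cluster(row, centroids) for row in rows]


def append_clusters_in_chunks(rows, centroids, chunk_size=500):
    """Same assignment, but processed one chunk at a time, like a batch macro."""
    labels = []
    for start in range(0, len(rows), chunk_size):
        chunk = rows[start:start + chunk_size]
        labels.extend(append_clusters(chunk, centroids))
    return labels


# Hypothetical centroids and data, invented purely for illustration.
centroids = [(0.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
rows = [(float(i % 13), float((i * 7) % 11)) for i in range(1234)]

full = append_clusters(rows, centroids)
chunked = append_clusters_in_chunks(rows, centroids, chunk_size=500)

# Validation step: the chunked result should match the all-at-once result.
assert full == chunked
```

Because each record's assignment depends only on that record and the fixed centroids, the chunk size changes how much data is held at once but not the labels.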

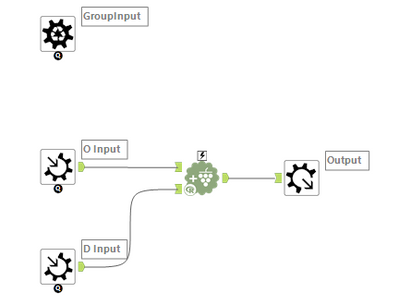

Macro

15 - Aurora

09-16-2020

12:29 PM


johnemery

11 - Bolide

10-15-2020

01:14 PM


Spoiler

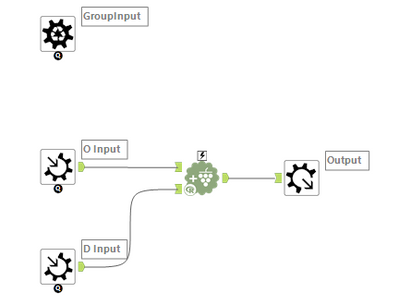

The error shown occurred on the Append Cluster tool. This tells us that the cluster tool itself was able to handle the size of the data.

Searching for the error, we can quickly find that the error is memory-related. Turns out, ~7500 GB of memory is a bit more than we have available.

By placing the Append Cluster tool workflow in a batch macro, we can apply the clusters to the data set in chunks, thereby working around the memory limitations.
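To put the ~7500 GB figure in perspective, here is a rough back-of-the-envelope calculation. It assumes the allocation holds 8-byte doubles and that the reported size uses 1024-based units; the thread does not say what object R was trying to allocate, so this only illustrates the scale.

```python
from math import isqrt

BYTES_PER_DOUBLE = 8      # a double-precision value occupies 8 bytes
GIB = 1024 ** 3           # assumption: the reported size is in 1024-based units


def doubles_in(gigabytes):
    """Number of 8-byte doubles that fit in `gigabytes` (1024-based)."""
    return gigabytes * GIB // BYTES_PER_DOUBLE


n_values = doubles_in(7500)
side = isqrt(n_values)    # side length of the largest square matrix that fits

print(f"{n_values:,} doubles")
print(f"roughly a {side:,} x {side:,} square matrix")
```

Under those assumptions the request is on the order of a trillion values, which is why processing the records in smaller batches is the practical way out.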

16 - Nebula

11-28-2020

06:37 AM


Spoiler

As this is a memory problem and it happens when appending the clusters, not during the clustering itself, the user should split the data into small chunks and perform the append on each chunk. A batch macro should do the trick.

17 - Castor

11-28-2020

07:01 PM


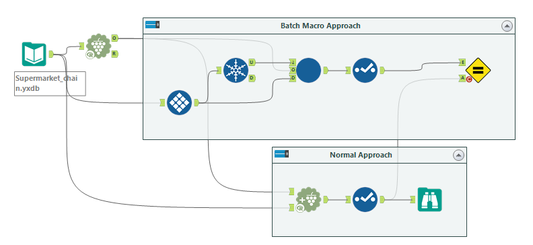

My solution.

Spoiler

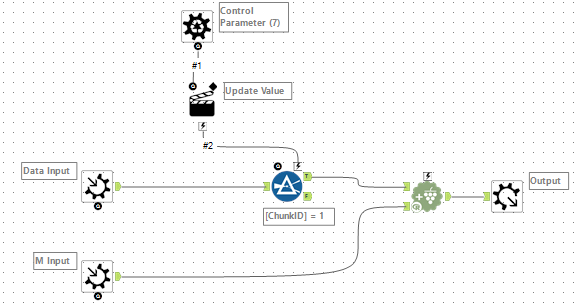

The cause of this problem is that Designer cannot allocate the memory because the data is too large.

But the modeling has already finished, so the solution is to turn the clustering part into a batch macro.

For this challenge, I made a workflow that compares the results of the normal workflow and the batch macro approach.

How many groups to split the data into depends on the original data, so the user has to investigate it.
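As a rough illustration of the grouping step, the sketch below gives each record a batch ID and counts the resulting groups. The `rows_per_batch` value is a hypothetical limit that the user would have to choose after looking at the data; it is not a figure from this thread.

```python
from math import ceil


def batch_ids(n_rows, rows_per_batch):
    """Give each record (by 0-based position) the ID of the batch it falls into."""
    return [i // rows_per_batch for i in range(n_rows)]


def batch_count(n_rows, rows_per_batch):
    """Number of batches needed to cover all rows."""
    return ceil(n_rows / rows_per_batch)


# Hypothetical sizes, for illustration only.
ids = batch_ids(1234, 500)
groups = batch_count(1234, 500)
print(groups, "groups; last record is in batch", ids[-1])
```

A field like this could then serve as the grouping field for a batch macro, so that each batch is processed separately.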

Workflow:

Batch Macro:

16 - Nebula

12-03-2020

12:57 PM

