Alteryx Server Discussions

Find answers, ask questions, and share expertise about Alteryx Server.

What is the maximum size for Exported Workflows?

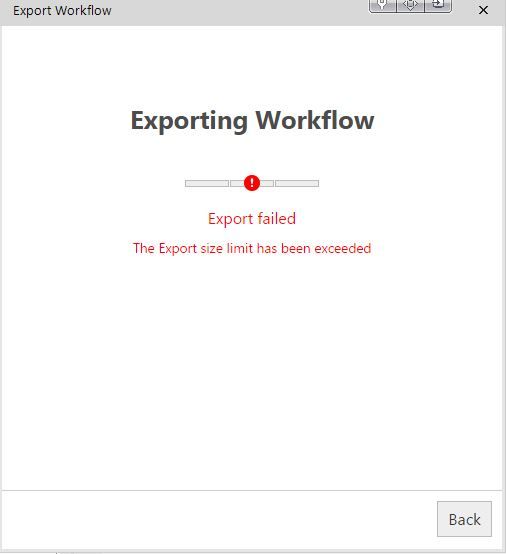

I recently got this error message because I left a data file in the workflow by mistake, but it made me wonder: what is the maximum size for an exported workflow? Can anyone answer this?

As I start chaining workflows, I want to make sure they can be packaged as an entire process if I need to.

Thanks,

Andre

- Labels: Error Message, Scheduler, Server

Hi @andrewdatakim,

Thanks for your question. There is a size limit, and it is tied to the ZIP program used by the Export Workflow function. A packaged workflow can contain up to 65,535 items, with a total archive size of up to 4.2 GB.

Thanks!
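
For anyone who wants to check a folder of assets before exporting, here is a minimal sketch of a pre-check against the two limits quoted above. It is not part of Alteryx. The function names are hypothetical, and the byte limit of 2**32 - 1 is an assumption that "4.2 GB" refers to the 32-bit size field of the classic (non-ZIP64) ZIP format. It also sums uncompressed sizes, so it only approximates the final archive size.

```python
from pathlib import Path

# Limits quoted in the reply above. MAX_BYTES is an assumption: it treats
# "4.2 GB" as the 32-bit size field of the classic (non-ZIP64) ZIP format.
MAX_ITEMS = 65_535
MAX_BYTES = 2**32 - 1


def fits_package_limits(file_sizes):
    """Return True if the item count and total size stay within both limits."""
    sizes = list(file_sizes)
    return len(sizes) <= MAX_ITEMS and sum(sizes) <= MAX_BYTES


def sizes_under(folder):
    """Yield the size in bytes of every regular file below `folder`."""
    for path in Path(folder).rglob("*"):
        if path.is_file():
            yield path.stat().st_size
```

A call such as `fits_package_limits(sizes_under("my_workflow_folder"))` (the folder name is only a placeholder) returns `False` when either limit would be exceeded, which is a hint to move large data files out of the package.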

Hello,

I am looking for guidance on how to size an on-prem architecture when I have 200 workflows per day.

Sizing guidance is always given by number of users. However, I have 5 users who need to execute 200 workflows per day.

Is there any documentation or are there any tips for sizing Alteryx Server by workload?

Thanks

Asma

Not sure, but maybe Alteryx can confirm: when exporting, the package may include metadata. Some flows get bloated with metadata and can get significantly larger for this reason: 100 MB with metadata vs. 5 or 6 KB for the actual flow "code". Try doing a select all, delete, then paste back and save, to see how big your flow is after vs. before. If it is notably smaller, you had a lot of metadata generated by test runs, and it is likely small enough to export at that point. The same issue can cause sluggish editing of a flow, as it works through all the metadata built up with each run.

I wish there was an easy purge function, but there is none as far as I know, other than the select all/delete/paste back trick.

I have not seen anyone produce a flow that is actually more than a few MB once the metadata is "removed".

To asma_rez and anyone considering sizing:

A more useful utilization measure is concurrent flows rather than flows per day. The number of users doesn't really matter, and 200 flows a day is nothing if spread over 24 hours. You also need to quantify the complexity of the flows. I have flows that run for hours doing time-consuming but not memory-intensive work, other flows that do memory-intensive work but run in maybe 20 minutes, and others that are both CPU- and memory-intensive doing data modeling, which are major performance hogs.

So it depends heavily on the type of flows, their frequency, and their concurrency. The server can handle more or less depending on how much RAM and disk and how many CPUs it has.
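
One rough way to turn "flows per day" into the concurrency figure discussed above is Little's law (average concurrency = arrival rate × average run time). The sketch below is only an illustration: the 20-minute average run time and the 8-hour window are assumed numbers, not measurements, and real schedules are rarely spread evenly.

```python
def average_concurrency(flows_per_day, avg_runtime_minutes, window_hours=24.0):
    """Estimate mean concurrent flows via Little's law: L = arrival rate * run time."""
    arrivals_per_minute = flows_per_day / (window_hours * 60.0)
    return arrivals_per_minute * avg_runtime_minutes


# Assumed inputs: 200 flows per day, 20 minutes per flow on average.
spread_out = average_concurrency(200, avg_runtime_minutes=20)
business_hours = average_concurrency(200, avg_runtime_minutes=20, window_hours=8)

print(f"spread over 24 h: {spread_out:.1f} concurrent flows on average")
print(f"packed into 8 h:  {business_hours:.1f} concurrent flows on average")
```

This gives an average only. Peaks (for example, many schedules firing at the top of the hour) will be higher, which is why profiling the real load still matters.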

I have emulated server functionality with great success using a standalone Designer with the API/Scheduler feature added, and I have also used the full Server product; 200 flows a day would generally be light work for both. Data modeling is normally run manually on a single system, so the big question is concurrency. Normally a system running 10 to 15 flows at a time (concurrently) is fine before things stack up. This is empirical, based on our config of 24 GB RAM, 4 CPUs, and a 400 GB dedicated hard drive. Be aware that each flow can generate 10 to 20 GB of temp files while running, and the default is to use local drive space, although you can override this to use a NAS. So 10 flows that use a lot of temp space, each generating 20 GB of temp files, need 200 GB of drive space over and above any system overhead. IT often sets up a VM or dedicated system with minimal drive space, which causes issues people often don't recognize.
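
The temp-space arithmetic above can be written as a small check. This is a sketch only: the function names are hypothetical, and the 60 GB of system overhead and the 250 GB VM drive are assumed values for illustration; the 10 flows, 20 GB per flow, and 400 GB drive come from the figures in this post.

```python
def temp_space_needed_gb(concurrent_flows, temp_gb_per_flow):
    """Worst-case temp space if every concurrent flow peaks at the same time."""
    return concurrent_flows * temp_gb_per_flow


def fits_on_drive(concurrent_flows, temp_gb_per_flow, drive_gb, overhead_gb):
    """Return True if peak temp files plus system overhead fit on the drive."""
    needed = temp_space_needed_gb(concurrent_flows, temp_gb_per_flow) + overhead_gb
    return needed <= drive_gb


# 10 concurrent flows at 20 GB each, as in the post.
print(temp_space_needed_gb(10, 20))                           # 200
# Assumed 60 GB of OS and application overhead on the 400 GB drive.
print(fits_on_drive(10, 20, drive_gb=400, overhead_gb=60))    # True
# The same load on a hypothetical 250 GB VM drive does not fit.
print(fits_on_drive(10, 20, drive_gb=250, overhead_gb=60))    # False
```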

You should do some performance profiling of the flows you want to deploy; based on the peak requirements of the "stressed" flow load, you can then configure your Microsoft Windows Server's memory, drive, and CPU. The Server product offers more features for distributing processing, but in my experience a single server running a standalone Designer with the Scheduler option can easily handle what you propose, so the Server product will handle it at least as well. Just remember that the Windows Server sizing is probably more important for performance than the Alteryx software.

If you grow a lot, you can add more servers, and Alteryx Server can handle setting them up as workers.

Hi @DanC,

I have a workflow with multiple input files in .yxdb format; the combined file size is almost 20 GB.

I am trying to package the workflow but am getting the same error mentioned above. Is there any way to handle this scenario?

Kindly help.

Thanks in advance.
